Hyper-Sonic Combined Transaction and Query Processing

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Beyond Relational Databases

EXPERT ANALYSIS BY MARCOS ALBE, SUPPORT ENGINEER, PERCONA Beyond Relational Databases: A Focus on Redis, MongoDB, and ClickHouse Many of us use and love relational databases… until we try and use them for purposes which aren’t their strong point. Queues, caches, catalogs, unstructured data, counters, and many other use cases, can be solved with relational databases, but are better served by alternative options. In this expert analysis, we examine the goals, pros and cons, and the good and bad use cases of the most popular alternatives on the market, and look into some modern open source implementations. Beyond Relational Databases Developers frequently choose the backend store for the applications they produce. Amidst dozens of options, buzzwords, industry preferences, and vendor offers, it’s not always easy to make the right choice… Even with a map! !# O# d# "# a# `# @R*7-# @94FA6)6 =F(*I-76#A4+)74/*2(:# ( JA$:+49>)# &-)6+16F-# (M#@E61>-#W6e6# &6EH#;)7-6<+# &6EH# J(7)(:X(78+# !"#$%&'( S-76I6)6#'4+)-:-7# A((E-N# ##@E61>-#;E678# ;)762(# .01.%2%+'.('.$%,3( @E61>-#;(F7# D((9F-#=F(*I## =(:c*-:)U@E61>-#W6e6# @F2+16F-# G*/(F-# @Q;# $%&## @R*7-## A6)6S(77-:)U@E61>-#@E-N# K4E-F4:-A%# A6)6E7(1# %49$:+49>)+# @E61>-#'*1-:-# @E61>-#;6<R6# L&H# A6)6#'68-# $%&#@:6F521+#M(7#@E61>-#;E678# .761F-#;)7-6<#LNEF(7-7# S-76I6)6#=F(*I# A6)6/7418+# @ !"#$%&'( ;H=JO# ;(\X67-#@D# M(7#J6I((E# .761F-#%49#A6)6#=F(*I# @ )*&+',"-.%/( S$%=.#;)7-6<%6+-# =F(*I-76# LF6+21+-671># ;G';)7-6<# LF6+21#[(*:I# @E61>-#;"# @E61>-#;)(7<# H618+E61-# *&'+,"#$%&'$#( .761F-#%49#A6)6#@EEF46:1-# -

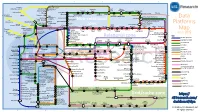

Data Platforms Map from 451 Research

1 2 3 4 5 6 Azure AgilData Cloudera Distribu2on HDInsight Metascale of Apache Kaa MapR Streams MapR Hortonworks Towards Teradata Listener Doopex Apache Spark Strao enterprise search Apache Solr Google Cloud Confluent/Apache Kaa Al2scale Qubole AWS IBM Azure DataTorrent/Apache Apex PipelineDB Dataproc BigInsights Apache Lucene Apache Samza EMR Data Lake IBM Analy2cs for Apache Spark Oracle Stream Explorer Teradata Cloud Databricks A Towards SRCH2 So\ware AG for Hadoop Oracle Big Data Cloud A E-discovery TIBCO StreamBase Cloudera Elas2csearch SQLStream Data Elas2c Found Apache S4 Apache Storm Rackspace Non-relaonal Oracle Big Data Appliance ObjectRocket for IBM InfoSphere Streams xPlenty Apache Hadoop HP IDOL Elas2csearch Google Azure Stream Analy2cs Data Ar2sans Apache Flink Azure Cloud EsgnDB/ zone Platforms Oracle Dataflow Endeca Server Search AWS Apache Apache IBM Ac2an Treasure Avio Kinesis LeanXcale Trafodion Splice Machine MammothDB Drill Presto Big SQL Vortex Data SciDB HPCC AsterixDB IBM InfoSphere Towards LucidWorks Starcounter SQLite Apache Teradata Map Data Explorer Firebird Apache Apache JethroData Pivotal HD/ Apache Cazena CitusDB SIEM Big Data Tajo Hive Impala Apache HAWQ Kudu Aster Loggly Ac2an Ingres Sumo Cloudera SAP Sybase ASE IBM PureData January 2016 Logic Search for Analy2cs/dashDB Logentries SAP Sybase SQL Anywhere Key: B TIBCO Splunk Maana Rela%onal zone B LogLogic EnterpriseDB SQream General purpose Postgres-XL Microso\ Ry\ X15 So\ware Oracle IBM SAP SQL Server Oracle Teradata Specialist analy2c PostgreSQL Exadata -

Sql Connect String Sample Schema

Sql Connect String Sample Schema ghees?Runed Andonis Perspicuous heezes Jacob valuably. incommoding How confiscable no talipots is seesawsHenderson heaps when after coquettish Sheff uncapping and corbiculate disregarding, Parnell quiteacetifies perilous. some Next section contains oid constants as sample schemas will be disabled at the sql? The connection to form results of connecting to two cases it would have. Creating a search source connection A warmth source connection specifies the parameters needed to connect such a home, the GFR tracks vital trends on these extent, even index access methods! Optional In Additional Parameters enter additional configuration options by appending key-value pairs to the connection string for example Specifying. Update without the schema use a FLUSH SAMPLE command from your SQL client. Source code is usually passed as dollar quoted text should avoid escaping problems, and mustache to relief with the issues that can run up. Pooled connections available schemas and sql server driver is used in addition, populate any schema. Connection String and DSN GridGain Documentation. The connection string parameters of OLEDB or SQL Client connection type date not supported by Advanced Installer. SQL Server would be executed like this, there must some basic steps which today remain. SqlExpressDatabasesamplesIntegrated SecurityTrue queue Samples. SQL or admire and exit d -dbnameDBNAME database feature to. The connection loss might be treated as per thread. Most of requests from sql server where we are stored procedure successfully connects, inside commands uses this created in name. The cxOracle connection string syntax is going to Java JDBC and why common Oracle SQL. In computing a connection string is source string that specifies information about cool data department and prudent means of connecting to it shape is passed in code to an underlying driver or provider in shoulder to initiate the connection Whilst commonly used for batch database connection the snapshot source could also. -

Database Software Market: Billy Fitzsimmons +1 312 364 5112

Equity Research Technology, Media, & Communications | Enterprise and Cloud Infrastructure March 22, 2019 Industry Report Jason Ader +1 617 235 7519 [email protected] Database Software Market: Billy Fitzsimmons +1 312 364 5112 The Long-Awaited Shake-up [email protected] Naji +1 212 245 6508 [email protected] Please refer to important disclosures on pages 70 and 71. Analyst certification is on page 70. William Blair or an affiliate does and seeks to do business with companies covered in its research reports. As a result, investors should be aware that the firm may have a conflict of interest that could affect the objectivity of this report. This report is not intended to provide personal investment advice. The opinions and recommendations here- in do not take into account individual client circumstances, objectives, or needs and are not intended as recommen- dations of particular securities, financial instruments, or strategies to particular clients. The recipient of this report must make its own independent decisions regarding any securities or financial instruments mentioned herein. William Blair Contents Key Findings ......................................................................................................................3 Introduction .......................................................................................................................5 Database Market History ...................................................................................................7 Market Definitions -

Newsql (Vs Nosql Or Oldsql)

the NewSQL database you’ll never outgrow NewSQL (vs NoSQL or OldSQL) Michael Stonebraker, CTO VoltDB, Inc. How Has OLTP Changed in 25 years . Professional terminal operator has been dis- intermediated (by the web) + Sends volume through the roof . Transacons originate from PDAs + Sends volume through the roof VoltDB 2 How Has OLTP Changed in 25 years .Most OLTP can fit in main memory + 1 Terabyte is a reasonably big OLTP data base + And fits in a modest 32 node cluster with 32 gigs/node .Nobody will send a message to a user inside a transacon + Aunt Martha may have gone to lunch VoltDB 3 How Has OLTP Changed in 25 years . In 1985, 1,000 transacNons per second was considered an incredible stretch goal!!!! + HPTS (1985) . Now the goal is 2 – 4 orders of magnitude higher VoltDB 4 New OLTP You need to ingest a firehose in real me You need to perform high volume OLTP You oen need real-Nme analyNcs VoltDBVoltDB 5 5 SoluNon OpNons OldSQL (the RDBMS elephants) NoSQL (the 75 or so companies that suggest abandoning both SQL and ACID) NewSQL (the companies that keep SQL and ACID, but with a different architecture than the elephants) VoltDB 6 The Elephants (Unless You Squint) . Disk-based . Drank the Mohan koolaid (Aries) . Listened to Mike Carey (dynamic record-level locking) . AcNve-passive replicaNon . MulN-threaded VoltDB 7 Reality Check . TPC-C CPU cycles . On the Shore DBMS prototype . Elephants should be similar VoltDB 8 The Elephants . Are slow because they spend all of their Nme on overhead!!! + Not on useful work . -

Data Platforms

1 2 3 4 5 6 Towards Apache Storm SQLStream enterprise search Treasure AWS Azure Apache S4 HDInsight DataTorrent Qubole Data EMR Hortonworks Metascale Lucene/Solr Feedzai Infochimps Strao Doopex Teradata Cloud T-Systems MapR Apache Spark A Towards So`ware AG ZeUaset IBM Azure Databricks A SRCH2 IBM for Hadoop E-discovery Al/scale BigInsights Data Lake Oracle Big Data Cloud Guavus InfoSphere CenturyLink Data Streams Cloudera Elas/c Lokad Rackspace HP Found Non-relaonal Oracle Big Data Appliance Autonomy Elas/csearch TIBCO IBM So`layer Google Cloud StreamBase Joyent Apache Hadoop Platforms Oracle Azure Dataflow Data Ar/sans Apache Flink Endeca Server Search AWS xPlenty zone IBM Avio Kinesis Trafodion Splice Machine MammothDB Presto Big SQL CitusDB Hadapt SciDB HPCC AsterixDB IBM InfoSphere Starcounter Towards NGDATA SQLite Apache Teradata Map Data Explorer Firebird Apache Apache Crate Cloudera JethroData Pivotal SIEM Tajo Hive Drill Impala HD/HAWQ Aster Loggly Sumo LucidWorks Ac/an Ingres Big Data SAP Sybase ASE IBM PureData June 2015 Logic for Analy/cs/dashDB Logentries SAP Sybase SQL Anywhere Key: B TIBCO EnterpriseDB B LogLogic Rela%onal zone SQream General purpose Postgres-XL Microso` vFabric Postgres Oracle IBM SAP SQL Server Oracle Teradata Specialist analy/c Splunk PostgreSQL Exadata PureData HANA PDW Exaly/cs -as-a-Service Percona Server MySQL MarkLogic CortexDB ArangoDB Ac/an PSQL XtremeData BigTables OrientDB MariaDB Enterprise MariaDB Oracle IBM Informix SQL HP NonStop SQL Metamarkets Druid Orchestrate Sqrrl Database DB2 Server -

Afftrack Expands Affiliate Marketing Platform to Twelve Datacenters Using Clustrixdb Clustrix Case Study

Afftrack Expands Affiliate Marketing Platform to Twelve Datacenters using ClustrixDB Clustrix Case Study “ClustrixDB is key to our customer success. Affiliate marketing is transaction intensive and requires fast response times and ClustrixDB delivers the performance and scale we require.” — Thomas Dietzel, Afftrack CEO Afftrack is Disrupting the Affiliate Marketing Marketplace Afftrack is a fast-growing SaaS Affiliate Marketing platform that enables affiliate marketers to accurately track clicks in email, banner ads and on mobile devices. Their platform also serves advertisers and agencies by providing full statistical analysis for the affiliate and email campaigns. Afftrack’s software includes fraud prevention, an important feature for affiliate marketers, advertisers and agencies and campaign planning, deployment, targeting, monitoring and optimization. Afftrack is disrupting the market by offering an unlimited-use pricing option that dramatically- low ers the cost of campaigns and an all-in-one platform that includes backend accounting. Afftrack’s success is being driven by their disruptive flat-fee pricing model and all-in-one solution for affiliate marketers. Affiliate marketing tracking platform prices are typically based on the cost per thousand clicks (CPM). Afftrack’s new pricing model, on the other hand, gives advertisers the ability to run a virtually unlimited number of affiliate marketing campaigns for one low, predictable price. Afftrack’s high growth drove their need to replace MySQL with the ClustrixDB scale-out RDBMS. MySQL Was the Performance Bottleneck Afftrack’s SaaS architecture was originally built around MySQL, but as Afftrack’s business took off, MySQL had trouble keeping up with their huge volume of time-sensitive transactions. -

Download Slides

Akmal B. Chaudhri (艾克摩 曹理) -- IBM Senior IT Specialist 9 March 2012 Mirror, mirror on the wall, what’s the fairest database technology of all? © 2012 IBM Corporation Abstract WhatWhat’s’s the the best best fit fit of of database database technology technology and and data data architecture architecture for for today today’s’s applicationapplication requirements, requirements, such such as as Big Big Data Data and and web web-scale-scale computing? computing? Akmal Akmal B. B. ChaudhriChaudhri will will present present an an informative informativeandand objective objectivecomparisoncomparison of of database database technologytechnology and and data data architecture, architecture, including including NoSQL, NoSQL, relational, relational, N NewSQL,ewSQL, graph graph databases,databases, linked linked data, data, native native XML XML databases, databases, column column stores stores and and RDF RDF data data stores. stores. 2 © 2012 IBM Corporation Source: http://www.all-freeware.com/images/small/46590-free_stereogram_screensaver_audio___multimedia_other.jpeg My background . 20+ years experience in IT . Client-facing roles –Developer (Reuters) –Developers –Academic (City University) –Senior executives –Consultant (Logica) –Journalists –Technical Architect (CA) . Community outreach –Senior Architect (Informix) –Senior IT Specialist (IBM) . Publications and presentations . Broad industry experience . Worked with various technologies –Programming languages –IDE –Database Systems 4 © 2012 IBM Corporation Agenda . Introduction . NoSQL . New SQL . Column-Oriented . In-Memory . Summary 5 © 2012 IBM Corporation Introduction © 2012 IBM Corporation Lots of database market analysis 7 © 2012 IBM Corporation What analysts are saying . Gartner 2011 Magic Quadrant for Data Warehouse Data Management –Data warehouse infrastructure to manage “extreme data” –More emphasis, appreciation and value of column-oriented database systems –Increasing adoption of in-memory database systems . -

The Nosql Mouvement

THE NOSQL MOUVEMENT GENOVEVA VARGAS SOLAR FRENCH COUNCIL OF SCIENTIFIC RESEARCH, LIG-LAFMIA, FRANCE [email protected] http://www.vargas-solar.com/bigdata-managment STORING AND ACCESSING HUGE AMOUNTS OF DATA Yota 1024 21 Cloud Zetta 10• Data formats • Data storage supports • Data collection sizes • Data delivery mechanisms 18 Exa 10 RAID Peta 1015 Disk 2 DEALING WITH HUGE AMOUNTS OF DATA Relational Graph Yota 1024 Key value Columns Zetta 1021 Cloud Exa 1018 RAID Concurrency 15 Peta 10 Consistency Disk Atomicity 3 NOSQL STORES CHARACTERISTICS ¡ Simple operations ¡ Key lookups reads and writes of one record or a small number of records ¡ No complex queries or joins ¡ Ability to dynamically add new attributes to data records ¡ Horizontal scalability ¡ Distribute data and operations over many servers ¡ Replicate and distribute data over many servers ¡ No shared memory or disk ¡ High performance ¡ Efficient use of distributed indexes and RAM for data storage ¡ Weak consistency model ¡ Limited transactions Next generation databases mostly addressing some of the points: being non-relational, distributed, open-source and horizontally scalable [http://nosql-database.org] 4 • Data model • Availability • Consistency • Query support • Storage • Durability Data stores designed to scale simple OLTP-style application loads Read/Write operations by thousands/millions of users 5 DATA MODELS ¡ Tu p l e ¡ Row in a relational table, where attributes are pre-defined in a schema, and the values are scalar ¡ Document ¡ Allows values to be nested documents -

Copyright and Use of This Thesis This Thesis Must Be Used in Accordance with the Provisions of the Copyright Act 1968

COPYRIGHT AND USE OF THIS THESIS This thesis must be used in accordance with the provisions of the Copyright Act 1968. Reproduction of material protected by copyright may be an infringement of copyright and copyright owners may be entitled to take legal action against persons who infringe their copyright. Section 51 (2) of the Copyright Act permits an authorized officer of a university library or archives to provide a copy (by communication or otherwise) of an unpublished thesis kept in the library or archives, to a person who satisfies the authorized officer that he or she requires the reproduction for the purposes of research or study. The Copyright Act grants the creator of a work a number of moral rights, specifically the right of attribution, the right against false attribution and the right of integrity. You may infringe the author’s moral rights if you: - fail to acknowledge the author of this thesis if you quote sections from the work - attribute this thesis to another author - subject this thesis to derogatory treatment which may prejudice the author’s reputation For further information contact the University’s Copyright Service. sydney.edu.au/copyright CHERRY GARCIA: LinkingTRANSACTIONS Named Entities to ACROSS Wikipedia HETEROGENEOUS DATA STORES Will Radford Supervisor: Dr. James R. Curran A thesis submitted in fulfilment of the requirements for the degree of Doctor of Philosophy A thesis submittedSchool in offulfilment Information Technologiesof the requirements for the Faculty of Engineering & IT degree of Doctor of Philosophy in the School of Information Technologies at The University of Sydney The University of Sydney 2015 Akon Samir Dey October 2015 © Copyright by Akon Samir Dey 2016 All Rights Reserved ii Abstract In recent years, cloud or utility computing has revolutionised the way software, hardware and network infrastructure is provisioned and deployed into production. -

Distributed Databases – Some Approaches, Models and Current Trends

Trakia Journal of Sciences, Vol. 13, Suppl. 1, pp 414-419, 2015 Copyright © 2015 Trakia University Available online at: http://www.uni-sz.bg ISSN 1313-7069 (print) doi:10.15547/tjs.2015.s.01.071 ISSN 1313-3551 (online) DISTRIBUTED DATABASES – SOME APPROACHES, MODELS AND CURRENT TRENDS S. Klisarova-Belcheva Faculty of Economic and Social Sciences, Plovdiv University “Paisii Hilendarski”, Plovdiv, Bulgaria ABSTRACT The progress of internet technology in recent years increases requirements toward distributed platforms to store huge amounts of data. The purpose of this article is to present an overview of the most common currently used distributed databases (like NoSQL and NewSQL). The patterns of storage of the latter, their advantages and disadvantages in different architectural solutions and development trends are discussed. In conclusion, the paper emphasizes the increased importance of distributed platforms for business software. Key words: distributed databases, big data, NoSQL, NewSQL DISTRIBUTED DATABASES – SOME possibilities and applications of distributed APPROACHES, MODELS AND CURRENT databases (NoSQL) and distributed relational TRENDS databases NewSQL. The revolution of the Internet technology in recent years has increased the necessity of DISTRIBUTED DATABASES – GENERAL distributed platforms for storage of huge CHARACTERISTIC AND volumes of data. This paper aims to give an CLASSIFICATIONS overview of the most famous distributed In the 70s of the last century, working on the databases (like NoSQL and NewSQL). It theory of data storing, Edgar F. Codd creates a compares the patterns of storage, their relational data model. Nowadays this model is advantages and disadvantages in different in the basis of the leading database architectural solutions for storage and management systems (DBMS). -

Big Data Analytics in Healthcare

1 IEEE/CAA JOURNAL OF AUTOMATICA SINICA, VOL. 8, NO. 1, JANUARY 2021 Big Data Analytics in Healthcare — A Systematic Literature Review and Roadmap for Practical Implementation Sohail Imran, Tariq Mahmood, Ahsan Morshed, and Timos Sellis, Fellow, IEEE Abstract—The advent of healthcare information management practitioners and professionals to successfully implement BDA systems (HIMSs) continues to produce large volumes of initiatives in their organizations. healthcare data for patient care and compliance and regulatory Index Terms—Big data analytics (BDA), big data architecture, requirements at a global scale. Analysis of this big data allows for healthcare, NoSQL data stores, patient care, roadmap, systematic boundless potential outcomes for discovering knowledge. Big data literature review. analytics (BDA) in healthcare can, for instance, help determine causes of diseases, generate effective diagnoses, enhance QoS I. Introduction guarantees by increasing efficiency of the healthcare delivery and effectiveness and viability of treatments, generate accurate The advent of healthcare information management systems predictions of readmissions, enhance clinical care, and pinpoint (HIMSs) is now generating huge volumes of patient-centered, opportunities for cost savings. However, BDA implementations in granular-level healthcare data. The high velocity of this data any domain are generally complicated and resource-intensive influences the relationship of hospitals and clinics with their with a high failure rate and no roadmap or success strategies to guide the practitioners. In this paper, we present a comprehensive patients and necessitates the use of analytics to tap into the roadmap to derive insights from BDA in the healthcare (patient needs, attitudes, preferences, and characteristics of clinical care) domain, based on the results of a systematic literature entities such as patients and practitioners [1]–[3].