Design and Development of an Indian Classical Vocal Training Tool

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Semester Wise Detailed Chart of Syllabus of B.A. (Music) (Credit System)

Bharati Vidyapeeth Deemed University, School of Performing Arts, Pune
Semester Wise Detailed Chart of Syllabus of B.A. (Music) (Credit System)
B.A. 1st Year (Music) (Vocal / Instrumental / Percussion), Semester 1 credits: two language papers, 01 credit each; theory paper, 02; stage performance, 14; viva, 07.
1. Language (English), paper L11: Biography of Pt. Sapan Chaudhari, Padma Subramaniam, Pt. Shivkumar Sharma, Pt. Jasraj.
2. Language (Marathi), paper L12: Biography of Pt. Vishnu Digambar Paluskar.
3. Theory (Music) (Vocal & Instrumental) (Notation System), paper T11: 1. Notation system: a) concept and use of notation; b) history of notation; c) Bhatkhande and Paluskar notation systems. 2. Concepts and definitions of terms: Raga, That, Nad, Swara, Shruti, Awartan, Aroha, Awaroha, Swaralankar, Shuddha Swara, Komal Swara, Teewra Swara, etc. 3. Old concepts: Gram, Murchna, etc.
4. Theory (Music) (Percussion) (Notation System), paper T12: 1) Notation system (Taal Paddhatee): a) in the North Indian classical style, the Bhatkhande and Paluskar styles of notation; b) the Karnataki system. 2) Writing of different taals. 3) Notation writing of the intricacies of developing a taal, such as Kayda, Tukda, Paran, Tihai, etc. 4) Definition of terms: Taal, Matra, Khanda, Sam, Kaal, Theka.
5. Viva (Music) (Vocal & Instrumental), paper V11: Information on the practical syllabus, Sem I. Note: 1. Presentation of another Raga from the syllabus (except the Raga sung/played in the stage performance). 2. Information on the Ragas mentioned in the syllabus, i.e. Aaroh-Avaroh, Swar, Varjya Swar, Vadi, Samvadi, Anuvadi, Vivadi, Jaati, time of singing the Raga, etc. 3. Names of Ragas similar to the Ragas mentioned in the syllabus. 4. Definitions: Sangeet, Raag, Taal. 5. Information on Taal Teentaal, i.e. …

MRIDANGA SYLLABUS for MA (CBCS) Effective from 2014-15

BANGALORE UNIVERSITY, P.G. DEPARTMENT OF PERFORMING ARTS, JNANABHARATHI, BANGALORE-560056
MRIDANGA SYLLABUS FOR M.A. (CBCS), effective from 2014-15
Dr. B.M. Jayashree, Professor of Music, Chairperson, BOS (PG)
M.A. Mridanga semester scheme syllabus (CBCS): scheme of examination, continuous evaluation and other requirements
1. ELIGIBILITY: A degree in music with Mridanga as one of the optional subjects, with at least 50% in that optional subject (admission on merit among these applicants); OR a graduate with a minimum of 50% marks in the senior grade examination of Mridanga conducted by the Secondary Education Board of Karnataka; OR a graduate with a minimum of 50% marks in a PG diploma, 2-year diploma or 4-year certificate course in Mridanga conducted by any recognized university of any state outside Karnataka or by a central institution/university; OR any degree with: a) any certificate course in Mridanga; b) All India Radio/Doordarshan gradation; c) any diploma in Mridanga, or a certificate of five years of learning from a veteran musician. d) An entrance test (practical) is compulsory for admission.
2. The M.A. Mridanga course consists of four semesters.
3. The first semester will have three theory papers (core), three practical papers (core) and one practical paper (soft core).
4. The second semester will have three theory papers (core), three practical papers (core), one project work/dissertation practical paper and one practical paper (soft core).
5. The third semester will have two theory papers (core), three practical papers (core) and one open elective practical paper.
6. The fourth semester will have two theory papers (core), two practical papers (core), one project work and one elective paper.

6. ADDITIONAL SUBJECTS (A) MUSIC Any One of the Following Can Be Offered: (Hindustani Or Carnatic)

6. ADDITIONAL SUBJECTS
(A) MUSIC
Any one of the following can be offered (Hindustani or Carnatic):
1. Carnatic Music: Vocal, or
2. Carnatic Music: Melodic Instruments, or
3. Carnatic Music: Percussion Instruments, or
4. Hindustani Music: Vocal, or
5. Hindustani Music: Melodic Instruments, or
6. Hindustani Music: Percussion Instruments
Carnatic Music (Code No. 034)
(i) CARNATIC MUSIC VOCAL
The weightage for Formative Assessment (F.A.) and Summative Assessment (S.A.) for Term I and Term II shall be as follows:
First Term (April - Sept.): Summative 1, Theory Paper 15% + Practicals 35% = 50% of the total weightage for the academic session.
Second Term (Oct. - March): Summative 2, Theory Paper 15% + Practicals 35% = 50% of the total weightage for the academic session.
Total: 100%
Summary: Theory 15% (Term I) + 15% (Term II) = 30%; Practical 35% (Term I) + 35% (Term II) = 70%; Total 100%.
SYLLABUS FOR SUMMATIVE ASSESSMENT, FIRST TERM (APRIL 2016 - SEPTEMBER 2016)
CARNATIC MUSIC (VOCAL) (CODE 031), CLASS IX
Topic (A) Theory: 15 Marks
1. …

Sreenivasarao's Blogs

Music of India – a brief outline – Part sixteen (of 22)
Continued from Part Fifteen
Lakshana Granthas – Continued
8. Sangita-ratnakara by Sarangadeva
Sarangadeva's Sangita-ratnakara (first half of the 13th century) is of particular importance because it was written just before the influence of the Muslim conquest began to assert itself on Indian culture. The music discussed in Sangita-ratnakara is free from Persian influence. Sangita-ratnakara therefore marks the stage at which the 'integrated' music of India stood before it branched into the North and South traditions.
It is clear that by the time of Sarangadeva, the music of India had moved far away from Marga or Gandharva, as also from the system based on Jatis (classes of melodies) and two parent scales. By his time, many new conventions had entered the mainstream, and the concept of Ragas, which had taken firm root, was wielding considerable authority. Sarangadeva brought together various strands of past music traditions, defined almost 267 Ragas, established a sound theoretical basis for music and provided a model for later musicology (Samgita Shastra).
Sarangadeva's emphasis was on the ever-changing nature of music, the expanding role of regional (Desi) influences on it, and the increasing complexity of musical material that needed to be systemised time and again.

1. Could You Tell Us a Little Bit About Yourself? 2. Could You Tell Us a Little Bit About What You Do?

1. Could you tell us a little bit about yourself? 2. Could you tell us a little bit about what you do? For both questions above, please check my website: www.shemeem.com 3. Have Sufi music and Qawwali been part of your childhood? Did the place you grew up in influence your interest in Qawwali? Sufism and Qawwali have been a part of my childhood as well as of my life. My mother’s family belongs to the Suhrawardy and Qadiriyya orders of Sufis, such as Hazrat Bahauddin Zakaria of Multan and Rukunuddin Shah Alam. We also have a connection with Hazrat Shams-e Tabriz, the great Sufi master who was mentor to Maulana Rumi of Konya. I was exposed to musical traditions through my father’s side: my father was a connoisseur of classical music and played the tabla well. My father’s family traces its descent from Ziya’al Din Barani (1285-1361). Barani’s history of the Delhi sultanate in the thirteenth and fourteenth centuries is a major source for studying the Muslim ethnomusicology of the time, especially for the study of Amir Khusrau, the founder of the qawwali tradition in India. Indeed, the qaul “mun kunto maula fa Ali un maula”, with which traditional classical qawwali is initiated, is attributed to Amir Khusrau. I grew up in East Pakistan in the early 1950s; at that time it had a strong musical tradition because of its interfaith communities of Muslims, Hindus, Buddhists, Christians and other faiths, until it was taken over by orthodoxy and militarism.

Bhakti Movement

TELLINGS AND TEXTS
Tellings and Texts: Music, Literature and Performance in North India. Edited by Francesca Orsini and Katherine Butler Schofield.
http://www.openbookpublishers.com
© Francesca Orsini and Katherine Butler Schofield. Copyright of individual chapters is maintained by the chapters’ authors. This work is licensed under a Creative Commons Attribution 4.0 International license (CC BY 4.0). This license allows you to share, copy, distribute and transmit the work; to adapt the work and to make commercial use of the work providing attribution is made to the author (but not in any way that suggests that they endorse you or your use of the work). Attribution should include the following information: Orsini, Francesca and Butler Schofield, Katherine (eds.), Tellings and Texts: Music, Literature and Performance in North India. Cambridge, UK: Open Book Publishers, 2015.
http://dx.doi.org/10.11647/OBP.0062
Further details about CC BY licenses are available at http://creativecommons.org/licenses/by/4.0/
In order to access detailed and updated information on the license, please visit: http://www.openbookpublishers.com/isbn/9781783741021#copyright
All external links were active on 22/09/2015 and archived via the Internet Archive Wayback Machine: https://archive.org/web/
Digital material and resources associated with this volume are available at http://www.openbookpublishers.com/isbn/9781783741021#resources
ISBN Paperback: 978-1-78374-102-1
ISBN Hardback: 978-1-78374-103-8
ISBN Digital (PDF): 978-1-78374-104-5
ISBN Digital ebook (epub): 978-1-78374-105-2
ISBN Digital ebook (mobi): 978-1-78374-106-9
DOI: 10.11647/OBP.0062
King’s College London has generously contributed to the publication of this volume.

Sanskrit Scholars of Orissa

Orissa Review, April 2006
Sanskrit Scholars of Orissa
Jayanti Rath
The contribution of Orissan scholars to the domain of Sanskrit literature is enormous. They have shown their excellence in different branches of knowledge, i.e. Grammar, Politics, Dharma Sastras, Kavyas, Poetics, Astrology, Astronomy, Tantra, Dance, Music, Architecture, Arithmetic, Geography, Trade Routes, Occult Practices, War and War Preparation, Temple Rituals and so on. They have enriched it immensely.
Vishnu Sharma, the famous author of the Pancha Tantra, is the earliest known Sanskrit scholar of Orissa. He was the court poet and priest of Ananda Sakti Varman, the ruler of the Mathara dynasty. The Matharas ruled over Orissa in the 4th and 5th centuries A.D. The Pancha Tantra is considered to be one of the most outstanding works of early India. But prior to this, Upanishads in the Atharva Veda and …
The famous drama 'Anargha Raghava' was written by Murari Mishra. The drama deals with the story of the Ramayana. The earliest reference to the car festival of Orissa is found in this work: he describes the assemblage of a large number of people near Tamila-Studde Purusottama Kshetra, which is situated on the sea-shore. Murari Mishra belonged approximately to the 8th century A.D., because he was referred to by Raja Sekhar of the 9th century A.D. in his Kavya Mimamsa.
Satananda, a great astronomer of Orissa, has left an indelible mark in the field of Sanskrit study. His work Bhasvati was once accepted as an authority on Astronomy, and several commentaries were written on this work by scholars from different parts of India, which bear testimony to its popularity.

Vol.74-76 2003-2005.Pdf

ISSN 0970-3101
THE JOURNAL OF THE MUSIC ACADEMY MADRAS
Devoted to the Advancement of the Science and Art of Music
Vol. LXXIV, 2003
“I dwell not in Vaikunta, nor in the hearts of Yogins, not in the Sun; (but) where my Bhaktas sing, there be I, Narada!” (Narada Bhakti Sutra)
EDITORIAL BOARD: Dr. V.V. Srivatsa (Editor), N. Murali, President (Ex Officio), Dr. Malathi Rangaswami (Convenor), Sulochana Pattabhi Raman, Lakshmi Viswanathan, Dr. SA.K. Durga, Dr. Pappu Venugopala Rao, V. Sriram
THE MUSIC ACADEMY MADRAS, New No. 168 (Old No. 306), T.T.K. Road, Chennai 600 014. Website: www.musicacademymadras.in
ANNUAL SUBSCRIPTION: Inland Rs. 150; Foreign US $5
Statement about ownership and other particulars about the newspaper “JOURNAL OF THE MUSIC ACADEMY MADRAS”, Chennai, as required to be published under Section 19-D sub-section (B) of the Press and Registration of Books Act read with rule 8 of the Registration of Newspapers (Central) Rules, 1956.
FORM IV: JOURNAL OF THE MUSIC ACADEMY MADRAS
Place of Publication: Chennai
Periodicity of Publication: Annual
Printer: Mr. N Subramanian, 14, Neelakanta Mehta Street, T Nagar, Chennai 600 017
Publisher: Dr. …
All correspondence relating to the journal should be addressed, and all books etc. intended for it should be sent in duplicate, to the Editor, The Journal of the Music Academy Madras, New 168 (Old 306), T.T.K. Road, Chennai 600 014. Articles on music and dance are accepted for publication on the recommendation of the Editor. The Editor reserves the right to accept …

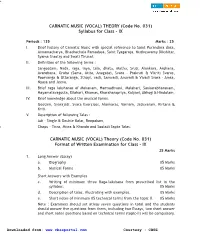

CARNATIC MUSIC (VOCAL) THEORY (Code No. 031)

CARNATIC MUSIC (VOCAL) THEORY (Code No. 031)
Syllabus for Class IX. Periods: 135. Marks: 25.
I. Brief history of Carnatic Music with special reference to Saint Purandara Dasa, Annamacharya, Bhadrachala Ramadasa, Saint Tyagaraja, Muthuswamy Dikshitar, Syama Shastry and Swati Tirunal.
II. Definition of the following terms: Sangeetam, Nada, Raga, Laya, Tala, Dhatu, Mathu, Sruti, Alankara, Arohana, Avarohana, Graha (Sama, Atita, Anagata), Svara (Prakruti & Vikriti Svaras), Poorvanga & Uttaranga, Sthayi, Vadi, Samvadi, Anuvadi & Vivadi Svara, Amsa, Nyasa and Jeeva.
III. Brief raga lakshanas of Mohanam, Hamsadhvani, Malahari, Sankarabharanam, Mayamalavagoula, Bilahari, Khamas, Kharaharapriya, Kalyani, Abhogi & Hindolam.
IV. Brief knowledge of the musical forms: Geetam, Svarajati, Svara Exercises, Alankaras, Varnam, Jatisvaram, Kirtana & Kriti.
V. Description of the following Talas: Adi (Single & Double Kalai), Roopakam, Chapu (Tisra, Misra & Khanda) and the Sooladi Sapta Talas.
CARNATIC MUSIC (VOCAL) THEORY (Code No. 031): Format of Written Examination for Class IX (25 Marks)
1. Long Answer (Essay): a. Biography, 05 Marks; b. Musical Forms, 05 Marks.
Short Answers with Examples: c. Writing of a minimum of three Raga-lakshanas from the prescribed list in the syllabus, 05 Marks; d. Description of talas, illustrated with examples, 05 Marks; e. Short notes on a minimum of 05 technical terms from topic II, 05 Marks.
Note: Examiners should set at least seven questions in total, and students should answer five of them; two essays, two short answers and the short-notes question on technical terms (topic II) will be compulsory.
Downloaded from: www.cbseportal.com. Courtesy: CBSE
CARNATIC MUSIC (VOCAL) PRACTICAL (Code No. 031)
Syllabus for Class IX. Periods: 405. Marks: 75.
I. Vocal exercises: Svaravalis, Hechchu and Taggu Sthayi, Alankaras in three degrees of speed.

CARNATIC MUSIC (VOCAL) (Code No. 031)

CARNATIC MUSIC (VOCAL) (Code No. 031), Class IX (2020-21)
Theory: 30 Marks. Time: 2 Hours.
Topics (number of periods in brackets):
I. Brief history of Carnatic Music with special reference to Saint Purandara Dasa, Annamacharya, Bhadrachala Ramadasa, Saint Tyagaraja, Muthuswamy Dikshitar, Syama Sastry and Swati Tirunal. (10)
II. Definition of the following terms: Sangeetam, Nada, Raga, Laya, Tala, Dhatu, Mathu, Sruti, Alankara, Arohana, Avarohana, Graha (Sama, Atita, Anagata), Svara (Prakruti & Vikriti Svaras), Poorvanga & Uttaranga, Sthayi, Vadi, Samvadi, Anuvadi & Vivadi Svara, Amsa, Nyasa and Jeeva. (12)
III. Brief raga lakshanas of Mohanam, Hamsadhvani, Malahari, Sankarabharanam, Mayamalavagoula, Bilahari, Khamas, Kharaharapriya, Kalyani, Abhogi & Hindolam. (12)
IV. Brief knowledge of the musical forms: Geetam, Svarajati, Svara Exercises, Alankaras, Varnam, Jatisvaram, Kirtana & Kriti. (8)
V. Description of the following Talas: Adi (Single & Double Kalai), Roopakam, Chapu (Tisra, Misra & Khanda) and the Sooladi Sapta Talas. (8)
VI. Notation of Gitams in Roopaka and Triputa Tala. (10)
Total periods: 60
CARNATIC MUSIC (VOCAL): Format of written examination for Class IX. Theory: 30 Marks. Time: 2 Hours.
Section I: Six MCQs based on all the above-mentioned topics, 6 Marks.
Section II: Notation of Gitams in the above-mentioned Talas, 6 Marks; writing of a minimum of two Raga-lakshanas from the prescribed list in the syllabus, 6 Marks.
Section III: Biography; Musical Forms, 6 Marks.
Section IV: Description of talas, illustrated with examples; short notes on a minimum of 05 technical terms from topic II; definition of any two of the following terms (Sangeetam, Nada, Raga, Sruti, Alankara, Svara), 6 Marks.
Total: 30 Marks
Note: Examiners should set at least seven questions in total, and students should answer five of them; two essays, two short answers and the short-notes question on technical terms will be compulsory.

Raga (Melodic Mode)

A Raga (IAST: rāga), Raag or Ragam, literally means "coloring, tingeing, dyeing".[1][2] The term also refers to a concept close to melodic mode in Indian classical music.[3] Raga is a remarkable and central feature of the classical Indian music tradition, but has no direct translation to concepts in the classical European music tradition.[4][5] Each raga is an array of melodic structures with musical motifs, considered in the Indian tradition to have the ability to "color the mind" and affect the emotions of the audience.[1][2][5] A raga consists of at least five notes, and each raga provides the musician with a musical framework.[3][6][7] The specific notes within a raga can be reordered and improvised by the musician, but a specific raga is either ascending or descending. Each raga has an emotional significance and symbolic associations such as with season, time and mood.[3] The raga is considered a means in Indian musical tradition to evoke certain feelings in an audience. Hundreds of ragas are recognized in the classical Indian tradition, of which about 30 are common.[3][7] Each raga, state Dorothea …

PROFILE: Saniya

PROFILE
Born into a family of music lovers, Saniya was discovered to be talented by her parents at the tender age of 3, when she started playing songs on the harmonium by herself. A born artist, she has had a good understanding of Swar and Laya since childhood. Her father is a violin player, her mother is a keen connoisseur of music, and her brother, Sameep Kulkarni, is a disciple of Sitar maestro Ustad Shahid Parvez. Education is also given utmost importance in her family, and all family members are merit-rank holders.
Saniya was fortunate to receive blessings from Ganasaraswati Kishoritai Amonkar at the age of 6, and she started learning classical music from Smt. Lilatai Gharpure (senior disciple of Smt. Hirabai Badodekar). Saniya made her debut at the age of 12 with a performance in front of the legendary Pt. Jitendra Abhisheki, and created a long-lasting impression on the audience. Saniya had the privilege of learning under the able guidance of renowned senior vocalist Dr. Ashwini Bhide-Deshpande of the Jaipur-Atrauli Gharana. She received comprehensive gharana talim from Ashwiniji for 12 years, travelling frequently between Pune and Mumbai.
Saniya is a “Sangeet Visharad” with distinction and a gold medalist in M.Com. She has also completed the demanding C.S. (Company Secretary) course. Her hard work, perseverance and love for music have helped her prove herself on both the musical and academic fronts. Besides this, Saniya has been showered with blessings and appreciation by musicologists, music critics and stalwarts of Hindustani classical music such as Pt. Bhimsen Joshi, Bharat Ratna Lata Mangeshkar, Late Pt.