The General Linear Model


Recommended publications


Nonpar MANOVA via Independence Testing (arXiv:1910.08883v3 [stat.ML], 2 Apr 2021)

Nonpar MANOVA via Independence Testing. Sambit Panda, Cencheng Shen, Ronan Perry, Jelle Zorn, Antoine Lutz, Carey E. Priebe and Joshua T. Vogelstein

Abstract. The k-sample testing problem tests whether or not k groups of data points are sampled from the same distribution. Multivariate analysis of variance (Manova) is currently the gold standard for k-sample testing but makes strong, often inappropriate, parametric assumptions. Moreover, independence testing and k-sample testing are tightly related, and there are many nonparametric multivariate independence tests with strong theoretical and empirical properties, including distance correlation (Dcorr) and Hilbert-Schmidt-Independence-Criterion (Hsic). We prove that universally consistent independence tests achieve universally consistent k-sample testing, and that k-sample statistics like Energy and Maximum Mean Discrepancy (MMD) are exactly equivalent to Dcorr. Empirically evaluating these tests for k-sample scenarios demonstrates that these nonparametric independence tests typically outperform Manova, even for Gaussian distributed settings. Finally, we extend these nonparametric k-sample testing procedures to perform multiway and multilevel tests. Thus, we illustrate the existence of many theoretically motivated and empirically performant k-sample tests. A Python package with all independence and k-sample tests called hyppo is available from https://hyppo.neurodata.io/.

1 Introduction. A fundamental problem in statistics is the k-sample testing problem. Consider the two-sample problem: we obtain two datasets $u_i \in \mathbb{R}^p$ for $i = 1, \ldots, n$ and $v_j \in \mathbb{R}^p$ for $j = 1, \ldots, m$. Assume each $u_i$ is sampled independently and identically (i.i.d.) from $F_U$ and that each $v_j$ is sampled i.i.d.
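To make the two-sample problem concrete, here is a minimal sketch of the Energy statistic mentioned in the abstract, paired with a permutation test. It is written for one-dimensional samples in plain Python and does not use or imitate the hyppo API; the function names, the V-statistic form, and the permutation count are assumptions chosen for illustration.

```python
import random
from itertools import product


def mean_abs_diff(a, b):
    """Average |x - y| over all pairs (x, y) with x in a and y in b."""
    return sum(abs(x - y) for x, y in product(a, b)) / (len(a) * len(b))


def energy_distance(u, v):
    """Two-sample energy statistic (V-statistic form) for 1-D samples.

    It is zero when both samples are identical and grows as they separate.
    """
    return 2.0 * mean_abs_diff(u, v) - mean_abs_diff(u, u) - mean_abs_diff(v, v)


def permutation_pvalue(u, v, n_perm=500, seed=0):
    """Permutation p-value for H0: u and v come from the same distribution."""
    rng = random.Random(seed)
    observed = energy_distance(u, v)
    pooled = list(u) + list(v)
    exceed = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # relabel the pooled points at random
        if energy_distance(pooled[:len(u)], pooled[len(u):]) >= observed:
            exceed += 1
    # Add-one correction keeps the p-value strictly positive.
    return (exceed + 1) / (n_perm + 1)
```

A small p-value from `permutation_pvalue` suggests the two samples were not drawn from the same distribution; no parametric form is assumed for either sample.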

On the Meaning and Use of Kurtosis

Psychological Methods, 1997, Vol. 2, No. 3, 292-307. Copyright 1997 by the American Psychological Association, Inc. 1082-989X/97/$3.00

On the Meaning and Use of Kurtosis. Lawrence T. DeCarlo, Fordham University

For symmetric unimodal distributions, positive kurtosis indicates heavy tails and peakedness relative to the normal distribution, whereas negative kurtosis indicates light tails and flatness. Many textbooks, however, describe or illustrate kurtosis incompletely or incorrectly. In this article, kurtosis is illustrated with well-known distributions, and aspects of its interpretation and misinterpretation are discussed. The role of kurtosis in testing univariate and multivariate normality; as a measure of departures from normality; in issues of robustness, outliers, and bimodality; in generalized tests and estimators, as well as limitations of and alternatives to the kurtosis measure $\beta_2$, are discussed.

It is typically noted in introductory statistics courses that distributions can be characterized in terms of central tendency, variability, and shape. With respect to shape, virtually every textbook defines and illustrates skewness. On the other hand, another aspect of shape, which is kurtosis, is either not discussed or, worse yet, is often described or illustrated incorrectly. Kurtosis is also frequently not reported in research articles, in spite of the fact that virtually every statistical package provides a measure of kurtosis.

... standard deviation. The normal distribution has a kurtosis of 3, and $\beta_2 - 3$ is often used so that the reference normal distribution has a kurtosis of zero ($\beta_2 - 3$ is sometimes denoted as $\gamma_2$). A sample counterpart to $\beta_2$ can be obtained by replacing the population moments with the sample moments, which gives

$$b_2 = \frac{\sum (X_i - \bar{X})^4 / n}{\left(\sum (X_i - \bar{X})^2 / n\right)^2}.$$
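The sample kurtosis described in the excerpt, the fourth sample moment about the mean divided by the squared second sample moment, can be sketched in a few lines. The function names are illustrative, and both moments use the divisor n as in the excerpt, not n - 1.

```python
def sample_kurtosis(xs):
    """Sample kurtosis b2: fourth central moment over squared second central moment."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n  # second sample moment about the mean
    m4 = sum((x - mean) ** 4 for x in xs) / n  # fourth sample moment about the mean
    return m4 / m2 ** 2


def excess_kurtosis(xs):
    """b2 - 3, so that the normal distribution serves as the zero reference."""
    return sample_kurtosis(xs) - 3.0
```

For the data 1, 2, 3, 4, 5 the second moment is 2 and the fourth is 6.8, so `sample_kurtosis` returns 6.8 / 4 = 1.7, which is below the normal reference value of 3.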

Generalized Linear Models

A generalized linear model (GLM) consists of three parts.

i) The first part is a random variable giving the conditional distribution of a response $Y_i$ given the values of a set of covariates $X_{ij}$. In the original work on GLMs by Nelder and Wedderburn (1972) this random variable was a member of an exponential family, but later work has extended beyond this class of random variables.

ii) The second part is a linear predictor, $\eta_i = \alpha + \beta_1 X_{i1} + \beta_2 X_{i2} + \cdots + \beta_k X_{ik}$.

iii) The third part is a smooth and invertible link function $g(\cdot)$ which transforms the expected value of the response variable, $\mu_i = E(Y_i)$, and is equal to the linear predictor: $g(\mu_i) = \eta_i = \alpha + \beta_1 X_{i1} + \beta_2 X_{i2} + \cdots + \beta_k X_{ik}$.

As shown in Tables 15.1 and 15.2, both the general linear model that we have studied extensively and the logistic regression model from Chapter 14 are special cases of this model. One property of members of the exponential family of distributions is that the conditional variance of the response is a function of its mean, $V(\mu)$, and possibly a dispersion parameter $\phi$. The expressions for the variance functions for common members of the exponential family are shown in Table 15.2. Also, for each distribution there is a so-called canonical link function, which simplifies some of the GLM calculations, which is also shown in Table 15.2.

Estimation and Testing for GLMs. Parameter estimation in GLMs is conducted by the method of maximum likelihood. As with logistic regression models from the last chapter, the generalization of the residual sums of squares from the general linear model is the residual deviance, $D_m = 2(\log L_s - \log L_m)$, where $L_m$ is the maximized likelihood for the model of interest, and $L_s$ is the maximized likelihood for a saturated model, which has one parameter per observation and fits the data as well as possible.
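The residual deviance, twice the gap between the saturated and fitted log-likelihoods, can be illustrated with a Poisson response. This is a hedged sketch: the Poisson family, the function names, and the convention of skipping the y log(mu) term when y = 0 are choices made for this example and are not taken from the excerpt.

```python
import math


def poisson_loglik(y, mu):
    """Poisson log-likelihood of counts y under means mu (0 * log 0 treated as 0)."""
    total = 0.0
    for yi, mi in zip(y, mu):
        term = -mi - math.lgamma(yi + 1)  # lgamma(y + 1) equals log(y!)
        if yi > 0:
            term += yi * math.log(mi)
        total += term
    return total


def residual_deviance(y, mu_hat):
    """Twice the saturated log-likelihood minus the fitted log-likelihood."""
    log_l_saturated = poisson_loglik(y, y)  # saturated model: one mean per observation
    log_l_model = poisson_loglik(y, mu_hat)
    return 2.0 * (log_l_saturated - log_l_model)


# Intercept-only model: every fitted mean equals the sample mean of the counts.
counts = [2, 0, 3, 1]
fitted = [sum(counts) / len(counts)] * len(counts)
deviance = residual_deviance(counts, fitted)
```

The deviance is zero when the fitted means reproduce the data exactly and positive otherwise, which is why it plays the role that the residual sum of squares plays in the general linear model.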

Univariate and Multivariate Skewness and Kurtosis for Measuring Nonnormality: Prevalence, Influence and Estimation

Univariate and Multivariate Skewness and Kurtosis for Measuring Nonnormality: Prevalence, Influence and Estimation. Meghan K. Cain, Zhiyong Zhang, and Ke-Hai Yuan, University of Notre Dame

Author Note. This research is supported by a grant from the U.S. Department of Education (R305D140037). However, the contents of the paper do not necessarily represent the policy of the Department of Education, and you should not assume endorsement by the Federal Government. Correspondence concerning this article can be addressed to Meghan Cain, Ke-Hai Yuan, or Zhiyong Zhang, Department of Psychology, University of Notre Dame, 118 Haggar Hall, Notre Dame, IN 46556.

Abstract. Nonnormality of univariate data has been extensively examined previously (Blanca et al., 2013; Micceri, 1989). However, less is known of the potential nonnormality of multivariate data although multivariate analysis is commonly used in psychological and educational research. Using univariate and multivariate skewness and kurtosis as measures of nonnormality, this study examined 1,567 univariate distributions and 254 multivariate distributions collected from authors of articles published in Psychological Science and the American Education Research Journal. We found that 74% of univariate distributions and 68% of multivariate distributions deviated from normal distributions. In a simulation study using typical values of skewness and kurtosis that we collected, we found that the resulting type I error rates were 17% in a t-test and 30% in a factor analysis under some conditions. Hence, we argue that it is time to routinely report skewness and kurtosis along with other summary statistics such as means and variances.

Multivariate Chemometrics As a Strategy to Predict the Allergenic Nature of Food Proteins

Symmetry, Article. Multivariate Chemometrics as a Strategy to Predict the Allergenic Nature of Food Proteins. Miroslava Nedyalkova (Department of Inorganic Chemistry, Faculty of Chemistry and Pharmacy, University of Sofia, 1 James Bourchier Blvd., 1164 Sofia, Bulgaria) and Vasil Simeonov (Department of Analytical Chemistry, Faculty of Chemistry and Pharmacy, University of Sofia, 1 James Bourchier Blvd., 1164 Sofia, Bulgaria; corresponding author). Received: 3 September 2020; Accepted: 21 September 2020; Published: 29 September 2020

Abstract: The purpose of the present study is to develop a simple method for the classification of food proteins with respect to their allergenicity. The methods applied to solve the problem are well-known multivariate statistical approaches (hierarchical and non-hierarchical cluster analysis, two-way clustering, principal components and factor analysis) that are a substantial part of modern exploratory data analysis (chemometrics). The methods were applied to a data set consisting of 18 food proteins (allergenic and non-allergenic). The results obtained convincingly showed that a successful separation of the two types of food proteins could be easily achieved with the selection of simple and accessible physicochemical and structural descriptors. The results from the present study could be of significant importance for distinguishing allergenic from non-allergenic food proteins without engaging complicated software methods and resources. The present study corresponds entirely to the concept of the journal and of the Special Issue, which seeks advanced chemometric strategies for solving structural problems of biomolecules.

Keywords: food proteins; allergenicity; multivariate statistics; structural and physicochemical descriptors; classification

Robust Bayesian General Linear Models

NeuroImage 36 (2007) 661–671, www.elsevier.com/locate/ynimg

Robust Bayesian general linear models. W.D. Penny, J. Kilner, and F. Blankenburg, Wellcome Department of Imaging Neuroscience, University College London, 12 Queen Square, London WC1N 3BG, UK. Received 22 September 2006; revised 20 November 2006; accepted 25 January 2007. Available online 7 May 2007.

We describe a Bayesian learning algorithm for Robust General Linear Models (RGLMs). The noise is modeled as a Mixture of Gaussians rather than the usual single Gaussian. This allows different data points to be associated with different noise levels and effectively provides a robust estimation of regression coefficients. A variational inference framework is used to prevent overfitting and provides a model order selection criterion for noise model order. This allows the RGLM to default to the usual GLM when robustness is not required. The method is compared to other robust regression methods and applied to synthetic data and fMRI. © 2007 Elsevier Inc. All rights reserved.

... them from the data (Jung et al., 1999). This is, however, a non-automatic process and will typically require user intervention to disambiguate the discovered components. In fMRI, autoregressive (AR) modeling can be used to downweight the impact of periodic respiratory or cardiac noise sources (Penny et al., 2003). More recently, a number of approaches based on robust regression have been applied to imaging data (Wager et al., 2005; Diedrichsen and Shadmehr, 2005). These approaches relax the assumption underlying ordinary regression that the errors be normally (Wager et al., 2005) or identically (Diedrichsen and Shadmehr, 2005) distributed. In Wager et al.

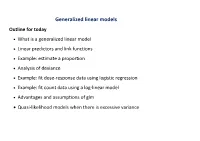

Generalized Linear Models Outline for Today

Generalized linear models

Outline for today
• What is a generalized linear model
• Linear predictors and link functions
• Example: estimate a proportion
• Analysis of deviance
• Example: fit dose-response data using logistic regression
• Example: fit count data using a log-linear model
• Advantages and assumptions of glm
• Quasi-likelihood models when there is excessive variance

Review: what is a (general) linear model

A model of the following form: $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \text{error}$
• Y is the response variable
• The X's are the explanatory variables
• The β's are the parameters of the linear equation
• The errors are normally distributed with equal variance at all values of the X variables
• Uses least squares to fit model to data, estimate parameters
• lm in R

The predicted Y, symbolized here by µ, is modeled as $\mu = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots$

What is a generalized linear model

A model whose predicted values are of the form $g(\mu) = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots$
• The model still includes a linear predictor (to the right of "=")
• g(µ) is the "link function"
• Wide diversity of link functions accommodated
• Non-normal distributions of errors OK (specified by link function)
• Unequal error variances OK (specified by link function)
• Uses maximum likelihood to estimate parameters
• Uses log-likelihood ratio tests to test parameters
• glm in R

The two most common link functions

Log (used for count data): $\eta = \log \mu$. The inverse function is $\mu = e^{\eta}$.

Logistic or logit (used for binary data): $\eta = \log \frac{\mu}{1 - \mu}$. The inverse function is $\mu = \frac{e^{\eta}}{1 + e^{\eta}}$.

In all cases log refers to natural logarithm (base e).
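The log and logit links and their inverses can be written out directly. The sketch below uses only the Python standard library; the function names are illustrative, and the inverse logit is written as 1 / (1 + exp(-eta)), which is algebraically the same as exp(eta) / (1 + exp(eta)).

```python
import math


def log_link(mu):
    """Log link for count data: eta = log(mu), defined for mu > 0."""
    return math.log(mu)


def inverse_log_link(eta):
    """Inverse of the log link: mu = exp(eta)."""
    return math.exp(eta)


def logit_link(mu):
    """Logit link for binary data: eta = log(mu / (1 - mu)), defined for 0 < mu < 1."""
    return math.log(mu / (1.0 - mu))


def inverse_logit_link(eta):
    """Inverse of the logit link: mu = exp(eta) / (1 + exp(eta))."""
    return 1.0 / (1.0 + math.exp(-eta))


# A linear predictor of 0 corresponds to a mean count of 1 or a probability of 0.5.
mean_count = inverse_log_link(0.0)
probability = inverse_logit_link(0.0)
```

Applying a link and then its inverse returns the original mean, which is what lets the linear predictor range over the whole real line while the mean stays in its valid range.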

In the Term Multivariate Analysis Has Been Defined Variously by Different Authors and Has No Single Definition

Newsom Psy 522/622 Multiple Regression and Multivariate Quantitative Methods, Winter 2021 1 Multivariate Analyses The term "multivariate" in the term multivariate analysis has been defined variously by different authors and has no single definition. Most statistics books on multivariate statistics define multivariate statistics as tests that involve multiple dependent (or response) variables together (Pituch & Stevens, 2105; Tabachnick & Fidell, 2013; Tatsuoka, 1988). But common usage by some researchers and authors also include analysis with multiple independent variables, such as multiple regression, in their definition of multivariate statistics (e.g., Huberty, 1994). Some analyses, such as principal components analysis or canonical correlation, really have no independent or dependent variable as such, but could be conceived of as analyses of multiple responses. A more strict statistical definition would define multivariate analysis in terms of two or more random variables and their multivariate distributions (e.g., Timm, 2002), in which joint distributions must be considered to understand the statistical tests. I suspect the statistical definition is what underlies the selection of analyses that are included in most multivariate statistics books. Multivariate statistics texts nearly always focus on continuous dependent variables, but continuous variables would not be required to consider an analysis multivariate. Huberty (1994) describes the goals of multivariate statistical tests as including prediction (of continuous outcomes or -

Nonparametric Multivariate Kurtosis and Tailweight Measures

Nonparametric Multivariate Kurtosis and Tailweight Measures. Jin Wang, Northern Arizona University, and Robert Serfling, University of Texas at Dallas. November 2004, final preprint version, to appear in Journal of Nonparametric Statistics, 2005.

Jin Wang: Department of Mathematics and Statistics, Northern Arizona University, Flagstaff, Arizona 86011-5717, USA. Robert Serfling: Department of Mathematical Sciences, University of Texas at Dallas, Richardson, Texas 75083-0688, USA. Website: www.utdallas.edu/~serfling. Support by NSF Grant DMS-0103698 is gratefully acknowledged.

Abstract. For nonparametric exploration or description of a distribution, the treatment of location, spread, symmetry and skewness is followed by characterization of kurtosis. Classical moment-based kurtosis measures the dispersion of a distribution about its "shoulders". Here we consider quantile-based kurtosis measures. These are robust, are defined more widely, and discriminate better among shapes. A univariate quantile-based kurtosis measure of Groeneveld and Meeden (1984) is extended to the multivariate case by representing it as a transform of a dispersion functional. A family of such kurtosis measures defined for a given distribution and taken together comprises a real-valued "kurtosis functional", which has intuitive appeal as a convenient two-dimensional curve for description of the kurtosis of the distribution. Several multivariate distributions in any dimension may thus be compared with respect to their kurtosis in a single two-dimensional plot. Important properties of the new multivariate kurtosis measures are established. For example, for elliptically symmetric distributions, this measure determines the distribution within affine equivalence. Related tailweight measures, influence curves, and asymptotic behavior of sample versions are also discussed.

Stat 714 Linear Statistical Models

STAT 714 LINEAR STATISTICAL MODELS, Fall 2010, Lecture Notes. Joshua M. Tebbs, Department of Statistics, The University of South Carolina

Contents

1 Examples of the General Linear Model

2 The Linear Least Squares Problem
2.1 Least squares estimation
2.2 Geometric considerations
2.3 Reparameterization

3 Estimability and Least Squares Estimators
3.1 Introduction
3.2 Estimability
3.2.1 One-way ANOVA
3.2.2 Two-way crossed ANOVA with no interaction
3.2.3 Two-way crossed ANOVA with interaction
3.3 Reparameterization
3.4 Forcing least squares solutions using linear constraints

4 The Gauss-Markov Model
4.1 Introduction
4.2 The Gauss-Markov Theorem
4.3 Estimation of σ² in the GM model
4.4 Implications of model selection
4.4.1 Underfitting (Misspecification)
4.4.2 Overfitting
4.5 The Aitken model and generalized least squares

5 Distributional Theory
5.1 Introduction
5.2 Multivariate normal distribution
5.2.1 Probability density function
5.2.2 Moment generating functions
5.2.3 Properties
5.2.4 Less-than-full-rank normal distributions
5.2.5 Independence results
5.2.6 Conditional distributions
5.3 Noncentral χ² distribution
5.4 Noncentral F distribution
5.5 Distributions of quadratic forms
5.6 Independence of quadratic forms
5.7 Cochran's Theorem

Iterative Approaches to Handling Heteroscedasticity with Partially Known Error Variances

International Journal of Statistics and Probability; Vol. 8, No. 2; March 2019. ISSN 1927-7032, E-ISSN 1927-7040. Published by Canadian Center of Science and Education.

Iterative Approaches to Handling Heteroscedasticity With Partially Known Error Variances. Morteza Marzjarani. Correspondence: Morteza Marzjarani, National Marine Fisheries Service, Southeast Fisheries Science Center, Galveston Laboratory, 4700 Avenue U, Galveston, Texas 77551, USA. Received: January 30, 2019. Accepted: February 20, 2019. Online Published: February 22, 2019. doi:10.5539/ijsp.v8n2p159 URL: https://doi.org/10.5539/ijsp.v8n2p159

Abstract. Heteroscedasticity plays an important role in data analysis. In this article, this issue is presented along with a few different approaches for handling it. First, iterative weighted least squares (IRLS) and iterative feasible generalized least squares (IFGLS) are deployed and proper weights for reducing heteroscedasticity are determined. Next, a new approach for handling heteroscedasticity is introduced. In this approach, a multiple linear regression (MLR) model or a general linear model (GLM) is fitted to a sufficiently large data set, and the data are divided into two parts by inspecting the residuals, based on the results of testing for heteroscedasticity or via simulations. The first part contains the records where the absolute values of the residuals can be assumed small enough that heteroscedasticity is ignorable. Under this assumption, the error variances are small and close to those of their neighboring points. Such error variances can be assumed known (but not necessarily equal). The second, remaining portion of the data is categorized as heteroscedastic.
Through real data sets, it is concluded that this approach reduces the number of unusual (such as influential) data points suggested for further inspection and, more importantly, lowers the root MSE (RMSE), resulting in a more robust set of parameter estimates.
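When error variances are treated as known, the standard remedy is weighted least squares with weights equal to the reciprocal variances. The sketch below shows that generic idea for a single predictor; it is not the article's iterative procedure, and the function name and arguments are hypothetical.

```python
def weighted_least_squares(x, y, variances):
    """Fit y = intercept + slope * x, weighting each point by 1 / variance.

    Assumes the error variance of every observation is known and positive.
    """
    weights = [1.0 / v for v in variances]
    total_w = sum(weights)
    # Weighted means of the predictor and the response.
    x_bar = sum(w * xi for w, xi in zip(weights, x)) / total_w
    y_bar = sum(w * yi for w, yi in zip(weights, y)) / total_w
    # Weighted cross-product and sum of squares about the weighted means.
    s_xy = sum(w * (xi - x_bar) * (yi - y_bar) for w, xi, yi in zip(weights, x, y))
    s_xx = sum(w * (xi - x_bar) ** 2 for w, xi in zip(weights, x))
    slope = s_xy / s_xx
    intercept = y_bar - slope * x_bar
    return intercept, slope
```

Points with large known variance receive little weight, so a noisy observation pulls the fitted line less than it would under ordinary least squares. With equal variances the result coincides with ordinary least squares.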

Bayes Estimates for the Linear Model

Bayes Estimates for the Linear Model. By D. V. LINDLEY AND A. F. M. SMITH, University College, London. [Read before the ROYAL STATISTICAL SOCIETY at a meeting organized by the RESEARCH SECTION on Wednesday, December 8th, 1971, Mr M. J. R. HEALY in the Chair]

SUMMARY. The usual linear statistical model is reanalyzed using Bayesian methods and the concept of exchangeability. The general method is illustrated by applications to two-factor experimental designs and multiple regression.

Keywords: LINEAR MODEL; LEAST SQUARES; BAYES ESTIMATES; EXCHANGEABILITY; ADMISSIBILITY; TWO-FACTOR EXPERIMENTAL DESIGN; MULTIPLE REGRESSION; RIDGE REGRESSION; MATRIX INVERSION.

INTRODUCTION. ATTENTION is confined in this paper to the linear model, $E(y) = A\theta$, where $y$ is a vector of observations, $A$ a known design matrix and $\theta$ a vector of unknown parameters. The usual estimate of $\theta$ employed in this situation is that derived by the method of least squares. We argue that it is typically true that there is available prior information about the parameters and that this may be exploited to find improved, and sometimes substantially improved, estimates. In this paper we explore a particular form of prior information based on de Finetti's (1964) important idea of exchangeability. The argument is entirely within the Bayesian framework. Recently there has been much discussion of the respective merits of Bayesian and non-Bayesian approaches to statistics: we cite, for example, the paper by Cornfield (1969) and its ensuing discussion. We do not feel that it is necessary or desirable to add to this type of literature, and since we know of no reasoned argument against the Bayesian position we have adopted it here.
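How prior information can change a least squares estimate is easiest to see with a single parameter. The sketch below assumes a model y_i = a_i * theta + error with known normal noise variance and a normal prior on theta. This is the textbook conjugate case, not the exchangeable prior structure developed in the paper, and every name in it is hypothetical.

```python
def least_squares_estimate(a, y):
    """Least squares estimate of theta in y_i = a_i * theta + error."""
    return sum(ai * yi for ai, yi in zip(a, y)) / sum(ai * ai for ai in a)


def posterior_mean(a, y, noise_var, prior_mean, prior_var):
    """Posterior mean of theta under a N(prior_mean, prior_var) prior.

    Assumes independent N(0, noise_var) errors with noise_var known.
    """
    data_precision = sum(ai * ai for ai in a) / noise_var
    prior_precision = 1.0 / prior_var
    weighted_sum = sum(ai * yi for ai, yi in zip(a, y)) / noise_var
    return (weighted_sum + prior_mean * prior_precision) / (data_precision + prior_precision)


design = [1.0, 1.0, 1.0, 1.0]
observations = [2.0, 4.0, 6.0, 8.0]
ls = least_squares_estimate(design, observations)
shrunk = posterior_mean(design, observations, noise_var=1.0, prior_mean=0.0, prior_var=1.0)
```

Here the least squares estimate is 20 / 4 = 5, while the posterior mean is 20 / (4 + 1) = 4, pulled toward the prior mean of 0. As the prior variance grows, the posterior mean approaches the least squares value; with a zero prior mean this has the same form as a ridge estimate.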