A Brief and Informal Introduction to the Lambda Calculus

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Data Types & Arithmetic Expressions

Data Types & Arithmetic Expressions. Contents: 1. Objective; 2. Data Types; 3. Integers; 4. Real numbers; 5. Characters and strings; 6. Basic Data Types Sizes: In Class; 7. Constant Variables; 8. Questions/Practice; 9. Input Statement; 10. Arithmetic Expressions; 11. Questions/Practice; 12. Shorthand operators; 13. Questions/Practice; 14. Type conversions. Abdelghani Bellaachia, CSCI 1121. 1. Objective: To be able to list, describe, and use the C basic data types. To be able to create and use variables and constants. To be able to use simple input and output statements. Learn about type conversion. 2. Data Types: A type is defined by the following: a set of values and a set of operations. C offers three basic data types: integers, defined with the keyword int; characters, defined with the keyword char; and real or floating-point numbers, defined with the keywords -

Calculus Terminology

AP Calculus BC Calculus Terminology Absolute Convergence Asymptote Continued Sum Absolute Maximum Average Rate of Change Continuous Function Absolute Minimum Average Value of a Function Continuously Differentiable Function Absolutely Convergent Axis of Rotation Converge Acceleration Boundary Value Problem Converge Absolutely Alternating Series Bounded Function Converge Conditionally Alternating Series Remainder Bounded Sequence Convergence Tests Alternating Series Test Bounds of Integration Convergent Sequence Analytic Methods Calculus Convergent Series Annulus Cartesian Form Critical Number Antiderivative of a Function Cavalieri’s Principle Critical Point Approximation by Differentials Center of Mass Formula Critical Value Arc Length of a Curve Centroid Curly d Area below a Curve Chain Rule Curve Area between Curves Comparison Test Curve Sketching Area of an Ellipse Concave Cusp Area of a Parabolic Segment Concave Down Cylindrical Shell Method Area under a Curve Concave Up Decreasing Function Area Using Parametric Equations Conditional Convergence Definite Integral Area Using Polar Coordinates Constant Term Definite Integral Rules Degenerate Divergent Series Function Operations Del Operator e Fundamental Theorem of Calculus Deleted Neighborhood Ellipsoid GLB Derivative End Behavior Global Maximum Derivative of a Power Series Essential Discontinuity Global Minimum Derivative Rules Explicit Differentiation Golden Spiral Difference Quotient Explicit Function Graphic Methods Differentiable Exponential Decay Greatest Lower Bound Differential -

Parsing Arithmetic Expressions Outline

Parsing Arithmetic Expressions https://courses.missouristate.edu/anthonyclark/333/ Outline Topics and Learning Objectives • Learn about parsing arithmetic expressions • Learn how to handle associativity with a grammar • Learn how to handle precedence with a grammar Assessments • ANTLR grammar for math Parsing Expressions There are a variety of special-purpose algorithms to make this task more efficient: • The shunting yard algorithm • Precedence climbing • Pratt parsing (https://eli.thegreenplace.net/2010/01/02/top-down-operator-precedence-parsing) For this class we are just going to use recursive descent • Simpler • Same as the rest of our parser Grammar for Expressions Needs to account for operator associativity • Also known as fixity • Determines how you apply operators of the same precedence • Operators can be left-associative or right-associative Needs to account for operator precedence • Precedence is a concept that you know from mathematics • Think PEMDAS • Apply higher precedence operators first Associativity By convention 7 + 3 + 1 is equivalent to (7 + 3) + 1, 7 - 3 - 1 is equivalent to (7 - 3) - 1, and 12 / 3 * 4 is equivalent to (12 / 3) * 4 • If we treated 7 - 3 - 1 as 7 - (3 - 1) the result would be 5 instead of 3. • Another way to state this convention is associativity Associativity Addition, subtraction, multiplication, and division are left-associative - What does this mean? You have: • operators (+, -, *, /, etc.) and • operands (numbers, ids, etc.) [Figure: expression tree for 1 - 2 - 3] • Left-associativity: if an operand has operators -
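
The left-associativity convention described in the excerpt above can be checked directly. The following is a minimal Python sketch, not part of the original slides; the helper name `groupings` is hypothetical and exists only for illustration.

```python
def groupings(a, b, c):
    """Evaluate a - b - c three ways: as written, grouped left, grouped right."""
    as_written = a - b - c        # Python parses this left-associatively
    grouped_left = (a - b) - c    # explicit left grouping
    grouped_right = a - (b - c)   # explicit right grouping, usually a different value
    return as_written, grouped_left, grouped_right


as_written, grouped_left, grouped_right = groupings(7, 3, 1)
print(as_written, grouped_left, grouped_right)  # prints: 3 3 5
```

The unparenthesized form agrees with the left grouping, which is what a left-associative grammar rule has to reproduce.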

Arithmetic Expression Construction

Arithmetic Expression Construction. Leo Alcock (Harvard University, Cambridge, MA, USA), Sualeh Asif (MIT, Cambridge, MA, USA), Jeffrey Bosboom (MIT CSAIL, Cambridge, MA, USA), Josh Brunner (MIT CSAIL, Cambridge, MA, USA), Charlotte Chen (MIT, Cambridge, MA, USA), Erik D. Demaine (MIT CSAIL, Cambridge, MA, USA), Rogers Epstein (MIT CSAIL, Cambridge, MA, USA), Adam Hesterberg (Harvard University, Cambridge, MA, USA), Lior Hirschfeld (MIT, Cambridge, MA, USA), William Hu (MIT, Cambridge, MA, USA), Jayson Lynch (MIT CSAIL, Cambridge, MA, USA), Sarah Scheffler (Boston University, Boston, MA, USA), Lillian Zhang (MIT, Cambridge, MA, USA). Abstract: When can n given numbers be combined using arithmetic operators from a given subset of {+, −, ×, ÷} to obtain a given target number? We study three variations of this problem of Arithmetic Expression Construction: when the expression (1) is unconstrained; (2) has a specified pattern of parentheses and operators (and only the numbers need to be assigned to blanks); or (3) must match a specified ordering of the numbers (but the operators and parenthesization are free). For each of these variants, and many of the subsets of {+, −, ×, ÷}, we prove the problem NP-complete, sometimes in the weak sense and sometimes in the strong sense. Most of these proofs make use of a rational function framework which proves equivalence of these problems for values in rational functions with values in positive integers. 2012 ACM Subject Classification: Theory of computation → Problems, reductions and completeness. Keywords and phrases: Hardness, algebraic complexity, expression trees. Digital Object Identifier: 10.4230/LIPIcs.ISAAC.2020.41. Related Version: A full version of the paper is available on arXiv. -

1 Logic, Language and Meaning 2 Syntax and Semantics of Predicate

Semantics and Pragmatics 2 Winter 2011 University of Chicago Handout 1 1 Logic, language and meaning • A formal system is a set of primitives, some statements about the primitives (axioms), and some method of deriving further statements about the primitives from the axioms. • Predicate logic (calculus) is a formal system consisting of: 1. A syntax defining the expressions of a language. 2. A set of axioms (formulae of the language assumed to be true) 3. A set of rules of inference for deriving further formulas from the axioms. • We use this formal system as a tool for analyzing relevant aspects of the meanings of natural languages. • A formal system is a syntactic object, a set of expressions and rules of combination and derivation. However, we can talk about the relation between this system and the models that can be used to interpret it, i.e. to assign extra-linguistic entities as the meanings of expressions. • Logic is useful when it is possible to translate natural languages into a logical language, thereby learning about the properties of natural language meaning from the properties of the things that can act as meanings for a logical language. 2 Syntax and semantics of Predicate Logic 2.1 The vocabulary of Predicate Logic 1. Individual constants: {d, n, j, ...} 2. Individual variables: {x, y, z, ...} The individual variables and constants are the terms. 3. Predicate constants: {P, Q, R, ...} Each predicate has a fixed and finite number of arguments called its arity or valence. As we will see, this corresponds closely to argument positions for natural language predicates. -

Common Errors in Algebraic Expressions: a Quantitative-Qualitative Analysis

International Journal on Social and Education Sciences Volume 1, Issue 2, 2019 ISSN: 2688-7061 (Online) Common Errors in Algebraic Expressions: A Quantitative-Qualitative Analysis Eliseo P. Marpa Philippine Normal University Visayas, Philippines, [email protected] Abstract: Majority of the students regarded algebra as one of the difficult areas in mathematics. They even find difficulties in algebraic expressions. Thus, this investigation was conducted to identify common errors in algebraic expressions of the preservice teachers. A descriptive method of research was used to address the problems. The data were gathered using the developed test administered to the 79 preservice teachers. Statistical tools such as frequency, percent, and mean percentage error were utilized to address the present problems. Results show that performance in algebraic expressions of the preservice teachers is average. However, performance in classifying polynomials and translating mathematical phrases into symbols was very low and low, respectively. Furthermore, the study indicates that preservice teachers were unable to classify polynomials in parenthesis. Likewise, they are confused of the signs in adding and subtracting polynomials. Results disclosed that preservice teachers have difficulties in classifying polynomials according to the degree and polynomials in parenthesis. They also have difficulties in translating mathematical phrases into symbols. Thus, mathematics teachers should strengthen instruction on these topics. Mathematics teachers handling the subject are also encouraged to develop learning exercises that will develop the mastery level of the students. Keywords: Algebraic expressions, Analysis of common errors, Education students, Performance Introduction One of the main objectives of teaching mathematics is to prepare students for practical life. 
However, students sometimes find mathematics, especially algebra, impractical because they cannot find any reason or justification for using the x's and the y's in everyday life. -

3.2 Writing Expressions and Equations

Name:_______________________________ 3.2 Writing Expressions and Equations To translate statements into expressions and equations: 1) Identify __KEY__ __WORDS____ that indicate the operation. 2) Write the numbers/variables in the correct order. The SUM of _____5_______ and ______8______: _____5 + 8_____ The DIFFERENCE of ___n_____ and ____3______ : _____n – 3______ The PRODUCT of ____4______ and ____b – 1____: __4(b – 1)___ The QUOTIENT of ___4_____ and ____ b – 1_____: 4 ÷ (b – 1) or Write each verbal phrase as an algebraic expression. 1. the sum of 8 and t 8 + t 2. the quotient of g and 15 3. the product of 5 and b 5b 4. the difference of 32 and x 32 – x SWITCH THE LESS THAN the first number subtracted ORDER OF from the second! THE TERMS! MORE THAN 5. Eight more than x _x +8_ 6. Six less than p __p – 6___ Write the phrase five dollars less than Jennifer earned as an algebraic expression. Key Words five dollars less than Jennifer earned Variable Let d represent # of $ Jennifer earned Expression d – 5 6. 14 less than f f – 14 7. p more than 10 10 + p 8. 3 more runs than Pirates scored P + 3 9. 12 less than some number n – 12 10. Arthur is 8 years younger than Tanya 11. Kelly’s test score is 6 points higher than Mike’s IS, equals, is equal to Substitute with equal sign. 7. 5 more than a number is 6. 8. The product of 7 and b is equal to 63. n + 5 = 6 7b = 63 9. The sum of r and 45 is 79. -

Formal Languages and Systems

FORMAL LANGUAGES AND SYSTEMS Authors: Heinrich Herre, Universität Leipzig, Institut für Informatik, Germany; Peter Schroeder-Heister, Universität Tübingen, Wilhelm-Schickard-Institut für Informatik, email: [email protected] Draft (1995) of a paper which appeared in 1998 in the Routledge Encyclopedia of Philosophy. Herre & Schroeder-Heister “Formal Languages and Systems” p. 1 Keywords: Consequence relation, Deductive system, Formal grammar, Formal language, Formal system, Gentzen-style system, Hilbert-style system, Inference operation, Logical calculus, Rule-based system, Substructural logics. Formal languages and systems are concerned with symbolic structures considered under the aspect of generation by formal (syntactic) rules, i.e. irrespective of their or their components’ meaning(s). In the most general sense, a formal language is a set of expressions (1). The most important way of describing this set is by means of grammars (2). Formal systems are formal languages equipped with a consequence operation yielding a deductive system (3). If one further specifies the means by which expressions are built up (connectives, quantifiers) and the rules from which inferences are generated, one obtains logical calculi (4) of various sorts, especially Hilbert-style and Gentzen-style systems. Contents: 1 Expressions; 2 Grammars; 3 Deductive systems; 4 Logical calculi. 1 Expressions: A formal language is a set L of expressions. Expressions are built up from a finite set Σ of atomic symbols or atoms (the alphabet of the language) by means of certain constructors. Normally one confines oneself to linear association (concatenation) ◦ as the only constructor, yielding strings of atoms, also called words (over Σ), starting with the empty word . -

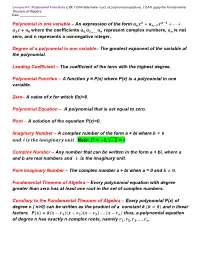

Lesson 4-1 Polynomials

Lesson 4-1: Polynomial Functions L.O: I CAN determine roots of polynomial equations. I CAN apply the Fundamental Theorem of Algebra. Date: ________________ Polynomial in one variable – An expression of the form $a_n x^n + a_{n-1} x^{n-1} + \cdots + a_1 x + a_0$ where the coefficients $a_0, a_1, \ldots, a_n$ represent complex numbers, $a_n$ is not zero, and n represents a nonnegative integer. Degree of a polynomial in one variable – The greatest exponent of the variable of the polynomial. Leading Coefficient – The coefficient of the term with the highest degree. Polynomial Function – A function y = P(x) where P(x) is a polynomial in one variable. Zero – A value of x for which f(x) = 0. Polynomial Equation – A polynomial that is set equal to zero. Root – A solution of the equation P(x) = 0. Imaginary Number – A complex number of the form a + bi where $b \neq 0$ and $i$ is the imaginary unit. Note: $i^2 = -1$, $\sqrt{-1} = i$. Complex Number – Any number that can be written in the form a + bi, where a and b are real numbers and $i$ is the imaginary unit. Pure imaginary Number – The complex number a + bi when a = 0 and $b \neq 0$. Fundamental Theorem of Algebra – Every polynomial equation with degree greater than zero has at least one root in the set of complex numbers. Corollary to the Fundamental Theorem of Algebra – Every polynomial P(x) of degree n (n > 0) can be written as the product of a constant k ($k \neq 0$) and n linear factors. $P(x) = k(x - r_1)(x - r_2)(x - r_3) \cdots$ -

Arithmetic Expressions

Arithmetic Expressions. In Text: Chapter 7. Outline: Precedence; Associativity; Evaluation order/side effects; Conditional expressions; Type conversions and coercions; Assignment. Chapter 7: Arithmetic Expressions. Arithmetic Expressions: Arithmetic expressions consist of operators, operands, parentheses, and function calls. Design issues for arithmetic expressions: What are the operator precedence rules? What are the operator associativity rules? What is the order of operand evaluation? Are there restrictions on operand evaluation side effects? Does the language allow user-defined operator overloading? What mode mixing is allowed in expressions? Operators: A unary operator has one operand. A binary operator has two operands. A ternary operator has three operands. Functions can be viewed as unary operators with an operand of a simple list. Argument lists (and parameter lists) treat separators (comma, space) as “stacking” or “append” operators. A keyword in a language statement can be viewed as a function in which the remainder of the statement is the operand. Operator precedence and operator associativity are important considerations. Operator Precedence: The operator precedence rules for expression evaluation define the order in which “adjacent” operators of different precedence levels are evaluated. From highest to lowest: Parenthetical groups (...); Exponentiation **; Mult & Div *, /; Add & Sub +, -; Assignment :=. A * B + C ** D / E - F <=> ((A * B) + ((C ** D) / E) - F) QUESTIONS: (1) -
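
The precedence example in the excerpt above can be checked in any language whose operators follow the same ordering. The Python sketch below is only an illustration, not part of the original slides: Python happens to rank `**` above `*` and `/`, and those above `+` and `-`, matching the table in the excerpt. The function names `implicit` and `explicit` are hypothetical.

```python
def implicit(a, b, c, d, e, f):
    """Rely on the language's precedence rules, with no parentheses."""
    return a * b + c ** d / e - f


def explicit(a, b, c, d, e, f):
    """Spell out the grouping that the precedence rules imply."""
    return ((a * b) + ((c ** d) / e)) - f


args = (2, 3, 4, 2, 8, 1)
print(implicit(*args), explicit(*args))  # prints: 7.0 7.0
```

Both functions return the same value because the parentheses in `explicit` only make visible the grouping that precedence and left-associativity already impose.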

Common Core State Standards for MATHEMATICS

Common Core State Standards for Mathematics. Table of Contents: Introduction; Standards for Mathematical Practice; Standards for Mathematical Content: Kindergarten; Grade 1; Grade 2; Grade 3; Grade 4; Grade 5; Grade 6; Grade 7; Grade 8; High School: Introduction; High School: Number and Quantity; High School: Algebra; High School: Functions; High School: Modeling; High School: Geometry; High School: Statistics and Probability; Glossary; Sample of Works Consulted. Introduction: Toward greater focus and coherence. Mathematics experiences in early childhood settings should concentrate on (1) number (which includes whole number, operations, and relations) and (2) geometry, spatial relations, and measurement, with more mathematics learning time devoted to number than to other topics. Mathematical process goals should be integrated in these content areas. (Mathematics Learning in Early Childhood, National Research Council, 2009) The composite standards [of Hong Kong, Korea and Singapore] have a number of features that can inform an international benchmarking process for the development of K–6 mathematics standards in the U.S. First, the composite standards concentrate the early learning of mathematics on the number, measurement, and geometry strands with less emphasis on data analysis and little exposure to algebra. The Hong Kong standards for grades 1–3 devote approximately half the targeted time to numbers and almost all the time remaining to geometry and measurement. (Ginsburg, Leinwand and Decker, 2009) Because the mathematics concepts in [U.S.] textbooks are often weak, the presentation becomes more mechanical than is ideal. -

A Guide to Writing Mathematics

A Guide to Writing Mathematics Dr. Kevin P. Lee Introduction This is a math class! Why are we writing? There is a good chance that you have never written a paper in a math class before. So you might be wondering why writing is required in your math class now. The Greek word mathemas, from which we derive the word mathematics, embodies the notions of knowledge, cognition, understanding, and perception. In the end, mathematics is about ideas. In math classes at the university level, the ideas and concepts encountered are more complex and sophisticated. The mathematics learned in college will include concepts which cannot be expressed using just equations and formulas. Putting mathemas on paper will require writing sentences and paragraphs in addition to the equations and formulas. Mathematicians actually spend a great deal of time writing. If a mathematician wants to contribute to the greater body of mathematical knowledge, she must be able to communicate her ideas in a way which is comprehensible to others. Thus, being able to write clearly is as important a mathematical skill as being able to solve equations. Mastering the ability to write clear mathematical explanations is important for non-mathematicians as well. As you continue taking math courses in college, you will come to know more mathematics than most other people. When you use your mathematical knowledge in the future, you may be required to explain your thinking process to another person (like your boss, a co-worker, or an elected official), and it will be quite likely that this other person will know less math than you do.