Decision Trees

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Washburn University Table of Contents

Washburn University Media Information .................................. 3 Rebounding Leaders ............................. 72 Quick Facts 2006-07 Schedule ................................... 4 Field Goal Leaders ................................ 73 Name of School ...............Washburn University 3-Point Leaders ..................................... 74 City/Zip ........................... Topeka, Kan. 66621 2006-07 Season Outlook ................ 5-12 Free Throw Leaders .............................. 75 Founded ....................................................1865 Numerical Roster .................................... 6 Assist Leaders ....................................... 76 Enrollment ...............................................7,002 Roster Breakdowns ................................. 7 Steal Leaders ......................................... 77 Nickname ........................................Lady Blues Team Photos ........................................... 8 Block Leaders ........................................ 78 School Colors .................. Yale Blue and White Photo Roster ........................................... 9 Miscellaneous Individual Leaders ........ 79 Home Court/Capacity .........Lee Arena / 3,902 Season Outlook ................................ 10-11 Team Records ........................................ 79 Affiliation ........................... NCAA Division II Player Personal Profiles ......................... 12 Team Game Scoring Records ............... 80 Conference ..........Mid-America Intercollegiate -

NBA Draft Lottery Probabilities

AMERICAN JOURNAL OF UNDERGRADUATE RESEARCH VOL. 2, NO. 3 (2003) NBA Draft Lottery Probabilities Chad R. Florke and Mark D. Ecker Department of Mathematics University of Northern Iowa Cedar Falls, Iowa 50614-0506 USA Received: November 6, 2003 Accepted: December 1, 2003 ABSTRACT We examine the evolution of the National Basketball Association’s (NBA’s) Draft Lottery by showing how the changes implemented by the Board of Governors have impacted the probabilities of obtaining top picks in the ensuing draft lottery. We explore how these changes have impacted the team with the worst record and also investigate the conditional probabilities of the fourth worst team receiving the third pick. These calculations are conditioned upon two specified teams receiving the first two selections. We show that the probability of the fourth worst team receiving the third pick can be made unconditionally. We calculate the probabilities for the fourth worst team to move up in the draft to receive either the first, second, or third selections, along with its chance of keeping the fourth pick or even dropping in the draft. We find there is a higher chance for the fourth worst team to drop to the fifth, sixth or seventh position than to stay at the fourth position or move up. I. INTRODUCTION the record of finish of the teams, i.e., the number of games won for each team (which a. National Basketball Association (NBA) may also include playoff wins). For example Draft Lottery History and Evolution in football, the team with the worst record (fewest number of wins during the season) In all major professional team will draft first in each round while the best sports, recruiting amateur players from team (the Super Bowl winner) will draft last college, high school, or overseas is vital to in each round. -

Recruiting Forces Are Influencing Basketball Prospects Earlier Than Ever

Eagles suspend Terrell Owens indefinitely. Page 3C C SUNDAY SPORTS Novembe r 6,2005 COLLEGE FOOTBALL 2C-4C • MOTOR SPORTS 10C • GOLF 11C www.fayettevillenc.com/spor ts Staff photo illustration by David SmitH By Dan Wiederer Staff writer As Dominique Sutton catches the ball in transition, his skills sparkle like a new bride’s smile. A crossover dribble and quick spin allow him to complete an effortless left- handed layup. He smirks, enjoying the simplicity of it all. Unlike many of the 252 players attending the Bob First of a FROM Gibbons Evaluation Clinic in Winston-Salem, Sutton plays tHree-par t carefree. He feels no urgency to impress scouts, no series. immediate need to prove he is the best player in camp. After all, Sutton’s college plans have been set for some time. Even though the 6-foot-5 forward still had yet to play a game in his junior season at The Patterson School, a prep school northwest of Charlotte, he made a verbal INSIDE commitment to play for Wake Forest the summer after his % Fame and fortune are freshman year. powerful draws tHat lure THE more and more college “I just wanted to get it done,” Sutton said. “I fell in love with Wake the first time I came to visit and just said, stars to the pros, ‘Yeah, this is the place.’ ” % The NCAA clamps down Such is the trend these days where heightening exposure on recruiting gimmicks at an early age has high-profile prospects making their tHat cater to players’ egos, college commitments earlier than ever. -

Nba Legacy -- Dana Spread

2019-202018-19 • HISTORY NBA LEGACY -- DANA SPREAD 144 2019-20 • HISTORY THIS IS CAROLINA BASKETBALL 145 2019-20 • HISTORY NBA PIPELINE --- DANA SPREAD 146 2019-20 • HISTORY TAR HEELS IN THE NBA DRAFT 147 2019-20 • HISTORY BARNES 148 2019-20 • HISTORY BRADLEY 149 2019-20 • HISTORY BULLOCK 150 2019-20 • HISTORY VC 151 2019-20 • HISTORY ED DAVIS 152 2019-20 • HISTORY ellington 153 2019-20 • HISTORY FELTON 154 2019-20 • HISTORY DG 155 2019-20 • HISTORY henson (hicks?) 156 2019-20 • HISTORY JJACKSON 157 2019-20 • HISTORY CAM JOHNSON 158 2019-20 • HISTORY NASSIR 159 2019-20 • HISTORY THEO 160 2019-20 • HISTORY COBY WHITE 161 2019-20 • HISTORY MARVIN WILLIAMS 162 2019-20 • HISTORY ALL-TIME PRO ROSTER TAR HEELS WITH NBA CHAMPIONSHIP RINGS Name Affiliation Season Team Billy Cunningham (1) Player 1966-67 Philadelphia 76ers Charles Scott (1) Player 1975-76 Boston Celtics Mitch Kupchak Player 1977-78 Washington Bullets Tommy LaGarde (1) Player 1978-79 Seattle SuperSonics Mitch Kupchak Player 1981-82 Los Angeles Lakers Bob McAdoo Player 1981-82 Los Angeles Lakers Bobby Jones (1) Player 1982-83 Philadelphia 76ers Mitch Kupchak (3) Player 1984-85 Los Angeles Lakers Bob McAdoo (2) Player 1984-85 Los Angeles Lakers James Worthy Player 1984-85 Los Angeles Lakers James Worthy Player 1986-87 Los Angeles Lakers James Worthy (3) Player 1987-88 Los Angeles Lakers Michael Jordan Player 1990-91 Chicago Bulls Scott Williams Player 1990-91 Chicago Bulls Michael Jordan Player 1991-92 Chicago Bulls Scott Williams Player 1991-92 Chicago Bulls Michael Jordan Player -

Vince Carter Wins 2019-20 NBA Sportsmanship Award __10-1-20

VINCE CARTER WINS 2019-20 NBA SPORTSMANSHIP AWARD NEW YORK, Oct. 1, 2020 – NBA players have selected Vince Carter as the recipient of the 2019-20 NBA Sportsmanship Award, the NBA announced today. Carter, who spent the 2019-20 season with the Atlanta Hawks, announced his retirement from the NBA in June after playing a league-record 22 seasons. He receives the Joe Dumars Trophy as the winner of the NBA Sportsmanship Award. Dumars, a two-time NBA champion and Naismith Memorial Basketball Hall of Famer, played 14 NBA seasons and won the inaugural Sportsmanship Award in the 1995-96 season. Each NBA team nominated one of its players for the NBA Sportsmanship Award, which is designed to honor a player who best represents the ideals of sportsmanship on the court. From the list of 30 team nominees, a panel of league executives selected one finalist from each of the NBA’s six divisions. Current NBA players selected the winner from the list of six finalists, with more than 250 players submitting their votes through confidential balloting conducted by the league office. In addition to Carter (Southeast Division), the other finalists were Oklahoma City Thunder center Steven Adams (Northwest), Sacramento Kings forward Harrison Barnes (Pacific), Detroit Pistons guard Langston Galloway (Central), Memphis Grizzlies guard Tyus Jones (Southwest) and Brooklyn Nets guard Garrett Temple (Atlantic). Carter received 143 of 266 first-place votes and 2,520 total points in balloting of NBA players. Temple (1,746 points) and Adams (1,632 points) finished in second and third place, respectively. The six finalists were awarded 11 points for each first-place vote, nine points for each second-place vote, seven points for each third-place vote, five points for each fourth-place vote, three points for each fifth- place vote and one point for each sixth-place vote. -

The NBA Scores Big with Kids New He

More Girls Ages 10-16 Join Fitness Challenge (NAPSA)—To encourage young Tips For Cold Weather Skin Care women across the country to be (NAPSA)—Turning the other The NBA Scores Big With Kids more physically active, a program cheek to cold weather may not developed by the United States keep it from damaging your Department of Health and Human skin—but facing up to a few skin Services (HHS), called 4Girls care facts might. Experts offer Health, www.4girls.gov, has formed these tips. a President’s Challenge group. • Close Your Eyes—Sleep plays a major role in maintaining healthy, radiant skin. Doctors say a lack of sleep can slow down the cell renewal process—and that’s when aging can set in. In addi- tion, about 89 percent of women recently surveyed said “getting enough sleep” was an important factor in achieving healthy skin. Cool Skin—It’s important to prac- Try to get at least eight or nine tice a skin care regimen during hours a night. colder months. (NAPSA)—Getting great gifts for • NBA Jox Box—This NBA • Hot Water Hazards—During the kids on your list will be a slam player version of the classic “Jack- the colder months, limit your time vitamins A and C. Vitamin A will dunk—with an assist from the in-the-Box” springs up just in time in the shower or bathtub and keep help with the growth and repair of National Basketball Association. for the holidays with such favorite water at a lukewarm tempera- skin tissue and can be found in The league offers an assortment NBA superstars as LeBron James, ture. -

Rhythmic Basketball Skills Clinic

Rhythmic Basketball Skills Clinic Emily Larson FOR TEAMS AT ALL LEVELS – challenging, fun & energizing… Rhythmic Basketball Skills is a unique, invigorating and fun method to learning and practicing individual basketball skills with an emphasis on footwork, timing and technical expression… Parents have given high praise for Emily Larson with comments like: amazing, unbelievable, the most fantastic session I have ever seen, and more… You have to see it to believe it! Ganon Baker, basketball skills trainer for NBA/WNBA players, teaches these techniques to the pro’s and has personally taught Emily in this amazing approach… Coaches who have witnessed the Rhythmic Skills Session have also made comments like: amazing, best thing I’ve done for my players this season, wow!, and more… Do you want to host a Rhythmic Skills Session for your team? -contact Mark Hogan at [email protected] OR Emily Larson at [email protected] for more information… "Emily Larson has spent hours with me sharpening her coaching skills to teach an Amazing Rhythmic Basketball Skills Session. You will have so much fun and get a great workout with this standout college All-Star & Academic All-Canadian. She will MOVE and INSPIRE you, trust me!" Ganon Baker ____________________________________________________________________________________________________________________________________________________________________________________________ Ganon Baker is a world-renowned basketball trainer for Kobe Bryant, Chris Paul, Vince Carter, Lebron James, KD and more… [email protected] To Book First: email to book at Rhythmic Skills Clinic for your team a Cost: $150 for your team’s practice (60 to 90 minutes) Session Payment: can be made at the session -cash or cheque (cheques made payable to: Courtside Basketball) Ganon Baker with Lebron James “Rhythmic Skills Training is one of the most creative, challenging & fun methods to skill development that you will ever do.” -Ganon Baker “Emily Larson is the best Rhythmic Skills instructors I have seen in Canada. -

FOX Sports Southeast Adds Vince Carter to Hawks Broadcast Team for Select Telecasts

FOX Sports Southeast Adds Vince Carter to Hawks Broadcast Team for Select Telecasts Carter to call games alongside Bob Rathbun, Dominique Wilkins and Kelly Crull ATLANTA (Dec. 28, 2020) –FOX Sports Southeast announced on Monday that eight-time NBA All-Star Vince Carter will join their Atlanta Hawks broadcast team for select marquee telecasts during the 2020-21 regular season. Carter will make his first appearance on Wednesday, Dec. 30 alongside Emmy-winning play-by- play announcer Bob Rathbun, Naismith Memorial Basketball Hall of Famer and analyst Dominique Wilkins, and courtside reporter Kelly Crull. The Hawks will be on the road to take on the Brooklyn Nets at 7 p.m. ET. “I’m excited for the opportunity to join Bob and ‘Nique on Hawks broadcasts this season,” Carter said. “It’s a great opportunity for me to sharpen my skills breaking down the game and providing analysis for a team that I know so well. I’m looking forward to seeing how the new vets mix in with our young guys as they try to take that next step to making the playoffs.” The next game for Carter will be Monday, Jan. 18 at 2 p.m. ET when the Hawks host the Minnesota Timberwolves on Martin Luther King Jr. Day. He will return the following Sunday for the Jan. 24 telecast of the Hawks at the Milwaukee Bucks at 7 p.m. ET. Carter will finish off the First Half of the NBA regular season with back-to-back games in February. He will call the Monday, Feb. -

Memphis Grizzlies 2016 Nba Draft

MEMPHIS GRIZZLIES 2016 NBA DRAFT June 23, 2016 • FedExForum • Memphis, TN Table of Contents 2016 NBA Draft Order ...................................................................................................... 2 2016 Grizzlies Draft Notes ...................................................................................................... 3 Grizzlies Draft History ...................................................................................................... 4 Grizzlies Future Draft Picks / Early Entry Candidate History ...................................................................................................... 5 History of No. 17 Overall Pick / No. 57 Overall Pick ...................................................................................................... 6 2015‐16 Grizzlies Alphabetical and Numerical Roster ...................................................................................................... 7 How The Grizzlies Were Built ...................................................................................................... 8 2015‐16 Grizzlies Transactions ...................................................................................................... 9 2016 NBA Draft Prospect Pronunciation Guide ...................................................................................................... 10 All Time No. 1 Overall NBA Draft Picks ...................................................................................................... 11 No. 1 Draft Picks That Have Won NBA -

Michael Jordan's 'Knee-Dful Things'

The Bronx Journal/March/April 2002 S P O RT S A 1 5 Michael Jordan’s GREG VAN VOORHIS ‘Knee-dful Things’ SPORTS EDITOR The Michael Jordan Chronicles: Month 3 lousy play, but they also must pass one of ryptonite has the other aforementioned teams to land a playoff spot this season. Not to mention that crept its way Miami is only a mere 2 games behind Washington, and is playing its best basket- into the ball of the season. It seems that the Wiz are “Man of Steel,’ closer to a trip to the draft lottery than they are a trip to the playoffs, a once very pos- sending the Wiz-kids sible fete, that now seems quite unlikely. But as I’ve said before, if there’s a Mike, reeling and pushing there’s always a chance, though it will take more than a quick return to the court for MJ them further and to salvage the season for the Wiz. First of all, with only 20 or so games remaining, further away from a and Washington trailing Charlotte and Indiana by just mere percentage points for possible playoff berth. the final spot in the East, every game that It seems that the weight of carrying a team Michael does not play will prove to be huge has proven to be too much for the 39-year for their chances to make it. The burden to old Michael Jordan’s knees to bear, as he succeed will fall mainly on the shoulders of has been placed on the injured list after hav- Richard Hamilton, Wa s h i n g t o n ’s most ing arthroscopic knee surgery to repair torn skilled player this season. -

MA#12Jumpingconclusions Old Coding

Mathematics Assessment Activity #12: Mathematics Assessed: · Ability to support or refute a claim; Jumping to Conclusions · Understanding of mean, median, mode, and range; · Calculation of mean, The ten highest National Basketball League median, mode and salaries are found in the table below. Numbers range; like these lead us to believe that all professional · Problem solving; and basketball players make millions of dollars · Communication every year. While all NBA players make a lot, they do not all earn millions of dollars every year. NBA top 10 salaries for 1999-2000 No. Player Team Salary 1. Shaquille O'Neal L.A. Lakers $17.1 million 2. Kevin Garnett Minnesota Timberwolves $16.6 million 3. Alonzo Mourning Miami Heat $15.1 million 4. Juwan Howard Washington Wizards $15.0 million 5. Patrick Ewing New York Knicks $15.0 million 6. Scottie Pippen Portland Trail Blazers $14.8 million 7. Hakeem Olajuwon Houston Rockets $14.3 million 8. Karl Malone Utah Jazz $14.0 million 9. David Robinson San Antonio Spurs $13.0 million 10. Jayson Williams New Jersey Nets $12.4 million As a matter of fact according to data from USA Today (12/8/00) and compiled on the website “Patricia’s Basketball Stuff” http://www.nationwide.net/~patricia/ the following more accurately reflects the salaries across professional basketball players in the NBA. 1 © 2003 Wyoming Body of Evidence Activities Consortium and the Wyoming Department of Education. Wyoming Distribution Ready August 2003 Salaries of NBA Basketball Players - 2000 Number of Players Salaries 2 $19 to 20 million 0 $18 to 19 million 0 $17 to 18 million 3 $16 to 17 million 1 $15 to 16 million 3 $14 to 15 million 2 $13 to 14 million 4 $12 to 13 million 5 $11 to 12 million 15 $10 to 11 million 9 $9 to 10 million 11 $8 to 9 million 8 $7 to 8 million 8 $6 to 7 million 25 $5 to 6 million 23 $4 to 5 million 41 3 to 4 million 92 $2 to 3 million 82 $1 to 2 million 130 less than $1 million 464 Total According to this source the average salaries for the 464 NBA players in 2000 was $3,241,895. -

Table of Contents

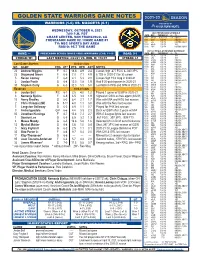

GOLDEN STATE WARRIORS GAME NOTES WARRIORS (1-0) VS. NUGGETS (0-1) WEDNESDAY, OCTOBER 6, 2021 7:00 P.M. PDT 2021 PRESEASON SCHEDULE DATE OPP TIME/RESULT +/- TV CHASE CENTER, SAN FRANCISCO, CA 10/4 at POR W, 121-107 +14 NBA TV PRESEASON GAME #2 / HOME GAME #1 10/6 DEN 7:00 PM NBCSBA 10/8 LAL 7:00 PM NBCSBA, NBA TV TV: NBC SPORTS BAY AREA 10/12 at LAL 7:30 PM TNT RADIO: 95.7 THE GAME 10/15 POR 7:00 PM NBCSBA, NBA TV 2021-22 REGULAR SEASON SCHEDULE HOME: --- PRESEASON SERIES (SINCE 1980): WARRIORS LEAD, 11-10 ROAD: 0-1 DATE OPP TIME/RESULT +/- TV 10/19 at LAL 7:00 PM TNT STREAK: W1 LAST MEETING: 4/23/21 VS. DEN, W, 118-97 STREAK: L1 10/21 LAC 7:00 PM TNT 10/24 at SAC 6:00 PM NBCSBA 10/26 at OKC 5:00 PM NBCSBA Last Game Starters 2020-21 Stats 10/28 MEM 7:00 PM NBCSBA 10/30 OKC 5:30 PM NBCSBA NO. NAME POS HT^ PTS REB AST NOTES 11/3 CHA 7:00 PM NBCSBA, ESPN 22 Andrew Wiggins F 6-7 18.6 4.9 2.4 Career-high .477 FG% & .380 3P% 11/5 NOP 7:00 PM NBCSBA, ESPN 11/7 HOU 5:30 PM NBCSBA 23 Draymond Green F 6-6 7.0 7.1 8.9 6 TD3 in 2020-21 for 30 career 11/8 ATL 7:00 PM NBCSBA 5 Kevon Looney F 6-9 4.1 5.3 2.0 Career-high 19.0 mpg in 2020-21 11/10 MIN 7:00 PM NBCSBA 11/12 CHI 7:00 PM NBCSBA, ESPN 3 Jordan Poole G 6-4 12.0 1.8 1.9 Had 9 20-point games in 2020-21 11/14 at CHA 4:00 PM NBCSBA 30 Stephen Curry G 6-3 32.0 5.5 5.8 Led NBA in PPG and 3PM in 2020-21 11/16 at BKN 4:30 PM TNT 11/18 at CLE 4:30 PM NBCSBA Reserves 2020-21 Stats 11/19 at DET 4:00 PM NBCSBA 11/21 TOR 5:30 PM NBCSBA 6 Jordan Bell F/C 6-7 2.5 4.0 1.2 Played 1 game w/ GSW in 2020-21 11/24