Competitive Programmer's Handbook

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Parallel Prefix Sum (Scan) with CUDA

Parallel Prefix Sum (Scan) with CUDA. Mark Harris, April 2007. Document Change History: February 14, 2007, Mark Harris, initial release. Abstract: Parallel prefix sum, also known as parallel Scan, is a useful building block for many parallel algorithms including sorting and building data structures. In this document we introduce Scan and describe step-by-step how it can be implemented efficiently in NVIDIA CUDA. We start with a basic naïve algorithm and proceed through more advanced techniques to obtain best performance. We then explain how to scan arrays of arbitrary size that cannot be processed with a single block of threads. Table of Contents: Abstract; Table of Contents; Introduction (Inclusive and Exclusive Scan; Sequential Scan); A Naïve Parallel Scan; A Work-Efficient -

CC Geometry H

CC Geometry H. Aim #8: How do we find perimeter and area of polygons in the Cartesian Plane? Do Now: Find the area of the triangle below using decomposition and then using the shoelace formula (also known as Green's theorem). 1) Given rectangle ABCD, a. Identify the vertices. b. Find the exact perimeter. c. Find the area using the area formula. d. List the vertices starting with A moving counterclockwise and apply the shoelace formula. Does the method work for quadrilaterals? 2) Calculate the area using the shoelace formula and then find the perimeter (nearest hundredth). 3) Find the perimeter (to the nearest hundredth) and the area of the quadrilateral with vertices A(-3,4), B(4,6), C(2,-3), and D(-4,-4). 4) A textbook has a picture of a triangle with vertices (3,6) and (5,2). Something happened in printing the book and the coordinates of the third vertex are listed as (-1, ). The answers in the back of the book give the area of the triangle as 6 square units. What is the y-coordinate of this missing vertex? 5) Find the area of the pentagon with vertices A(5,8), B(4,-3), C(-1,-2), D(-2,4), and E(2,6). 6) Show that the shoelace formula used on the trapezoid shown, with vertices $A(0,0)$, $B(x_1,0)$, $C(x_2,y)$, and $D(x_3,y)$, confirms the traditional formula for the area of a trapezoid: $\frac{1}{2}(b_1 + b_2)h$. 7) Find the area and perimeter (exact and nearest hundredth) of the hexagon shown. -

Lesson 11: Perimeters and Areas of Polygonal Regions Defined by Systems of Inequalities

NYS COMMON CORE MATHEMATICS CURRICULUM, Lesson 11, M4, GEOMETRY. Lesson 11: Perimeters and Areas of Polygonal Regions Defined by Systems of Inequalities. Student Outcomes: Students find the perimeter of a triangle or quadrilateral in the coordinate plane given a description by inequalities. Students find the area of a triangle or quadrilateral in the coordinate plane given a description by inequalities by employing Green’s theorem. Lesson Notes: In previous lessons, students found the area of polygons in the plane using the “shoelace” method. In this lesson, we give a name to this method: Green’s theorem. Students will draw polygons described by a system of inequalities, find the perimeter of the polygon, and use Green’s theorem to find the area. Classwork. Opening Exercises (5 minutes): The opening exercises are designed to review key concepts of graphing inequalities. The teacher should assign them independently and circulate to assess understanding. Opening Exercises: Graph the following: a. b. c. d. (Date: 8/28/14. © 2014 Common Core, Inc. Some rights reserved. commoncore.org. This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.) Example 1 (10 minutes): A parallelogram with base of length and height can be situated in the coordinate plane as shown. Verify that the shoelace formula gives the area of the parallelogram as . What is the area of a parallelogram? Base × height. The distance from the -axis to the top left vertex is some number . -

Lesson 10: Perimeter and Area of Polygonal Regions in the Cartesian Plane

NYS COMMON CORE MATHEMATICS CURRICULUM, Lesson 10, M4, GEOMETRY. Lesson 10: Perimeter and Area of Polygonal Regions in the Cartesian Plane. Student Outcomes: Students find the perimeter of a quadrilateral in the coordinate plane given its vertices and edges. Students find the area of a quadrilateral in the coordinate plane given its vertices and edges by employing Green’s theorem. Classwork. Opening Exercise (5 minutes): The Opening Exercise allows students to practice the shoelace method of finding the area of a triangle in preparation for today’s lesson. Have students complete the exercise individually, and then compare their work with a neighbor’s. Pull the class back together for a final check and discussion. If students are struggling with the shoelace method, they can use decomposition. Scaffolding: Give students time to struggle with these questions. Add more questions as necessary to scaffold for struggling students. Consider providing students with pre-graphed quadrilaterals with axes on grid lines. Go from concrete to abstract by starting with finding the area by decomposition, then translating one vertex to the origin, and then using the shoelace formula. Post the shoelace diagram and formula from Lesson 9. Opening Exercise: Find the area of the triangle given. Compare your answer and method to your neighbor’s, and discuss differences. Decomposition: Area of Rectangle: 6 units ⋅ 3 units = 18 square units. Area of Left Rectangle: 7.5 square units. Area of Bottom Right Triangle: 1 square unit. Shoelace Formula: Coordinates: A(−3, 2), B(2, −1), C(3, 1). Area Calculation: $\frac{1}{2}\big((-3)\cdot(-1) + 2\cdot 1 + 3\cdot 2 - 2\cdot 2 - (-1)\cdot 3 - 1\cdot(-3)\big)$. Area: 6. -

Magic: Discover 6 in 52 Cards Knowing Their Colors Based on the De Bruijn Sequence

Magic: discover 6 in 52 cards knowing their colors based on the De Bruijn sequence. Siang Wun Song, Institute of Mathematics and Statistics, University of São Paulo. Abstract: We present a magic trick in which a deck of 52 cards is cut any number of times and six cards are dealt. By only knowing their colors, all six cards are revealed. It is based on the De Bruijn sequence. The version of telling 5 cards from an incomplete 32-card deck is well known. We adapted it to the 52-card version, by obtaining a 52-bit cyclic sequence, with certain properties, from a 64-bit De Bruijn sequence. To our knowledge, this result is not known in the literature. The rest of the paper presents the De Bruijn sequence and why the magic works. 1 Introduction: We present the following magic. The magician takes a complete deck of 52 cards. After cutting the deck of cards several times, 6 participants are chosen and a card is dealt to each one. The magician asks who holds a red card. With the aid of a slide presentation file, the magician inputs the 6 colors into the slide file that reveals the 6 cards dealt. The reader can go directly to section 2 to practice the magic trick and then go back to the rest of the paper to understand why it works. The magic is based on the De Bruijn sequence [4, 12]. The magic of discovering 5 cards in an incomplete deck of 32 cards, which we will call Magic Version 32, is well known [2, 7]. -

Generic Implementation of Parallel Prefix Sums and Their

GENERIC IMPLEMENTATION OF PARALLEL PREFIX SUMS AND THEIR APPLICATIONS. A Thesis by TAO HUANG. Submitted to the Office of Graduate Studies of Texas A&M University in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE. Approved by: Chair of Committee, Lawrence Rauchwerger; Committee Members, Nancy M. Amato, Jennifer L. Welch, Marvin L. Adams; Head of Department, Valerie E. Taylor. May 2007. Major Subject: Computer Science. ABSTRACT: Generic Implementation of Parallel Prefix Sums and Their Applications. (May 2007) Tao Huang, B.E.; M.E., University of Electronic Science and Technology of China. Chair of Advisory Committee: Dr. Lawrence Rauchwerger. Parallel prefix sums algorithms are one of the simplest and most useful building blocks for constructing parallel algorithms. A generic implementation is valuable because of the wide range of applications for this method. This thesis presents a generic C++ implementation of parallel prefix sums. The implementation applies two separate parallel prefix sums algorithms: a recursive doubling (RD) algorithm and a binary-tree based (BT) algorithm. This implementation shows how common communication patterns can be separated from the concrete parallel prefix sums algorithms and thus simplify the work of parallel programming. For each algorithm, the implementation uses two different synchronization options: barrier synchronization and point-to-point synchronization. These synchronization options lead to different communication patterns in the algorithms, which are represented by dependency graphs between tasks. -

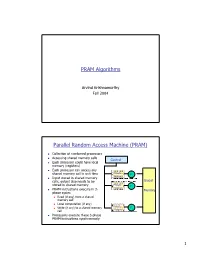

PRAM Algorithms Parallel Random Access Machine

PRAM Algorithms. Arvind Krishnamurthy, Fall 2004. Parallel Random Access Machine (PRAM): A collection of numbered processors accessing shared memory cells. Each processor could have local memory (registers). Each processor can access any shared memory cell in unit time. Input is stored in shared memory cells, and output also needs to be stored in shared memory. PRAM instructions execute in 3-phase cycles: read (if any) from a shared memory cell; local computation (if any); write (if any) to a shared memory cell. Processors execute these 3-phase PRAM instructions synchronously. [Figure: a control unit driving processors P1, P2, ..., Pp, each with private memory, all connected to a global memory.] Shared Memory Access Conflicts. Different variations: Exclusive Read Exclusive Write (EREW) PRAM: no two processors are allowed to read or write the same shared memory cell simultaneously. Concurrent Read Exclusive Write (CREW): simultaneous read allowed, but only one processor can write. Concurrent Read Concurrent Write (CRCW). Concurrent writes: Priority CRCW: processors assigned fixed distinct priorities, highest priority wins. Arbitrary CRCW: one randomly chosen write wins. Common CRCW: all processors are allowed to complete write if and only if all the values to be written are equal. A Basic PRAM Algorithm: Let there be “n” processors and “2n” inputs. PRAM model: EREW. Construct a tournament where values are compared. Processor k is active in step j if (k % 2^j) == 0. At each step: compare two inputs, take max of inputs, write result into shared memory. [Figure: tournament tree with P0 at the root, P0 and P4 below it, then P0, P2, P4, P6.] -
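The tournament described in this excerpt can be sketched as a sequential simulation. This is only an illustration under stated assumptions: the function name `pram_tournament_max` is hypothetical, steps are numbered from 0, processor k is assumed to compare inputs 2k and 2k+1 in step 0 and to combine its own cell with the cell 2^(j-1) positions away in step j, and n is assumed to be a power of two. The excerpt itself only states the activity rule `(k % 2^j) == 0`.

```python
def pram_tournament_max(inputs):
    """Simulate the max tournament with n processors and 2n inputs."""
    n = len(inputs) // 2  # number of simulated processors
    if n < 1 or len(inputs) != 2 * n or n & (n - 1) != 0:
        raise ValueError("expected 2n inputs with n a power of two")

    # Step 0: every processor k is active (k % 2**0 == 0) and writes
    # the max of its two inputs into shared cell k.
    cells = [max(inputs[2 * k], inputs[2 * k + 1]) for k in range(n)]

    step = 1
    while (1 << (step - 1)) < n:
        half = 1 << (step - 1)
        # Only processors with k % 2**step == 0 are active in this step.
        # Each one reads cell k + half, which no active processor writes,
        # so no cell is read or written by two processors at once (EREW).
        for k in range(0, n, 1 << step):
            cells[k] = max(cells[k], cells[k + half])
        step += 1

    return cells[0]  # processor P0 holds the overall maximum


print(pram_tournament_max([3, 1, 7, 0, 4, 1, 6, 3]))
```

With 2n inputs the simulation runs log2(n) + 1 steps, which matches the depth of the tournament tree.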

Lecture Notes, CSE 232, Fall 2014 Semester

Lecture Notes, CSE 232, Fall 2014 Semester. Dr. Brett Olsen. Week 1 - Introduction to Competitive Programming. Syllabus: First, I've handed out along with the syllabus a short pre-questionnaire intended to give us some idea of where you're coming from and what you're hoping to get out of this class. Please fill it out and turn it in at the end of the class. Let me briefly cover the material in the syllabus. This class is CSE 232, Programming Skills Workshop, and we're going to focus on practical programming skills and preparation for programming competitions and similar events. We'll particularly be paying attention to the 2014 Midwest Regional ICPC, which will be November 1st, and I encourage anyone interested to participate. I've been working closely with the local chapter of the ACM, and you should talk to them if you're interested in joining an ICPC team. My name is Dr. Brett Olsen, and I'm a computational biologist on the Medical School campus. I was invited to teach this course after being contacted by the ACM due to my performance in the Google Code Jam, where I've been consistently performing in the top 5-10%, so I do have some practical experience in these types of contests that I hope can help you improve your performance as well. Assisting me will be TA Joey Woodson, who is also affiliated with the ACM, and would be a good person to contact about the ICPC this fall. Our weekly hour and a half meetings will be split into roughly half lecture and half practical lab work, where I'll provide some sample problems to work on under our guidance. -

Parallel Prefix Sum on the GPU (Scan)

Parallel Prefix Sum on the GPU (Scan). Presented by Adam O’Donovan. Slides adapted from the online course slides for ME964 at Wisconsin taught by Prof. Dan Negrut and from slides presented by David Luebke. Parallel Prefix Sum (Scan). Definition: The all-prefix-sums operation takes a binary associative operator ⊕ with identity I, and an array of n elements $[a_0, a_1, \ldots, a_{n-1}]$, and returns the ordered set $[I, a_0, (a_0 \oplus a_1), \ldots, (a_0 \oplus a_1 \oplus \cdots \oplus a_{n-2})]$. Example: if ⊕ is addition, then scan on the set [3 1 7 0 4 1 6 3] returns the set [0 3 4 11 11 15 16 22]. Exclusive scan: the last input element is not included in the result. (From Blelloch, 1990, “Prefix Sums and Their Applications”.) Applications of Scan: Scan is a simple and useful parallel building block. Convert recurrences from sequential: for(j=1;j<n;j++) out[j] = out[j-1] + f(j); into parallel: forall(j) in parallel temp[j] = f(j); scan(out, temp); Useful in implementation of several parallel algorithms: radix sort, polynomial evaluation, quicksort, solving recurrences, string comparison, tree operations, lexical analysis, histograms, stream compaction, etc. (HK-UIUC) Scan on the CPU: void scan(float* scanned, float* input, int length) { scanned[0] = 0; for (int i = 1; i < length; ++i) { scanned[i] = scanned[i-1] + input[i-1]; } } Just add each element to the sum of the elements before it. Trivial, but sequential. Exactly n-1 adds: optimal in terms of work efficiency. Parallel Scan Algorithm: Solution One, Hillis & Steele (1986). Note that an implementation of the algorithm shown in the picture requires two buffers of length n (shown is the case n = 8 = 2^3). Assumption: the number n of elements is a power of 2: n = 2^M. (Picture courtesy of Mark Harris.) The Plain English Perspective: First iteration, I go with stride 1 = 2^0. Start at x[2^M] and apply this stride to all the array elements before x[2^M] to find the mate of each of them. -
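The two scans mentioned in this excerpt can be compared in a short sketch. It is an illustration rather than the slides' own code: the function names are hypothetical, the Hillis and Steele scan is simulated sequentially with the double buffering the excerpt mentions, and this common formulation produces an inclusive scan, which is then shifted to obtain the exclusive result.

```python
def exclusive_scan_sequential(values):
    """Sequential exclusive prefix sum: out[i] = values[0] + ... + values[i-1]."""
    out = [0] * len(values)
    for i in range(1, len(values)):
        out[i] = out[i - 1] + values[i - 1]
    return out


def inclusive_scan_hillis_steele(values):
    """Simulate the Hillis & Steele scan with strides 1, 2, 4, ..."""
    src = list(values)
    stride = 1
    while stride < len(src):
        # Double buffering: every simulated thread i reads only from src
        # and writes only to dst, so no element is overwritten too early.
        dst = [src[i] + src[i - stride] if i >= stride else src[i]
               for i in range(len(src))]
        src = dst
        stride *= 2
    return src


data = [3, 1, 7, 0, 4, 1, 6, 3]
print(exclusive_scan_sequential(data))           # [0, 3, 4, 11, 11, 15, 16, 22]
print([0] + inclusive_scan_hillis_steele(data)[:-1])  # same result via shifting
```

The sequential version performs n-1 additions, while the simulated parallel version performs more additions in total but needs only log2(n) passes, which is the trade-off the excerpt points at when it calls the sequential scan optimal in work efficiency.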

1 Introduction 2 Problems Without the Formula

DG Kim, Spokane Math Circle, The Shoelace Theorem, March 3rd. 1 Introduction: The Shoelace Theorem is a nifty formula for finding the area of a polygon given the coordinates of its vertices. In this lecture, we'll explore the Shoelace Theorem and its applications. 2 Problems without the Formula: Find the areas of the following shapes: 3 Answers to Exercises: 1. 4 × 4 = 16. 2. 1/2 × 6 × 2 = 6. 3. 6 × 4 − 1/2 × 4 × 4 = 24 − 8 = 16. 4. 4 × 6 − 1/2 × 4 × 1 − 1/2 × 4 × 2 = 24 − 2 − 4 = 18. 5. 8 × 3 − 1/2 × 8 × 1 = 24 − 4 = 20. 4 The Cartesian Plane: One quick note that you should know is about the Cartesian Plane. Points in the Cartesian Plane are written in (x, y) format. The first number in a Cartesian point is the number of spaces it goes horizontally, and the second number is the number of spaces it goes vertically. You should be able to find the Cartesian coordinates in all the diagrams that were provided earlier. 5 The Shoelace Formula! $A = \frac{1}{2}\left|\sum_{i=1}^{n-1} x_i y_{i+1} + x_n y_1 - \sum_{i=1}^{n-1} x_{i+1} y_i - x_1 y_n\right|$ Okay, so this looks complicated, but now we'll look at why the shoelace formula got its name. The reason this formula is called the shoelace formula is because of the method used to find it. -
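The shoelace formula quoted in this excerpt translates directly into a few lines of code. The sketch below is illustrative: the function name `shoelace_area` and the sample polygons are assumptions, not taken from the handout, and the vertices are expected in order around the polygon (either direction, since the absolute value is taken).

```python
def shoelace_area(vertices):
    """Area of a simple polygon given as a list of (x, y) vertices in order."""
    n = len(vertices)
    twice_area = 0
    for i in range(n):
        x_i, y_i = vertices[i]
        # Wrap around so the last vertex is paired with the first one,
        # which covers the x_n * y_1 and x_1 * y_n terms of the formula.
        x_next, y_next = vertices[(i + 1) % n]
        twice_area += x_i * y_next - x_next * y_i
    return abs(twice_area) / 2


# A 4 x 4 square and a right triangle with legs 6 and 2 (made-up examples).
print(shoelace_area([(0, 0), (4, 0), (4, 4), (0, 4)]))  # 16.0
print(shoelace_area([(0, 0), (6, 0), (0, 2)]))          # 6.0
```

Keeping the sum as `twice_area` until the final division means integer coordinates stay exact up to the last step.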

De Bruijn Sequences: from Games to Shift-Rules to a Proof of The

De Bruijn Sequences: From Games to Shift-Rules to a Proof of the Fredricksen-Kessler-Maiorana Theorem. Gal Amram, Amir Rubin, Yotam Svoray, Gera Weiss. Department of Computer Science, Ben-Gurion University of The Negev. arXiv:1805.02405v3 [cs.DM], 18 Nov 2020. Abstract: We present a combinatorial game and propose efficiently computable optimal strategies. We then show how these strategies can be translated to efficiently computable shift-rules for the well known prefer-max and prefer-min De Bruijn sequences, in both forward and backward directions. Using these shift-rules, we provide a new proof of the well known theorem by Fredricksen, Kessler, and Maiorana on De Bruijn sequences and Lyndon words. 1. Introduction: A De Bruijn sequence of order n on the alphabet [k] = {0, ..., k − 1} is a cyclic sequence of length $k^n$ such that every possible word of length n over this alphabet appears exactly once as a subword [1]. In this work we focus on two of the most famous De Bruijn sequences called the prefer-max and the prefer-min sequences [2, 3] obtained by starting with $0^n$, for the prefer-max, or $(k-1)^n$, for the prefer-min, and adding to each prefix the maximal/minimal value in [k] such that the suffix of length n does not appear as a subword of the prefix. A shift-rule of a De Bruijn sequence is a mapping shift: $[k]^n \to [k]^n$ such that, for each word $w = \sigma_1 \ldots \sigma_n$, shift(w) is the word $\sigma_2 \ldots \sigma_n \tau$ where τ is the symbol that follows w in the sequence (for the last word τ is the first symbol in the sequence). -
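The greedy prefer-max construction described in this excerpt can be sketched as follows. This is a direct, unoptimized illustration and not the paper's shift-rule: the function name `prefer_max_de_bruijn` is hypothetical, and returning the first k^n symbols of the greedy linear sequence as the cyclic sequence is an assumption made here for convenience.

```python
def prefer_max_de_bruijn(k, n):
    """Greedy prefer-max sequence of order n over the alphabet {0, ..., k-1}."""
    seq = [0] * n            # start with n zeros
    seen = {tuple(seq)}      # words of length n that already appear
    while True:
        # Try the largest symbol first, as the prefer-max rule requires.
        for symbol in range(k - 1, -1, -1):
            window = tuple(seq[len(seq) - n + 1:] + [symbol])
            if window not in seen:
                seen.add(window)
                seq.append(symbol)
                break
        else:
            break            # no symbol yields a new word: stop
    # The linear sequence ends with a repeat of the leading zeros;
    # keep the first k**n symbols to read the result cyclically.
    return seq[:k ** n]


print(prefer_max_de_bruijn(2, 3))  # [0, 0, 0, 1, 1, 1, 0, 1]
```

Read cyclically, the printed sequence contains each of the eight binary words of length 3 exactly once. The set lookup makes each step cheap, but the memory used grows with k^n, which is the cost that a shift-rule avoids.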

Combinatorial Species and Labelled Structures Brent Yorgey University of Pennsylvania

University of Pennsylvania ScholarlyCommons, Publicly Accessible Penn Dissertations, 1-1-2014. Combinatorial Species and Labelled Structures. Brent Yorgey, University of Pennsylvania. Follow this and additional works at: http://repository.upenn.edu/edissertations Part of the Computer Sciences Commons, and the Mathematics Commons. Recommended Citation: Yorgey, Brent, "Combinatorial Species and Labelled Structures" (2014). Publicly Accessible Penn Dissertations. 1512. http://repository.upenn.edu/edissertations/1512 Abstract: The theory of combinatorial species was developed in the 1980s as part of the mathematical subfield of enumerative combinatorics, unifying and putting on a firmer theoretical basis a collection of techniques centered around generating functions. The theory of algebraic data types was developed, around the same time, in functional programming languages such as Hope and Miranda, and is still used today in languages such as Haskell, the ML family, and Scala. Despite their disparate origins, the two theories have striking similarities. In particular, both constitute algebraic frameworks in which to construct structures of interest. Though the similarity has not gone unnoticed, a link between combinatorial species and algebraic data types has never been systematically explored. This dissertation lays the theoretical groundwork for a precise (and, hopefully, useful) bridge between the two theories. One of the key contributions is to port the theory of species from a classical, untyped set theory to a constructive type theory. This porting process is nontrivial, and involves fundamental issues related to equality and finiteness; the recently developed homotopy type theory is put to good use formalizing these issues in a satisfactory way.