Guidelines on Cryptographic Algorithms Usage and Key Management

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Public-Key Cryptography

Public Key Cryptography (EJ Jung)

Basic Public Key Cryptography. Given: everybody knows Bob's public key (how is this achieved in practice?), and only Bob knows the corresponding private key. Goals: 1. Alice wants to send a secret message to Bob. 2. Bob wants to authenticate himself.

Requirements for Public-Key Crypto
• Key generation: computationally easy to generate a pair (public key PK, private key SK); computationally infeasible to determine private key SK given only public key PK.
• Encryption: given plaintext M and public key PK, easy to compute ciphertext $C = E_{PK}(M)$.
• Decryption: given ciphertext $C = E_{PK}(M)$ and private key SK, easy to compute plaintext M; infeasible to compute M from C without SK; Decrypt(SK, Encrypt(PK, M)) = M.

Requirements for Public-Key Cryptography (Henric Johnson)
1. Computationally easy for a party B to generate a pair (public key $KU_b$, private key $KR_b$).
2. Easy for the sender to generate ciphertext: $C = E_{KU_b}(M)$.
3. Easy for the receiver to decrypt ciphertext using the private key: $M = D_{KR_b}(C) = D_{KR_b}[E_{KU_b}(M)]$.
4. Computationally infeasible to determine the private key ($KR_b$) knowing the public key ($KU_b$).
5. Computationally infeasible to recover message M, knowing $KU_b$ and ciphertext C.
6. Either of the two keys can be used for encryption, with the other used for decryption: $M = D_{KR_b}[E_{KU_b}(M)] = D_{KU_b}[E_{KR_b}(M)]$.

Public-Key Cryptographic Algorithms
• RSA and Diffie-Hellman
• RSA: Ron Rivest, Adi Shamir and Len Adleman at MIT, in 1977.
• RSA …
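The round-trip requirement Decrypt(SK, Encrypt(PK, M)) = M, and the property that either key can undo the other, can be illustrated with a toy RSA instance. This is only a sketch: the primes, the exponent, the message value and the helper name `apply_key` are assumptions chosen for illustration, and numbers this small offer no security.

```python
# Toy RSA with tiny textbook primes. Insecure; for illustration only.
p, q = 61, 53                 # assumed small primes
n = p * q                     # public modulus
phi = (p - 1) * (q - 1)       # Euler's totient of n
e = 17                        # public exponent, coprime to phi
d = pow(e, -1, phi)           # private exponent (modular inverse, Python 3.8+)

PK = (e, n)                   # public key
SK = (d, n)                   # private key


def apply_key(key, value):
    """Raise value to the key's exponent modulo n (hypothetical helper)."""
    exponent, modulus = key
    return pow(value, exponent, modulus)


m = 65                        # message encoded as an integer smaller than n
c = apply_key(PK, m)          # encrypt with the public key
recovered = apply_key(SK, c)  # decrypt with the private key

# Using the keys in the opposite order also round-trips (requirement 6).
signed = apply_key(SK, m)
verified = apply_key(PK, signed)
```

Real deployments use much larger moduli together with a padding scheme, neither of which is shown here.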

A Quantitative Study of Advanced Encryption Standard Performance

A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility. Daniel Hawthorne, United States Military Academy. USMA Digital Commons, West Point ETD, 12-2018. Part of the Information Security Commons.

Recommended Citation: Hawthorne, Daniel, "A Quantitative Study of Advanced Encryption Standard Performance as it Relates to Cryptographic Attack Feasibility" (2018). West Point ETD. 9. https://digitalcommons.usmalibrary.org/faculty_etd/9

A Dissertation Presented in Partial Fulfillment of the Requirements for the Degree of Doctor of Computer Science, by Daniel Stephen Hawthorne, Colorado Technical University, December 2018. Committee: Dr. Richard Livingood, Ph.D., Chair; Dr. Kelly Hughes, DCS, Committee Member; Dr. James O. Webb, Ph.D., Committee Member. December 17, 2018. © Daniel Stephen Hawthorne, 2018.

Abstract. The Advanced Encryption Standard (AES) is the premier symmetric key cryptosystem in use today. Given its prevalence, the security provided by AES is of utmost importance. Technology is advancing at an incredible rate, in both capability and popularity, much faster than its rate of advancement in the late 1990s when AES was selected as the replacement standard for DES. Although the literature surrounding AES is robust, most studies fall into either theoretical or practical yet infeasible.

Implementation and Benchmarking of Padding Units and HMAC for SHA-3 Candidates in FPGAs and ASICs

Implementation and Benchmarking of Padding Units and HMAC for SHA-3 Candidates in FPGAs and ASICs, by Ambarish Vyas. A thesis submitted to the Graduate Faculty of George Mason University in partial fulfillment of the requirements for the degree of Master of Science, Computer Engineering. Committee: Dr. Kris Gaj, Thesis Director; Dr. Jens-Peter Kaps, Committee Member; Dr. Bernd-Peter Paris, Committee Member; Dr. Andre Manitius, Department Chair of Electrical and Computer Engineering; Dr. Lloyd J. Griffiths, Dean, Volgenau School of Engineering. Fall Semester 2011, George Mason University, Fairfax, VA.

Ambarish Vyas, Bachelor of Science, University of Pune, 2009. Director: Dr. Kris Gaj, Associate Professor, Department of Electrical and Computer Engineering. Copyright © 2011 by Ambarish Vyas. All Rights Reserved.

Acknowledgments. I would like to use this opportunity to thank the people who have supported me throughout my thesis. First and foremost, my advisor Dr. Kris Gaj: without his zeal, his motivation, his patience, his confidence in me, his humility, his diverse knowledge, and his great efforts, this thesis would not have been possible. It is difficult to exaggerate my gratitude towards him. I also thank Ekawat Homsirikamol for his contributions to this project. He has significantly contributed to the designs and implementations of the architectures. Additionally, I am indebted to my student colleagues in CERG for providing a fun environment to learn and giving invaluable tips and support.

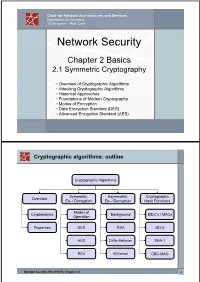

The Data Encryption Standard (DES) – History

Chair for Network Architectures and Services, Department of Informatics, TU München, Prof. Carle. Network Security, WS 2010/11, Chapter 2: Basics, 2.1 Symmetric Cryptography.

• Overview of Cryptographic Algorithms
• Attacking Cryptographic Algorithms
• Historical Approaches
• Foundations of Modern Cryptography
• Modes of Encryption
• Data Encryption Standard (DES)
• Advanced Encryption Standard (AES)

Cryptographic algorithms: outline
[Diagram: Cryptographic Algorithms, split into four branches. Overview: Properties, Cryptanalysis. Symmetric En-/Decryption: Modes of Operation, DES, AES, RC4. Asymmetric En-/Decryption: Background, RSA, Diffie-Hellman, ElGamal. Cryptographic Hash Functions: MDCs/MACs, MD-5, SHA-1, CBC-MAC.]

Basic Terms: Plaintext and Ciphertext
• Plaintext P: the original readable content of a message (or data). P_netsec = "This is network security"
• Ciphertext C: the encrypted version of the plaintext. C_netsec = "Ff iThtIiDjlyHLPRFxvowf"
• Encryption maps P to C under key k1; decryption maps C back to P under key k2. In the case of symmetric cryptography, k1 = k2.

Basic Terms: Block cipher and Stream cipher
• Block cipher: a cipher that encrypts / decrypts inputs of length n to outputs of length n given the corresponding key k, where n is the block length. Most modern symmetric ciphers are block ciphers, e.g. AES, DES, Twofish, …
• Stream cipher: a symmetric cipher that generates a pseudorandom bitstream, called the key stream, from the symmetric key k. Ciphertext = key stream XOR plaintext.

Cryptographic algorithms: overview
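The stream cipher rule "ciphertext = key stream XOR plaintext" can be sketched in a few lines. The key stream generator below (SHA-256 over the key and a counter) is an assumption made purely so the example is self-contained; it is not one of the ciphers named in the slides and should not be used to protect data. The function names are hypothetical.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Derive `length` pseudorandom bytes from the key (toy generator)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        # Hash the key together with a running counter to get the next chunk.
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def xor_stream(key: bytes, data: bytes) -> bytes:
    """XOR data with the key stream; the same call encrypts and decrypts."""
    ks = keystream(key, len(data))
    return bytes(d ^ k for d, k in zip(data, ks))


key = b"shared symmetric key"
plaintext = b"This is network security"
ciphertext = xor_stream(key, plaintext)
decrypted = xor_stream(key, ciphertext)
```

Because XOR is its own inverse, applying the same key stream twice restores the plaintext, which is why a single function serves both directions. Reusing a key stream for two different messages leaks the XOR of the plaintexts, so practical stream ciphers also take a per-message nonce.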

Authentication in Key-Exchange: Definitions, Relations and Composition

Authentication in Key-Exchange: Definitions, Relations and Composition. Cyprien Delpech de Saint Guilhem (1, 2), Marc Fischlin (3), and Bogdan Warinschi (2). (1) imec-COSIC, KU Leuven, Belgium; (2) Dept Computer Science, University of Bristol, United Kingdom; (3) Computer Science, Technische Universität Darmstadt, Germany.

Abstract. We present a systematic approach to define and study authentication notions in authenticated key-exchange protocols. We propose and use a flexible and expressive predicate-based definitional framework. Our definitions capture key and entity authentication, in both implicit and explicit variants, as well as key and entity confirmation, for authenticated key-exchange protocols. In particular, we capture critical notions in the authentication space such as key-compromise impersonation resistance and security against unknown key-share attacks. We first discuss these definitions within the Bellare–Rogaway model and then extend them to Canetti–Krawczyk-style models. We then show two useful applications of our framework. First, we look at the authentication guarantees of three representative protocols to draw several useful lessons for protocol design. The core technical contribution of this paper is then to formally establish that composition of secure implicitly authenticated key-exchange with subsequent confirmation protocols yields explicit authentication guarantees. Without a formal separation of implicit and explicit authentication from secrecy, a proof of this folklore result could not have been established.

1 Introduction. The commonly expected level of security for authenticated key-exchange (AKE) protocols comprises two aspects. Authentication provides guarantees on the identities of the parties involved in the protocol execution.

2018-07-11 and for Information to the ISO Member Bodies and to the TMB Members

Sergio Mujica, Secretary-General. TO THE CHAIRS AND SECRETARIES OF ISO COMMITTEES, 2018-07-11, AND FOR INFORMATION TO THE ISO MEMBER BODIES AND TO THE TMB MEMBERS. ISO/IEC/ITU coordination – New work items.

Dear Sir or Madam, Please find attached the lists of IEC, ITU and ISO new work items issued in June 2018. If you wish more information about IEC technical committees and subcommittees, please access: http://www.iec.ch/. Click on the last option to the right: Advanced Search and then click on: Documents / Projects / Work Programme. In case of need, a copy of an actual IEC new work item may be obtained by contacting [email protected]. Please note for your information that in the annexed table from IEC the "document reference" 22F/188/NP means a new work item from IEC Committee 22, Subcommittee F. If you wish to look at the ISO new work items, please access: http://isotc.iso.org/pp/. On the ISO Project Portal you can find all information about the ISO projects, by committee, document number or project ID, or choose the option "Stages search" and select "Search" to obtain the annexed list of ISO new work items. Yours sincerely, Sergio Mujica, Secretary-General.

Enclosures: ISO New work items, 2018-07-11.

Alert Detailed alert Timeframe Reference Document title Developing committee VA Registration dCurrent stage Stage date
Warning; Warning – NP decision; SDT 36; ISO/NP 50009; Guidance for multiple organizations implementing a common (ISO50001) EnMS; ISO/TC 301; -; -; 10.60; 2018-06-10
Warning; Warning – NP decision; SDT 36; ISO/NP 31050; Guidance for managing …

SHA-3 Update

SHA-3 update. Quynh Dang, Computer Security Division, ITL, NIST. IETF 86.

SHA-3 Competition
► 11/2/2007: SHA-3 Competition began.
► 10/2/2012: Keccak announced as the SHA-3 winner.

Secure Hash Algorithms Outlook
► SHA-2 looks strong.
► We expect Keccak (SHA-3) to co-exist with SHA-2.
► Keccak complements SHA-2 in many ways. Keccak is good in different environments. Keccak is a sponge, a different design concept from SHA-2.

Sponge Construction
Sponge capacity corresponds to a security level: s = c/2.

SHA-3 Selection
► We chose Keccak as the winner for many different reasons; below are some of them:
► It has a high security margin.
► It received a good amount of high-quality analysis.
► It has excellent hardware performance.
► It has good overall performance.
► It is very different from SHA-2.
► It provides a lot of flexibility.

Keccak Features
► Keccak supports the same hash-output sizes as SHA-2 (i.e., SHA-224, -256, -384, -512).
► Keccak works fine with existing applications, such as DRBGs, KDFs, HMAC and digital signatures.
► Keccak offers flexibility in performance/security tradeoffs.
► Keccak supports tree hashing.
► Keccak supports variable-length output.

Under Consideration for SHA-3
► Support for variable-length hashes
► Considering options:
► One capacity: c = 512, with output size encoding,
► Two capacities: c = 256 and c = 512, with output size encoding, or
► Four capacities: c = 224, c = 256, c = 384, and c = 512 without output size encoding (preferred by the Keccak team).
► Input format for SHA-3 hash function(s) will contain a padding scheme to support tree hashing in the future.
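Several of the features listed above (SHA-2-compatible output sizes, use inside HMAC, variable-length output) can be observed with the SHA-3 functions in Python's standard `hashlib` and `hmac` modules. This is a sketch using the functions as eventually standardized; the slides predate that standard, so the parameter choices they list as "under consideration" are not necessarily what these functions implement. The message and key values are arbitrary placeholders.

```python
import hashlib
import hmac

msg = b"example message"

# Fixed-length SHA-3 variants mirror the SHA-2 output sizes (in bits).
digest_bits = {
    name: hashlib.new(name, msg).digest_size * 8
    for name in ("sha3_224", "sha3_256", "sha3_384", "sha3_512")
}

# SHAKE128 is an extendable-output function: the caller picks the length.
short_out = hashlib.shake_128(msg).digest(16)
long_out = hashlib.shake_128(msg).digest(32)

# SHA-3 drops into HMAC like any other hashlib digest.
tag = hmac.new(b"placeholder key", msg, hashlib.sha3_256).digest()
```

Requesting a longer SHAKE output extends the shorter one rather than producing an unrelated value, so `long_out` starts with `short_out`.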

Block Ciphers and the Data Encryption Standard

Lecture 3: Block Ciphers and the Data Encryption Standard. Lecture Notes on "Computer and Network Security" by Avi Kak. January 26, 2021, 3:43pm. ©2021 Avinash Kak, Purdue University.

Goals:
• To introduce the notion of a block cipher in the modern context.
• To talk about the infeasibility of ideal block ciphers.
• To introduce the notion of the Feistel Cipher Structure.
• To go over DES, the Data Encryption Standard.
• To illustrate important DES steps with Python and Perl code.

CONTENTS
3.1 Ideal Block Cipher
3.1.1 Size of the Encryption Key for the Ideal Block Cipher
3.2 The Feistel Structure for Block Ciphers
3.2.1 Mathematical Description of Each Round in the Feistel Structure
3.2.2 Decryption in Ciphers Based on the Feistel Structure
3.3 DES: The Data Encryption Standard
3.3.1 One Round of Processing in DES
3.3.2 The S-Box for the Substitution Step in Each Round
3.3.3 The Substitution Tables
3.3.4 The P-Box Permutation in the Feistel Function
3.3.5 The DES Key Schedule: Generating the Round Keys
3.3.6 Initial Permutation of the Encryption Key
3.3.7 Contraction-Permutation that Generates the 48-Bit Round Key from the 56-Bit Key
3.4 What Makes DES a Strong Cipher (to the Extent It is a Strong Cipher)
3.5 Homework Problems

3.1 IDEAL BLOCK CIPHER
In a modern block cipher (but still using a classical encryption method), we replace a block of N bits from the plaintext with a block of N bits from the ciphertext.
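The excerpt stops before the lecture develops the idea, so the following is only a sketch under a stated assumption: that an ideal block cipher is an arbitrary reversible mapping from N-bit plaintext blocks to N-bit ciphertext blocks, stored as a lookup table. The block size N = 4, the use of a seeded shuffle as a stand-in for the key, and the function name are all illustrative choices, not taken from the notes.

```python
import random

N = 4  # block size in bits (assumed small so the whole table is visible)


def make_ideal_cipher(seed):
    """Build a random reversible mapping over all 2**N blocks."""
    rng = random.Random(seed)
    table = list(range(2 ** N))   # table[plain_block] -> cipher_block
    rng.shuffle(table)
    inverse = [0] * len(table)    # inverse[cipher_block] -> plain_block
    for plain, cipher in enumerate(table):
        inverse[cipher] = plain
    return table, inverse


table, inverse = make_ideal_cipher(seed=2021)

block = 0b1011
encrypted = table[block]
decrypted = inverse[encrypted]

# Writing the table down takes 2**N entries of N bits each.
key_bits = N * 2 ** N
```

With N = 4 the table already needs 64 bits, and the size grows as N · 2^N, which gives a feel for why a full mapping table becomes impractical at realistic block sizes.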

Lecture9.Pdf

Suppose $H$ is a Merkle-Damgård hash function built from a secure compression function $h$. There are several ways to build a keyed function:

1. Prepend key: $F(k, m) := H(k \,\|\, m)$. Insecure due to the structure of Merkle-Damgård: one can mount an extension attack. Given $H(k \,\|\, m)$, one can compute $H(k \,\|\, m \,\|\, m')$ by extending the Merkle-Damgård chain.
2. Append key: $F(k, m) := H(m \,\|\, k)$. Similar to the hash-then-MAC construction and vulnerable to the same offline attack: the adversary finds a collision in the Merkle-Damgård hash and uses that to construct a forgery. (For SHA-1, the collision demonstration used PDF files; structure in SHA-1 can be exploited to generate arbitrary collisions once the prefix matches.)
3. Envelope method: $F(k, m) := H(k \,\|\, m \,\|\, k)$. A PRF under reasonable pseudorandomness assumptions on $h$ (e.g., that $h$ is a PRF when keyed through either of its inputs).
4. Two-key nest: $F((k_1, k_2), m) := H(k_2 \,\|\, H(k_1 \,\|\, m))$.

Both of these constructions (3 and 4) are secure PRFs on a variable-size domain.

HMAC (hash-based MAC) is a PRF / MAC based on the two-key nest (though with correlated keys):

$\mathrm{HMAC}(k, m) := H(k_2 \,\|\, H(k_1 \,\|\, m))$, where $k_1 \leftarrow k \oplus \mathrm{ipad}$ and $k_2 \leftarrow k \oplus \mathrm{opad}$,

and ipad and opad are fixed strings (specified in the HMAC standard): ipad is 0x36 repeated and opad is 0x5C repeated. Since $k_1$ and $k_2$ are correlated, a stronger assumption on $h$ is needed (e.g., that it remains pseudorandom under a related-key attack).

Instantiations: typically denoted HMAC-H, where H is the hash function, e.g. HMAC-SHA1, HMAC-SHA256.
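The HMAC formula above can be written out directly and compared with a library implementation. The sketch below instantiates H as SHA-256; the key-preparation details (hashing keys longer than one block, zero-padding shorter ones) follow the usual HMAC specification rather than anything stated in these notes, and the function name `hmac_sha256` is a hypothetical helper.

```python
import hashlib
import hmac

BLOCK_SIZE = 64  # SHA-256 processes input in 64-byte blocks


def hmac_sha256(key: bytes, message: bytes) -> bytes:
    """Compute H(k2 || H(k1 || m)) with k1 = k XOR ipad, k2 = k XOR opad."""
    if len(key) > BLOCK_SIZE:
        key = hashlib.sha256(key).digest()   # long keys are hashed first
    key = key.ljust(BLOCK_SIZE, b"\x00")     # then padded to one block
    k1 = bytes(b ^ 0x36 for b in key)        # inner key: k XOR ipad
    k2 = bytes(b ^ 0x5C for b in key)        # outer key: k XOR opad
    inner = hashlib.sha256(k1 + message).digest()
    return hashlib.sha256(k2 + inner).digest()


key = b"placeholder key"
message = b"message to authenticate"
tag = hmac_sha256(key, message)
reference = hmac.new(key, message, hashlib.sha256).digest()
```

In application code the library routine is the better choice, together with `hmac.compare_digest` for comparing tags in constant time; the hand-written version is only there to make the two-key nest visible.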

Constructing Low-Weight dth-Order Correlation-Immune Boolean Functions Through the Fourier-Hadamard Transform (Claude Carlet and Xi Chen)

Constructing low-weight dth-order correlation-immune Boolean functions through the Fourier-Hadamard transform. Claude Carlet and Xi Chen.

Abstract. The correlation immunity of Boolean functions is a property related to cryptography, to error correcting codes, to orthogonal arrays (in combinatorics, which was also a domain of interest of S. Golomb) and, in a slightly looser way, to sequences. Correlation-immune Boolean functions (in short, CI functions) have the property of keeping the same output distribution when some input variables are fixed. They have been widely used as combiners in stream ciphers to allow resistance to the Siegenthaler correlation attack. Very recently, a new use of CI functions has appeared in the framework of side channel attacks (SCA). To reduce the cost overhead of counter-measures to SCA, CI functions need to have low Hamming weights. This poses new challenges, since the known constructions, which are based on properties of the Walsh-Hadamard transform, do not allow building unbalanced CI functions. In this paper, we propose constructions of low-weight dth-order CI functions based on the Fourier-Hadamard transform, while the known constructions of resilient functions are based on the Walsh-Hadamard transform. We first prove a simple but powerful result, which means that one only needs to consider the case where d is odd in further research. Then we investigate how to construct low Hamming weight CI functions through the Fourier-Hadamard transform (which behaves well with respect to the multiplication of Boolean functions). We use the characterization of CI functions by the Fourier-Hadamard transform and introduce a related general construction of CI functions by multiplication.
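The characterization mentioned in the abstract is not spelled out in this excerpt. A commonly stated form is that a Boolean function f on n variables is dth-order correlation-immune exactly when its Fourier-Hadamard transform, $\hat{f}(u) = \sum_x f(x)(-1)^{u \cdot x}$, vanishes at every u with Hamming weight between 1 and d. The sketch below checks that condition by brute force; the function names, the representation of f by its support set, and the two example functions are assumptions for illustration and are not taken from the paper.

```python
def fourier_hadamard(support, n):
    """Return the values sum_x f(x) * (-1)^(u.x) for u = 0 .. 2**n - 1.

    f is given by its support: the set of inputs (as integers) where f = 1.
    """
    spectrum = []
    for u in range(2 ** n):
        total = 0
        for x in support:
            # u.x is the parity of the bitwise AND of u and x.
            total += -1 if bin(u & x).count("1") % 2 else 1
        spectrum.append(total)
    return spectrum


def ci_order(support, n):
    """Largest d such that the spectrum is zero on all weights 1..d."""
    spectrum = fourier_hadamard(support, n)
    order = 0
    for d in range(1, n + 1):
        weight_d = [u for u in range(2 ** n) if bin(u).count("1") == d]
        if any(spectrum[u] != 0 for u in weight_d):
            break
        order = d
    return order


# Unbalanced, Hamming weight 2: f = 1 only on 000 and 111.
low_weight_order = ci_order({0b000, 0b111}, n=3)

# Balanced parity function x1 XOR x2 XOR x3 (support = odd-weight inputs).
parity_order = ci_order({0b001, 0b010, 0b100, 0b111}, n=3)
```

The first example has Hamming weight 2 on three variables and is first-order CI, a small instance of the low-weight, unbalanced functions the abstract is concerned with. The brute-force check costs on the order of 4^n operations, so it is only suitable for small n.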

Galois/Counter Mode (GCM) for Confidentiality and Authentication

NIST Special Publication 800-38D, DRAFT (April, 2006). Recommendation for Block Cipher Modes of Operation: Galois/Counter Mode (GCM) for Confidentiality and Authentication. Morris Dworkin. Computer Security.

Abstract. This Recommendation specifies the Galois/Counter Mode (GCM), an authenticated encryption mode of operation for a symmetric key block cipher.

KEY WORDS: authentication; block cipher; cryptography; information security; integrity; message authentication code; mode of operation.

Table of Contents
1 PURPOSE
2 AUTHORITY
3 INTRODUCTION
4 DEFINITIONS, ABBREVIATIONS, AND SYMBOLS
4.1 DEFINITIONS AND ABBREVIATIONS
4.2 SYMBOLS
4.2.1 Variables

On the NIST Lightweight Cryptography Standardization

On the NIST Lightweight Cryptography Standardization. Meltem Sönmez Turan, NIST Lightweight Cryptography Team. ECC 2019: 23rd Workshop on Elliptic Curve Cryptography, December 2, 2019.

Outline
• NIST's Cryptography Standards
• Overview: Lightweight Cryptography
• NIST Lightweight Cryptography Standardization Process
• Announcements

NIST's Cryptography Standards

National Institute of Standards and Technology
• Non-regulatory federal agency within the U.S. Department of Commerce.
• Founded in 1901, known as the National Bureau of Standards (NBS) prior to 1988.
• Headquarters in Gaithersburg, Maryland, and laboratories in Boulder, Colorado.
• Employs around 6,000 employees and associates.

NIST's Mission: to promote U.S. innovation and industrial competitiveness by advancing measurement science, standards, and technology in ways that enhance economic security and improve our quality of life.

NIST Organization Chart

Laboratory Programs
• Center for Nanoscale Science and Technology
• Communications Technology Lab.
• Engineering Lab.
• Information Technology Lab.
• Material Measurement Lab.
• NIST Center for Neutron Research
• Physical Measurement Lab.

Information Technology Lab.
• Advanced Network Technologies
• Applied and Computational Mathematics
• Applied Cybersecurity
• Computer Security
• Information Access
• Software and Systems
• Statistical …

Computer Security Division
• Cryptographic Technology
• Secure Systems and Applications
• Security Outreach and Integration
• Security Components and Mechanisms
• Security Test, Validation and Measurements