April 2007 Volume 32 Number 2

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

BUGS in the SYSTEM a Primer on the Software Vulnerability Ecosystem and Its Policy Implications

BUGS IN THE SYSTEM: A Primer on the Software Vulnerability Ecosystem and its Policy Implications. Andi Wilson, Ross Schulman, Kevin Bankston, and Trey Herr. July 2016.

About the Authors: Andi Wilson is a policy analyst at New America’s Open Technology Institute, where she researches and writes about the relationship between technology and policy. With a specific focus on cybersecurity, Andi is currently working on issues including encryption, vulnerabilities equities, surveillance, and internet freedom. Ross Schulman is a co-director of the Cybersecurity Initiative and senior policy counsel at New America’s Open Technology Institute, where he focuses on cybersecurity, encryption, surveillance, and Internet governance. Prior to joining OTI, Ross worked for Google in Mountain View, California. Ross has also worked at the Computer and Communications Industry Association, the Center for Democracy and Technology, and on Capitol Hill for Senators Wyden and Feingold.

About New America: New America is committed to renewing American politics, prosperity, and purpose in the Digital Age. We generate big ideas, bridge the gap between technology and policy, and curate broad public conversation. We combine the best of a policy research institute, technology laboratory, public forum, media platform, and a venture capital fund for ideas. We are a distinctive community of thinkers, writers, researchers, technologists, and community activists who believe deeply in the possibility of American renewal. Find out more at newamerica.org/our-story.

About the Cybersecurity Initiative: The Internet has connected us. Yet the policies and debates that surround the security of our networks are too often disconnected, disjointed, and stuck in an unsuccessful status quo.

Resurrect Your Old PC

Resurrect your old PC. Nostalgic for your old beige boxes? Don’t let them gather dust! Proprietary OSes force users to upgrade hardware much sooner than necessary: Neil Bothwick highlights some great ways to make your pensioned-off PCs earn their keep.

Hardware performance is constantly improving, and it is only natural to want the best, so we upgrade our system from time to time and leave the old ones behind, considering them obsolete. But you don’t usually need the latest and greatest; it was only a few years ago that people were running perfectly usable systems on 500MHz CPUs and drooling over the prospect that a 1GHz CPU might actually be available quite soon. I can imagine someone writing a similar article, ten years from now, about what to do with that slow, old 4GHz eight-core system that is now gathering dust. That’s what we aim to do here: show you how you can put that old hardware to good use instead of consigning it to the scrapheap.

So what are we talking about when we say older computers? The sort of spec that was popular around the turn of the century. OK, while that may be true, it does make it seem like we are talking about really old hardware. A typical entry-level machine from six or seven years ago would have had something like an 800MHz processor, Pentium 3 or similar, 128MB of RAM and a 20-30GB hard disk. The test rig used for testing most of the software we will discuss is actually slightly lower spec: it has a 700MHz Celeron processor, because that’s what I found in the pile of computer gear I never throw away in my loft, right next to my faithful old – but non-functioning – Amiga 4000.

Software Assurance

Software Security Assurance: State-of-the-Art Report (SOAR). July 31, 2007. Joint endeavor by the Information Assurance Technology Analysis Center (IATAC) with the Data and Analysis Center for Software (DACS). Distribution Statement A: Approved for public release; distribution is unlimited.

IATAC Authors: Karen Mercedes Goertzel, Theodore Winograd, Holly Lynne McKinley, Lyndon Oh, Michael Colon. DACS Authors: Thomas McGibbon, Elaine Fedchak, Robert Vienneau. Coordinating Editor: Karen Mercedes Goertzel. Copy Editors: Margo Goldman, Linda Billard, Carolyn Quinn. Creative Directors: Christina P. McNemar, K. Ahnie Jenkins. Art Director, Cover, and Book Design: Don Rowe. Production: Brad Whitford. Illustrations: Dustin Hurt, Brad Whitford.

About the Authors: Karen Mercedes Goertzel, Information Assurance Technology Analysis Center (IATAC). Karen Mercedes Goertzel is a subject matter expert in software security assurance and information assurance, particularly multilevel secure systems and cross-domain information sharing. She supports the Department of Homeland Security Software Assurance Program and the National Security Agency’s Center for Assured Software, and was lead technologist for 3 years on the Defense Information Systems Agency (DISA) Application Security Program. Ms. Goertzel is currently lead author of a report on the state-of-the-art in software security assurance, and has also led in the creation of state-of-the-art reports for the Department of Defense (DoD) on information assurance and computer network defense technologies and research.

Guide to Open Source Solutions

White paper: Guide to open source solutions, by Smile.

PREAMBLE. Smile is a company of engineers specialising in the implementation of open source solutions and the integration of systems relying on open source. Smile is a member of APRIL, the association for the promotion and defence of free software, and of Alliance Libre, PLOSS, and PLOSS RA, which are regional cluster associations of free software companies. Smile has 600 staff throughout the world, which makes it the largest company in Europe specialising in open source. Since approximately 2000, Smile has been actively monitoring developments in technology, which enables it to discover the most promising open source products and to qualify and assess them so as to offer its clients the most accomplished, robust and sustainable products.

This approach has led to a range of white papers covering various fields of application: content management (2004), portals (2005), business intelligence (2006), PHP frameworks (2007), virtualisation (2007), and electronic document management (2008), as well as PGIs/ERPs (2008). Among the works published in 2009, we would also cite “open source VPN’s”, “Firewall open source flow control”, and “Middleware”, within the framework of the “System and Infrastructure” collection. Each of these works presents a selection of the best open source solutions for the domain in question, their respective qualities, and operational feedback. As open source solutions continue to reach new domains, Smile will be there to help its clients benefit from them in a risk-free way. Smile is present in the European IT landscape as the integration architect of choice to support the largest companies in the adoption of the best open source solutions.

Debian Reference (Referência Debian)

Debian Reference, by Osamu Aoki. Copyright © 2013-2021 Osamu Aoki. This Debian Reference (version 2.85) (2021-09-17 09:11:56 UTC) is intended to provide an overview of the Debian system as a post-installation user's guide. It covers many aspects of system administration through shell-command examples for non-programmers. Written by Osamu Aoki, 17 September 2021.

Contents
1 GNU/Linux tutorials
1.1 Console basics
1.1.1 The shell command line
1.1.2 The shell prompt under GUI
1.1.3 The root account
1.1.4 The root shell command line
1.1.5 GUI system administration tools
1.1.6 Virtual consoles
1.1.7 How to leave the command line
1.1.8 How to shut down the system
1.1.9 Recovering a sane console
1.1.10 Additional package suggestions for the novice
1.1.11 An extra user account
1.1.12 Configuration

Ubuntu® 1.4Inux Bible

Ubuntu® 1.4inux Bible William von Hagen 111c10,ITENNIAL. 18072 @WILEY 2007 •ICIOATENNIAl. Wiley Publishing, Inc. Acknowledgments xxi Introduction xxiii Part 1: Getting Started with Ubuntu Linux Chapter 1: The Ubuntu Linux Project 3 Background 4 Why Use Linux 4 What Is a Linux Distribution? 5 Introducing Ubuntu Linux 6 The Ubuntu Manifesto 7 Ubuntu Linux Release Schedule 8 Ubuntu Update and Maintenance Commitments 9 Ubuntu and the Debian Project 9 Why Choose Ubuntu? 10 Installation Requirements 11 Supported System Types 12 Hardware Requirements 12 Time Requirements 12 Ubuntu CDs 13 Support for Ubuntu Linux 14 Community Support and Information 14 Documentation 17 Commercial Support for Ubuntu Linux 18 Getting More Information About Ubuntu 19 Summary 20 Chapter 2: Installing Ubuntu 21 Getting a 64-bit or PPC Desktop CD 22 Booting the Desktop CD 22 Installing Ubuntu Linux from the Desktop CD 24 Booting Ubuntu Linux 33 Booting Ubuntu Linux an Dual-Boot Systems 33 The First Time You Boot Ubuntu Linux 34 Test-Driving Ubuntu Linux 34 Expioring the Desktop CD's Examples Folder 34 Accessing Your Hard Drive from the Desktop CD 36 Using Desktop CD Persistence 41 Copying Files to Other Machines Over a Network 43 Installing Windows Programs from the Desktop CD 43 Summary 45 ix Contents Chapter 3: Installing Ubuntu on Special-Purpose Systems 47 Overview of Dual-Boot Systems 48 Your Computer's Boot Process 48 Configuring a System for Dual-Booting 49 Repartitioning an Existing Disk 49 Getting a Different Install CD 58 Booting from a Server or Altemate -

Course Unit – Network Administration, Prof. Eduardo Maroñas

SERVIÇO NACIONAL DE APRENDIZAGEM COMERCIAL, FACULDADE DE TECNOLOGIA SENAC PELOTAS. Course Unit – Network Administration. Prof. Eduardo Maroñas Monks. Lab Guide: Backup – Part II (Backup Managers).

Objective: Use and describe the operating characteristics of network backups.

Tools: Wireshark, VMware Player, PuTTY, OpenSSH, WinSCP, Apache Web Server, CentOS, Windows XP, FreeNAS, BackupPC, Clonezilla, MySQL, PostgreSQL.

Introduction: In this lab guide, several tools and operating systems are used to analyze how backup systems work. Backup is essential in network administration and should be applied wherever important data is used. Today, with the large volume of data, performing backups correctly is increasingly complex. Tools exist to help with this task and make the network administrator's life easier.

Tasks:
1) On the CentOS virtual machine, install the BackupPC backup manager by following these steps:
1. Create the /backups directory, which will be the repository for the backed-up data.
2. Install the packages:
   1. Perl-setuid: yum install perl-suidperl
   2. Archive-Zip: yum install perl-Archive-Zip.noarch
   3. File-RsyncP: yum install perl-File-RsyncP
   Note: use the Mussum proxy (export http_proxy="http://192.168.200.3:3128").
3. Download the BackupPC source code, available at http://192.168.200.3/emmonks/admredes4/BACKUP/BackupPC-3.3.0.tar.gz, to the /usr/src directory.
4. Create the backuppc user and give this user permissions on the /backups directory.
5. Create the /var/www/html/imagens directory to be the repository for BackupPC's images.
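The five preparation steps of the lab above can be sketched as a small Python script. This is only an illustration under stated assumptions: the function names `plan_backuppc_setup` and `run_plan` are hypothetical, the lab does not say which tools to use for the download or for granting permissions (`curl`, `useradd` and `chown -R` are assumed here), and the `-y` flag is added so that `yum` does not wait for confirmation. By default the script only prints the commands.

```python
import os
import shlex
import subprocess

# Values taken from the lab guide.
PROXY = "http://192.168.200.3:3128"
SOURCE_URL = "http://192.168.200.3/emmonks/admredes4/BACKUP/BackupPC-3.3.0.tar.gz"


def plan_backuppc_setup():
    """Return the preparation steps, in order, as a list of argv lists."""
    return [
        # Step 1: repository for the backed-up data.
        ["mkdir", "-p", "/backups"],
        # Step 2: Perl packages needed by BackupPC.
        ["yum", "install", "-y", "perl-suidperl"],
        ["yum", "install", "-y", "perl-Archive-Zip.noarch"],
        ["yum", "install", "-y", "perl-File-RsyncP"],
        # Step 3: source tarball into /usr/src (curl is an assumption).
        ["curl", "-o", "/usr/src/BackupPC-3.3.0.tar.gz", SOURCE_URL],
        # Step 4: dedicated user that owns /backups (chown is an assumption).
        ["useradd", "backuppc"],
        ["chown", "-R", "backuppc", "/backups"],
        # Step 5: image directory served by Apache.
        ["mkdir", "-p", "/var/www/html/imagens"],
    ]


def run_plan(plan, dry_run=True):
    """Print each command, or execute it (as root) when dry_run is False."""
    for argv in plan:
        if dry_run:
            print(shlex.join(argv))
            continue
        env = dict(os.environ)
        if argv[0] == "yum":
            # The lab's note asks for the proxy during package installation.
            env["http_proxy"] = PROXY
        subprocess.run(argv, check=True, env=env)


if __name__ == "__main__":
    run_plan(plan_backuppc_setup())
```

Keeping the plan as data, separate from its execution, lets the commands be reviewed before anything is changed on the virtual machine; calling `run_plan(plan_backuppc_setup(), dry_run=False)` as root would then execute them in order and stop at the first failure.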

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

Security Versus Security: Balancing Encryption, Privacy, and National Security

SECURITY VERSUS SECURITY: BALANCING ENCRYPTION, PRIVACY, AND NATIONAL SECURITY. Jackson Stein (TC 660H or TC 359T), Plan II Honors Program, The University of Texas at Austin, 05/04/2017. Supervising Professor: Robert Chesney, Director of Robert Strauss Center. Second Reader: Mark Sainsbury, Department of Philosophy.

ABSTRACT. Author: Jackson Stein. Title: Security vs. Security: Balancing Encryption, Data Privacy, and Security. Supervising Professors: Robert Chesney, Mark Sainsbury.

This paper analyzes the current debate over encryption policy. Through careful evaluation of possible solutions to ‘going dark’, as well as weighing the costs and benefits of each solution, we found exceptional access to information more harmful than helpful. Today, there seems to be no single leading answer to the going dark problem. Exceptional access to data and communications is a simple solution for a simple problem; going dark, however, is very complex and requires a multifaceted and refined solution. Widespread encryption forces those listening, whether the NSA, the FBI, foreign governments, criminals or terrorists, to be much more targeted. As for the going dark metaphor, it seems as though we are not entirely “going dark”, and yet we are not completely bright either. There are dark and bright spots coming and going across the technological landscape, battling in a perpetual technological arms race. The findings of this paper ultimately determine that no policy comes without some cost. That said, there are a number of ways in which law enforcement can track criminals and terrorists without weakening encryption, which we determine to be the best direction in any win-lose situation.

6.4.0-0 Release of SIMP, Which Is Compatible with Centos and Red Hat Enterprise Linux (RHEL)

SIMP Documentation. The SIMP Team. Sep 16, 2020.

Contents
1 Level of Knowledge
1.1 Quick Start
1.2 Changelogs
1.3 SIMP Getting Started Guide
1.4 SIMP User Guide
1.5 Contributing to SIMP
1.6 SIMP Security Concepts
1.7 SIMP Security Control Mapping
1.8 Vulnerability Supplement
1.9 Help
1.10 License
1.11 Contact
1.12 Glossary of Terms
Index

This is the documentation for the 6.4.0-0 release of SIMP, which is compatible with CentOS and Red Hat Enterprise Linux (RHEL). This guide will walk a user through the process of installing and managing a SIMP system. It also provides a mapping of security features to security requirements, which can be used to document a system’s security conformance.

Warning: Be EXTREMELY CAREFUL when performing copy/paste operations from this document! Different web browsers and operating systems may substitute incompatible quotes and/or line endings in your files.

The System Integrity Management Platform (SIMP) is an Open Source

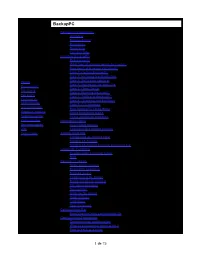

BackupPC

BackupPC documentation.

Contents
BackupPC Introduction: Overview; Backup basics; Resources; Road map; You can help.
Installing BackupPC: Requirements; What type of storage space do I need?; How much disk space do I need?; Step 1: Getting BackupPC; Step 2: Installing the distribution; Step 3: Setting up config.pl; Step 4: Setting up the hosts file; Step 5: Client Setup; Step 6: Running BackupPC; Step 7: Talking to BackupPC; Step 8: Checking email delivery; Step 9: CGI interface; How BackupPC Finds Hosts; Other installation topics; Fixing installation problems.
Restore functions: CGI restore options; Command-line restore options.
Archive functions: Configuring an Archive Host; Starting an Archive; Starting an Archive from the command line.
Other CGI Functions: Configuration and Host Editor; RSS.
BackupPC Design: Some design issues; BackupPC operation; Storage layout; Compressed file format; Rsync checksum caching; File name mangling; Special files; Attribute file format; Optimizations; Limitations; Security issues.
Configuration File: Modifying the main configuration file.
Configuration Parameters: General server configuration; What to backup and when to do it; How to backup a client; Email reminders, status and messages; CGI user interface configuration settings.
Version Numbers
Author
Copyright
Credits
License

BackupPC Introduction. This documentation describes BackupPC version 3.1.0, released on 25 Nov 2007.

Overview. BackupPC is a high-performance, enterprise-grade system for backing up Unix, Linux, WinXX, and MacOSX PCs, desktops and laptops to a server's disk. BackupPC is highly configurable and easy to install and maintain.