Big Data Storage Technologies: a Survey

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

OpenLDAP Slides

What's New in OpenLDAP
Howard Chu, CTO, Symas Corp / Chief Architect, OpenLDAP
FOSDEM'14

OpenLDAP Project
● Open source code project
● Founded 1998
● Three core team members
● A dozen or so contributors
● Feature releases every 12-18 months
● Maintenance releases roughly monthly

A Word About Symas
● Founded 1999
● Founders from Enterprise Software world
– Platinum Technology (Locus Computing)
– IBM
● Howard joined OpenLDAP in 1999
– One of the Core Team members
– Appointed Chief Architect January 2007
● No debt, no VC investments

Intro: Howard Chu
● Founder and CTO, Symas Corp.
● Developing Free/Open Source software since 1980s
– GNU compiler toolchain, e.g. "gmake -j", etc.
– Many other projects, check ohloh.net...
● Worked for NASA/JPL, wrote software for Space Shuttle, etc.

What's New
● Lightning Memory-Mapped Database (LMDB) and its knock-on effects
● Within OpenLDAP code
● Other projects
● New HyperDex clustered backend
● New Samba4/AD integration work
● Other features
● What's missing

LMDB
● Introduced at LDAPCon 2011
● Full ACID transactions
● MVCC, readers and writers don't block each other
● Ultra-compact, compiles to under 32KB
● Memory-mapped, lightning fast zero-copy reads
● Much greater CPU and memory efficiency
● Much simpler configuration

LMDB Impact
● Within OpenLDAP
● Revealed other frontend bottlenecks that were hidden by BerkeleyDB-based backends
● Addressed in OpenLDAP 2.5
● Thread pool enhanced, support multiple work queues to reduce mutex contention
● Connection manager enhanced, simplify write synchronization

OpenLDAP

LIST of NOSQL DATABASES [Currently 150]

Your Ultimate Guide to the Non-Relational Universe! [the best selected NoSQL link archive on the web] ...never miss a conceptual article again... News Feed covering all changes here!

NoSQL DEFINITION: Next-generation databases mostly addressing some of these points: being non-relational, distributed, open-source and horizontally scalable. The original intention was modern web-scale databases. The movement began in early 2009 and is growing rapidly. Often more characteristics apply, such as: schema-free, easy replication support, simple API, eventually consistent / BASE (not ACID), a huge amount of data, and more. So the misleading term "nosql" (which the community now mostly translates as "not only sql") should be seen as an alias for something like the definition above. [based on 7 sources, 14 constructive feedback emails (thanks!) and 1 disliking comment. Agree / Disagree? Tell me so! By the way: this is a strong definition and it has been out there since 2009!]

LIST OF NOSQL DATABASES [currently 150]

Core NoSQL Systems: [Mostly originated out of a Web 2.0 need]

Wide Column Store / Column Families

Hadoop / HBase: API: Java / any writer, Protocol: any write call, Query Method: MapReduce Java / any exec, Replication: HDFS Replication, Written in: Java, Concurrency: ?, Misc: Links: 3 Books [1, 2, 3]

Cassandra: massively scalable, partitioned row store, masterless architecture, linear scale performance, no single points of failure, read/write support across multiple data centers & cloud availability zones. API / Query Method: CQL and Thrift, Replication: peer-to-peer, Written in: Java, Concurrency: tunable consistency, Misc: built-in data compression, MapReduce support, primary/secondary indexes, security features.

Apache Cassandra from the Ground Up

Apache Cassandra From The Ground Up
Akhil Mehra

This book is for sale at http://leanpub.com/apachecassandrafromthegroundup. This version was published on 2017-09-18. This is a Leanpub book. Leanpub empowers authors and publishers with the Lean Publishing process. Lean Publishing is the act of publishing an in-progress ebook using lightweight tools and many iterations to get reader feedback, pivot until you have the right book and build traction once you do. © 2015 - 2017 Akhil Mehra

Contents
An Introduction To NoSQL & Apache Cassandra
Database Evolution
Scaling
NoSQL Database
Key Foundational Concepts
Apache Cassandra

An Introduction To NoSQL & Apache Cassandra

Welcome to Apache Cassandra from The Ground Up. The primary goal of this book is to help developers and database administrators understand Apache Cassandra. We start off this chapter by exploring database history. An overview of database history lays the foundation for understanding the various types of databases currently available. This historical context enables a good understanding of the NoSQL ecosystem and Apache Cassandra’s place in this ecosystem. The chapter concludes by introducing Apache Cassandra, its key features and applicable use cases. This context is invaluable for evaluating and getting to grips with Apache Cassandra.

Database Evolution

Those who are unaware of history are destined to repeat it

Let’s start with the basics. What is a database? According to Wikipedia, a database is an organized collection of data. Purely mathematical calculations were the primary use of early digital computers. Using computers for mathematical calculations was short-lived. Applications grew in complexity and needed to read, write and manipulate data.

The Rationale for Relational History of DB Models Table of Contents

The Rationale for Relational
History of DB models

Table of contents
• Relational vs SQL vs NoSQL vs modern marketing
• Why relational still matters
• Further reading
• OMG – Chocolate Fish!!!!

Hierarchical databases
“A hierarchical database model is a data model in which the data is organized into a tree-like structure. The data is stored as records which are connected to one another through links.”
“The hierarchical structure is used primarily today for storing geographic information and file systems.”
Source: https://en.wikipedia.org/wiki/Hierarchical_database_model

Network databases
“Its distinguishing feature is that the schema, viewed as a graph in which object types are nodes and relationship types are arcs, is not restricted to being a hierarchy or lattice.”
“Until the early 1980s the performance benefits of the low-level navigational interfaces offered by hierarchical and network databases were persuasive for many large-scale applications, but as hardware became faster, the extra productivity and flexibility of the relational model led to the gradual obsolescence of the network model in corporate enterprise usage.”
Source: https://en.wikipedia.org/wiki/Network_model

Relational algebra and model

Relational algebra
• Set theory (union, intersect, minus…)
• Joins (Cartesian, natural, semi, outer, anti…)
• Aggregation
Source: https://en.wikipedia.org/wiki/Relational_algebra

Relational model
“The purpose of the relational model is to provide a declarative method for specifying data and queries: users directly state what information the database contains and what information they want from it, and let the database management system software take care of describing data structures for storing the data and retrieval procedures for answering queries.”
Key formal modelling concepts: normal forms (e.g.

Survey on NoSQL Database

Survey on NoSQL Database

Jing Han, Haihong E, Guan Le (PCN&CAD Center, Beijing University of Posts and Telecommunications, Beijing, 100876, China); Jian Du (Super Instruments Corporation, Beijing, 100876, China)

Abstract

With the development of the Internet and cloud computing, databases need to be able to store and process big data effectively and to deliver high performance when reading and writing, so the traditional relational database is facing many new challenges. Especially in large-scale and high-concurrency applications, such as search engines and SNS, using a relational database to store and query dynamic user data has proved inadequate. In this context, NoSQL databases were created. This paper describes the background, basic characteristics and data model of NoSQL. In addition, this paper classifies NoSQL databases according to the CAP theorem. Finally, the mainstream NoSQL databases are separately described in detail, and some properties are extracted to help enterprises choose a NoSQL database.

... have emerged, which place more demands on database technology, mainly in the following aspects [1][2]:

• High-concurrency reading and writing with low latency. Databases are required to support highly concurrent reads and writes with low latency and, in order to greatly enhance customer satisfaction, to help applications react quickly enough.
• Efficient big data storage and access. Large applications, such as SNS and search engines, need databases that provide efficient data storage (PB level) and can respond to the needs of millions of users.
• High scalability and high availability. With the increasing number of concurrent requests and data, the database needs to be able to support easy

VS-1046 Certified Apache Cassandra Sample Material

Apache Cassandra Sample Material VS-1046

1. INTRODUCTION TO NOSQL

NoSQL databases try to offer certain functionality that more traditional relational database management systems do not. Whether it is for holding simple key-value pairs for shorter lengths of time for caching purposes, or keeping unstructured collections (e.g. collections) of data that could not be easily dealt with using relational databases and the structured query language (SQL), they are here to help.

1.1. NoSQL Basics

A NoSQL (originally referring to "non SQL", "non relational" or "not only SQL") database provides a mechanism for storage and retrieval of data which is modeled in means other than the tabular relations used in relational databases. Such databases have existed since the late 1960s, but did not obtain the "NoSQL" moniker until a surge of popularity in the early twenty-first century, triggered by the needs of Web 2.0 companies such as Facebook, Google, and Amazon.com. NoSQL databases are increasingly used in big data and real-time web applications. NoSQL systems are also sometimes called "Not only SQL" to emphasize that they may support SQL-like query languages. Motivations for this approach include: simplicity of design, simpler "horizontal" scaling to clusters of machines (which is a problem for relational databases), and finer control over availability. The data structures used by NoSQL databases (e.g. key-value, wide column, graph, or document) are different from those used by default in relational databases, making some operations faster in NoSQL. The particular suitability of a given NoSQL database depends on the problem it must solve.

RethinkDB as a High Performance NoSQL Engine for Real-Time Applications

International Journal of Computing and Business Research (IJCBR), ISSN (Online): 2229-6166, International Manuscript ID: 22296166V8I1201804, Volume 8 Issue 1, January - February 2018

RethinkDB as a High Performance NoSQL Engine for Real-Time Applications

Inderpreet Boparai, Assistant Professor, Department of Computer Applications, Chandigarh Group of Colleges (CGC), Landran, Punjab, India

Abstract

With the increasing traffic of heterogeneous as well as unstructured data on the World Wide Web (WWW), traditional database engines are having enormous issues related to schema management, concurrency, database integrity, security, parallel read-write operations, resource optimization and many others. Nowadays, real-time applications receive huge traffic from different channels including Social Media, Mail Groups, Satellites, Internet of Things (IoT) and many others. This traffic is generally unstructured and huge, and cannot be handled by classical Relational Database Management Systems (RDBMS). To cope with such issues of Big Data, which include Velocity, Volume and Variety (the 3Vs of Big Data), Not Only SQL (NoSQL) came into existence; it is meant for handling unstructured and heterogeneous data with higher performance. This manuscript underlines the assorted perspectives on the working environment of RethinkDB as a high performance NoSQL database engine in the cloud as well as in other working environments.

Keywords: Cloud Database, NoSQL Database, Real-Time Database, RethinkDB

Introduction

There are different types of NoSQL databases [1] in diversified categories for specific applications so that a higher degree of performance and accuracy can be achieved [2].

Taxonomy: NoSQL Database, Document Store: ArangoDB, Couchbase, BaseX, Clusterpoint, CouchDB, DocumentDB, MarkLogic, IBM Domino, MongoDB, Qizx, RethinkDB

Registered with Council of Scientific and Industrial Research, Govt.

A Practical Guide to Big Data: Opportunities, Challenges & Tools

A PRACTICAL GUIDE TO BIG DATA: Opportunities, Challenges & Tools
3DS.COM/EXALEAD
© 2012 Dassault Systèmes

“Give me a lever long enough and a fulcrum on which to place it, and I shall move the world.” Archimedes

ABOUT THE AUTHOR

Laura Wilber is the former founder and CEO of California-based AVENCOM, Inc., a software development company specializing in online databases and database-driven Internet applications (acquired by Red Door Interactive in 2004), and she served as VP of Marketing for Kintera, Inc., a provider of SaaS software to the nonprofit and government sectors. She also developed courtroom tutorials for technology-related intellectual property litigation for Legal Arts Multimedia, LLC. Ms. Wilber earned an M.A. from the University of Maryland, where she was also a candidate in the PhD program, before joining the federal systems engineering division of Bell Atlantic (now Verizon) in Washington, DC. Ms. Wilber currently works as solutions analyst at EXALEAD. Prior to joining EXALEAD, Ms. Wilber taught Business Process Reengineering, Management of Information Systems and E-Commerce at ISG (l’Institut Supérieur de Gestion) in Paris. She and her EXALEAD colleague Gregory Grefenstette recently co-authored Search-Based Applications: At the Confluence of Search and Database Technologies, published in 2011 by Morgan & Claypool Publishers.

ABOUT EXALEAD

Founded in 2000 by search engine pioneers, EXALEAD® is the leading Search-Based Application platform provider to business and government. EXALEAD’s worldwide client base includes leading companies such as PricewaterhouseCoopers, ViaMichelin, GEFCO, the World Bank and Sanofi Aventis R&D, and more than 100 million unique users a month use EXALEAD’s technology for search and information access.

Performance Comparison of the Most Popular Relational and Non-Relational Database Management Systems

Master of Science in Software Engineering, February 2018

Performance comparison of the most popular relational and non-relational database management systems

Kamil Kolonko

Faculty of Computing, Blekinge Institute of Technology, SE-371 79 Karlskrona, Sweden

This thesis is submitted to the Faculty of Computing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Software Engineering. The thesis is equivalent to 20 weeks of full time studies.

Contact Information:
Author(s): Kamil Kolonko
External advisor: IF APPLICABLE
University advisor: Javier González-Huerta, Department of Software Engineering
Faculty of Computing, Blekinge Institute of Technology, SE-371 79 Karlskrona, Sweden
Internet: www.bth.se
Phone: +46 455 38 50 00
Fax: +46 455 38 50 57

ABSTRACT

Context. A database is an essential part of any software product. With an emphasis on application performance, database efficiency becomes one of the key factors to analyze in the process of technology selection. With the development of new data models and storage technologies, the necessity for a comparison between relational and non-relational database engines is especially evident in the software engineering domain.

Objectives. This thesis investigates current knowledge on database performance measurement methods and the popularity of relational and non-relational database engines, defines characteristics of databases, approximates their average values and compares the performance of two selected database engines.

Methods. In this study a number of research methods are used, including a literature review, a review of Internet sources, and an experiment. Literature datasets used in the research incorporate over 100 sources including IEEE Xplore and ACM Digital Library.

Storage Solutions for Big Data Systems: a Qualitative Study and Comparison

Storage Solutions for Big Data Systems: A Qualitative Study and Comparison

Samiya Khan (a), Xiufeng Liu (b), Syed Arshad Ali (a), Mansaf Alam (a)
(a) Jamia Millia Islamia, New Delhi, India
(b) Technical University of Denmark, Denmark

Highlights
• Provides a classification of NoSQL solutions on the basis of their supported data model, which may be data-oriented, graph, key-value or wide-column.
• Performs feature analysis of 80 NoSQL solutions in view of technology selection criteria for big data systems, along with a cumulative evaluation of appropriate and inappropriate use cases for each data model.
• Classifies big data file formats into five categories, namely text-based, row-based, column-based, in-memory and data storage services.
• Compares available data file formats, analyzing benefits, shortcomings and use cases for each data file format.
• Evaluates the challenges associated with the shift of next-generation big data storage towards decentralized storage and blockchain technologies.

Abstract

The development of big data systems is full of challenges in view of the variety of application areas and domains that this technology promises to serve. Typically, fundamental design decisions involved in the design of big data systems include choosing appropriate storage and computing infrastructures. In this age of heterogeneous systems that integrate different technologies for an optimized solution to a specific real-world problem, big data systems are not an exception to any such rule. As far as the storage aspect of any big data system is concerned, the primary facet in this regard is a storage infrastructure, and NoSQL seems to be the right technology to fulfill its requirements. However, every big data application has variable data characteristics and thus the corresponding data fits into a different data model.

The NoSQL RDBMS

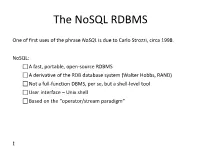

The NoSQL RDBMS

One of the first uses of the phrase NoSQL is due to Carlo Strozzi, circa 1998.
NoSQL: a fast, portable, open-source RDBMS
A derivative of the RDB database system (Walter Hobbs, RAND)
Not a full-function DBMS, per se, but a shell-level tool
User interface: Unix shell
Based on the “operator/stream paradigm”

NoSQL Today

More recently:
• The term has taken on different meanings
• One common interpretation is “not only SQL”

Most modern NoSQL systems diverge from the relational model or standard RDBMS functionality:
The data model: relations, tuples, attributes, domains, normalization vs. documents, graphs, key/values
The query model: relational algebra, tuple calculus vs. graph traversal, text search, map/reduce
The implementation: rigid schemas vs. flexible schemas (schema-less); ACID compliance vs. BASE

In that sense, NoSQL today is more commonly meant to be something like “non-relational”.

NoSQL Today (a partial, unrefined list)

HBase Cassandra Hypertable Accumulo Amazon SimpleDB SciDB Stratosphere flare Cloudata BigTable QD Technology SmartFocus KDI Alterian Cloudera C-Store Vertica Qbase–MetaCarta OpenNeptune HPCC MongoDB CouchDB Clusterpoint Server Terrastore Jackrabbit OrientDB Perservere CoudKit Djondb SchemaFreeDB SDB JasDB RaptorDB ThruDB RavenDB DynamoDB Azure Table Storage Couchbase Server Riak LevelDB Chordless GenieDB Scalaris Tokyo Kyoto Cabinet Tyrant Scalien Berkeley DB Voldemort Dynomite KAI MemcacheDB Faircom C-Tree HamsterDB STSdb Tarantool/Box Maxtable Pincaster RaptorDB TIBCO Active Spaces allegro-C nessDB HyperDex

Big Data Software

Big Data Software
Spring 2017, Bloomington, Indiana
Editor: Gregor von Laszewski, Department of Intelligent Systems Engineering, Indiana University

Contents
1. S17-ER-1001 Berkeley DB (Saber Sheybani)
2. S17-IO-3000 Apache Ranger (Avadhoot Agasti)
3. S17-IO-3005 Amazon Kinesis (Abhishek Gupta)
4. S17-IO-3008 Google Cloud DNS (Vishwanath Kodre)
5. S17-IO-3010 Robot Operating System (ROS) (Matthew Lawson)
6. S17-IO-3011 Apache Crunch (Scott McClary)
7. S17-IO-3012 Apache MRQL - MapReduce Query Language (Mark McCombe)
8. S17-IO-3013 Lightning Memory-Mapped Database (LMDB) (Leonard Mwangi)
9. S17-IO-3014 SciDB: An Array Database (Piyush Rai)
10. S17-IO-3015 Cassandra (Sabyasachi Roy Choudhury)
11. S17-IO-3016 Apache Derby (Ribka Rufael)
12. S17-IO-3017 Facebook Tao (Nandita Sathe)
13. S17-IO-3019 InCommon (Michael Smith)
14. S17-IO-3020 Hadoop YARN (Milind Suryawanshi, Gregor von Laszewski)
15. S17-IO-3021 Apache Tez - Application Data processing Framework (Abhijt Thakre)
16. S17-IO-3022 Deployment Model of Juju (Sunanda Unni)
17. S17-IO-3023 AWS Lambda (Karthick Venkatesan)
18. S17-IO-3024 Not Submitted (Ashok Vuppada)
19. S17-IR-2001 HUBzero: A Platform For Scientific Collaboration (Niteesh Kumar Akurati)
20. S17-IR-2002 Apache Flink: Stream and Batch Processing (Jimmy Ardiansyah)
21. S17-IR-2004 Jelastic (Ajit Balaga)
22. S17-IR-2006 An Overview of Apache Spark (Snehal Chemburkar, Rahul Raghatate)
23. S17-IR-2008 An overview of Apache THRIFT and its architecture (Karthik Anbazhagan)
24. S17-IR-2011 Hyper-V Anurag