Performance Optimization and Tuning Techniques for IBM Power Systems Processors Including IBM POWER8

Total pages: 16

File type: PDF, Size: 1020 Kb


Recommended publications


Accelerating HPL Using the Intel Xeon Phi 7120P Coprocessors

Authors: Saeed Iqbal and Deepthi Cherlopalle. The Intel Xeon Phi series can be used to accelerate HPC applications in the C4130. The highly parallel architecture of the Phi coprocessors can boost parallel applications. These coprocessors work seamlessly with the standard Xeon E5 processor series to provide additional parallel hardware. A key benefit of the Xeon Phi series is that it does not require redesigning the application; only compiler directives are needed to use the Xeon Phi coprocessor. Fundamentally, the Intel Xeon Phi series are many-core parallel processors, with each core having a dedicated L2 cache. The cores are connected through a bidirectional ring interconnect. Intel offers a complete set of development, performance monitoring and tuning tools through its Parallel Studio and VTune. The goal is to enable HPC users to take advantage of the parallel hardware with minimal changes to the code. The Xeon Phi has two modes of operation: offload mode and native mode. In offload mode, designated parts of the application are “offloaded” to the Xeon Phi, if one is available in the server. Required code and data are copied from the host to the coprocessor, processing is done in parallel on the Phi coprocessor, and results move back to the host. There are two kinds of offload mode: non-shared and virtual-shared memory. Each offers a different level of user control over data movement to and from the coprocessor and incurs different types of overhead. In native mode, the application runs on both the host and the Xeon Phi simultaneously, and the two communicate required data between themselves as needed.

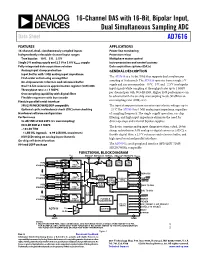

16-Channel DAS with 16-Bit, Bipolar Input, Dual Simultaneous Sampling

Data Sheet AD7616

FEATURES: 16-channel, dual, simultaneously sampled inputs; independently selectable channel input ranges; true bipolar: ±10 V, ±5 V, ±2.5 V; single 5 V analog supply and 2.3 V to 3.6 V VDRIVE supply; fully integrated data acquisition solution; analog input clamp protection; input buffer with 1 MΩ analog input impedance; first-order antialiasing analog filter; on-chip accurate reference and reference buffer; dual 16-bit successive approximation register (SAR) ADC; throughput rate: 2 × 1 MSPS; oversampling capability with digital filter; flexible sequencer with burst mode; flexible parallel/serial interface; SPI/QSPI/MICROWIRE/DSP compatible; optional cyclic redundancy check (CRC) error checking; hardware/software configuration; performance: 92 dB SNR at 500 kSPS (2× oversampling)

APPLICATIONS: Power line monitoring; protective relays; multiphase motor control; instrumentation and control systems; data acquisition systems (DASs)

GENERAL DESCRIPTION: The AD7616 is a 16-bit DAS that supports dual simultaneous sampling of 16 channels. The AD7616 operates from a single 5 V supply and can accommodate ±10 V, ±5 V, and ±2.5 V true bipolar input signals while sampling at throughput rates up to 1 MSPS per channel pair with 90.5 dB SNR. Higher SNR performance can be achieved with the on-chip oversampling mode (92 dB for an oversampling ratio (OSR) of 2). The input clamp protection circuitry can tolerate voltages up to ±21 V. The AD7616 has 1 MΩ analog input impedance, regardless of sampling frequency. The single-supply operation, on-chip filtering, and high input impedance eliminate the need for driver op amps and external bipolar supplies.

VxWorks Architecture Supplement, 6.2

VxWorks® ARCHITECTURE SUPPLEMENT 6.2. Copyright © 2005 Wind River Systems, Inc. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means without the prior written permission of Wind River Systems, Inc. Wind River, the Wind River logo, Tornado, and VxWorks are registered trademarks of Wind River Systems, Inc. Any third-party trademarks referenced are the property of their respective owners. For further information regarding Wind River trademarks, please see: http://www.windriver.com/company/terms/trademark.html This product may include software licensed to Wind River by third parties. Relevant notices (if any) are provided in your product installation at the following location: installDir/product_name/3rd_party_licensor_notice.pdf. Wind River may refer to third-party documentation by listing publications or providing links to third-party Web sites for informational purposes. Wind River accepts no responsibility for the information provided in such third-party documentation. Corporate Headquarters: Wind River Systems, Inc., 500 Wind River Way, Alameda, CA 94501-1153, U.S.A. Toll free (U.S.): (800) 545-WIND; telephone: (510) 748-4100; facsimile: (510) 749-2010. For additional contact information, please visit the Wind River URL: http://www.windriver.com For information on how to contact Customer Support, please visit the following URL: http://www.windriver.com/support VxWorks Architecture Supplement, 6.2. 11 Oct 05. Part #: DOC-15660-ND-00. Contents: 1 Introduction

Intel Cirrascale and Petrobras Case Study

Case Study: Intel® Xeon Phi™ Coprocessor; Intel® Xeon® Processor E5 Family; Big Data Analytics; High-Performance Computing; Energy

Accelerating Energy Exploration with Intel® Xeon Phi™ Coprocessors. Cirrascale delivers scalable performance by combining its innovative PCIe switch riser with Intel® processors and coprocessors.

To find new oil and gas reservoirs, organizations are focusing exploration in the deep sea and in complex geological formations. As energy companies such as Petrobras work to locate and map those reservoirs, they need powerful IT resources that can process multiple iterations of seismic models and quickly deliver precise results. IT solution provider Cirrascale began building systems with Intel® Xeon Phi™ coprocessors to provide the scalable performance Petrobras and other customers need while holding down costs.

Challenges
• Enable deep-sea exploration. Improve reservoir mapping accuracy with detailed seismic processing.
• Accelerate performance of seismic applications. Speed time to results while controlling costs.
• Improve scalability. Enable server performance and density to scale as data volumes grow and workloads become more demanding.

Solution
• Custom Cirrascale servers with Intel Xeon Phi coprocessors. Employ new compute blades with the Intel® Xeon® processor E5 family and Intel Xeon Phi coprocessors. Cirrascale uses custom PCIe switch risers for fast, peer-to-peer communication among coprocessors.

Technology Results
• Linear scaling. Performance increases linearly as Intel Xeon Phi coprocessors are added to the system.
• Simplified development model. Developers no longer need to spend time optimizing data placement.

Business Value
• Faster, better analysis. More detailed and accurate modeling in less time improves oil and gas exploration.

“Working together, the Intel® Xeon® processors and Intel® Xeon Phi™ coprocessors help HPC applications shed

SIMD Extensions

PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 12 May 2012 17:14:46 UTC

Contents. Articles: SIMD; MMX (instruction set); 3DNow!; Streaming SIMD Extensions; SSE2; SSE3; SSSE3; SSE4; SSE5; Advanced Vector Extensions; CVT16 instruction set; XOP instruction set. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License.

SIMD

Flynn's taxonomy: single instruction, single data (SISD); multiple instruction, single data (MISD); single instruction, multiple data (SIMD); multiple instruction, multiple data (MIMD).

Single instruction, multiple data (SIMD) is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data simultaneously. Thus, such machines exploit data-level parallelism.

History

The first use of SIMD instructions was in vector supercomputers of the early 1970s such as the CDC Star-100 and the Texas Instruments ASC, which could operate on a vector of data with a single instruction. Vector processing was especially popularized by Cray in the 1970s and 1980s. Vector-processing architectures are now considered separate from SIMD machines, based on the fact that vector machines processed the vectors one word at a time through pipelined processors (though still based on a single instruction), whereas modern SIMD machines process all elements of the vector simultaneously.[1] The first era of modern SIMD machines was characterized by massively parallel processing-style supercomputers such as the Thinking Machines CM-1 and CM-2. These machines had many limited-functionality processors that would work in parallel.

IBM Power Systems Solution Edition for Scale-Out Cloud

IBM Systems and Technology Solution Brief. IBM Power Systems and Storage Solution Edition for Scale-Out Cloud: Open source, Linux solution for scale-out data virtualization and cloud

Highlights
• Allows open infrastructures to scale out intelligently, with less hardware, power and cooling requirements and better economics, using over twice the bandwidth from previous generations
• Built-in data virtualization delivers seamless storage management from a single control point with no impact to applications
• Flexibility, agility and interoperability with open source, community-driven virtualization and a single pane of glass for heterogeneous cloud management

Cloud computing is no longer a question of “if” for IT organizations, but rather one of when, how and for which workloads. Cloud is widely understood to be an IT delivery model that can improve IT asset utilization, flexibility and responsiveness while reducing complexity and lowering costs. With these benefits come many complexities which need to be considered as organizations define and implement a cloud delivery strategy. Some technology options can hinder the efficiency and cost-saving potential of the cloud by impeding interoperability, hampering workload performance, exposing security vulnerabilities and limiting scalability.

Building on the performance-advantaged IBM POWER8™ architecture, the Power Systems™ and Storage Solution Edition for Scale-Out Cloud provides a superior cost-effective platform with open source PowerKVM hypervisor, powerful data virtualized with IBM Storwize® V7000, and OpenStack-based cloud management for a single pane of glass cloud management. The Solution Edition reduces the timeframe for infrastructure deployments from months to days with integrated infrastructure and automated provisioning of virtualized resources. It ensures optimal cost effectiveness with Power scale-out systems, automated Easy Tier® for flash and disk storage and Real-Time Compression™ that can store up to 5x as much data in the same physical space.

Power Management 24

The embedded Pentium® processor family implements Intel’s System Management Mode (SMM) architecture. This chapter describes the hardware interface to SMM and Clock Control.

24.1 Power Management Features

• System Management Interrupt can be delivered through the SMI# signal or through the local APIC using the SMI# message, which enhances the SMI interface, and provides for SMI delivery in APIC-based Pentium processor dual processing systems.
• In dual processing systems, SMIACT# from the bus master (MRM) behaves differently than in uniprocessor systems. If the LRM processor is the processor in SMM mode, SMIACT# will be inactive and remain so until that processor becomes the MRM.
• The Pentium processor is capable of supporting an SMM I/O instruction restart. This feature is automatically disabled following RESET. To enable the I/O instruction restart feature, set bit 9 of the TR12 register to “1”.
• The Pentium processor default SMM revision identifier has a value of 2 when the SMM I/O instruction restart feature is enabled.
• SMI# is NOT recognized by the processor in the shutdown state.

24.2 System Management Interrupt Processing

The system interrupts the normal program execution and invokes SMM by generating a System Management Interrupt (SMI#) to the processor. The processor will service the SMI# by executing the following sequence. See Figure 24-1.

1. Wait for all pending bus cycles to complete and EWBE# to go active.
2. The processor asserts the SMIACT# signal while in SMM indicating to the system that it should enable the SMRAM.
3. The processor saves its state (context) to SMRAM, starting at address location SMBASE + 0FFFFH, proceeding downward in a stack-like fashion.
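The address and bit arithmetic in this excerpt can be illustrated with a minimal sketch. It only models the numbers: it does not touch hardware, and writing TR12 on a real processor requires privileged code that is not shown. The function names are hypothetical, and the example SMBASE value of 0x30000 is an assumption chosen for illustration; the excerpt does not state it.

```python
# Hypothetical helpers modelling the arithmetic described in the excerpt.
# ASSUMPTION: 0x30000 is only an example SMBASE value, not taken from the excerpt.
EXAMPLE_SMBASE = 0x30000

IO_RESTART_BIT = 9  # the excerpt says bit 9 of TR12 enables I/O instruction restart


def state_save_top(smbase: int) -> int:
    """Highest address of the state save, SMBASE + 0FFFFH."""
    return smbase + 0xFFFF


def descending_addresses(smbase: int, count: int) -> list[int]:
    """First `count` byte addresses, proceeding downward in a stack-like fashion."""
    top = state_save_top(smbase)
    return [top - i for i in range(count)]


def with_io_restart_enabled(tr12_value: int) -> int:
    """Return a TR12 value with bit 9 set to 1, leaving other bits unchanged."""
    return tr12_value | (1 << IO_RESTART_BIT)


if __name__ == "__main__":
    print(hex(state_save_top(EXAMPLE_SMBASE)))
    print([hex(a) for a in descending_addresses(EXAMPLE_SMBASE, 3)])
    print(hex(with_io_restart_enabled(0x0)))
```

Setting the bit with a bitwise OR keeps every other TR12 field as it was, which is the usual way to change a single control bit in a model like this.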

Robust Architectural Support for Transactional Memory in the Power Architecture

Authors: Harold W. Cain (IBM Research, Yorktown Heights, NY, USA); Brad Frey (IBM STG, Austin, TX, USA); Derek Williams (IBM STG, Austin, TX, USA); Maged M. Michael (IBM Research, Yorktown Heights, NY, USA); Cathy May (IBM Research (retired), Yorktown Heights, NY, USA); Hung Le (IBM STG, Austin, TX, USA)

ABSTRACT

On the twentieth anniversary of the original publication [10], following ten years of intense activity in the research literature, hardware support for transactional memory (TM) has finally become a commercial reality, with HTM-enabled chips currently or soon-to-be available from many hardware vendors. In this paper we describe architectural support for TM added to a future version of the Power ISA™. Two imperatives drove the development: the desire to complement our weakly-consistent memory model with a more friendly interface to simplify the development and porting of multithreaded applications, and the need for robustness beyond that of some early implementations.

[...] in current p795 systems, with 8 TB of DRAM), as well as strengths in RAS that differentiate it in the market, adding TM must not compromise any of these virtues. A robust system is one that is sturdy in construction, a trait that does not usually come to mind in respect to HTM systems. We structured TM to work in harmony with features that support the architecture's scalability. Our goal has been to provide a comprehensive programming environment including support for simple system calls and debug aids, while providing a robust (in the sense of "no surprises") execution environment with reasonably consistent performance and without unexpected transaction failures. TM must be usable throughout the system stack: in hypervisors, oper-

The CLASP Application Security Process

Secure Software, Inc. Copyright (c) 2005, Secure Software, Inc.

TABLE OF CONTENTS

CHAPTER 1 Introduction: CLASP Status; An Activity-Centric Approach; The CLASP Implementation Guide; The Root-Cause Database; Supporting Material

CHAPTER 2 Implementation Guide: The CLASP Activities; Institute security awareness program; Monitor security metrics; Specify operational environment; Identify global security policy; Identify resources and trust boundaries; Identify user roles and resource capabilities; Document security-relevant requirements; Detail misuse cases; Identify attack surface; Apply security principles to design; Research and assess security posture of technology solutions; Annotate class designs with security properties; Specify database security configuration; Perform security analysis of system requirements and design (threat modeling); Integrate security analysis into source management process; Implement interface contracts; Implement and elaborate resource policies and security technologies; Address reported security issues; Perform source-level security review; Identify, implement and perform security tests; Verify security attributes of resources; Perform code signing; Build operational security guide; Manage security issue disclosure process

Developing a Process Engineering Plan: Business objectives; Process

Power Management Using FPGA Architectural Features Abu Eghan, Principal Engineer Xilinx Inc

Agenda
• Introduction
  – Impact of Technology Node Adoption
  – Programmability & FPGA Expanding Application Space
  – Review of FPGA Power characteristics
• Areas for power consideration
  – Architecture Features, Silicon design & Fabrication: now and future
  – Power & Package choices
  – Software & Implementation of Features
  – The end-user choices & Enablers
• Thermal Management
  – Enabling tools
• Summary

Slide 2, 2008 MEPTEC Symposium “The Heat is On”, Abu Eghan, Xilinx Inc

Technology Node Adoption in FPGA
• New technology node adoption & level of integration:
  – Opportunities at 90nm, 65nm and beyond. FPGAs at leading edge of node adoption.
    • More programmable logic arrays
    • Higher clock speed capability and higher performance
    • Increased adoption of embedded blocks: processors, SERDES, BRAMs, DCM, Xtreme DSP, Ethernet MAC, etc.
  – Impact: general and may not be unique to FPGA
    • Increased need to manage leakage current and static power
    • Heat flux (watts/cm2) trend is generally up and can be non-uniform.
    • Potentially higher dynamic power as transistor counts soar.
• Power challenges, shared with industry
  – Reliability limitation & lower operating temperatures
  – Performance & cost trade-offs
  – Lower thermal budgets
  – Battery life expectancy challenges

FPGA-101: FPGA Terms
• FPGA: Field Programmable Gate Arrays
• Configurable Logic Blocks: used to implement a wide range of arbitrary digital

IBM Power® Systems for SAS® Empowers Advanced Analytics Harry Seifert, Laurent Montaron, IBM Corporation

Paper 4695-2020

ABSTRACT

Throughout more than 40 years of partnership between IBM and SAS®, clients have benefited from the added value that IBM’s infrastructure platforms bring to deploying SAS analytics and, now, SAS Viya’s evolution of modern analytics. IBM Power® Systems and IBM Storage empower SAS environments with infrastructure that does not make tradeoffs among performance, cost, and reliability. The unified solution stack, comprising server, storage, and services, reduces compute time, controls costs, and maximizes the resilience of the SAS environment with ultra-high bandwidth and highest availability.

INTRODUCTION

We will explore how to deploy SAS on IBM Power Systems platforms and unleash the full potential of the infrastructure, to reduce deployment risk, maximize flexibility and accelerate insights. We will start by reviewing IBM and SAS’s technology relationship and the current state of SAS products on IBM Power Systems. Then we will look at some of the infrastructure options to deploy SAS 9.4 on IBM Power Systems and IBM Storage, while maximizing resiliency and throughput by leveraging best practices. Next, we will look at SAS Viya, which introduces changes to the underlying infrastructure requirements while remaining able to be deployed alongside a traditional SAS 9.4 operation. We’ll explore the various deployment modes available. Finally, we’ll look at tuning practices and reference materials available for a deeper dive in deploying SAS on IBM platforms.

SAS: 40 YEARS OF PARTNERSHIP WITH IBM

IBM and SAS have been partners since the founding of SAS.

POWER® Processor-Based Systems

IBM® Power® Systems RAS: Introduction to IBM® Power® Reliability, Availability, and Serviceability for POWER9® processor-based systems using IBM PowerVM™, with updates covering the latest 4+ socket Power10 processor-based systems. IBM Systems Group. Daniel Henderson, Irving Baysah.

Trademarks, Copyrights, Notices and Acknowledgements

Trademarks: IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both: Active Memory™, AIX®, POWER®, POWER Hypervisor™, Power Systems™, Power Systems Software™, Power®, POWER6®, POWER7®, POWER7+™, POWER8™, PowerLinux™, PowerHA®, PowerVM®, PowerVC™, Power Architecture™, System x®, System z®. Additional trademarks may be identified in the body of this document. Other company, product, or service names may be trademarks or service marks of others.

Notices: The last page of this document contains copyright information, important notices, and other information.

Acknowledgements: While this whitepaper has two principal authors/editors it is the culmination of the work of a number of different subject matter experts within IBM who contributed ideas, detailed technical information, and the occasional photograph and section of description.