Cyber-Physical Threat Intelligence for Critical Infrastructures Security

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


ECSO State of the Art Syllabus V1 ABOUT ECSO

STATE OF THE ART SYLLABUS Overview of existing Cybersecurity standards and certification schemes WG1 | Standardisation, certification, labelling and supply chain management JUNE 2017 ECSO State of the Art Syllabus v1 ABOUT ECSO The European Cyber Security Organisation (ECSO) ASBL is a fully self-financed not-for-profit organisation under Belgian law, established in June 2016. ECSO represents the contractual counterpart to the European Commission for the implementation of the Cyber Security contractual Public-Private Partnership (cPPP). ECSO members include a wide variety of stakeholders across EU Member States, EEA / EFTA Countries and H2020 associated countries, such as large companies, SMEs and Start-ups, research centres, universities, end-users, operators, clusters and associations as well as European Member States’ local, regional and national administrations. More information about ECSO and its work can be found at www.ecs-org.eu. Contact For queries in relation to this document, please use [email protected]. For media enquiries about this document, please use [email protected]. Disclaimer The document was intended for reference purposes by ECSO WG1 and was allowed to be distributed outside ECSO. Despite the authors’ best efforts, no guarantee is given that the information in this document is complete and accurate. Readers of this document are encouraged to send any missing information or corrections to ECSO WG1 at [email protected]. This document integrates the contributions received from ECSO members until April 2017. Cybersecurity is a very dynamic field. As a result, standards and schemes for assessing Cybersecurity are being developed and updated frequently.

ISO 22301:2019

INTERNATIONAL STANDARD ISO 22301 Second edition 2019-10 Security and resilience — Business continuity management systems — Requirements Sécurité et résilience — Systèmes de management de la continuité d'activité — Exigences Reference number ISO 22301:2019(E) © ISO 2019 COPYRIGHT PROTECTED DOCUMENT © ISO 2019 All rights reserved. Unless otherwise specified, or required in the context of its implementation, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO’s member body in the country of the requester. ISO copyright office CP 401 • Ch. de Blandonnet 8 CH-1214 Vernier, Geneva Phone: +41 22 749 01 11 Fax: +41 22 749 09 47 Email: [email protected] Website: www.iso.org Published in Switzerland Contents: Foreword; Introduction; 1 Scope

EN ISO 22300

Terminology in Crisis and Disaster Management CEN Workshop Agreement Georg Neubauer, AIT http://www.ait.ac.at

Background
- The FP7 project EPISECC develops a concept of a common information space including taxonomy building to improve interoperability between European crisis managers and stakeholders
- EPISECC is mandated to provide the outcome of its research to international standardisation – involvement in CEN TC391
- Within the FP7 project DRIVER a standard on terminology in crisis management shall be developed (among multiple other goals)
- DRIVER & EPISECC will jointly co-operate on this issue
- Additional support is planned from the FP7 projects REDIRNET, SECINCORE and SECTOR (all dealing with interoperability)

Scope and Purpose
- Provision of an overview of existing terminologies and definitions applied in multiple domains of crisis and disaster management
- Overview on synonyms with the same or similar definitions
- Overview on different definitions for the same term
- Benefit: Support enhancement of mutual understanding of users/organizations applying different standards/taxonomies
- Benefit: Potential long term perspective: enhanced use of most suitable terms and definitions arising from multiple sources

Scope and Purpose (Example)

| Domain | Term | Definition | Standard/document | Intended Users |
| --- | --- | --- | --- | --- |
| Societal security | disaster | situation where widespread human, material, economic or environmental losses have occurred which exceeded the ability of the affected organization (2.2.9), community or society to respond and recover using its own resources | ISO 22300 (2012) | not specified |
| not specified | disaster | A serious disruption of the functioning of a community or a society involving widespread human, material, economic or environmental losses and impacts, which exceeds the ability of the affected community or society to cope using its own resources. | | authorities, practitioners |

Risk Management in Crisis: Winners and Losers During the COVID-19 Pandemic/Piotr Jedynak and Sylwia Bąk

Risk Management in Crisis Risk management is a domain of management which comes to the fore in crisis. This book looks at risk management under crisis conditions in the COVID-19 pandemic context. The book synthesizes existing concepts, strategies, approaches and methods of risk management and provides the results of empirical research on risk and risk management during the COVID-19 pandemic. The research outcome was based on the authors’ study on 42 enterprises of different sizes in various sectors, and these firms have either been negatively affected by COVID-19 or have thrived successfully under the new conditions of conducting business activities. The analysis looks at both the impact of the COVID-19 pandemic on the selected enterprises and the risk management measures these enterprises had taken in response to the emerging global trends. The book puts together key factors which could have determined the enterprises’ failures and successes. The final part of the book reflects on how firms can build resilience in challenging times and suggests a model for business resilience. The comparative analysis will provide useful insights into key strategic approaches of risk management. Piotr Jedynak is Professor of Management. He works at Jagiellonian University in Cracow, Poland, where he holds the positions of Vice-Rector for Financial and HR Policy and Head of the Management Systems Department. He specializes in risk management, strategic management and management systems. He is the author of numerous publications, an auditor and consultant to many public and business organizations. Sylwia Bąk holds a PhD in Management Sciences.

Guidelines for the Development and Definition of the National Model

Guidelines for the development and definition of the national reference model for regional CERTs AGID 13 Feb 2020

Contents
1 Foreword
2 References
2.1 Laws
2.2 Guidelines and Standards
3 Definitions and Acronyms
4 Context
4.1 National reference framework
4.2 Regulatory framework applicable to CERTs
4.3 Bodies supporting Cyber Security
4.4 Standards for Cyber Security
5 Introduction to CERTs
5.1 CERT: meaning and general definitions
5.2 Categories of CERT
5.3 Mission of CERTs
5.4 Identification of the constituency
5.5 Regional CERTs
6 Organisational model
6.1 Independent model
6.2 Embedded model
6.3 Campus model
7 Administrative model
8 Services
8.1 Service classification models
8.2 Services offered by Regional CERTs
9 Security incident management process
9.1 Definitions

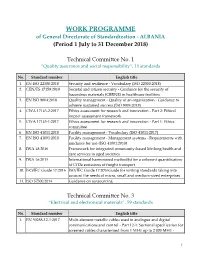

WORK PROGRAMME of General Directorate of Standardization - ALBANIA (Period 1 July to 31 December 2018)

WORK PROGRAMME of General Directorate of Standardization - ALBANIA (Period 1 July to 31 December 2018)

Technical Committee No. 1 “Quality assurance and social responsibility”, 11 standards

| No. | Standard number | English title |
| --- | --- | --- |
| 1. | EN ISO 22300:2018 | Security and resilience - Vocabulary (ISO 22300:2018) |
| 2. | CEN/TS 17159:2018 | Societal and citizen security - Guidance for the security of hazardous materials (CBRNE) in healthcare facilities |
| 3. | EN ISO 9004:2018 | Quality management - Quality of an organization - Guidance to achieve sustained success (ISO 9004:2018) |
| 4. | CWA 17145-2:2017 | Ethics assessment for research and innovation - Part 2: Ethical impact assessment framework |
| 5. | CWA 17145-1:2017 | Ethics assessment for research and innovation - Part 1: Ethics committee |
| 6. | EN ISO 41011:2018 | Facility management - Vocabulary (ISO 41011:2017) |
| 7. | EN ISO 41001:2018 | Facility management - Management systems - Requirements with guidance for use (ISO 41001:2018) |
| 8. | IWA 18:2016 | Framework for integrated community-based life-long health and care services in aged societies |
| 9. | IWA 16:2015 | International harmonized method(s) for a coherent quantification of CO2e emissions of freight transport |
| 10. | ISO/IEC Guide 17:2016 | Guide for writing standards taking into account the needs of micro, small and medium-sized enterprises |
| 11. | ISO 37500:2014 | Guidance on outsourcing |

Technical Committee No. 3 “Electrical and electronical materials”, 59 standards

| No. | Standard number | English title |
| --- | --- | --- |
| 1. | EN 50288-12-1:2017 | Multi-element metallic cables used in analogue and digital communications and control - Part 12-1: Sectional specification for screened cables characterised from 1 MHz up to 2 000 MHz - Horizontal and building backbone cables |

D955.11 – Report on Existing Standards and Standardization Activities in Crisis Management Sp95 - Impact, Engagement and Sustainability March 2018 (M47)

D955.11 – REPORT ON EXISTING STANDARDS AND STANDARDIZATION ACTIVITIES IN CRISIS MANAGEMENT SP95 - IMPACT, ENGAGEMENT AND SUSTAINABILITY MARCH 2018 (M47) This project has received funding from the European Union’s 7th Framework Programme for Research, Technological Development and Demonstration under Grant Agreement (GA) N° #607798 DRIVER+ project D955.11 – Report on existing standards and standardization activities in crisis management March 2018 (M47) Project information Project Acronym: DRIVER+ Project Full Title: Driving Innovation in Crisis Management for European Resilience Grant Agreement: 607798 Project Duration: 72 months (May 2014 - April 2020) Project Technical Coordinator: TNO Contact: [email protected] Deliverable information Deliverable Status: Final Deliverable Title: D955.11 – Report on existing standards and standardization activities in crisis management Deliverable Nature: Report (R) Dissemination Level: Public (PU) Due Date: March 2018 (M47) Submission Date: 02/04/2018 Sub-Project (SP): SP95 - Impact, Engagement and Sustainability Work Package (WP): WP955 - Standardisation activities Deliverable Leader: DIN Reviewers: Francisco Gala, ATOS. Klaudia, Tani, EOS File Name: DRIVER+_D955.11_Report_on_existing_standards_and_standardisation_activities_in_crisis_management.docx DISCLAIMER The opinion stated in this report reflects the opinion of the authors and not the opinion of the European Commission. All intellectual property rights are owned by the DRIVER+ consortium members and are protected by the applicable laws. Except where otherwise specified, all document contents are: “©DRIVER+ Project - All rights reserved”. Reproduction is not authorised without prior written agreement. The commercial use of any information contained in this document may require a license from the owner of that information.
All DRIVER+ consortium members are also committed to publish accurate and up to date information and take the greatest care to do so.

DRIVER+ Portfolio of Solutions Database and Guidance Tool

This project has received funding from the European Union’s 7th Framework Programme for Research, Technological Development and Demonstration under Grant Agreement (GA) N° #607798 DRIVER+ project ◼ D933.21 DRIVER+ PoS database and guidance tool prototypes ◼ July 2019 (M63) Project Acronym: DRIVER+ Project Full Title: Driving Innovation in Crisis Management for European Resilience Grant Agreement: 607798 Project Duration: 72 months (May 2014 - April 2020) Project Technical Coordinator: TNO Contact: Deliverable Status: Final Deliverable Title: D933.21 DRIVER+ PoS database and guidance tool prototypes Deliverable Nature: Prototype (P) Dissemination Level: Public (PU) Due Date: July 2019 (M63) Submission Date: 17/07/2019 Subproject (SP): SP93 - Solutions Work Package (WP): WP933 - DRIVER+ online platforms Deliverable Leader: Denis Havlik, AIT Héctor Naranjo Setién, GMV Reviewers: Maurice Sammels, XVR Erik Vullings, TNO File Name: DRIVER+_D933.21 DRIVER+ PoS database and guidance tool prototypes.docx Version of template used: V2.2 – February 2019 DISCLAIMER The opinion stated in this report reflects the opinion of the authors and not the opinion of the European Commission. All intellectual property rights are owned by the DRIVER+ consortium members and are protected by the applicable laws. Except where otherwise specified, all document contents are: “©DRIVER+ Project - All rights reserved”. Reproduction is not authorised without prior written agreement. The commercial use of any information contained in this document may require a license from the owner of that information. All DRIVER+ consortium members are also committed to publish accurate and up to date information and take the greatest care to do so. However, the DRIVER+ consortium members cannot accept liability for any inaccuracies or omissions nor do they accept liability for any direct, indirect, special, consequential or other losses or damages of any kind arising out of the use of this information.

Business Continuity and Disaster Recovery Plan for Information Security Vyshnavi Jorrigala St

St. Cloud State University theRepository at St. Cloud State Culminating Projects in Information Assurance Department of Information Systems 12-2017 Business Continuity and Disaster Recovery Plan for Information Security Vyshnavi Jorrigala St. Cloud State University, [email protected] Follow this and additional works at: https://repository.stcloudstate.edu/msia_etds Recommended Citation Jorrigala, Vyshnavi, "Business Continuity and Disaster Recovery Plan for Information Security" (2017). Culminating Projects in Information Assurance. 44. https://repository.stcloudstate.edu/msia_etds/44 This Starred Paper is brought to you for free and open access by the Department of Information Systems at theRepository at St. Cloud State. It has been accepted for inclusion in Culminating Projects in Information Assurance by an authorized administrator of theRepository at St. Cloud State. For more information, please contact [email protected]. Business Continuity and Disaster Recovery Plan for Information Security by Vyshnavi Devi Jorrigala A Starred Paper Submitted to the Graduate Faculty of Saint Cloud State University in Partial Fulfillment of the Requirements for the Degree of Master of Science in Information Assurance December, 2018 Starred Paper Committee: Susantha Herath, Chairperson Dien D. Phan Balasubramanian Kasi Abstract Business continuity planning and disaster recovery planning are the most crucial elements of a business but are often ignored. Businesses must make a well-structured plan and document for disaster recovery and business continuation, even before a catastrophe occurs. Disasters can be short or may last for a long time, but when an organization is ready for any adversity, it thrives hard and survives.
This paper will clearly distinguish between a disaster recovery plan and a business continuity plan, will describe the components of each plan and, finally, will provide an approach that organizations can follow to make a better contingency plan so that they will not go out of business when something unexpected happens.

This Project Has Received Funding from the European Union's 7th

This project has received funding from the European Union’s 7th Framework Programme for Research, Technological Development and Demonstration under Grant Agreement (GA) N° #607798 DRIVER+ project ◼ D933.11 – DRIVER+ online tools - Implementation specifications ◼ March 2019 (M59) Project information Project Acronym: DRIVER+ Project Full Title: Driving Innovation in Crisis Management for European Resilience Grant Agreement: 607798 Project Duration: 72 months (May 2014 - April 2020) Project Technical Coordinator: TNO Contact: Deliverable information Deliverable Status: Final Deliverable Title: D933.11 – DRIVER+ online tools - Implementation specifications Deliverable Nature: Report (R) Dissemination Level: Public (PU) Due Date: March 2019 (M59) Submission Date: 10/04/2019 Subproject (SP): SP93 - Solutions Work Package (WP): WP933 - DRIVER+ online platforms Deliverable Leader: Denis Havlik, AIT Reviewers: Maurice Sammels, XVR Erik Vullings, TNO Héctor Naranjo Setién, GMV File Name: DRIVER+_D933.11 DRIVER+ online tools - Implementation specifications.docx DISCLAIMER The opinion stated in this report reflects the opinion of the authors and not the opinion of the European Commission. All intellectual property rights are owned by the DRIVER+ consortium members and are protected by the applicable laws. Except where otherwise specified, all document contents are: “©DRIVER+ Project - All rights reserved”. Reproduction is not authorised without prior written agreement. The commercial use of any information contained in this document may require a license from the owner of that information. All DRIVER+ consortium members are also committed to publish accurate and up to date information and take the greatest care to do so. However, the DRIVER+ consortium members cannot accept liability for any inaccuracies or omissions nor do they accept liability for any direct, indirect, special, consequential or other losses or damages of any kind arising out of the use of this information.

ISO/TC292 Standards Development Status (June 2021)

ISO/TC292 Standards Development Status (June 2021)

Note: orange highlighting marks items proposed by Japan.

| WG | Standard number | Title | Current status | Remarks |
| --- | --- | --- | --- | --- |
| | ISO/TR 22312:2011 | Societal security — Technological capabilities | | |
| 1 | ISO 22300:2021 | Security and resilience — Vocabulary | Published | |
| 2 | ISO 22301:2019 | Security and resilience — Business continuity management systems — Requirements | Published | JIS Q 22301:2020 (Security and resilience - Business continuity management systems - Requirements); further references; external link (ISO) |
| 2 | ISO 22313:2020 | Security and resilience — Business continuity management systems — Guidance on the use of ISO 22301 | Published | JIS Q 22313 under revision |
| 2 | ISO 22316:2017 | Security and resilience — Organizational resilience — Principles and attributes | Published | |
| 2 | ISO/TS 22317:2015 | Societal security -- Business continuity management systems -- Guidelines for business impact analysis (BIA) | Published | Under revision (publication expected this summer) |
| 2 | ISO/TS 22318:2015 | Societal security — Business continuity management systems — Guidelines for supply chain continuity | Published | Under revision (publication expected this summer) |
| 2 | ISO/TS 22330:2018 | Security and resilience — Business continuity management systems — Guidelines for people aspects of business continuity | Published | |
| 2 | ISO/TS 22331:2018 | Security and resilience — Business continuity management systems — Guidelines for business continuity strategy | Published | |
| 2 | ISO/TS 22332:2021 | Security and resilience — Business continuity management systems — Guidance for developing business continuity procedures | Published | |
| CASCO | ISO/IEC 17021-6 | Conformity assessment — Requirements for bodies providing audit and certification of management systems — Part 6: Competence requirements for auditing and certification of business continuity | Periodic review in progress (led by ISO/CASCO) | |

How Standards Contribute to Business Resilience in Crisis Situations

Physikalisch-Technische Bundesanstalt Braunschweig und Berlin National Metrology Institute INTERNATIONAL COOPERATION Current Situation How Standards Contribute to Business Resilience in Crisis Situations Niels Ferdinand, Richard Prem On behalf of the Federal Government of Germany, the Physikalisch-Technische Bundesanstalt promotes the improvement of the framework conditions for economic, social and environmentally friendly action and thus supports the development of quality infrastructure.

CONTENTS
1. Background
2. The significance of standards on increasing business resilience
2.1. Defining resilience and business continuity management
2.2. Fields of action for increasing business resilience
2.3. The correlation between standardisation and business resilience
2.4. Risk management in standardisation
3. Specific standards for resilience and business continuity management
3.1. Topic overview for specific standards
3.2. Effectiveness of standards in social and economic crises
Abbreviations
References
An overview of existing standards, specifically in regard to business resilience
E-Learning resources
Notes

1. Background

The global corona virus crisis is presenting enormous challenges for companies worldwide. Companies in developing and emerging nations are especially affected, as the crisis has severe ramifications for them, and they have less access to support. This raises the question of how we can use standardisation to improve the resilience of com-

This paper summarises the current state of affairs of standardisation in the area of business resilience. It will explain the general contributions standardisation can make towards promoting resilience. On this basis, it will give an overview of specific standards relevant to business resilience.