State-of-the-Art of Secure ICT Landscape (Version 2), April 2015

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Protecting Location Privacy from Untrusted Wireless Service

Protecting Location Privacy from Untrusted Wireless Service Providers Keen Sung (University of Massachusetts Amherst), Brian Levine (University of Massachusetts Amherst), Mariya Zheleva (University at Albany, SUNY) ABSTRACT Access to mobile wireless networks has become critical for day-to-day life. However, it also inherently requires that a user’s geographic location is continuously tracked by the service provider. It is challenging to maintain location privacy, especially from the provider itself. To do so, a user can switch through a series of identifiers, and even go offline between each one, though it sacrifices utility. This strategy can make it difficult for an adversary to perform location profiling and trajectory linking attacks that match observed behavior to a known user. In this paper, we model and quantify the trade-off between utility and location privacy. We quantify the privacy available to a community of users that are provided wireless service by an untrusted provider. 1 INTRODUCTION When mobile users connect to the Internet, they authenticate to a cell tower, allowing service providers such as Verizon and AT&T to store a log of the time, radio tower, and user identity [69]. As providers have advanced towards the current fifth generation of cellular networks, the density of towers has grown, allowing these logs to capture users’ location with increasing precision. Many users are persistently connected, apprising providers of their location all day. Connecting to a large private Wi-Fi network provides similar information to its administrators. And some ISPs offer cable, cellular, and Wi-Fi hotspots as a unified package. While fixed user identifiers are useful in supporting backend services such as postpaid billing, wireless providers’ misuse of … -

Comparative Analysis and Framework Evaluating Web Single Sign-On Systems

Comparative Analysis and Framework Evaluating Web Single Sign-On Systems FURKAN ALACA, School of Computing, Queen’s University; PAUL C. VAN OORSCHOT, School of Computer Science, Carleton University. We perform a comprehensive analysis and comparison of 14 web single sign-on (SSO) systems proposed and/or deployed over the last decade, including federated identity and credential/password management schemes. We identify common design properties and use them to develop a taxonomy for SSO schemes, highlighting the associated trade-offs in benefits (positive attributes) offered. We develop a framework to evaluate the schemes, in which we identify 14 security, usability, deployability, and privacy benefits. We also discuss how differences in priorities between users, service providers (SPs), and identity providers (IdPs) impact the design and deployment of SSO schemes. 1 INTRODUCTION Password-based authentication has numerous documented drawbacks. Despite the many password alternatives, no scheme has thus far offered improved security without trading off deployability or usability [8]. Single Sign-On (SSO) systems have the potential to strengthen web authentication while largely retaining the benefits of conventional password-based authentication. We analyze in depth the state-of-the-art of web SSO through the lens of usability, deployability, security, and privacy, to construct a taxonomy and evaluation framework based on different SSO architecture design properties and their associated benefits and drawbacks. SSO is an umbrella term for schemes that allow users to rely on a single master credential to access a multitude of online accounts. We categorize SSO schemes across two broad categories: federated identity systems (FIS) and credential managers (CM). -

SGP Technologies and INNOV8 Announce the Worldwide Preview Launch of the BLACKPHONE Smartphone in LICK Stores

PRESS RELEASE SGP Technologies and INNOV8 announce the worldwide preview launch of the BLACKPHONE smartphone in LICK stores. Paris (France) / Geneva (Switzerland), 5 June 2014. Blackphone, the first smartphone that secures its users' privacy, will launch in preview this summer in France at LICK, the first network of 2.0 stores dedicated to connected devices. SGP Technologies (a joint venture between Silent Circle and Geeksphone), creator of the BLACKPHONE, has chosen the INNOV8 group to unveil the first smartphone that respects privacy, on the occasion of the opening of LICK (INNOV8 Group), the first network of 2.0 stores dedicated to connected devices, on 5 June at the Concept Store of Les 4 Temps in La Défense. Starting this summer, LICK will be the first retail network authorized to distribute this technological innovation. Distribution will then be opened to other channels in France via Extenso Telecom (a leading distributor of smartphones, accessories, and connected devices in France), in order to bring this innovation to as many people as possible, in step with the expectations of customers concerned about their privacy and security. "France is known for being very open to innovation. We are pleased to work with the INNOV8 group to launch the Blackphone in this important European market and to offer smartphone users an unmatched solution for protecting their privacy and security," said Tony Bryant, VP Sales of SGP Technologies. "We are convinced that technology is becoming a true lifestyle. -

Analysis of the Mozilla Project

University of Padua (Università degli studi di Padova), Faculty of Mathematical, Physical and Natural Sciences, Degree Course in Computer Science. Report for the course Open Source Technologies: Analysis of the Mozilla Project. Author: Marco Teoli. Academic year 2008/09. Submitted: 30/06/2009. “Open source does work, but it is most definitely not a panacea. If there's a cautionary tale here, it is that you can't take a dying project, sprinkle it with the magic pixie dust of "open source", and have everything magically work out. Software is hard. The issues aren't that simple.” Jamie Zawinski. Contents: Introduction; Vision (Mozilla Labs); History (Mozilla Labs and the R&D projects); Market (Type of market and users; Market share (Firefox)) -

Firefox OS Overview Ewa Janczukowicz

Firefox OS Overview Ewa Janczukowicz To cite this version: Ewa Janczukowicz. Firefox OS Overview. [Research Report] Télécom Bretagne. 2013, pp.28. hal-00961321 HAL Id: hal-00961321 https://hal.archives-ouvertes.fr/hal-00961321 Submitted on 24 Apr 2014 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Télécom Bretagne research report collection, RR-2013-04-RSM Firefox OS Overview Ewa JANCZUKOWICZ (Télécom Bretagne) This work is part of the project "Étude des APIs Mozilla Firefox OS" supported by Orange Labs / TC PASS (CRE API MOZILLA FIREFOX OS - CTNG13025) ACKNOWLEDGMENTS Above all, I would like to thank Ahmed Bouabdallah and Arnaud Braud for their assistance, support and guidance throughout the contract. I am very grateful to Gaël Fromentoux and Stéphane Tuffin for giving me the possibility of working on the Firefox OS project. I would like to show my gratitude to Jean-Marie Bonnin, to all members of Orange NCA/ARC team and RSM department for their help and guidance. SUMMARY Firefox OS is an operating system for mobile devices such as smartphones and tablets. -

A Hybrid Approach to Detect Tabnabbing Attacks

A Hybrid Approach to Detect Tabnabbing Attacks by Hana Sadat Fahim Hashemi A thesis submitted to the School of Computing in conformity with the requirements for the degree of Master of Science Queen's University Kingston, Ontario, Canada August 2014 Copyright © Hana Sadat Fahim Hashemi, 2014 Abstract Phishing is one of the most prevalent types of modern attacks, costing significant financial losses to enterprises and users each day. Despite the emergence of various anti-phishing tools and techniques, not only has there been a dramatic increase in the number of phishing attacks but also more sophisticated forms of these attacks have come into existence. One of the most complicated and deceptive forms of phishing attacks is the tabnabbing attack. This newly discovered threat takes advantage of the user's trust and inattention to the open tabs in the browser and changes the appearance of an already open malicious page to the appearance of a trusted website that demands confidential information from the user. As one might imagine, the tabnabbing attack mechanism makes it quite probable for even an attentive user to be lured into revealing his or her confidential information. Few tabnabbing detection and prevention techniques have been proposed thus far. The majority of these techniques block scripts that are liable to perform malicious actions or violate the browser security policy. However, most of these techniques cannot effectively prevent the other variant of the tabnabbing attack that is launched without the use of scripts. In this thesis, we propose a hybrid tabnabbing detection approach with the aim of overcoming the shortcomings of the existing anti-tabnabbing approaches and techniques. -

Release Notes: UFED Physical Analyzer, UFED Logical Analyzer, Version 5.0 | March 2016

NOW SUPPORTING 19,203 DEVICE PROFILES +1,528 APP VERSIONS. RELEASE NOTES: UFED TOUCH, UFED 4PC, UFED PHYSICAL ANALYZER, UFED LOGICAL ANALYZER, Version 5.0 | March 2016. HIGHLIGHTS: IMAGE FILTER: Focus on the relevant media files and get to the evidence you need fast. EXTRACT DATA FROM BLOCKED APPS: First in the Industry – Access blocked application data with file system extraction. EXCLUSIVE: UNIFY MULTIPLE EXTRACTIONS: Merge multiple extractions in a single unified report for more efficient investigations. [Figure: Logical 10K items, Physical 20K items, File System 15K items, 22K items] ◼ Temporary root (ADB) solution for selected Android devices running OS 4.3-5.1.1 – this capability enables file system and physical extraction methods and decoding from devices running OS 4.3-5.1.1 32-bit with ADB enabled. In addition, this capability enables extraction of apps data for logical extraction. This version adds this capability for 110 devices and many more will be added in coming releases. ◼ Enhanced physical extraction while bypassing lock of 27 Samsung Android devices with APQ8084 chipset (Snapdragon 805), including Samsung Galaxy Note 4, Note Edge, and Note 4 Duos. This chipset was previously supported with UFED, but due to operating system changes, this capability was temporarily unavailable. In the world of devices, operating systems change frequently and thus influence our support abilities. As part of our ongoing effort to provide our customers with technological breakthroughs, Cellebrite developed a new method to overcome this barrier. ◼ File system and logical extraction and decoding support for iPhone SE, Samsung Galaxy S7, and LG G5 devices. ◼ Physical extraction and decoding support for a new family of TomTom devices (including Go 1000 Point Trading, 4CQ01 Go 2505 Mm, 4CT50, 4CR52 Go Live 1015 and 4CS03 Go 2405). -

User Controlled Trust and Security Level of Web Real-Time Communications Kevin CORRE

User Controlled Trust and Security Level of Web Real-Time Communications. DOCTORAL THESIS OF THE UNIVERSITÉ DE RENNES 1, COMUE UNIVERSITÉ BRETAGNE LOIRE, Doctoral School No. 601: Mathematics and Information and Communication Sciences and Technologies. Specialty: Computer Science. Thesis presented and defended in RENNES on 31 MAY 2018 by Kevin CORRE. Research unit: IRISA (UMR 6074), Institut de Recherche en Informatique et Systèmes Aléatoires. Thesis No.: Reviewers before the defense: Maryline LAURENT, Professor, TELECOM SudParis; Yvon KERMARREC, Professor, IMT Atlantique. Jury composition: President: Maryline LAURENT, Professor, TELECOM SudParis. Examiners: Yvon KERMARREC, Professor, IMT Atlantique; Walter RUDAMETKIN, Associate Professor (Maître de Conférences), Université de Lille; Dominique HAZAEL-MASSIEUX, Research Engineer, W3C; Vincent FREY, R&D Software Engineer, Orange Labs. Thesis supervisor: Olivier BARAIS, Professor, Université de Rennes 1. Thesis co-supervisor: Gerson SUNYÉ, Associate Professor (Maître de Conférences), Université de Nantes. “Fide, sed cui vide”. - Latin saying. “From the father who gave me life: modesty and manliness, at least if I go by the reputation he left and the personal memory of him that remains with me”. - Marcus Catilius Severus. Summary in French. Context: Communication, from the Latin communicatio [13], is defined by the Oxford dictionary as “the imparting or exchanging of information” and “the means of sending or receiving information, such as telephone lines or computers” [14]. In the face of uncertainty or risk, such an exchange is possible only if a relationship of trust can be established between the sender and the receiver. This relationship of trust must also extend to the means used to communicate. -

Why “Mobile” Security Is the Hardest Security

Tufts University School of Engineering COMP 111 - Security Why “Mobile” Security is the Hardest Security By Kevin Dorosh Abstract Tech-savvy people understand that Android is less safe than iPhone, but most don’t understand how glaring cell phone security flaws are across the board. Android’s particularly lackluster performance is exemplified in the MAC address randomization techniques employed to prevent others from being able to stalk your location through your mobile device. MAC address randomization was introduced by Apple in June of 2014 and by Google in 2015, although (glaringly) only 6% of Android devices today currently have this tech [3]. Sadly, in devices with the tech enabled, the Karma attack can still be exploited. Even worse, both iOS and Android are susceptible to RTS (request to send frame) attacks. Other power users have even seen success setting up desktop GSM towers as intermediaries between mobile devices and real cell phone towers to gather similar information [5]. This paper attempts to explain simple techniques to glean information from other mobile phones that can be used for nefarious purposes from stalking to identity fraud, but also by government agencies to monitor evildoers without their knowledge. As this paper should make clear, mobile security is far more difficult than “stationary security”, for lack of a better term. Even worse, some of these flaws are problems that exist in the Wi-Fi protocol itself, but we will still offer solutions to mitigate risks and fix the protocols. As we do more on our phones every day, it becomes imperative that we understand and address these risks. -

Benítez Bravo, Rubén; Liu, Xiao; Martín Mor, Adrià, Dir

This is the published version of the article: Benítez Bravo, Rubén; Liu, Xiao; Martín Mor, Adrià, dir. Proyecto de localización de Waterfox al catalán y al chino. 2019. 38 p. This version is available at https://ddd.uab.cat/record/203606 under the terms of the license. TPD coursework: LOCALIZATION OF WATERFOX EN>CA/ZH. Figure 1. Waterfox logo. Rubén Benítez Bravo and Xiao Liu, 1332396 | 1532305. Màster de tradumàtica: Tecnologies de la traducció 2018-2019. Table of contents: 1. Introduction 2. Summary table 3. Description of the localization process 3.1 What is Waterfox? 3.2 What is it used for? 3.3 Preparing the translation 4. Translation analysis 5. Technical analysis 6. Testing phase 7. Conclusions 8. Bibliography -

Untraceable Links: Technology Tricks Used by Crooks to Cover Their Tracks

UNTRACEABLE LINKS: TECHNOLOGY TRICKS USED BY CROOKS TO COVER THEIR TRACKS New mobile apps, underground networks, and crypto-phones are appearing daily. More sophisticated technologies such as mesh networks allow mobile devices to use public Wi-Fi to communicate from one device to another without ever using the cellular network or the Internet. Anonymous and encrypted email services are under development to evade government surveillance. Learn how these new technology capabilities are making anonymous communication easier for fraudsters and helping them cover their tracks. You will learn how to: Define mesh networks. Explain the way underground networks can provide untraceable email. Identify encrypted email services and how they work. WALT MANNING, CFE President Investigations MD Green Cove Springs, FL Walt Manning is the president of Investigations MD, a consulting firm that conducts research related to future crimes while also helping investigators market and develop their businesses. He has 35 years of experience in the fields of criminal justice, investigations, digital forensics, and e-discovery. He retired with the rank of lieutenant after a 20-year career with the Dallas Police Department. Manning is a contributing author to the Fraud Examiners Manual, which is the official training manual of the ACFE, and has articles published in Fraud Magazine, Police Computer Review, The Police Chief, and Information Systems Security, which is a prestigious journal in the computer security field. “Association of Certified Fraud Examiners,” “Certified Fraud Examiner,” “CFE,” “ACFE,” and the ACFE Logo are trademarks owned by the Association of Certified Fraud Examiners, Inc. The contents of this paper may not be transmitted, re-published, modified, reproduced, distributed, copied, or sold without the prior consent of the author. -

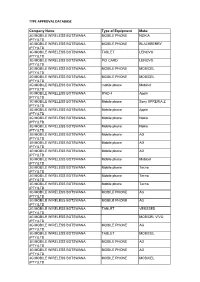

Type Approval Database 2017031231.Pdf

TYPE APPROVAL DATABASE Company Name Type of Equipment Make 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE NOKIA (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE BLACKBERRY (PTY)LTD 3G MOBILE WIRELESS BOTSWANA TABLET LENOVO (PTY)LTD 3G MOBILE WIRELESS BOTSWANA PCI CARD LENOVO (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE MOBICEL (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE MOBICEL (PTY)LTD 3G MOBILE WIRELESS BOTSWANA mobile phone Mobicel (PTY)LTD 3G MOBILE WIRELESS BOTSWANA IPAD 4 Apple (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone Sony XPRERIA Z (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone Apple (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone Nokia (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone Nokia (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone AG (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone AG (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone AG (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone Mobicel (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone Tecno (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone Tecno (PTY)LTD 3G MOBILE WIRELESS BOTSWANA Mobile phone Tecno (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE AG (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE AG (PTY)LTD 3G MOBILE WIRELESS BOTSWANA TABLET VERSSED (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBICEL VIVO (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE AG (PTY)LTD 3G MOBILE WIRELESS BOTSWANA TABLET MOBICEL (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE AG (PTY)LTD 3G MOBILE WIRELESS BOTSWANA MOBILE PHONE AG (PTY)LTD 3G MOBILE WIRELESS BOTSWANA