Protection Models for Web Applications

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Learning to Program in Perl

Learning to Program in Perl, by Graham J Ellis. Languages of the Web. Version 1.7. Written by Graham Ellis; design by Lisa Ellis.

Well House Consultants, Ltd., 404, The Spa, Melksham, Wiltshire SN12 6QL, England. Phone: +44 (0) 1225 708 225. Fax: +44 (0) 1225 707 126. Find us on the World Wide Web at: http://www.wellho.net

Copyright © 2003 by Well House Consultants, Ltd. Printed in Great Britain.

Printing History
May 1999, 1.0, First Edition
February 2000, 1.1, Minor additions
June 2000, 1.2, Compilation of modules
October 2000, 1.3, Name change, revisions
April 2002, 1.4, Added modules
September 2002, 1.5, Added modules
January 2003, 1.6, Updated modules
February 2003, 1.7, Updated modules
This manual was printed on 21 May 2003.

Notice of Rights
All rights reserved. No part of this manual, including interior design, may be reproduced or translated into any language in any form, or transmitted in any form or by any means electronic, mechanical, photocopying, recording or otherwise, without prior written permission of Well House Consultants except in the case of brief quotations embodied in critical articles and reviews. For more information on getting permission for reprints and excerpts, contact Graham Ellis at Well House Consultants. This manual is subject to the condition that it shall not, by way of trade or otherwise, be lent, sold, hired out or otherwise circulated without the publisher's prior consent, incomplete nor in any form of binding or cover other than in which it is published and without a similar condition including this condition being imposed on the subsequent receiver.

SEO Footprints

SEO Footprints. Brought to you by: Jason Rushton. Copyright 2013 Online-Marketing-Tools.com

Use these “Footprints” with your niche-specific keywords to find backlink sources. Some of the footprints below have already been formed into ready-made search queries.

TIP: If you find a footprint that returns the results you are looking for, there is no need to use the rest in that section. For example, if I am looking for WordPress sites that allow comments and the search query “powered by wordpress” “YOUR KEYWORDS” returns lots of results, there is no need to use all of the others that target WordPress sites, as a lot of them will produce similar results. I would use one or two from each section. You can try them out, and when you find one you like, add it to your own list of favourites.

Blogs
“article directory powered by wordpress” “YOUR KEYWORDS”
“blog powered by wordpress” “YOUR KEYWORDS”
“blogs powered by typepad” “YOUR KEYWORDS”
“YOUR KEYWORDS” inurl:“trackback powered by wordpress”
“powered by blogengine net 1.5.0.7” “YOUR KEYWORDS”
“powered by blogengine.net” “YOUR KEYWORDS”
“powered by blogengine.net add comment” “YOUR KEYWORDS”
“powered by typepad” “YOUR KEYWORDS”
“powered by wordpress” “YOUR KEYWORDS”
“powered by wordpress review theme” “YOUR KEYWORDS”
“proudly powered by wordpress” “YOUR KEYWORDS”
“remove powered by wordpress” “YOUR KEYWORDS”

Webové Diskusní Fórum

Masaryk University, Faculty of Informatics. Webové diskusní fórum (Web Discussion Forum). Bachelor's thesis. Martin Banaś. Brno, Spring 2009.

Declaration
I declare that this bachelor's thesis is my own original work, which I wrote independently. All sources, references, and literature that I used or drew upon while writing it are properly cited in the thesis with a full reference to the respective source. In Brno, on [date left blank]. Signature: [left blank].

Supervisor: prof. RNDr. Jiří Hřebíček, CSc.

Acknowledgements
I thank my supervisor, prof. RNDr. Jiří Hřebíček, CSc., for his sound guidance throughout the work and for his patience during consultations. I also thank the whole team involved in carrying out the FEED project for their stimulating comments and observations.

Summary
This bachelor's thesis analyzes current open-source discussion forums and selects the most suitable discussion forum for the eParticipation project FEED. The next part of the thesis focuses on an analysis of the selected forum, the creation of a Czech manual, a Czech localization for the portal, and an extension for annotating posts. The last chapter is devoted to deploying the system and testing the post-annotation extension.

Keywords
FEED project, eParticipation, discussion forum, portal, PHP, MySQL, HTML

Contents
1 Introduction
2 The eParticipation FEED Project
2.1 eGovernment
2.2 Participants in the FEED Project
2.3 Focus of the FEED Project
2.4 Goal

Interfacing Apache HTTP Server 2.4 with External Applications

Jeff Trawick, November 6, 2012

Who am I?
• Met Unix (in the form of Xenix) in 1985
• Joined IBM in 1990 to work on network software for mainframes
• Moved to a different organization in 2000 to work on Apache httpd
• Later spent about 4 years at Sun/Oracle
• Got tired of being tired of being an employee of too-huge corporation so formed my own too-small company
• Currently working part-time, coding on other projects, and taking classes

Overview
• Huge problem space, so simplify
• Perspective: "General purpose" web servers, not minimal application containers which implement HTTP
• "Applications": Code that runs dynamically on the server during request processing to process input and generate output

Possible web server interactions
• Native code plugin modules (uhh, assuming server is native code)
• Non-native code + language interpreter inside server (Lua, Perl, etc.)
• Arbitrary processes on the other side of a standard wire protocol like HTTP (proxy), CGI, FastCGI, etc. (Java and "all of the above") or private protocol
• Some hybrid such as mod_fcgid

mod_fcgid as example hybrid
• Supports applications which implement a standard wire protocol, no restriction on implementation mechanism
• Has extensive support for managing the application[+interpreter] processes so that the management of the application processes is well-integrated with the web server
• Contrast with mod_proxy_fcgi (pure FastCGI, no process management) or mod_php (no processes/threads other than those of web server).

phpBB 3.3 Proteus Documentation

Edited by Dominik Dröscher and Graham Eames. Copyright © 2005 phpBB Group.

Abstract
The detailed documentation for phpBB 3.3 Proteus.

Table of Contents
1. Quick Start Guide
  1. Requirements
  2. Installation
    2.1. Introduction
    2.2. Requirements
    2.3. Administrator details
    2.4. Database settings
    2.5. Configuration file
    2.6. Advanced settings
    2.7. Final Steps
    2.8. Supporting the phpBB organization
  3. General settings

Symbols Administrators

Index ■Symbols administrators. See also administering $access_check variable, 209 admin user (Drupal), 11–12 & (ampersand) in path aliases, 84 approving comments in WordPress, 396 <br /> (break tag), 476 auditing, 296–297 <!—more—> tag, 475–477 Advanced Editing mode (WordPress) <!—noteaser—> tag, 476 Advanced options in, 405–406, 408–409 >> (breadcrumb links), 159 previewing posts in, 409 ? (question mark) in path aliases, 84 using Custom Fields, 409 / (slash) in path aliases, 84 Advanced Mode (phpBB) announcement forum permissions in, 304 ■A group permissions in, 307 paraccess setting permission in, 303–304 accessing database abstraction layer, user permissions in, 305 335–338 Aggregator module, 61–64 Drupal rules for, 36–38 adding feeds, 63 Image Assist module settings for, 111 categorizing feeds, 64 rights for database servers, 6 function of, 61–62 site, 8–9 identifying feeds for subscription, 62 activating setting permissions, 64 group blocks, 132 viewing options for feeds, 64 IImage Browser plug-in, 410–411 aggregators, 375 RSS Link List plug-in, 424 aliased domains, 191 WP-DB Backup plug-in, 490 aliases to Drupal paths, 84–85 Admin Configuration panel (phpBB 2.0), 235, ampersand (in path aliases), 84 236–237 animation in posts, 287 administering. See also administrators; announcement forums, 247, 304 Database Administration module announcements Administer Nodes permission, 135 global, 287 administrative password for WordPress, permissions for, 270–271 389–390 removing, 315 blocks, 39–40 Anonymous User role (Drupal), 34, 35 Drupal -

Evaluation Metric Boardsearch Metrics: Recall - C/N, Precision C/E

Board Search: An Internet Forum Index

Overview
• Forums provide a wealth of information
• Semi-structured data not taken advantage of by popular search software
• Despite being crawled, many information-rich posts are lost in low page rank

Forum Examples
• vBulletin
• phpBB
• UBB
• Invision
• YaBB
• Phorum
• WWWBoard
[Screenshots: vBulletin, phpBB, UBB; gentoo, evolutionM, bayareaprelude, warcraft, Paw talk]

Current Solutions
• Search engines
• Forum's internal search
[Screenshots: Google, lycos, internal, boardsearch]

Evaluation Metric
Metrics: Recall = C/N, Precision = C/E
Rival system:
• Rival system is the search engine / forum internal search combination
• Rival system lacks precision
Evaluations:
• How good our system is at finding forums
• How good our system is at finding relevant posts/threads
Problems:
• Relevance is in the eye of the beholder
• How many correct extractions exist?

Implementation
• Lucene
• MySQL
• Ted Grenager's Crawler Source
• Jakarta HTTPClient

Improving Software Package Search Quality
Dan Fingal and Jamie Nicolson

The Problem
• Search engines for software packages typically perform poorly
• Tend to search project name and blurb only
• For example…
[Screenshots: Sourceforge.org, Gentoo.org, Freshmeat.net]

How can we improve this?
• Better keyword matching
• Better ranking of the results
• Better source of information about the package
• Pulling in nearest neighbors of top

Better Sources of Information
• Every package is associated with a website that contains much more detailed information about it
• Spidering these sites should give us a richer representation

A Taxonomy of SQL Injection Defense Techniques

Master’s Thesis, Computer Science. Thesis no: MCS-2011-46, June 2011. A Taxonomy of SQL Injection Defense Techniques. Anup Shakya, Dhiraj Aryal. School of Computing, Blekinge Institute of Technology, SE – 371 79 Karlskrona, Sweden.

This thesis is submitted to the School of Computing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Computer Science. The thesis is equivalent to 20 weeks of full time studies.

Contact Information:
Author(s): Anup Shakya. Address: Älgbacken 8, 372 34 Ronneby.
Author(s): Dhiraj Aryal. Address: Lindblomsvågen 96, 372 33 Ronneby.
University advisor(s): Dr. Stefan Axelsson, School of Computing, Blekinge Institute of Technology.
School of Computing, Blekinge Institute of Technology, SE – 371 79 Karlskrona, Sweden. Internet: www.bth.se/com. Phone: +46 455 38 50 00. Fax: +46 455 38 50 57.

Abstract
Context: SQL injection attack (SQLIA) poses a serious defense threat to web applications by allowing attackers to gain unhindered access to the underlying databases containing potentially sensitive information. A lot of methods and techniques have been proposed by different researchers and practitioners to mitigate SQL injection problem. However, deploying those methods and techniques without a clear understanding can induce a false sense of security. Classification of such techniques would provide a great assistance to get rid of such false sense of security.
Objectives: This paper is focused on classification of such techniques by building taxonomy of SQL injection defense techniques.
Methods: Systematic literature review (SLR) is conducted using five reputed and familiar e-databases; IEEE, ACM, Engineering Village (Inspec/Compendex), ISI web of science and Scopus.

Design and Implementation of a GIS-Enabled Online Discussion Forum for Participatory Planning

Man Yee (Teresa) Tang. September 2006. Technical Report No. 244. Department of Geodesy and Geomatics Engineering, University of New Brunswick, P.O. Box 4400, Fredericton, N.B., Canada E3B 5A3. © Man Yee (Teresa) Tang 2006.

PREFACE
This technical report is a reproduction of a thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Engineering in the Department of Geodesy and Geomatics Engineering, September 2006. The research was co-supervised by Dr. Y. C. Lee and Dr. David Coleman, and support was provided by the Natural Sciences and Engineering Research Council of Canada. As with any copyrighted material, permission to reprint or quote extensively from this report must be received from the author. The citation to this work should appear as follows: Tang, Man Yee (Teresa) (2006). Design and Implementation of a GIS-Enabled Online Discussion Forum for Participatory Planning. M.Sc.E. thesis, Department of Geodesy and Geomatics Engineering Technical Report No. 244, University of New Brunswick, Fredericton, New Brunswick, Canada, 151 pp.

ABSTRACT
Public participation is a process whose ultimate goal is to facilitate consensus building. To achieve this goal, there must be intensive communication and discussion among the participants who must have access to information about the matters being addressed. Recent efforts in Public Participation Geographic Information Systems (PPGIS), however, concentrate mainly on making GIS and other spatial decision-making tools available and accessible to the general public.

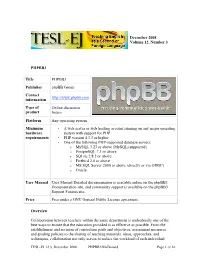

December 2008 Volume 12, Number 3 PHPBB3 Title PHPBB3 Publisher

TESL-EJ 12.3, December 2008 (Volume 12, Number 3). PHPBB3/McDonald.

Title: PHPBB3
Publisher: phpBB Group
Contact information: http://www.phpbb.com
Type of product: Online discussion forum
Platform: Any operating system
Minimum hardware requirements:
• A web server or web hosting account running on any major operating system with support for PHP
• PHP version 4.3.3 or higher
• One of the following PHP-supported database servers: MySQL 3.23 or above (MySQLi supported); PostgreSQL 7.3 or above; SQLite 2.8.2 or above; Firebird 2.0 or above; MS SQL Server 2000 or above (directly or via ODBC); Oracle
User Manual: Detailed documentation is available online on the phpBB3 Documentation site, and community support is available on the phpBB3 Support Forums site.
Price: Free under a GNU General Public License agreement.

Overview
Collaboration between teachers within the same department is undoubtedly one of the best ways to ensure that the education provided is as effective as possible. From the establishment and revision of curriculum goals and objectives, assessment measures, and grading policies to the sharing of teaching materials, ideas, approaches, and techniques, collaboration not only serves to reduce the workload of each individual teacher, it can serve to enhance the quality of work produced. Unfortunately, the nature of the teaching profession as it stands today, with teachers spending a majority of their time either working with students in the classroom or performing administrative duties, often precludes real opportunities for instructors within the same department to collaborate.
