Temporal Elasticity As the Most Important Tool for Expressive Performance of German Late Romantic Organ Music

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Computational Methods for Tonality-Based Style Analysis of Classical Music Audio Recordings

Fakultät für Elektrotechnik und Informationstechnik. Computational Methods for Tonality-Based Style Analysis of Classical Music Audio Recordings. Christof Weiß, born on 16.07.1986 in Regensburg. Dissertation for the academic degree of Doktoringenieur (Dr.-Ing.). Prepared at: Fachgebiet Elektronische Medientechnik, Institut für Medientechnik, Fakultät für Elektrotechnik und Informationstechnik. Reviewers: Prof. Dr.-Ing. Dr. rer. nat. h. c. mult. Karlheinz Brandenburg, Prof. Dr. rer. nat. Meinard Müller, Prof. Dr. phil. Wolfgang Auhagen. Date of submission: 25.11.2016. Date of the scientific defense: 03.04.2017. urn:nbn:de:gbv:ilm1-2017000293. Acknowledgements: This thesis could not exist without the help of many people. I am very grateful to everybody who supported me during the work on my PhD. First of all, I want to thank Prof. Karlheinz Brandenburg for supervising my thesis but also for the opportunity to work within a great team and a nice working environment at Fraunhofer IDMT in Ilmenau. I also want to mention my colleagues of the Metadata department for having such a friendly atmosphere including motivating scientific discussions, musical activity, and more. In particular, I want to thank all members of the Semantic Music Technologies group for the nice group climate and for helping with many things in research and beyond. Especially, thank you Alex, Ronny, Christian, Uwe, Estefanía, Patrick, Daniel, Ania, Christian, Anna, Sascha, and Jakob for not only having a prolific working time in Ilmenau but also making friends there. Furthermore, I want to thank several students at TU Ilmenau who worked with me on my topic. Special thanks go to Prof. -

Matthias Giesen (Austria)

Matthias Giesen (Austria). The biography of each musician is published as it has been sent by its author. Matthias Giesen (*1973) was born in Boppard/Rhein in Germany. After the final school examination he studied church music, organ and music theory at the music universities in Cologne, Stuttgart and Vienna. His organ teachers were the Cologne Cathedral organist Clemens Ganz, Johannes Geffert and Bernhard Haas; he studied music theory with Johannes Schild and Friedrich Jaecker. He attended several organ courses with Marie-Claire Alain, Guy Bovet, Lorenzo Ghielmi, Jean-Claude Zehnder, Michael Radulescu etc. In 1998 he won the First Prize of the Bayreuth Organ Competition. After his organ examinations he studied music theory with Martin Eybl at the Vienna University of Music. In 1999 he was organist in several parishes in Cologne (Germany); in August 1999 he was chosen as the organist of the Bruckner Organ of the Augustinian Monastery St Florian/Austria. He is also in charge of the Monastery's music archive. He participated in several recordings for broadcast and television (Italian, Japanese and US-American television, Austrian television ORF). This involvement in many international concerts has contributed to his artistic activity (Austria, Switzerland, Germany, Italy, Poland, Greece, Algeria, Mexico, Slovakia, Russia, Australia etc.). In May 2002 a CD featuring Giesen playing the Bruckner Organ with organ compositions of the 19th century (Liszt, Schumann, Reger and Bruckner transcription) was published. It also includes a transcription of one of Anton Bruckner's works. Several other CD projects are planned. Subsequently, various articles about the organ composers Jean Guillou (in 2001 Giesen made a collaboration project with him), André Jolivet, Leoš Janáček and Max Reger were published. -

Repertoire List Course 11 Contemporary HAAS

Repertoire list for Course No. 11 Contemporary Music Professor: Bernhard Haas · Arnold Schönberg: Variationen op. 40 (Belmont Music Publishers); Fragment der Orgelsonate (Universal Edition) · Anton Webern: Variationen op. 27 (Orgelfassung Xavier Darasse, Bärenreiter) · Philipp Jarnach: Konzertstück Romancero III op. 21 (Schott) · Paul Hindemith: 3 Organ Sonatas (Schott) · Luigi Dallapiccola: Quaderno musicale di Annalibera (version for organ, Suvini Zerboni) · Ernst Krenek: Sonate op. 92, Die vier Winde (Bärenreiter) · Olivier Messiaen: Messe de la Pentecôte; Livre d'orgue (Leduc) · Gilbert Amy: 7 Bagatelles (Amphion); Quasi una Toccata (Universal Edition) · Carola Bauckholt: Gegenwind (Thürmchen Verlag) · John Cage: ASLSP; Souvenir (Peters) · Xavier Darasse: Organum 3 (Salabert); Organum 9 (Lemoine) · Morton Feldman: Principal Sound (Universal Edition) · Johannes Fritsch: IX '99 X (Feedback Studio Verlag, Köln) · Mauricio Kagel: Improvisation ajoutée (Universal Edition); Rrrrrrr... (Peters) · György Kurtág: Orgelstücke and ‘Ligatura x’ from Játékok 6 (Edition Musica Budapest/Boosey & Hawkes) · Thomas Lacôte: ‘Et l'unique cordeau des trompettes marines’ from Études pour orgue Band 1 (Lemoine) · Jean-Pierre Leguay: 19 Préludes; 3ème Sonate (Lemoine) · György Ligeti: Volumina (Peters); Zwei Etüden (Schott) · Robert HP Platz: Stunden:Buch (Ricordi) · Henri Pousseur: Deuxième vue sur les jardins interdits (Suvini Zerboni) · Giacinto Scelsi: In nomine Lucis (Salabert) · Karlheinz Stockhausen: Tierkreis, Version für Akkordinstrument (Stockhausen-Verlag) · Zsigmond Szathmáry: Moving colours (Bärenreiter) · Claude Vivier: Les Communiantes (Boosey & Hawkes) · Christian Wolff: Black Song Organ Preludes (Peters) · Isang Yun: Tuyaux sonores, Fragment (Bote & Bock/ Boosey & Hawkes) Composers are also invited to bring their own compositions! Choose which pieces from this list you want to prepare and let us know by sending an email to [email protected]. -

Abstracts Euromac2014 Eighth European Music Analysis Conference Leuven, 17-20 September 2014

Abstracts EuroMAC2014, Eighth European Music Analysis Conference, Leuven, 17-20 September 2014. www.euromac2014.eu. Edited by Pieter Bergé, Klaas Coulembier, Kristof Boucquet, Jan Christiaens. Graphic Design & Layout: Klaas Coulembier. Photo front cover: © KU Leuven - Rob Stevens. ISBN 978-90-822-61501-6. Stefanie Acevedo, Session 2A, Yale University, [email protected]. Stefanie Acevedo is a PhD student in music theory at Yale University. She received a BM in music composition from the University of Florida, an MM in music theory from Bowling Green State University, and an MA in psychology from the University at Buffalo. Her music theory thesis focused on atonal segmentation. At Buffalo, she worked in the Auditory Perception and Action Lab under Peter Pfordresher, and completed a thesis investigating metrical and motivic interaction in the perception of tonal patterns. Her research interests include musical segmentation and categorization, form, schema theory, and pedagogical applications of cognitive models. A Romantic Turn of Phrase: Listening Beyond 18th-Century Schemata (with Andrew Aziz). The analytical application of schemata to 18th-century music has been widely codified (Meyer, Gjerdingen, Byros), and it has recently been argued by Byros (2009) that a schema-based listening approach is actually a top-down one, as the listener is armed with a script-based toolbox of listening strategies prior to experiencing a composition (gained through previous style exposure). This is in contrast to a plan-based strategy, a bottom-up approach which assumes no a priori schemata toolbox. -

Brahms's Arrangements of Bach's Cantatas

Volume XXXI, Number 2, Fall 2013. “In Concert-Life, the Most Striking, Most Pleasing of Adventures” – Brahms’s Arrangements of Bach’s Cantatas. When in October 1874 Brahms wrote to his friend, the musicologist and Bach specialist Philipp Spitta in the above terms, he was describing his own concert performances of cantatas by Johann Sebastian Bach, specifically his recent performances of Christ lag in Todesbanden BWV 4 and Nun ist das Heil BWV 50. He had begun performing Bach cantatas with the first mixed-voice choir he directed on a regular basis, the Singverein at the Detmold Court (1857–60), and he continued when he got to Vienna with the Singakademie in 1863–64 and the Singverein of the Gesellschaft der Musikfreunde in 1872–75. Table 1 lists all his known performances of Bach’s vocal music, from which we can see that he gave complete or nearly complete performances of five cantatas, of the St. Matthew Passion, and of four of the six parts of the Christmas Oratorio. For these performances, especially for those of the cantatas, Brahms prepared arrangements that met the practical requirements of his time, though he would often do this in imaginatively creative ways. Broadly speaking, he (1) added instruments to support and color Bach’s vocal parts where he thought necessary; (2) replaced or adapted Bach’s high trumpet parts, which were no longer fully playable by late nineteenth-century musicians; (3) wrote continuo realizations; and (4) added performance nuancing (dynamics, articulations, etc.) in accordance with contemporary taste. [Photo caption: Brahms in 1853. Photo courtesy of the Brahms-Institut an der Musikhochschule Lübeck.] Bach cantata arrangements on 2 and 6 September, comprising those works and movements for which the sources are complete and clear, conducted by Hans-Christoph Rademann and Jos -

JULY 2016 St. Joseph's Church in Greenwich Village

THE DIAPASON, JULY 2016. St. Joseph’s Church in Greenwich Village, New York, New York. Cover feature on pages 26–27. THE DIAPASON, Scranton Gillette Communications. One Hundred Seventh Year: No. 7, Whole No. 1280, JULY 2016. Established in 1909. ISSN 0012-2378. An International Monthly Devoted to the Organ, the Harpsichord, Carillon, and Church Music. Joyce Robinson, 847/391-1044; [email protected]; www.TheDiapason.com. CONTENTS, FEATURES: Celebrating Marilyn Keiser at 75, by Steven Egler; Drawings by Jane Johnson. Editor’s Notebook. In this issue: We present Steven Egler’s interview with Marilyn Keiser this month, which also marks Marilyn Keiser’s 75th birthday. We also offer Larry Palmer’s tribute to the late artist Jane Johnson, whose witty drawings of so many musicians have graced these pages numerous times over the years. John Bishop discusses Durham, including its cathedral and the firm of Harrison & Harrison that was based there. John describes his visit to the Harrison & Harrison workshop, their major projects, and how Great Britain uses lottery proceeds a bit differently than we do here. Gavin Black continues his musings on the nature of recordings: whether they are to be objective representations of the composer’s intentions or free expressions by the performer. Gentle reminders: We are pleased to publish events in our calendar (print and on our website) and programs of events that have taken place. As you complete plans for your upcoming concert season, please send them along so that we may mention them. Don’t forget to send us your recital programs (if possible, -

The Diapason, June 2021

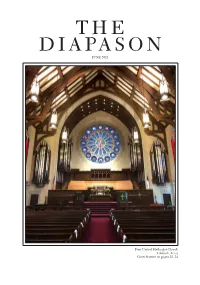

THE DIAPASON, JUNE 2021. First United Methodist Church, Lubbock, Texas. Cover feature on pages 22–24. PHILLIP TRUCKENBROD CONCERT ARTISTS: Charles Miller, President; Phillip Truckenbrod, Founder. THE DIAPASON, Scranton Gillette Communications. One Hundred Twelfth Year: No. 6, Whole No. 1339, JUNE 2021. Established in 1909. ISSN 0012-2378. An International Monthly Devoted to the Organ, the Harpsichord, Carillon, and Church Music. Stephen Schnurr, 847/954-7989; [email protected]; www.TheDiapason.com. CONTENTS, FEATURES: Memories of Charles Hendrickson, by David Engen; Exploring the unknown of BWV 565, Part 1, by Michael Gailit. Editor’s Notebook. Digital edition promotion: As a reminder, through the end of June, those buying a new or renewal one-year subscription receive a free one-year digital subscription for a friend. This offer is valid when purchasing a print or digital subscription; the free subscription is digital only and is intended for a new subscriber. For information and to subscribe: toll free, 877/501-7540; local, 847/763-4933; for new subscriptions: https://sgc.dragonforms.com/DPP_newfriend; for renewals: https://sgc.dragonforms.com/DPP_renewfriend. Calendar of events: The Calendar section of this issue is the largest listing of events in over a year, an encouraging example of a gradual return of the arts in daily life. Next month’s issue will include a summer carillon concert calendar. If you will be hosting summer organ and carillon recitals [...] will be published in the May 2022 issue. Further details will appear in Editor’s Notebook in an upcoming issue. In the meantime, direct questions to Stephen Schnurr, Editorial Director: [email protected]. In this issue: David Engen offers remembrances of Minnesota organbuilder Charles Hendrickson, who died December 17, 2020, at the age of 85. -

Keventsidou.Pdf

Canterbury Christ Church University’s repository of research outputs http://create.canterbury.ac.uk Copyright © and Moral Rights for this thesis are retained by the author and/or other copyright owners. A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. This thesis cannot be reproduced or quoted extensively from without first obtaining permission in writing from the copyright holder/s. The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the copyright holders. When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given e.g. Keventsidou, E. (2016) Max Reger's variations and fugue on an original theme op.73: issues of musical structure, performance practice and interpretation. Ph.D. thesis, Canterbury Christ Church University. Contact: [email protected] Max Reger's Variations and Fugue on an Original Theme Op. 73: Issues of musical structure, performance practice and interpretation by Eleni Keventsidou Canterbury Christ Church University Thesis submitted for the degree of Doctor of Philosophy Year 2016 ACKNOWLEDGMENTS I would like to express my deepest gratitude to all my supervisors, Dr. Robert Rawson, my first supervisor, who has helped me understand, organise my research topic and clarify my arguments. I am profoundly grateful to my second supervisor Dr. Maria Varvarigou, who made me understand that writing is equally rewarding and enthusiastic with performing. I would like to thank Professor Roderick Watkins for his constant support and encouragement. -

Historic Organs of Belgium May 15-26, 2018 12 Days with J

Historic Organs of BELGIUM, May 15-26, 2018, 12 Days with J. Michael Barone. Historic Organs of GERMANY, May 22-June 4, 2019, 14 Days with J. Michael Barone. www.americanpublicmedia.org www.pipedreams.org National broadcasts of Pipedreams are made possible with funding from Mr. & Mrs. Wesley C. Dudley, grants from Walter McCarthy, Clara Ueland, and the Greystone Foundation, the Art and Martha Kaemmer Fund of the HRK Foundation, and Jan Kirchner on behalf of her family foundation, by the contributions of listeners to American Public Media stations nationwide, and by the thirty member organizations of the Associated Pipe Organ Builders of America, APOBA, representing the designers and creators of pipe organs heard throughout the country and around the world, with information at www.apoba.com. See and hear Pipedreams on the Internet 24-7 at www.pipedreams.org. A complete booklet pdf with the tour itinerary can be accessed online at www.pipedreams.org/tour. Table of Contents: Welcome Letter; Bios of Hosts and Organists; The Thuringian Organ Scene; Alphabetical List of Organ Builders; Organ Observations; Tour Itinerary; Tour Members Playing the Organs; Organ Sites; Rooming List; Traveler Profiles; Hotel List; Map (inside back cover). Thanks to the following people for their valuable assistance in creating this tour: Tim Schmutzler; Valerie Bartl, Cynthia Jorgenson, Kristin Sullivan, Janet Tollund, and Tom Witt of Accolades International Tours for the Arts in Minneapolis. -

Concert for Brass & Organ (Konzert für Blechbläser & Orgel)

CONCERT FOR BRASS & ORGAN. AMADEUS BRASS: Johannes Hoffmann, trumpet; Karl Hemmelmayer, trumpet; Andreas Simbeni, horn; Jürgen Sklenar, trombone; Franz Griesler, tuba. ORGAN: Johannes Zeinler. AMADEUS BRASS QUINTETT: Founded in 2000, the five musicians, all based in Lower Austria, play at numerous festivals and give concerts in a wide variety of halls and churches at home and abroad. In 2004 the ensemble, which "makes brass music for listening ears", was awarded the Tullnerfelder Kulturpreis. Alongside annual New Year's concerts with Strauß melodies, they span an arc, with great feeling, from Wolfgang Amadeus Mozart, Joseph Haydn and Franz Schubert to Duke Ellington and Joe Zawinul. CDs: „Von Bach bis Bernstein“ and „Amadeus Brass Quintett spielt Strauß“. Our next dates: Thu 14 July, 19:30, concert at Stift Lilienfeld, Amadeus Brass and friends; Sun 2 October, 16:00, concert at Pfarrkirche Ziersdorf with Elisabeth Ullmann and Cappella Ars Musica. Johannes Zeinler, organ, is currently studying at the Universität für Musik und darstellende Kunst Wien: organ with Pier Damiano Peretti, piano with Christiane Karajev, and church music. At the age of only 18 he won the 18th International Organ Competition for the Bach Prize of the state capital Wiesbaden, followed by further first prizes at the 4th International Franz Schmidt Organ Competition in 2012 and at the International Rheinberger Organ Competition in Liechtenstein in 2013. In 2015 he won first prize at the prestigious organ competition in St Albans (UK), which brings with it various international concert appearances on important organs and a CD production. His concert activity has already taken him to, among others, the Carinthischer Sommer, the Wiesbadener Bach Wochen and the Stiftung Mozarteum Salzburg. Masterclasses with eminent figures such as Jean Guillou, Michel Bouvard, Ton Koopman and Bernhard Haas complement his training. -

ORGEL | ORGUE | ORGANO | ORGAN | 2011: International Symposium on the Importance and Future of the Organ | September 8–11, 2011 in Zurich | PROGRAMME

ORGEL | ORGUE | ORGANO | ORGAN | 2011. International Symposium on the Importance and Future of the Organ, September 8–11, 2011 in Zurich. PROGRAMME. www.orgel2011.ch. Patroness and Patronage Committee. Patroness: Corine Mauch, Mayor of Zurich. Patronage Committee: Christine Egerszegi-Obrist, Council of States; Nikolaus Harnoncourt; Michael Haefliger; Mons. Kurt Cardinal Koch; Pastor Michel Müller-Zwygart, President of the Ecclesiastical Council; Dr. Elmar Weingarten; Prof. Dr. Dr. h.c. Christoph Wolff. Words of Welcome from Corine Mauch, Mayor of Zurich: Welcome to Zurich! Zurich today is a city of music. -

ZGMTH: Zeitschrift der Gesellschaft für Musiktheorie, edited by

ZGMTH, Zeitschrift der Gesellschaft für Musiktheorie, Volume 8, 2011. Edited by Folker Froebe, Michael Polth, Stefan Rohringer and Jan Philipp Sprick. ZGMTH, Zeitschrift der Gesellschaft für Musiktheorie e. V. Academic advisory board: Jean-Michel Bardez (Paris), Nicholas Cook (London), Thomas Christensen (Chicago), Jonathan Cross (Oxford), Hermann Danuser (Berlin), Helga de la Motte-Haber (Berlin), Hartmut Fladt (Berlin), Allen Forte (Yale), Renate Groth (Bonn), Thomas Kabisch (Trossingen), Eckehard Kiem (Freiburg), Clemens Kühn (Dresden), Nicolas Meeús (Paris), Christian Martin Schmidt (Berlin), Michiel Schuijer (Amsterdam). Editors: Folker Froebe, Wilhelm-Brandes-Straße 2, 27570 Bremerhaven, Tel.: + 49(0)471 - 200 290; Michael Polth, Zechnerweg 4, 69118 Heidelberg, Tel.: + 49(0)6221 - 735 33 90; Stefan Rohringer, Ismaningerstraße 82, 81675 München, Tel.: + 49(0)89 - 28 92 74 81; and Jan Philipp Sprick, Willibald-Alexis-Straße 22, 10965 Berlin, Tel.: + 49(0)30 - 61209936. The editors can be reached by e-mail at: [email protected]. Layout: Poli Quintana, [email protected] / Oliver Schwab-Felisch. Set in Linotype Optima. Cover: Oliver Schwab-Felisch. Typesetting: Folker Froebe. Music engraving and graphics: Folker Froebe / Jan Philipp Sprick. Publication frequency: annual. Please send manuscripts and review copies to the editors or to: ZGMTH, c/o Stefan Rohringer, Hochschule für Musik und Theater München, Arcisstraße 12, 80333 München. Available through bookshops or directly from Georg Olms Verlag, Hagentorwall 7, 31134 Hildesheim, Tel.: + 49(0)5121 - 150 10, [email protected], www.olms.de. Prices: single volume €44, subscription €37 (plus shipping costs). For members of the Gesellschaft für Musiktheorie, the purchase price is covered by the membership fee. Advertising: Georg Olms Verlag.
Die Deutsche Bibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available online at http://dnb.ddb.de.