Fast, Precise, Low-Cost and Compact Motion Sensing Using Laser Speckle

Jan Zizka 1,2, Alex Olwal 1, Ramesh Raskar 1

1 MIT Media Lab; 2 Comenius University DAI. 77 Mass

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Emerging Trends in Management, IT and Education ISBN No.: 978-87-941751-2-4

Emerging Trends in Management, IT and Education ISBN No.: 978-87-941751-2-4 Paper 12 IMPLEMENTING PRODUCT DIVERSIFICATION STRATEGIES FOR THE SUSTAINABILITY OF A TECHNOLOGY COMPANY - A CASE OF MICROSOFT CORPORATION Vinayachandra 1 & Krishna Prasad K 2. 1 Research Scholar, College of Computer Science and Information Science, Srinivas University, Mangalore, India; 2 College of Computer Science and Information Science, Srinivas University, Mangalore, India. Abstract: Started in 1975 with a view to developing and selling a BASIC interpreter, Microsoft today develops, produces, licenses, supports and sells software, consumer electronics, personal computers, and allied services worldwide. The company is world-famous for its software products: Windows operating systems, Office suites, IE and Edge. Its notable hardware products are Xbox and the Microsoft Surface family of touch-screen computers. The company has been listed as the top software company by Forbes Global for many years. From its inception to date, the company has maintained a top ranking technology-wise, product-wise, service-wise, revenue-wise, and growth-wise. The company has been able to sustain growth because of the integration and implementation of a product diversification strategy. Over the years the company transformed from just a developer and seller of an interpreter to a producer and marketer of a wide variety of software and hardware products. This paper analyses the strategies the company adopted and incorporated in diversifying its product and services lineup to sustain growth and maintain market stability. It also analyses the relevance and acceptability of different Microsoft products, its customer base, software market share, and near-future strategies. Keywords: Microsoft, strategies, product diversification, Windows, generic, intensive. -

Conference Guide

CANP: Collaborate. Educate. Advocate. 43rd Annual Educational Virtual Conference, April 22-24, 2021. Do you have an extra $304,206 lying around? That’s the average cost of a malpractice claim against a nurse practitioner.* • 55% of malpractice allegations are due to diagnosis and treatment/care management • Adult medical/primary care and family practice account for 53.7% of all malpractice claims • $4,441 is the average cost to defend your license in front of the state licensing board. When you add up all the numbers, NSO equals peace of mind. Nurse Practitioner Professional Liability Insurance. For rates and coverage details: nso.com/canp. Endorsed by: *Nurse Practitioner Claim Report, Fourth Edition 2017. Nurses Service Organization is a registered trade name of Affinity Insurance Services, Inc. (TX 13695); (AR 100106022); in CA & MN, AIS Affinity Insurance Agency, Inc. (CA 0795465); in OK, AIS Affinity Insurance Services Inc.; in CA, Aon Affinity Insurance Services, Inc. (CA 0G94493); Aon Direct Insurance Administrators and Berkely Insurance Agency and in NY, AIS Affinity Insurance Agency. X-14253-0421 CANP21. Welcome: As president of the California Association for Nurse Practitioners (CANP), I am pleased to welcome you to the 43rd Annual Educational Conference. Although we have opted to hold the event virtually this year for the safety of all, it remains the place where the future of health care is addressed and defined. Our theme – Collaborate. Educate. Advocate. – is a true reflection of CANP’s mission. Annually, we gather to share ideas and provide access to information that affects our industry. -

Lightweight Portability for the Task at Hand

Lightweight portability for the task at hand. Meet the new Surface Go 2 for Federal. Always on the go: Work wherever, whenever with ease. The lightest Microsoft Surface 2-in-1 device features a bigger 10.5-inch touchscreen display, either Pentium processors or a newly introduced 8th Gen Intel® Core™ processor option, up to 10 hours of battery life, and still weighs just 1.2 pounds. Equipped for government use: Protect your device with the Kensington BlackBelt Rugged Case with Integrated CAC Reader and military-grade drop protection, or the IOGEAR Smart Card Reader Hub for Surface. These FIPS 201–compliant smart card readers provide a highly secure connection to your network. Work more securely, on your terms: Whether you need to physically disable cameras or just want to better secure all aspects of your corporate endpoints, Surface Enterprise Management Mode (SEMM) provides a scalable, deployable utility to meet this need. Administrators can selectively enable or disable hardware-based components, in addition to boot options, on a per-device basis, all secured via PKI. Warranty support you can trust: Work without worries, knowing you can receive quick and reliable support through Microsoft’s service partnership with ITG. Choose 3-year, 4-year, or 5-year onsite warranties with Keep Your Hard Drive, receive support by the next business day, and remain in full compliance with regulations. Technical details: Dimensions: 9.65” x 6.9” x 0.33” (245 mm x 175 mm x 8.3 mm). Storage: eMMC drive: 64GB (Wi-Fi); solid-state drive (SSD): 128GB (Wi-Fi or LTE); -

Surface Go 2 Fact Sheet May 2020

Surface Go 2 Fact Sheet May 2020 We created Surface Go to bring a more affordable and portable 2-in-1 to the Surface line. Businesses, schools, students and families have chosen Surface Go for its compact design, the versatility of a touchscreen, keyboard, Pen and the added connectivity of LTE Advanced. The newest Surface Go 2 was designed with those same people in mind. Surface Go 2 keeps the same thin, lightweight design, but now offers a larger 10.5” edge-to-edge PixelSense display, improved battery life, dual Studio Mics, a 5MP front-facing camera and faster performance than the original with new Intel 8th generation processors. Surface Go 2 is the perfect companion for your everyday tasks, homework, and play, delivering tablet portability with laptop versatility, all-day battery life, and Windows security for the whole family. Top Features and Benefits • Most Versatile. Surface Go 2 gives you tablet portability and a full laptop experience with built-in Kickstand and Signature Type Cover in one compact design. Starting at just 1.2 pounds (not including Type Cover*), Surface Go 2 is our lightest and most affordable Surface yet, helping you stay connected wherever you are. Connect the Surface Go 2 Signature Type Cover* for a great typing experience when and where you need it, now in a choice of new colors, for fast and accurate typing with an integrated glass trackpad for precise navigation. With Surface Go 2, you have your go-to Microsoft 365 apps at your fingertips: Glance and edit in Word and PowerPoint or take notes in OneNote from anywhere. -

Surface Pro User Guide

Surface Pro User Guide Published: April 30, 2013 Version 1.01 © 2013 Microsoft. All rights reserved. BlueTrack Technology, ClearType, Excel, Hotmail, Internet Explorer, Microsoft, OneNote, Outlook, PowerPoint, SkyDrive, Windows, Xbox, and Xbox Live are registered trademarks of Microsoft Corporation. Surface, VaporMg, Skype, and Wedge are trademarks of Microsoft Corporation. Bluetooth is a registered trademark of Bluetooth SIG, Inc. This document is provided “as-is.” Information in this document, including URL and other Internet Web site references, may change without notice. Contents: Meet Surface Pro; About this guide; Highlights; What is Windows 8 Pro?; Surface accessories; Setup; Plug in and turn on -

Meet Surface Go for Education Portable Power in the Classroom

Meet Surface Go for Education: Portable power in the classroom.

A device as versatile as your students: Surface Go helps students achieve better learning outcomes with a premium 2-in-1 device that balances the performance and portability. • Power through a full day with 9 hours of battery life • Read or watch in tablet mode, type in laptop mode, or draw in studio mode • Type more comfortably with a mechanical backlit keypad on Surface Go Type Cover

Transform classroom time: Innovative and reliable, Surface Go with Microsoft Education helps teachers deliver engaging learning experiences to students and foster creativity. • Provide tools that personalize learning for your students • Customize your lessons and give extra attention to students who need it • Inspire a passion for STEM by ensuring students take advantage of full software, apps, and web tools

Create a safe, manageable IT environment: Surface Go helps promote modern learning as an innovative 2-in-1 while securely integrating with your ecosystem. • Manage data and shared or individually assigned devices with Intune for Education • Get the privacy you should expect with safe searches and no student retargeting • Make the most of your technology investments with devices that optimize the Microsoft 365 experience

Technical specifications. Display: 10” PixelSense™ Display, 1800 x 1200 (217 PPI), 10-point multi-touch, aspect ratio 3:2, Corning® Gorilla® Glass 3, contrast ratio 1500:1. Sensors: ambient light sensor, accelerometer, gyroscope, magnetometer. Dimensions: 9.65" x 6.9" x 0.33" (245mm x -

Microsoft Surface Go

Surface Go Fact Sheet July 2018 We started by looking at the world we live in. It’s filled with spectrums and different perspectives. We see this rich diversity through the different roles we play in our lives. You are a parent, sister, brother, friend, employee, athlete, artist, etc. There are some people who believe you must stay in one place, but we believe in celebrating all these roles by embracing the word AND. You can be a parent AND an entrepreneurial titan. You can be a student AND a social justice warrior. You shouldn’t have to make binary choices that force unnecessary compromise. Surface believes in empowering users to be the most productive in their personal AND professional lives. We are thrilled to introduce our newest, smallest and lightest Surface yet — Surface Go. Packing a punch in 1.15 pounds and a 10-inch PixelSense Display, Surface Go moves with you throughout your day — from the road to your couch. A device that is small AND strong. That can run desktop apps like Office AND casual apps like Spotify and Netflix. You can work the way you want with ink AND touch AND a trackpad. It’s the premium qualities that Surface is known for AND our most accessible price yet. With Surface Go, we made the device smaller while remaining true to what makes Surface unique: versatility, design and performance. Surface Go is the best of Microsoft with the Windows you know and love, and the iconic Surface design with no compromise in premium quality. It’s your notebook for imagination, your best friend on the plane and your family’s trusted confidant. -

Download User Manual for Microsoft 10.8

Surface 3 User Guide With Windows 8.1 Published: June 2015 Version 2.0 © 2015 Microsoft. All rights reserved. BlueTrack Technology, ClearType, Excel, Hotmail, Internet Explorer, Microsoft, OneNote, Outlook, PowerPoint, OneDrive, Windows, Xbox, and Xbox Live are registered trademarks of Microsoft Corporation. Surface and Skype are trademarks of Microsoft Corporation. Bluetooth is a registered trademark of Bluetooth SIG, Inc. Dolby and the double-D symbol are registered trademarks of Dolby Laboratories. This document is provided “as-is.” Information in this document, including URL and other Internet website references, may change without notice. Contents: Meet Surface 3; SURFACE 3 FEATURES; Set up your Surface 3; The basics; POWER AND CHARGING -

Surface Go 2 Product FAQ

Surface Go 2 Product FAQ Microsoft Internal & Partner Use Only Although the information contained in this document is considered public and may be used in discussions with customers, please do not share this document in its entirety. This documentation is proprietary information of Microsoft Corporation, provided for internal and/or partner use, for informational purposes only. Microsoft makes no warranties, either express or implied, in this document. © 2020. Microsoft Corporation. All rights reserved. Contents: Introduction; Ready to get it done; Meet the needs of your business; Connect and collaborate with customers and colleagues; Surface for Business unlocks more value -

Contest Rules

MICROSOFT ONA20 CONTEST OFFICIAL RULES 1. SPONSOR These Official Rules (“Rules”) govern the operation of the Microsoft ONA20 Contest (“Contest”). Microsoft Corporation, One Microsoft Way, Redmond, WA, 98052, USA, is the Contest sponsor (“Sponsor”). 2. DEFINITIONS In these Rules, "Microsoft", "we", "our", and "us" refer to Sponsor and “you” and "yourself" refers to a Contest participant, or the parent/legal guardian of any Contest entrant who has not reached the age of majority to contractually obligate themselves in their legal place of residence. By entering you (your parent/legal guardian if you are not the age of majority in your legal place of residence) agree to be bound by these Rules. 3. ENTRY PERIOD The Contest starts at 12:01 p.m. Pacific Time (PT) on October 6, 2020, and ends at 11:59 p.m. PT on October 18, 2020 (“Entry Period”). 4. ELIGIBILITY This is a trade Contest open only to registered attendees of ONA20 18 years of age or older. Employees and directors of Microsoft Corporation and its subsidiaries, affiliates, advertising agencies, and Contest Parties are not eligible, nor are persons involved in the execution or administration of this promotion, or the family members of each above (parents, children, siblings, spouse/domestic partners, or individuals residing in the same household). Void in Cuba, Iran, North Korea, Sudan, Syria, Region of Crimea, and where prohibited. If you are participating in your capacity as an employee, it is your sole responsibility to comply with your employer’s gift policies. Microsoft will not be party to any disputes or actions related to this matter. -

Surface 2 User Guide with Windows RT 8.1 Software

Surface 2 User Guide With Windows RT 8.1 Software Published: April 2014 Version 2.0 © 2014 Microsoft. All rights reserved. BlueTrack Technology, ClearType, Excel, Hotmail, Internet Explorer, Microsoft, OneNote, Outlook, PowerPoint, OneDrive, Windows, Xbox, and Xbox Live are registered trademarks of Microsoft Corporation. Surface, Skype, and Wedge are trademarks of Microsoft Corporation. Bluetooth is a registered trademark of Bluetooth SIG, Inc. Dolby and the double-D symbol are registered trademarks of Dolby Laboratories. This document is provided “as-is.” Information in this document, including URL and other Internet Web site references, may change without notice. Contents: MEET SURFACE 2; ABOUT THIS GUIDE; SURFACE 2 FEATURES; SET UP YOUR SURFACE; PLUG IN AND TURN ON -

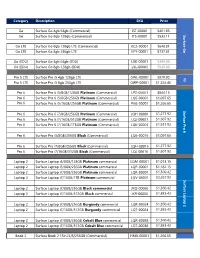

Microsoft-Surface-Price-List.Pdf

| Category | Description | SKU | Price |
|---|---|---|---|
| Go | Surface Go 4gb 64gb (Commercial) | JST-00001 | $401.85 |
| Go | Surface Go 8gb 128gb (Commercial) | JTS-00001 | $533.11 |
| Go LTE | Surface Go 8gb 128gb LTE (Commercial) | KC2-00001 | $648.81 |
| Go LTE | Surface Go 8gb 256gb LTE | KFY-00001 | $737.81 |
| Go (EDU) | Surface Go 4gb 64gb (EDU) | LXK-00001 | $399.00 |
| Go (EDU) | Surface Go 8gb 128gb (EDU) | LXL-00001 | $549.00 |
| Pro 5 LTE | Surface Pro i5 4gb 128gb LTE | GWL-00001 | $970.90 |
| Pro 5 LTE | Surface Pro i5 8gb 256gb LTE | GWP-00001 | $1,224.40 |
| Pro 6 | Surface Pro 6 i5/8GB/128GB Platinum (Commercial) | LPZ-00001 | $844.15 |
| Pro 6 | Surface Pro 6 i5/8GB/256GB Platinum (Commercial) | LQ6-00001 | $1,097.65 |
| Pro 6 | Surface Pro 6 i5/16GB/256GB Platinum (Commercial) | P6G-00001 | $1,266.65 |
| Pro 6 | Surface Pro 6 i7/8GB/256GB Platinum (Commercial) | LQH-00001 | $1,277.92 |
| Pro 6 | Surface Pro 6 i7/16GB/512GB Platinum (Commercial) | LQJ-00001 | $1,607.92 |
| Pro 6 | Surface Pro 6 i7/16GB/1TB Platinum (Commercial) | LQK-00001 | $1,937.92 |
| Pro 6 | Surface Pro i5/8GB/256GB Black (Commercial) | LQ6-00016 | $1,097.65 |
| Pro 6 | Surface Pro i7/8GB/256GB Black (Commercial) | LQH-00016 | $1,277.92 |
| Pro 6 | Surface Pro i7/16GB/512GB Black (Commercial) | LQJ-00016 | $1,607.92 |
| Laptop 2 | Surface Laptop i5/8GB/128GB Platinum commercial | LQM-00001 | $1,013.15 |
| Laptop 2 | Surface Laptop i5/8GB/256GB Platinum commercial | LQP-00001 | $1,182.15 |
| Laptop 2 | Surface Laptop i7/8GB/256GB Platinum commercial | LQR-00001 | $1,360.42 |
| Laptop 2 | Surface Laptop i7/16GB/1TB Platinum commercial | LQV-00001 | $2,267.92 |
| Laptop 2 | Surface Laptop i7/8GB/256GB Black commercial | | |