Best Practice Guidelines and Recommendations for Large-Scale Long-Term Repository Migration; for Preservation of Research Data; for Bit Preservation

Recommended publications

The Concept of Appraisal and Archival Theory

328 American Archivist / Vol. 57 / Spring 1994. Research Article. The Concept of Appraisal and Archival Theory. LUCIANA DURANTI. Abstract: In the last decade, appraisal has become one of the central topics of archival literature. However, the approach to appraisal issues has been primarily methodological and practical. This article discusses the theoretical implications of appraisal as attribution of value to archives, and it bases its argument on the nature of archival material as defined by traditional archival theory. About the author: Luciana Duranti is associate professor in the Master of Archival Studies Program, School of Library, Archival, and Information Studies, at the University of British Columbia. Before leaving Italy for Canada in 1987, she was professor-researcher in the Special School for Archivists and Librarians at the University of Rome. She obtained that position in 1982, after having held archival and teaching posts in the State Archives of Rome and its School, and in the National Research Council. She earned a doctorate in arts from the University of Rome (1973) and graduate degrees in archival science and diplomatics and paleography from the University of Rome (1975) and the School of the State Archives of Rome (1979) respectively. Appraisal is the process of establishing the value of documents made or received in the course of the conduct of affairs, qualifying that value, and determining its duration. [...] preceded by an exploration of the concept of appraisal in the context of archival theory, but only by a continuous reiteration of

Infusing Consumer Data Reuse Practices Into Curation and Preservation Activities

San Diego, CA, 11 August 2012. 76th Annual Meeting of the Society of American Archivists. Infusing Consumer Data Reuse Practices into Curation and Preservation Activities. Ixchel M. Faniel, Ph.D., OCLC Research. This project is possible with funding from the Institute of Museum and Library Services. The world’s libraries. Connected. Agenda: DIPIR: A Project Overview; Reuse Practices of Quantitative Social Scientists & Archaeologists; Q&A. An Overview: The DIPIR Project (www.dipir.org). Research Team: Ixchel Faniel, OCLC Research (PI); Elizabeth Yakel, University of Michigan (Co-PI); Nancy McGovern, ICPSR/MIT; William Fink, UM Museum of Zoology; Eric Kansa, Open Context. Research Motivation: Two Major Goals: 1. Bridge gap between data reuse and digital curation research. 2. Determine whether reuse and curation practices can be generalized across disciplines. Diagram labels: Data reuse research; Disciplines curating and reusing data; Digital curation research. Our interest is in this overlap. Faniel & Yakel (2011). Research Questions: 1. What are the significant properties of data that facilitate reuse in the quantitative social science, archaeology, and ecology communities? 2. How can these significant properties be expressed as representation information to ensure the preservation of meaning and enable data reuse? Research Methodology: Phase 1: Project Start up (Oct 2010 – Jun 2011). Phase 2: Collecting & Analyzing User Data across the Three Sites (May 2011 – Apr 2013). Phase 3: Mapping Significant Properties as Representation Information (Sep 2012 – Sep 2013).

Treasures of the World: Preserving Rare Book Collections

Treasures of the World: Preserving Rare Book Collections. David Glauber, 1 December 2016, Dr. Niu, University of South Florida. Rare books and other cultural artifacts maintained by special collections librarians are of great importance to society. They are historical objects that reflect the development and growth of culture and are desired and sought after by collectors from around the globe (Rinaldo, 2007, p. 38). Books, such as a first edition of William Shakespeare’s plays, the first English language dictionary, and surviving copies of the Gutenberg Bible reflect what may be considered rare books (Robinson, 2012, pp. 513-14). It is the collectable nature of the resources and their value that make a book rare, not just its age. Age is not sufficient to be considered rare, explains librarian Fred C. Robinson as there are “many books that survive in only a few copies [that] are of no value because they are inherently worthless to begin with” (p. 514). In addition to maintaining rare books, special collections librarians, also known as curators, oversee archival materials and fragile materials, which are housed in a special collections department. Within this department, curators create a protective environment for these resources, monitoring lighting conditions and air flow, with the goal of extending the shelf life of these cultural artifacts for generations to come (Cullison & Donaldson, 1987, p. 233). However, collections all over the world face great challenges, including budgetary and space shortages, negligence in recognizing cultural and historical value, challenges in preserving books that are already in fragile, deteriorating condition, and dilemmas over how to provide access to rare books and objects that are of a fragile nature.

Special Collections in the Public Library

UNIVERSITY OF ILLINOIS AT URBANA-CHAMPAIGN. PRODUCTION NOTE: University of Illinois at Urbana-Champaign Library Large-scale Digitization Project, 2007. Library Trends, VOLUME 36, NUMBER 1, SUMMER 1987. University of Illinois Graduate School of Library and Information Science. Where necessary, permission is granted by the copyright owner for libraries and others registered with the Copyright Clearance Center (CCC) to photocopy any article herein for $5.00 per article. Payments should be sent directly to the Copyright Clearance Center, 27 Congress Street, Salem, Massachusetts 10970. Copying done for other than personal or internal reference use, such as copying for general distribution, for advertising or promotional purposes, for creating new collective works, or for resale, without the expressed permission of The Board of Trustees of The University of Illinois is prohibited. Requests for special permission or bulk orders should be addressed to The Graduate School of Library and Information Science, 249 Armory Building, 505 E Armory St., Champaign, Illinois 61820. Serial-fee code: 00242594 87 $3 + .00. Copyright © 1987 The Board of Trustees of The University of Illinois. Recent Trends in Rare Book Librarianship. MICHELE VALERIE CLOONAN, Issue Editor. CONTENTS. I. Recent Trends in Rare Book Librarianship: An Overview. Michele Valerie Cloonan: INTRODUCTION. Sidney E. Berger: WHAT IS SO RARE...: ISSUES IN RARE BOOK LIBRARIANSHIP. II. Advances in Scientific Investigation and Automation. Jeffrey Abt: OBJECTIFYING THE BOOK: THE IMPACT OF SCIENCE ON BOOKS AND MANUSCRIPTS. Paul S. Koda: SCIENTIFIC EQUIPMENT FOR THE EXAMINATION OF RARE BOOKS, MANUSCRIPTS, AND DOCUMENTS. Richard N.

Morality and Nationalism

Morality and Nationalism. This book takes a unique approach to explore the moral foundations of nationalism. Drawing on nationalist writings and examining almost 200 years of nationalism in Ireland and Quebec, the author develops a theory of nationalism based on its role in representation. The study of nationalism has tended towards the construction of dichotomies – arguing, for example, that there are political and cultural, or civic and ethnic, versions of the phenomenon. However, as an object of moral scrutiny this bifurcation makes nationalism difficult to work with. The author draws on primary sources to see how nationalists themselves argued for their cause and examines almost two hundred years of nationalism in two well-known cases, Ireland and Quebec. The author identifies which themes, if any, are common across the various forms that nationalism can take and then goes on to develop a theory of nationalism based on its role in representation. This representation-based approach provides a basis for the moral claim of nationalism while at the same time identifying grounds on which this claim can be evaluated and limited. It will be of strong interest to political theorists, especially those working on nationalism, multiculturalism, and minority rights. The special focus in the book on the Irish and Quebec cases also makes it relevant reading for specialists in these fields as well as for other area studies where nationalism is an issue. Catherine Frost is Assistant Professor of Political Theory at McMaster University, Canada. Routledge Innovations in Political Theory: 1. A Radical Green Political Theory, Alan Carter; 2. Rational Woman: A feminist critique of dualism, Raia Prokhovnik; 3. Rethinking State Theory, Mark J.

Sistine Chapel Coloring Book Pdf Free Download

SISTINE CHAPEL COLORING BOOK PDF, EPUB, EBOOK. Buonarroti Michelangelo | 32 pages | 27 Aug 2004 | Dover Publications Inc. | 9780486433349 | English | New York, United States. Cardinal Edmund Szoka, governor of Vatican City, said: "This restoration and the expertise of the restorers allows us to contemplate the paintings as if we had been given the chance of being present when they were first shown." Bacteria and chemical pollutants are filtered out. The brilliant palette ought to have been expected by the restorers as the same range of colours appears in the works of Giotto, Masaccio and Masolino, Fra Angelico and Piero della Francesca, as well as Ghirlandaio himself and later fresco painters such as Annibale Carracci and Tiepolo. The apparent explanation for this is that over the long period that Michelangelo was at work, he probably, for a variety of reasons, varied his technique. Michelangelo, Sistine Chapel Ceiling, , fresco. Its walls were decorated by a number of Renaissance painters who were among the most highly regarded artists of late 15th century Italy, including Ghirlandaio, Perugino, and Botticelli. Through a study of the main campaigns to adorn the Sistine Chapel, Pfisterer argues that the art transformed the chapel into a pathway to the kingdom of God, legitimizing the absolute authority of the popes.

The Definitive Guide to Automating Content Migration: Migrating Digital Content Without Scripting or Consultants

White Paper. The Definitive Guide to Automating Content Migration: Migrating digital content without scripting or consultants. September 2012. Contents: Executive Summary; Content Migration: The Hidden Challenges; Traditional Content Migration; Content Migration with Kapow Katalyst; Kapow Katalyst Content Migration in Action; Automate Your Content Migration with Kapow Katalyst. Executive Summary: Today’s organizations are adopting Content Management Systems to reduce cost, increase efficiency, and improve customer service, but migrating digital assets into a new CMS can be a significant effort. Too often organizations begin a migration without fully understanding the challenges they face, resulting in slipped deadlines, reduced scope, and even project failure. This white paper will alert you to the key issues that can arise when planning and executing a CM project, and show how Kapow Katalyst™ has addressed those issues, in project after project, and enabled cost and schedule reductions up to 90%. Content Migration: The Hidden Challenges. Today’s organizations depend on sharing a wide variety of digital content, including websites, digital media, and documents. But selecting the right Content Management System to house and manage your digital assets is only the first step; the real challenges arise while migrating content to your new CMS. What makes content migration (CM) so complex, unpredictable, and high-risk? Let’s explore a few reasons. Sidebar: Kapow Katalyst enabled Lenovo to access content held in a diverse group of data stores, including Quest DB, Domino DB, static HTML, and custom .NET applications. Digital Assets May be Missing: It’s not uncommon for assets to be lost or misplaced.

Rudolf Steiner's Theories and Their Translation Into Architecture

Rudolf Steiner’s Theories and Their Translation into Architecture, by Fiona Gray, B.A. Arts (Arch.); B. ARCH. (Deakin). Submitted in fulfilment of the requirements for the degree of Doctor of Philosophy, Deakin University. Submitted for examination January, 2014. Acknowledgements: There are many people to whom I am greatly indebted for the support they have given me throughout this thesis. First among them are my supervisors. Without the encouragement I received from Dr. Mirjana Lozanovska I may never have embarked upon this journey. Her candour and insightful criticism have been immensely valuable and her intelligent engagement with the world of architecture has been a source of inspiration. Associate Professor Ursula de Jong has also made a very substantial contribution. Her astute reading and generous feedback have helped bring clarity and discipline to my thinking and writing. She has been a passionate advocate of my work and a wonderful mentor. Special thanks also to Professor Des Smith, Professor Judith Trimble and Guenter Lehmann for their ongoing interest in my work and the erudition and wisdom they graciously imparted at various stages along the way. My research has also been greatly assisted by a number of people at Deakin University. Thank you to the friendly and helpful library staff, especially those who went to great lengths to source obscure texts tucked away in libraries in all corners of the world. Thanks also to the Research Services Division, particularly Professor Roger Horn for the informative research seminars and much needed writing retreats he facilitated. My ambition to undertake this PhD was made possible by the financial support I received from a Deakin University Science and Technology scholarship.

Modern Domestic Architecture in and Around Ithaca, NY: The “Fallingwaters” of Raymond Viner Hall

MODERN DOMESTIC ARCHITECTURE IN AND AROUND ITHACA, NY: THE “FALLINGWATERS” OF RAYMOND VINER HALL. A Thesis Presented to the Faculty of the Graduate School of Cornell University In Partial Fulfillment of the Requirements for the Degree of Master of Arts by Mahyar Hadighi, January 2014. © 2014 Mahyar Hadighi. ABSTRACT: This research examines the role of Modern architecture in shaping the American dream through the work of a particular architect, Raymond Viner Hall, a Frank Lloyd Wright follower, in Ithaca, NY. Modernists’ ideas and Modern architecture played significant roles in the twentieth century post-depression urban history. Although the historic part of historic preservation does not commonly refer to twentieth century architecture, mid-century Modern architecture is an important part of the history and its preservation is important. Many of these mid-century Modern examples have already been destroyed, mainly because of lack of documentation, lack of general public knowledge, and lack of activity of advocacy groups and preservationists. Attention to the recent past history of Ithaca, New York, which is home of Cornell University and the region this research survey focuses on, is similarly not at the level it should be. Thus, in an attempt to begin to remedy this oversight, and in the capacity of a historic preservation-planning student at Cornell (with a background in architecture), a survey documenting the Modern architecture of the area was conducted. In the process of studying the significant recent history of Ithaca, a very interesting local adaptation of Wrightian architecture was discovered: the projects of Raymond Viner Hall (1908-1981), a semi-local Pennsylvanian architect, who was a Frank Lloyd Wright follower and son of the chief builder of Fallingwater.

Issues Surrounding the Preservation of Digital Music Documents

Issues Surrounding the Preservation of Digital Music Documents. BRENT LEE. SUMMARY: The challenges of preserving musical archives have been complicated in recent decades by the creation of documents in digital form. This digital revolution has affected every stage of traditional musical creation, from the sketching and notation of compositions to the recording of performances. In addition, new forms of uniquely digital music involving composition with computer algorithms, interactive environments, and digital sound synthesis have led to the creation of new varieties of digital documents. This article describes the different types of documents produced today in the course of the musical creation process and sets out the challenges that the passage of time poses for these documents. It focuses specifically on the ability to read them (how can we know whether we will be able to retrieve the digital documents correctly?), on understanding them (how will we be able to know exactly what these documents mean?), and on the match between their representation and their authenticity (how can we know that the reliability, identity, and integrity of the documents have not been compromised in one way or another?). Understanding these challenges is crucial for the preservation of our contemporary musical culture. ABSTRACT: The challenges of preserving musical archives have been complicated over the last few decades by the generation of documents in digital form. The digital revolution has affected all stages of traditional musical creation, from the sketching and notation of compositions to recordings of performances; also, new forms of uniquely digital music involving computer-aided algorithmic composition, interactive environments, and digital sound synthesis have created corresponding new varieties of digital documents.

Content Matrix 9.3 Release Notes February 2021

Metalogix® Content Matrix 9.3 Release Notes, February 2021. These release notes provide information about the latest Metalogix® Content Matrix release.
· New Features · Resolved Issues · Known Issues · System Requirements · Product Licensing · Third Party Contributions · About Us
New Features. Version 9.3 of Metalogix Content Matrix introduces the following features:
· Content Matrix supports Windows Server 2019 and SQL Server 2019.
· The Content Matrix Console client application requires Microsoft .NET Framework 4.7.2 to run.
· Support for the migration of Publishing sites to Communication sites has been added.
· You can convert classic pages to modern pages from within Content Matrix using a solution provided by the PnP Community.
· Support for the migration of classic web parts via the Import Pipeline has been added. (Refer to the SharePoint Edition user documentation for a complete list of supported web parts.)
· An option has been added to the Connect to SharePoint dialog which allows you to connect to an Office 365 tenant that uses a custom domain with OAuth authentication.
· Azure private container naming conventions have been implemented to make it easier to identify and delete containers and queues created by Content Matrix during migrations that use the Import Pipeline.
· The following enhanced security practices have been implemented (which include practices that comply with Federal Information Processing Standards (FIPS)):
  § Users have the option to encrypt a connection to a SQL Server database.
  § An option has been added to the installer which will allow users to run Content Matrix without being a local Administrator on the machine where it is installed.
  § Content Matrix now validates certificates used when making on-premises SSL/TLS connections to SharePoint.

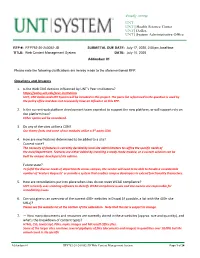

1. Is the Web CMS Decision Influenced by UNT's Peer

Proudly serving: UNT; UNT | Health Science Center; UNT | Dallas; UNT | System Administrative Office
RFP #: RFP752-20-243052-JD
SUBMITTAL DUE DATE: July 17, 2020, 2:00 pm, local time
TITLE: Web Content Management System
DATE: July 10, 2020
Addendum #1
Please note the following clarifications are hereby made to the aforementioned RFP.
Questions and Answers
1. Is the Web CMS decision influenced by UNT’s Peer Institutions? https://policy.unt.edu/peer-institutions
UNT, UNT Dallas and UNT System will be included in this project. The peers list referenced in the question is used by the policy office and does not necessarily have an influence on this RFP.
2. Is the current web platform development team expected to support the new platform, or will support rely on the platform host?
Either option will be considered.
3. Do any of the sites utilize a CDN?
Our theme fonts and some of our modules utilize a 3rd party CDN.
4. How are new features determined to be added to a site?
Current state? The necessity of features is currently decided by local site administrators to meet the specific needs of the area/department. Features are either added by installing a ready-made module, or a custom solution can be built by campus developers/site admins.
Future state? To fulfill the diverse needs of departments across campus, the vendor will need to be able to handle a considerable number of ‘Feature Requests’ or provide a system that enables campus developers to extend functionality themselves.
5. How are remediations put into place when sites do not meet WCAG compliance?
UNT currently uses scanning software to identify WCAG compliance issues and site owners are responsible for remediating issues.