The Power of Higher-Order Composition Languages in System Design


Recommended publications

Notes on Functional Programming with Haskell

Notes on Functional Programming with Haskell H. Conrad Cunningham [email protected] Multiparadigm Software Architecture Group Department of Computer and Information Science University of Mississippi 201 Weir Hall University, Mississippi 38677 USA Fall Semester 2014 Copyright © 1994, 1995, 1997, 2003, 2007, 2010, 2014 by H. Conrad Cunningham Permission to copy and use this document for educational or research purposes of a non-commercial nature is hereby granted provided that this copyright notice is retained on all copies. All other rights are reserved by the author. H. Conrad Cunningham, D.Sc. Professor and Chair Department of Computer and Information Science University of Mississippi 201 Weir Hall University, Mississippi 38677 USA [email protected] PREFACE TO 1995 EDITION I wrote this set of lecture notes for use in the course Functional Programming (CSCI 555) that I teach in the Department of Computer and Information Science at the University of Mississippi. The course is open to advanced undergraduates and beginning graduate students. The first version of these notes were written as a part of my preparation for the fall semester 1993 offering of the course. This version reflects some restructuring and revision done for the fall 1994 offering of the course, or after completion of the class. For these classes, I used the following resources: Textbook: Richard Bird and Philip Wadler. Introduction to Functional Programming, Prentice Hall International, 1988 [2]. These notes more or less cover the material from chapters 1 through 6 plus selected material from chapters 7 through 9. Software: Gofer interpreter version 2.30 (2.28 in 1993) written by Mark P. -

The Haskell School of Expression: Learning Functional Programming Through Multimedia

The Haskell School of Expression: Learning Functional Programming through Multimedia - 2000 - Cambridge University Press, 2000 - 1107268656, 9781107268654 - Paul Hudak Functional programming is a style of programming that emphasizes the use of functions (in contrast to object-oriented programming, which emphasizes the use of objects). It has become popular in recent years because of its simplicity, conciseness, and clarity. This book teaches functional programming as a way of thinking and problem solving, using Haskell, the most popular purely functional language. Rather than using the conventional (boring) mathematical examples commonly found in other programming language textbooks, the author uses examples drawn from multimedia applications, including graphics, animation, and computer music, thus rewarding the reader with working programs for inherently more interesting applications. Aimed at both beginning and advanced programmers, this tutorial begins with a gentle introduction to functional programming and moves rapidly on to more advanced topics. Details about programming in Haskell are presented in boxes throughout the text so they can be easily found and referred to. Practical Aspects of Declarative Languages - Paul Hudak, David S. Warren - 10th International Symposium, PADL 2008, San Francisco, CA, USA, January 7-8, 2008, Proceedings - This book, complete with online files and updates, covers a hugely important area of study in computing. It constitutes the refereed proceedings of the 10th International - ISBN:9783540774419 - Computers - Dec 18, 2007 - 342 pages Advanced Functional Programming - First International Spring School on Advanced Functional Programming Techniques, Bastad, Sweden, May 24 - 30, 1995. -

Modeling User Interfaces in a Functional Language

Abstract Modeling User Interfaces in a Functional Language Antony Alexander Courtney 2004 It is widely recognized that programs with Graphical User Interfaces (GUIs) are difficult to design and implement. One possible reason for this difficulty is the lack of any clear formal basis for GUI programming. GUI toolkit libraries are typically described only informally, in terms of implementation artifacts such as objects, imperative state and I/O systems. In this thesis, we develop Fruit, a Functional Reactive User Interface Toolkit. Fruit is based on Yampa, an adaptation of Functional Reactive Programming (FRP) to the Arrows computational framework. Yampa has a clear, simple formal semantics based on a synchronous dataflow model of computation. GUIs in Fruit are defined compositionally using only the Yampa model and formally tractable mouse, keyboard and picture types. Fruit and Yampa have been implemented as libraries for Haskell, a purely functional programming language. This thesis presents the semantics and implementations of Yampa and Fruit, and shows how they can be used to write concise executable specifications of common GUI programming idioms and complete GUI programs. Modeling User Interfaces in a Functional Language A Dissertation Presented to the Faculty of the Graduate School of Yale University in Candidacy for the Degree of Doctor of Philosophy by Antony Alexander Courtney Dissertation Director: Professor Paul Hudak May 2004 Copyright © 2004 by Antony Alexander Courtney All rights reserved. Contents: Acknowledgments; 1 Introduction; 1.1 Background and Motivation; 1.2 Dissertation Overview; I Foundations; 2 Yampa: A Synchronous Dataflow Language Embedded in Haskell; 2.1 Concepts -

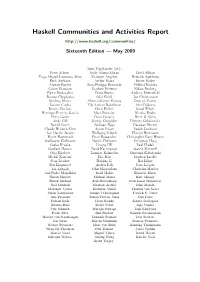

Haskell Communities and Activities Report

Haskell Communities and Activities Report http://www.haskell.org/communities/ Sixteenth Edition — May 2009 Janis Voigtländer (ed.) Peter Achten Andy Adams-Moran Lloyd Allison Tiago Miguel Laureano Alves Krasimir Angelov Heinrich Apfelmus Emil Axelsson Arthur Baars Justin Bailey Alistair Bayley Jean-Philippe Bernardy Clifford Beshers Gwern Branwen Joachim Breitner Niklas Broberg Björn Buckwalter Denis Bueno Andrew Butterfield Roman Cheplyaka Olaf Chitil Jan Christiansen Sterling Clover Alberto Gómez Corona Duncan Coutts Jácome Cunha Nils Anders Danielsson Atze Dijkstra Robert Dockins Chris Eidhof Conal Elliott Henrique Ferreiro García Marc Fontaine Nicolas Frisby Peter Gavin Patai Gergely Brett G. Giles Andy Gill George Giorgidze Dimitry Golubovsky Daniel Gorin Jurriaan Hage Bastiaan Heeren Claude Heiland-Allen Aycan Irican Judah Jacobson Jan Martin Jansen Wolfgang Jeltsch Florian Haftmann Kevin Hammond Enzo Haussecker Christopher Lane Hinson Guillaume Hoffmann Martin Hofmann Creighton Hogg Csaba Hruska Liyang HU Paul Hudak Graham Hutton Farid Karimipour Garrin Kimmell Oleg Kiselyov Lennart Kolmodin Slawomir Kolodynski Michal Konečný Eric Kow Stephen Lavelle Sean Leather Huiqing Li Bas Lijnse Ben Lippmeier Andres Löh Rita Loogen Ian Lynagh John MacFarlane Christian Maeder José Pedro Magalhães Ketil Malde Blažević Mario Simon Marlow Michael Marte Bart Massey Simon Michael Arie Middelkoop Ivan Lazar Miljenovic Neil Mitchell Maarten de Mol Dino Morelli Matthew Naylor Rishiyur Nikhil Thomas van Noort Johan Nordlander Jeremy O’Donoghue Patrick O. -

A Case for Generative Programming and DSLs in Performance Critical Systems

Go Meta! A Case for Generative Programming and DSLs in Performance Critical Systems Tiark Rompf1, Kevin J. Brown2, HyoukJoong Lee2, Arvind K. Sujeeth2, Manohar Jonnalagedda4, Nada Amin4, Georg Ofenbeck5, Alen Stojanov5, Yannis Klonatos3, Mohammad Dashti3, Christoph Koch3, Markus Püschel5, and Kunle Olukotun2 1 Purdue University, USA {first}@purdue.edu 2 Stanford University, USA {kjbrown,hyouklee,asujeeth,kunle}@stanford.edu 3 EPFL DATA, Switzerland {first.last}@epfl.ch 4 EPFL LAMP, Switzerland {first.last}@epfl.ch 5 ETH Zurich, Switzerland {ofgeorg,astojanov,pueschel}@inf.ethz.ch Abstract Most performance critical software is developed using very low-level techniques. We argue that this needs to change, and that generative programming is an effective avenue to enable the use of high-level languages and programming techniques in many such circumstances. 1998 ACM Subject Classification D.1 Programming Techniques Keywords and phrases Performance, Generative Programming, Staging, DSLs Digital Object Identifier 10.4230/LIPIcs.xxx.yyy.p 1 The Cost of Performance Performance critical software is almost always developed in C and sometimes even in assembly. While implementations of high-level languages have come a long way, programmers do not trust them to deliver the same reliable performance. This is bad, because low-level code in unsafe languages attracts security vulnerabilities and development is far less agile and productive. It also means that PL advances are mostly lost on programmers operating under tight performance constraints. Furthermore, in the age of heterogeneous architectures, “big data” workloads and cloud computing, a single hand-optimized C codebase no longer provides the best, or even good, performance across different target platforms with diverse programming models (multi-core, clusters, NUMA, GPU, ...). -

Philip Wadler

Philip Wadler School of Informatics, University of Edinburgh 10 Crichton Street, Edinburgh EH8 9AB, SCOTLAND [email protected] http://homepages.inf.ed.ac.uk/wadler/ +44 131 650 5174 (W), +44 7976 507 543 (M) Citizen of United States and United Kingdom. Born: 1956 Father of Adam and Leora Wadler. Separated from Catherine Lyons. 1 Education 1984. Ph.D., Computer Science, Carnegie-Mellon University. Dissertation title: Listlessness is Better than Laziness. Supervisor: Nico Habermann. Committee: James Morris, Guy Steele, Bill Scherlis. 1979. M.S., Computer Science, Carnegie-Mellon University. 1977. B.S., Mathematics, with honors, Phi Beta Kappa, Stanford University. Awards National Science Foundation Fellow, three year graduate fellowship. 1975 ACM Forsythe Student Paper Competition, first place. 2 Employment 2003–present. Edinburgh University. Professor of Theoretical Computer Science. 2017–present. IOHK. Senior Research Fellow, Area Leader Programming Languages. 1999–2003. Avaya Labs. Member of Technical Staff. 1996–1999. Bell Labs, Lucent Technologies. Member of Technical Staff. 1987–1996. University of Glasgow. Lecturer, 1987–90; Reader, 1990–93; Professor, 1993–96. 1983–1987. Programming Research Group and St. Cross College, Oxford. Visiting Research Fellow and ICL Research Fellow. Awards Most Influential POPL Paper Award 2003 (for 1993) for Imperative Functional Programming by Simon Peyton Jones and Philip Wadler. Royal Society Wolfson Research Merit Award, 2004–2009. Fellow, Royal Society of Edinburgh, 2005–. Fellow, Association for Computing Machinery, 2007–. EUSA Teaching Awards, Overall High Performer, runner up, 2009. 3 Research overview Hybrid trees yield the most robust fruit. My research in programming languages spans theory and practice, using each to fertilize the other. -

Course Information

CS 591/491 Advanced Programming Techniques, Spring 2009 1 Preliminary version of 20 January Course Information Meetings Tuesdays and Thursdays, 2:00–3:15, in FEC345. Instructor Darko Stefanovic, office hours Tuesdays and Thursdays, 3:20–4:00 in ECE 236C. Course topics and format A seminar with readings and presentations on selected topics in typeful functional programming. Readings range from classics to current research, but emphasis is on tutorial articles on programming techniques, principally from the summer schools on advanced functional programming. Reading List / Lecture Plan This is a preliminary list of topics, which will be adjusted to reflect the interests of course participants. The pace will be adjusted as well; we may take more than one class period per paper if the discussion shows this is necessary. The sequence proposed below allows dropping the tail. I expect, however, that we will definitely cover topics 1–15. Any dropped topics will be covered in a seminar next year. Presenter name shown in italics. Week 1-2: Introduction 1 organizational; Haskell in brief [IFPH, Ch1-5; H9] Stefanovic 2 functional programming [H4] [T1] Stefanovic 3 trees with folds, binary heap trees, rose trees [IFPH, Ch6] Stefanovic Week 3: Efficiency 4 lazy evaluation, accumulating parameters, tupling [IFPH, Ch7] 5 fusion and deforestation [IFPH, Ch7] Week 4-6: Data structures 6 amortization; queues and splay heaps [O1] 7 red-black trees [O6] 8 more heap trees [O7] 9 tree traversal [O3] 10 tree access [H1] CS 591/491 Advanced Programming Techniques, -

Haskell Communities and Activities Report

Haskell Communities and Activities Report http://tinyurl.com/haskcar Twenty-Third Edition — November 2012 Janis Voigtländer (ed.) Andreas Abel Heinrich Apfelmus Emil Axelsson Doug Beardsley Jean-Philippe Bernardy Jeroen Bransen Gwern Branwen Joachim Breitner Björn Buckwalter Erik de Castro Lopo Olaf Chitil Duncan Coutts Jason Dagit Nils Anders Danielsson Romain Demeyer Daniel Díaz Atze Dijkstra Adam Drake Sebastian Erdweg Ben Gamari Andy Georges Patai Gergely Brett G. Giles Andy Gill George Giorgidze Torsten Grust Jurriaan Hage Bastiaan Heeren Mike Izbicki PÁLI Gábor János Guillaume Hoffmann Csaba Hruska Paul Hudak Oleg Kiselyov Michal Konečný Eric Kow Ben Lippmeier Andres Löh Hans-Wolfgang Loidl Rita Loogen Ian Lynagh Christian Maeder José Pedro Magalhães Ketil Malde Antonio Mamani Simon Marlow Dino Morelli JP Moresmau Ben Moseley Takayuki Muranushi Jürgen Nicklisch-Franken Tom Nielsen Rishiyur Nikhil Jens Petersen David Sabel Uwe Schmidt Martijn Schrage Tom Schrijvers Andrew G. Seniuk Jeremy Shaw Christian Höner zu Siederdissen Michael Snoyman Doaitse Swierstra Henning Thielemann Sergei Trofimovich Bernhard Urban Marcos Viera Janis Voigtländer Daniel Wagner Greg Weber Kazu Yamamoto Edward Z. Yang Brent Yorgey Preface This is the 23rd edition of the Haskell Communities and Activities Report. As usual, fresh entries are formatted using a blue background, while updated entries have a header with a blue background. Entries for which I received a liveness ping, but which have seen no essential update for a while, have been replaced with online pointers to previous versions. Other entries on which no new activity has been reported for a year or longer have been dropped completely. Please do revive such entries next time if you do have news on them. -

Synthesizing Functional Reactive Programs

Synthesizing Functional Reactive Programs Bernd Finkbeiner (Saarland University, Germany), Felix Klein (Saarland University, Germany), Ruzica Piskac (Yale University, CT, USA), Mark Santolucito (Yale University, CT, USA) Abstract Functional Reactive Programming (FRP) is a paradigm that has simplified the construction of reactive programs. There are many libraries that implement incarnations of FRP, using abstractions such as Applicative, Monads, and Arrows. However, finding a good control flow, that correctly manages state and switches behaviors at the right times, still poses a major challenge to developers. An attractive alternative is specifying the behavior instead of programming it, as made possible by the recently developed logic: Temporal Stream Logic (TSL). However, it has not been explored so far how Control Flow Models (CFMs), resulting from TSL synthesis, are turned into executable code that is compatible with libraries building on FRP. We bridge this gap, by showing that CFMs are a suitable formalism to [...] 1 Introduction Reactive programs implement a broad class of computer systems whose defining element is the continued interaction between the system and its environment. Their importance can be seen through the wide range of applications, such as embedded devices [Helbling and Guyer 2016], games [Perez 2017], robotics [Jing et al. 2016], hardware circuits [Khalimov et al. 2014], GUIs [Czaplicki and Chong 2013], and interactive multimedia [Santolucito et al. 2015]. Functional Reactive Programming (FRP) [Courtney et al. 2003; Elliott and Hudak 1997] is a paradigm for writing programs for reactive systems. The fundamental idea of FRP is to extend the classic building blocks of functional programming with the abstraction of a signal to describe values varying over time. -

Specifying and Verifying Hardware-Based Security Enforcement Mechanisms Thomas Letan

Specifying and Verifying Hardware-based Security Enforcement Mechanisms Thomas Letan To cite this version: Thomas Letan. Specifying and Verifying Hardware-based Security Enforcement Mechanisms. Hardware Architecture [cs.AR]. CentraleSupélec, 2018. English. NNT : 2018CSUP0002. tel-01989940v2 HAL Id: tel-01989940 https://hal.inria.fr/tel-01989940v2 Submitted on 27 May 2020 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Doctoral thesis of CentraleSupélec Rennes, COMUE Université Bretagne Loire, Doctoral School No. 601 Mathématiques et Sciences et Technologies de l'Information et de la Communication. Specialty: Computer Science. By Thomas Letan. Specifying and Verifying Hardware-based Security Enforcement Mechanisms. Thesis presented and defended in Paris on 25 October 2018. Research unit: CIDRE. Thesis No.: 2018-06-TH. Reviewers before the defense: Gilles Barthe (Professor, IMDEA Software Institute); Laurence Pierre (Professor, Université Grenoble Alpes). Jury composition: President: Emmanuelle Encrenaz (Maître de conférences, Sorbonne Université). Members: Gilles Barthe (Professor, IMDEA Software Institute); Laurence Pierre (Professor, Université Grenoble Alpes); Pierre Chifflier (Head of Laboratory, ANSSI); Guilaume Hiet (Maître de conférences, CentraleSupélec Rennes). Thesis director: Ludovic Mé (Professor, Inria Rennes). Invited member: Alastair Reid (Researcher, ARM Ltd.). Abstract In this thesis, we consider a class of security enforcement mechanisms we called Hardware-based Security Enforcement (HSE). -

Imperative Functional Programming

Imperative functional programming. Simon L Peyton Jones, Philip Wadler. Dept of Computing Science, University of Glasgow. Email simonpjwadlerdcsglagsowacuk. October [...]. This paper appears in ACM Symposium on Principles Of Programming Languages (POPL), Charleston, Jan [...], pp [...]. This copy corrects a few minor typographical errors in the published version. Abstract We present a new model, based on monads, for performing input/output in a non-strict, purely functional language. It is composable, extensible, efficient, requires no extensions to the type system, and extends smoothly to incorporate mixed-language working and in-place array updates. Introduction Input/output has always appeared to be one of the less satisfactory features of purely functional languages: fitting action into the functional paradigm feels like fitting a square block into a round hole. Closely related difficulties are associated with performing in-place [...] I/O are constructed by gluing together smaller programs that do so (Section [...]). Combined with higher-order functions and lazy evaluation, this gives a highly expressive medium in which to express I/O-performing computations (Section [...]), quite the reverse of the sentiment with which we began this section. We compare the monadic approach to I/O with other standard approaches: dialogues and continuations (Section [...]), and effect systems and linear types (Section [...]). It is easily extensible. The key to our implementation is to extend Haskell with a single form that allows one to call any procedure written in the programming language C [Kernighan, Ritchie] without -

A Reflection on Types

Bryn Mawr College Scholarship, Research, and Creative Work at Bryn Mawr College Computer Science Faculty Research and Computer Science Scholarship 2016 A Reflection on Types Simon Peyton Jones Stephanie Weirich Richard A. Eisenberg Bryn Mawr College Dimitrios Vytiniotis Let us know how access to this document benefits you. Follow this and additional works at: http://repository.brynmawr.edu/compsci_pubs Part of the Programming Languages and Compilers Commons, and the Software Engineering Commons Custom Citation Simon Peyton Jones, Stephanie Weirich, Richard A. Eisenberg, Dimitrios Vytiniotis, "A Reflection on Types," in A List of Successes That Can Change the World: Essays Dedicated to Philip Wadler on the Occasion of His 60th Birthday, ed. Sam Lindley et al. (Cham: Springer International Publishing, 2016) 292-317. This paper is posted at Scholarship, Research, and Creative Work at Bryn Mawr College. http://repository.brynmawr.edu/compsci_pubs/3 For more information, please contact [email protected]. A reflection on types Simon Peyton Jones1, Stephanie Weirich2, Richard A. Eisenberg2, and Dimitrios Vytiniotis1 1 Microsoft Research, Cambridge 2 Department of Computer and Information Science, University of Pennsylvania Abstract. The ability to perform type tests at runtime blurs the line between statically-typed and dynamically-checked languages. Recent developments in Haskell’s type system allow even programs that use reflection to themselves be statically typed, using a type-indexed runtime representation of types called TypeRep. As a result we can build dynamic types as an ordinary, statically-typed library, on top of TypeRep in an open-world context. 1 Preface If there is one topic that has been a consistent theme of Phil Wadler’s research career, it would have to be types.