MRC Guidance on Developing and Evaluating Complex Interventions



Recommended publications


Durham Research Online

Durham Research Online Deposited in DRO: 17 June 2009 Version of attached file: Accepted Version Peer-review status of attached file: Peer-reviewed Citation for published item: Whitehead, M. and Petticrew, M. and Graham, H. and Macintyre, S. and Bambra, C. and Egan, M. (2004) 'Evidence for public health policy on inequalities II : assembling the evidence jigsaw.', Journal of epidemiology and community health., 58 (10). pp. 817-821. Further information on publisher's website: http://dx.doi.org/10.1136/jech.2003.015297 Publisher's copyright statement: Additional information: Use policy The full-text may be used and/or reproduced, and given to third parties in any format or medium, without prior permission or charge, for personal research or study, educational, or not-for-profit purposes provided that: • a full bibliographic reference is made to the original source • a link is made to the metadata record in DRO • the full-text is not changed in any way The full-text must not be sold in any format or medium without the formal permission of the copyright holders. Please consult the full DRO policy for further details. Durham University Library, Stockton Road, Durham DH1 3LY, United Kingdom Tel : +44 (0)191 334 3042 | Fax : +44 (0)191 334 2971 https://dro.dur.ac.uk Evidence for public health policy on inequalities: II: Assembling the evidence jigsaw Professor Margaret Whitehead Department of Public health University of Liverpool Liverpool L69 3GB Dr. Mark Petticrew MRC Social and Public Health Sciences Unit University of Glasgow Glasgow G12 8RZ Professor Hilary Graham Department of Applied Social Sciences University of Lancaster Lancaster LA1 4YW Professor Sally Macintyre MRC Social and Public Health Sciences Unit University of Glasgow Glasgow G12 8RZ Dr. -

Annual Report 2001

Annual Report 2001 University of Glasgow • 4 Lilybank Gardens • Glasgow G12 8RZ T: +44 (0) 141 357 3949 • F: +44 (0) 141 337 2389 • W: www.msoc-mrc.gla.ac.uk July 2002 The MRC Social & Public Health Sciences Unit The Social and Public Health Sciences Unit (SPHSU) is jointly funded by the UK Medical Research Council, and the Chief Scientist Office at the Department of Health at the Scottish Executive. The Unit's aim is to: Promote human health by the study of social and environmental influences on health. Specific objectives include: • Studying how people's social positions, and their social and physical environments, influence their physical and mental health and capacity to lead healthy lives; • Designing and evaluating interventions aiming to improve public health and reduce social inequalities in health, and; • Influencing policy and practice by communicating the results and implications of research. During 2001 we continued to work towards all three objectives, in particular by expanding our portfolio of evaluation research, engaging directly with policy makers, and grappling with issues around 'what counts as evidence' in public health policy making. We have aimed to contribute especially to the evidence base of Scottish policy-making, and to maintain our wider UK links while developing and sustaining international collaborations. We were pleased to welcome as visiting workers Mehrangiz Ebrahimi-Mameghani from Iran, Kristina Manderbacka and Ilmo Keskimäki from Finland, Matthew Shaw from MRC Gambia, and Deborah Osbourne from Australia, as well as Joy Adamson from the MRC HSRC and Ian Deary from the University of Edinburgh. -

Medical Sociology News Vol 31 No 3 Winter 2005

Medical Sociology News Vol 31 No 3 Winter 2005 Contents: BSA Medical Sociology Group Committee; Editorial: The End of an Era!; New Editorial Team; News and Notices; Forthcoming Events (National and International; Study Groups); Conference Reports; Study Groups’ Annual Reports 2004-05; Articles: Missing Connections: Medical Sociology and Feminism (Ellen Annandale, University of Leicester); Social Structure and Health: A Narrative of Neglect?* (Graham Scambler, University College London); Interview with Professor Sally Macintyre; PhD Abstracts; Book Reviews; Agony Aunt -

MRC Social and Public Health Sciences Unit Annual Report 2007

MRC Social and Public Health Sciences Unit Annual Report 2007 Contents: Current staff, students and co-workers; The West of Scotland Twenty-07 Study; Social and Spatial Patterning of Health; Measuring Health; Ethnicity and Health; Youth and Health; Sexual and Reproductive Health; Gender and Health; Evaluating the Health Effects of Social Interventions; Understandings and Use of Public Health Research; Professional Activities by Unit members 2007; Unit Publications 2007; Presentations 2007. Welcome 2007 was an eventful year for this Unit. The West of Scotland Twenty-07 Study, Health in the Community was set up in 1987 to follow people living in the Central Clydeside Conurbation for 20 years. After much careful preparation, the 20 year follow-up was launched in September and we have been very pleased by the response of the many loyal participants. We were sorry to see the departure of several staff members, including Mark Petticrew to a chair at the London School of Hygiene and Tropical Medicine, David Ogilvie to the MRC Epidemiology Unit in Cambridge, Melissa Whitrow to the University of Adelaide, and Naomi Hemy back to Perth (Australia) to pursue further studies. We were saddened by the untimely death of Louise O’Neill, who had been receptionist at the Unit for 12 years. -

Developing and Evaluating Complex Interventions: New Guidance

Developing and evaluating complex interventions: new guidance Prepared on behalf of the Medical Research Council by: Peter Craig, MRC Population Health Sciences Research Network; Paul Dieppe, Nuffield Department of Orthopaedic Surgery, University of Oxford; Sally Macintyre, MRC Social and Public Health Sciences Unit; Susan Michie, Centre for Outcomes Research and Effectiveness, University College London; Irwin Nazareth, MRC General Practice Research Framework; Mark Petticrew, Department of Public Health and Policy, London School of Hygiene and Tropical Medicine www.mrc.ac.uk/complexinterventionsguidance Contents: Acknowledgements; Summary; Introduction; Part I Key messages; Part II Further questions for evaluators -

MRC Annual Report and Accounts 2016 to 2017

Medical Research Council Annual report and accounts 2016/17 Medical Research Council Annual Report and Accounts 2016/2017 Presented to Parliament pursuant to Paragraph 2 (2) and 3 (3) of Schedule 1 of the Science and Technology Act 1965. Ordered by the House of Commons to be printed on 13 July 2017. HC 174 © Medical Research Council copyright 2017 The text of this document (this excludes, where present, the Royal Arms and all departmental or agency logos) may be reproduced free of charge in any format or medium provided that it is reproduced accurately and not in a misleading context. The material must be acknowledged as Medical Research Council copyright and the document title specified. Where third party material has been identified, permission from the respective copyright holder must be sought. Any enquiries related to this publication should be sent to us at: Medical Research Council, Polaris House, North Star Avenue, Swindon, SN2 1FL Or [email protected] This publication is available at https://www.gov.uk/government/publications Print ISBN 9781474143356 Web ISBN 9781474143363 ID 11041701 07/17 Printed on paper containing 75% recycled fibre content minimum Printed in the UK by the Williams Lea Group on behalf of the Controller of Her Majesty’s Stationery Office Contents Performance report 8 Overview 10 Performance analysis 25 Accountability report 42 Corporate governance report 43 Remuneration and staff report 62 Parliamentary accountability and audit report 75 Financial statements 82 Annual report and accounts 2016/17 -

MRC: in CONFIDENCE HSPHRB Nov 2006

35 MEDICAL RESEARCH COUNCIL MRC: IN CONFIDENCE HSPHRB Nov 2006 Complex Intervention Workshop Report – Revision of MRC Guidance 1. Background An MRC PHSRN-funded workshop on Complex Interventions was held in May 2006 with the aim of discussing the MRC’s ‘Framework for Development and Evaluation of RCTs for Complex Interventions to Improve Health’, and considering whether it needed updating. The workshop was organised by Paul Dieppe (Director, HSRC), Janet Darbyshire (Director, CTU) and Sally MacIntyre (Director, SPHSU), with 30 delegates attending, including the authors of the original document. The report from the workshop (Annex 1) summarises the day’s recommendations, including the overall conclusion that the Framework needed updating. In discussion with the Office and the Board Chairman, the following next steps have been agreed in principle: Paul Dieppe has agreed to be responsible for taking forward the re-drafting of the Framework (Annex 2); draft re-writes of the Framework will be circulated to a subcommittee of Board members and others; the final version of the new Framework document would be submitted to the Board for endorsement within a year. 2. Actions required The Board is asked to: (i) note and comment on the Complex Intervention Workshop report (Annex 1); (ii) note and support the next steps being proposed (Annex 2); and (iii) endorse, in principle, resources being allocated from the Board budget to take this forward. 3. Annexes Annex 1: Complex Intervention Workshop Report Annex 2: Professor Paul Dieppe’s letter outlining the writing group charged with the Framework revisions. DBMs: Professor David Armstrong Professor Max Parmar LO’C/MB Annex 1 MRC PHSRN ‘Complex Interventions Workshop’ May 2006 Workshop Report for the PHSRN and the HSPHRB An MRC PHSRN-funded workshop on Complex Interventions was held at the Goodenough College, London, on 15 and 16 May 2006. -

Annual Report 2013 Institute of Health and Wellbeing at the University of Glasgow 1

Annual Report 2013 Institute of Health and Wellbeing at the University of Glasgow We are grateful to Beverley Bergman, Nicki Boyer, Artidtaya Charoensukkasem, Mariza Dacoron, Phil McLoone, Rich Mitchell, Joanna Stewart, Lorraine Waddell and Yiqiao Xin for their kind permission to use their photographs. Foreword The Institute of Health and Wellbeing is one of seven Research Institutes at the University of Glasgow. It was set up in August 2011. This is our second annual report and describes the achievements of the Institute over the calendar year 2013. In 2013, the Institute held 394 active research grants or contracts, with a total value to the Institute of £47 million. We also won 162 new grants resulting in more than £15.9 million coming to the Institute. Our academic staff published 439 peer-reviewed journal articles and 16 books or book chapters; and we returned 60 category A staff to the Research Excellence Framework: 53 to Unit of Assessment A2 (Public Health, Health Services and Primary Care) and 7 to Unit of Assessment C22 (Social Work and Social Policy). 197 doctoral students were registered with the Institute in 2013: 110 PhD, 8 MD and 79 DClinPsy. The Athena SWAN Self Assessment Team convened in July 2013, chaired by Professor Anna Cooper. They held five meetings over 2013 to complete the initial self assessment and develop an action plan, and submitted an application for a Bronze award in early 2014. The Institute continues to actively engage with stakeholders locally and globally. We are now collaborating with 73 countries across the world. We are indebted to our International Scientific Advisory Board (Appendix -

Childhood IQ and Life Course Socioeconomic Position in Relation to Alcohol Induced Hangovers in Adulthood: the Aberdeen Children of the 1950s Study

Downloaded from jech.bmj.com on 15 March 2007 Childhood IQ and life course socioeconomic position in relation to alcohol induced hangovers in adulthood: the Aberdeen children of the 1950s study G David Batty, Ian J Deary and Sally Macintyre J. Epidemiol. Community Health 2006;60;872-874 doi:10.1136/jech.2005.045039 Updated information and services can be found at: http://jech.bmj.com/cgi/content/full/60/10/872 These include: References: This article cites 17 articles, 9 of which can be accessed free at: http://jech.bmj.com/cgi/content/full/60/10/872#BIBL 3 online articles that cite this article can be accessed at: http://jech.bmj.com/cgi/content/full/60/10/872#otherarticles Rapid responses: You can respond to this article at: http://jech.bmj.com/cgi/eletter-submit/60/10/872 Email alerting service: Receive free email alerts when new articles cite this article - sign up in the box at the top right corner of the article Topic collections: Articles on similar topics can be found in the following collections: Alcohol (293 articles), Socioeconomic Determinants of Health (866 articles) Notes: To order reprints of this article go to: http://www.bmjjournals.com/cgi/reprintform To subscribe to Journal of Epidemiology and Community Health go to: http://www.bmjjournals.com/subscriptions/ SHORT REPORT -

Timetable (PDF)

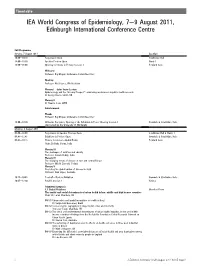

Timetable IEA World Congress of Epidemiology, 7-9 August 2011, Edinburgh International Conference Centre Full Programme Sunday, 7 August 2011 Location 10:00-19:30 Registration Open Strathblane Hall 14:00-19:30 Speaker Preview Open Harris 1 16:00-18:00 Opening Ceremony & Plenary Session 1 Pentland Suite Welcome Professor Raj Bhopal, Conference Committee Chair Opening Professor Neil Pearce, IEA President Plenary I: John Snow Lecture Epidemiology and the “Gloomy Prospect”: embracing randomness in public health research Dr George Davey Smith, UK Plenary II Dr Claudia Stein, WHO Entertainment Thanks Professor Raj Bhopal, Conference Committee Chair 18:00-19:30 Welcome Reception, Opening of the Exhibition & Poster Viewing Session 1 Cromdale & Strathblane Halls (Sponsored by the University of Edinburgh) Monday, 8 August 2011 08:00-18:00 Registration & Speaker Preview Open Strathblane Hall & Harris 1 08:45-17:45 Exhibition & Posters Open Cromdale & Strathblane Halls 08:45-10:15 Plenary Session 2: Global Health Pentland Suite Chair: Dr Babu Verma, India Plenary III The challenges of nutrition and obesity Professor Srinath Reddy, India Plenary IV The changing trends of disease in east and central Europe Professor Witold Zatonski, Poland Plenary V Predicting the global burden of disease in 2030 Professor Alan Lopez, Australia 10:15-10:45 Tea/Coffee/Posters/Exhibition Cromdale & Strathblane Halls 10:45-12:30 Parallel Session 1 Various Submitted symposia 1.1 Global Problems Moorfoot Room The spatial and social determinants of urban health in low, middle -

Project Contributors

Health of the public in 2040: project contributors Job titles and affiliations were correct at the time of engagement with the project. Visioning and drivers analysis workshop: November 2014 • Professor Dame Anne Johnson DBE FMedSci [Chair] Chair of the Population and Lifelong Health Domain, and Vice-Dean for External and International Relations, Faculty of Population Health Sciences, UCL • Ms Gill Ringland [Facilitator] CEO & Fellow, SAMI Consulting • Mr David Lye [Facilitator] Director & Fellow, SAMI Consulting • Dr Rob Aldridge Public Health Doctor; Research Training Fellow in Epidemiology, UCL • Professor Carol Brayne FMedSci Director of the Cambridge Institute of Public Health, University of Cambridge • Ms Amitti CanagaRetna Strategy Advisor, Department of Health • Mr Martin Champion Portfolio Manager, Healthcare Technologies Theme, Engineering and Physical Sciences Research Council • Ms Rowan Conway Director of Connected Communities, Royal Society for the Encouragement of Arts, Manufactures and Commerce • Professor Rachel Cooper OBE Professor of Design Management, University of Lancaster • Professor Michael Depledge Chair of Environment and Human Health, University of Exeter Medical School • Professor Yvonne Doyle Regional Director for London, Public Health England • Ms Jacqui Farnham Producer/Director, BBC Factual 1 • Ms Susie Fisher Director, The Susie Fisher Group • Professor David Ford Professor of Health Informatics, Swansea University • Mr Tom Gentry Policy Adviser, Health Services, Age UK • Ms Kate Halliwell Nutrition Manager, -

Macintyre, S., Macdonald, L. and Ellaway, A. (2008) Lack of Agreement Between Measured and Self-Reported Distance from Public Green Parks in Glasgow, Scotland

Macintyre, S., MacDonald, L. and Ellaway, A. (2008) Lack of agreement between measured and self-reported distance from public green parks in Glasgow, Scotland. International Journal of Behavioral Nutrition and Physical Activity, 5 (1). p. 26. ISSN 1479-5868 http://eprints.gla.ac.uk/42781/ Deposited on: 28 October 2010 Enlighten – Research publications by members of the University of Glasgow http://eprints.gla.ac.uk International Journal of Behavioral Nutrition and Physical Activity BioMed Central Research Open Access Lack of agreement between measured and self-reported distance from public green parks in Glasgow, Scotland Sally Macintyre*, Laura Macdonald and Anne Ellaway Address: MRC Social & Public Health Sciences Unit, 4 Lilybank Gardens, Glasgow, G12 8RZ, UK Email: Sally Macintyre* - [email protected]; Laura Macdonald - [email protected]; Anne Ellaway - [email protected] * Corresponding author Published: 4 May 2008 Received: 31 October 2007 Accepted: 4 May 2008 International Journal of Behavioral Nutrition and Physical Activity 2008, 5:26 doi:10.1186/1479-5868-5-26 This article is available from: http://www.ijbnpa.org/content/5/1/26 © 2008 Macintyre et al; licensee BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Abstract Background: Reviews have reported mixed findings for associations between physical activity and proximity to a range of environmental resources. Initially most studies used self reported proximity, but more are now using GIS techniques to measure proximity objectively.