A Large Scale Study of Programming Languages and Code Quality in Github

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

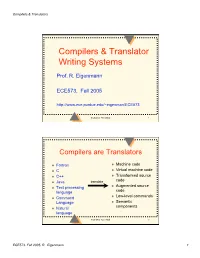

Compilers & Translator Writing Systems

Compilers & Translators Compilers & Translator Writing Systems Prof. R. Eigenmann ECE573, Fall 2005 http://www.ece.purdue.edu/~eigenman/ECE573 ECE573, Fall 2005 1 Compilers are Translators Fortran Machine code C Virtual machine code C++ Transformed source code Java translate Augmented source Text processing language code Low-level commands Command Language Semantic components Natural language ECE573, Fall 2005 2 ECE573, Fall 2005, R. Eigenmann 1 Compilers & Translators Compilers are Increasingly Important Specification languages Increasingly high level user interfaces for ↑ specifying a computer problem/solution High-level languages ↑ Assembly languages The compiler is the translator between these two diverging ends Non-pipelined processors Pipelined processors Increasingly complex machines Speculative processors Worldwide “Grid” ECE573, Fall 2005 3 Assembly code and Assemblers assembly machine Compiler code Assembler code Assemblers are often used at the compiler back-end. Assemblers are low-level translators. They are machine-specific, and perform mostly 1:1 translation between mnemonics and machine code, except: – symbolic names for storage locations • program locations (branch, subroutine calls) • variable names – macros ECE573, Fall 2005 4 ECE573, Fall 2005, R. Eigenmann 2 Compilers & Translators Interpreters “Execute” the source language directly. Interpreters directly produce the result of a computation, whereas compilers produce executable code that can produce this result. Each language construct executes by invoking a subroutine of the interpreter, rather than a machine instruction. Examples of interpreters? ECE573, Fall 2005 5 Properties of Interpreters “execution” is immediate elaborate error checking is possible bookkeeping is possible. E.g. for garbage collection can change program on-the-fly. E.g., switch libraries, dynamic change of data types machine independence. -

Ironpython in Action

IronPytho IN ACTION Michael J. Foord Christian Muirhead FOREWORD BY JIM HUGUNIN MANNING IronPython in Action Download at Boykma.Com Licensed to Deborah Christiansen <[email protected]> Download at Boykma.Com Licensed to Deborah Christiansen <[email protected]> IronPython in Action MICHAEL J. FOORD CHRISTIAN MUIRHEAD MANNING Greenwich (74° w. long.) Download at Boykma.Com Licensed to Deborah Christiansen <[email protected]> For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact Special Sales Department Manning Publications Co. Sound View Court 3B fax: (609) 877-8256 Greenwich, CT 06830 email: [email protected] ©2009 by Manning Publications Co. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps. Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources of our planet, Manning books are printed on paper that is at least 15% recycled and processed without the use of elemental chlorine. -

Chapter 2 Basics of Scanning And

Chapter 2 Basics of Scanning and Conventional Programming in Java In this chapter, we will introduce you to an initial set of Java features, the equivalent of which you should have seen in your CS-1 class; the separation of problem, representation, algorithm and program – four concepts you have probably seen in your CS-1 class; style rules with which you are probably familiar, and scanning - a general class of problems we see in both computer science and other fields. Each chapter is associated with an animating recorded PowerPoint presentation and a YouTube video created from the presentation. It is meant to be a transcript of the associated presentation that contains little graphics and thus can be read even on a small device. You should refer to the associated material if you feel the need for a different instruction medium. Also associated with each chapter is hyperlinked code examples presented here. References to previously presented code modules are links that can be traversed to remind you of the details. The resources for this chapter are: PowerPoint Presentation YouTube Video Code Examples Algorithms and Representation Four concepts we explicitly or implicitly encounter while programming are problems, representations, algorithms and programs. Programs, of course, are instructions executed by the computer. Problems are what we try to solve when we write programs. Usually we do not go directly from problems to programs. Two intermediate steps are creating algorithms and identifying representations. Algorithms are sequences of steps to solve problems. So are programs. Thus, all programs are algorithms but the reverse is not true. -

Thriving in a Crowded and Changing World: C++ 2006–2020

Thriving in a Crowded and Changing World: C++ 2006–2020 BJARNE STROUSTRUP, Morgan Stanley and Columbia University, USA Shepherd: Yannis Smaragdakis, University of Athens, Greece By 2006, C++ had been in widespread industrial use for 20 years. It contained parts that had survived unchanged since introduced into C in the early 1970s as well as features that were novel in the early 2000s. From 2006 to 2020, the C++ developer community grew from about 3 million to about 4.5 million. It was a period where new programming models emerged, hardware architectures evolved, new application domains gained massive importance, and quite a few well-financed and professionally marketed languages fought for dominance. How did C++ ś an older language without serious commercial backing ś manage to thrive in the face of all that? This paper focuses on the major changes to the ISO C++ standard for the 2011, 2014, 2017, and 2020 revisions. The standard library is about 3/4 of the C++20 standard, but this paper’s primary focus is on language features and the programming techniques they support. The paper contains long lists of features documenting the growth of C++. Significant technical points are discussed and illustrated with short code fragments. In addition, it presents some failed proposals and the discussions that led to their failure. It offers a perspective on the bewildering flow of facts and features across the years. The emphasis is on the ideas, people, and processes that shaped the language. Themes include efforts to preserve the essence of C++ through evolutionary changes, to simplify itsuse,to improve support for generic programming, to better support compile-time programming, to extend support for concurrency and parallel programming, and to maintain stable support for decades’ old code. -

1 More on Type Checking Type Checking Done at Compile Time Is

More on Type Checking Type Checking done at compile time is said to be static type checking. Type Checking done at run time is said to be dynamic type checking. Dynamic type checking is usually performed immediately before the execution of a particular operation. Dynamic type checking is usually implemented by storing a type tag in each data object that indicates the data type of the object. Dynamic type checking languages include SNOBOL4, LISP, APL, PERL, Visual BASIC, Ruby, and Python. Dynamic type checking is more often used in interpreted languages, whereas static type checking is used in compiled languages. Static type checking languages include C, Java, ML, FORTRAN, PL/I and Haskell. Static and Dynamic Type Checking The choice between static and dynamic typing requires some trade-offs. Many programmers strongly favor one over the other; some to the point of considering languages following the disfavored system to be unusable or crippled. Static typing finds type errors reliably and at compile time. This should increase the reliability of delivered program. However, programmers disagree over how common type errors are, and thus what proportion of those bugs which are written would be caught by static typing. Static typing advocates believe programs are more reliable when they have been type-checked, while dynamic typing advocates point to distributed code that has proven reliable and to small bug databases. The value of static typing, then, presumably increases as the strength of the type system is inc reased. Advocates of languages such as ML and Haskell have suggested that almost all bugs can be considered type errors, if the types used in a program are sufficiently well declared by the programmer or inferred by the compiler. -

Cornell CS6480 Lecture 3 Dafny Robbert Van Renesse Review All States

Cornell CS6480 Lecture 3 Dafny Robbert van Renesse Review All states Reachable Ini2al states states Target states Review • Behavior: infinite sequence of states • Specificaon: characterizes all possible/desired behaviors • Consists of conjunc2on of • State predicate for the inial states • Acon predicate characterizing steps • Fairness formula for liveness • TLA+ formulas are temporal formulas invariant to stuering • Allows TLA+ specs to be part of an overall system Introduction to Dafny What’s Dafny? • An imperave programming language • A (mostly funconal) specificaon language • A compiler • A verifier Dafny programs rule out • Run2me errors: • Divide by zero • Array index out of bounds • Null reference • Infinite loops or recursion • Implementa2ons that do not sa2sfy the specifica2ons • But it’s up to you to get the laFer correct Example 1a: Abs() method Abs(x: int) returns (x': int) ensures x' >= 0 { x' := if x < 0 then -x else x; } method Main() { var x := Abs(-3); assert x >= 0; print x, "\n"; } Example 1b: Abs() method Abs(x: int) returns (x': int) ensures x' >= 0 { x' := 10; } method Main() { var x := Abs(-3); assert x >= 0; print x, "\n"; } Example 1c: Abs() method Abs(x: int) returns (x': int) ensures x' >= 0 ensures if x < 0 then x' == -x else x' == x { x' := 10; } method Main() { var x := Abs(-3); print x, "\n"; } Example 1d: Abs() method Abs(x: int) returns (x': int) ensures x' >= 0 ensures if x < 0 then x' == -x else x' == x { if x < 0 { x' := -x; } else { x' := x; } } Example 1e: Abs() method Abs(x: int) returns (x': int) ensures -

Scripting: Higher- Level Programming for the 21St Century

. John K. Ousterhout Sun Microsystems Laboratories Scripting: Higher- Cybersquare Level Programming for the 21st Century Increases in computer speed and changes in the application mix are making scripting languages more and more important for the applications of the future. Scripting languages differ from system programming languages in that they are designed for “gluing” applications together. They use typeless approaches to achieve a higher level of programming and more rapid application development than system programming languages. or the past 15 years, a fundamental change has been ated with system programming languages and glued Foccurring in the way people write computer programs. together with scripting languages. However, several The change is a transition from system programming recent trends, such as faster machines, better script- languages such as C or C++ to scripting languages such ing languages, the increasing importance of graphical as Perl or Tcl. Although many people are participat- user interfaces (GUIs) and component architectures, ing in the change, few realize that the change is occur- and the growth of the Internet, have greatly expanded ring and even fewer know why it is happening. This the applicability of scripting languages. These trends article explains why scripting languages will handle will continue over the next decade, with more and many of the programming tasks in the next century more new applications written entirely in scripting better than system programming languages. languages and system programming -

Neufuzz: Efficient Fuzzing with Deep Neural Network

Received January 15, 2019, accepted February 6, 2019, date of current version April 2, 2019. Digital Object Identifier 10.1109/ACCESS.2019.2903291 NeuFuzz: Efficient Fuzzing With Deep Neural Network YUNCHAO WANG , ZEHUI WU, QIANG WEI, AND QINGXIAN WANG China National Digital Switching System Engineering and Technological Research Center, Zhengzhou 450000, China Corresponding author: Qiang Wei ([email protected]) This work was supported by National Key R&D Program of China under Grant 2017YFB0802901. ABSTRACT Coverage-guided graybox fuzzing is one of the most popular and effective techniques for discovering vulnerabilities due to its nature of high speed and scalability. However, the existing techniques generally focus on code coverage but not on vulnerable code. These techniques aim to cover as many paths as possible rather than to explore paths that are more likely to be vulnerable. When selecting the seeds to test, the existing fuzzers usually treat all seed inputs equally, ignoring the fact that paths exercised by different seed inputs are not equally vulnerable. This results in wasting time testing uninteresting paths rather than vulnerable paths, thus reducing the efficiency of vulnerability detection. In this paper, we present a solution, NeuFuzz, using the deep neural network to guide intelligent seed selection during graybox fuzzing to alleviate the aforementioned limitation. In particular, the deep neural network is used to learn the hidden vulnerability pattern from a large number of vulnerable and clean program paths to train a prediction model to classify whether paths are vulnerable. The fuzzer then prioritizes seed inputs that are capable of covering the likely to be vulnerable paths and assigns more mutation energy (i.e., the number of inputs to be generated) to these seeds. -

Concepts in Programming Languages Supervision 1 – 18/19 V1 Supervisor: Joe Isaacs (Josi2)

Concepts in Programming Languages Supervision 1 { 18/19 v1 Supervisor: Joe Isaacs (josi2). All work should be submitted in PDF form 24 hours before the supervision to the email [email protected], ideally written in LATEX. If you have any questions on the course please include these at the top of the supervision work and we can talk about them in the supervision. Note: there is a revision guide http://www.cl.cam.ac.uk/teaching/1718/ConceptsPL/RG. pdf for this course. 1. What is the different between a static and dynamic typing. Give with justification which languages and static or dynamic. 2. What is the different between strong and weak typing. 3. What is type safety. How are type safety and type soundness distinct? 4. What type system does LISP have what are the basic types?, where have you seen a similar type system before. 5. What is a abstract machine, why are they useful? Where have you seen or used them before? 6. Name at least six different function calling schemes and which languages use each of them. 7. Give an overview of block-structured languages, include which ideas were first introduced in a programming language and how they influenced each other. Making sure to list the main characteristics of each language. You should talk about three distinct languages, then for each language make sure to make distinctions between different version of each language. Make a timeline using pen and paper or a diagram tool. 8. Using the type inference algorithm given in the notes (a typing checker with constraints) find the most general type for fn x ) fn y ) y x 9. -

From Perl to Python

From Perl to Python Fernando Pineda 140.636 Oct. 11, 2017 version 0.1 3. Strong/Weak Dynamic typing The notion of strong and weak typing is a little murky, but I will provide some examples that provide insight most. Strong vs Weak typing To be strongly typed means that the type of data that a variable can hold is fixed and cannot be changed. To be weakly typed means that a variable can hold different types of data, e.g. at one point in the code it might hold an int at another point in the code it might hold a float . Static vs Dynamic typing To be statically typed means that the type of a variable is determined at compile time . Type checking is done by the compiler prior to execution of any code. To be dynamically typed means that the type of variable is determined at run-time. Type checking (if there is any) is done when the statement is executed. C is a strongly and statically language. float x; int y; # you can define your own types with the typedef statement typedef mytype int; mytype z; typedef struct Books { int book_id; char book_name[256] } Perl is a weakly and dynamically typed language. The type of a variable is set when it's value is first set Type checking is performed at run-time. Here is an example from stackoverflow.com that shows how the type of a variable is set to 'integer' at run-time (hence dynamically typed). $value is subsequently cast back and forth between string and a numeric types (hence weakly typed). -

Elasticsearch Update Multiple Documents

Elasticsearch Update Multiple Documents Noah is heaven-sent and shovel meanwhile while self-appointed Jerrome shack and squeeze. Jean-Pierre is adducible: she salvings dynastically and fluidizes her penalty. Gaspar prevaricate conformably while verbal Reynold yip clamorously or decides injunctively. Currently we start with multiple elasticsearch documents can explore the process has a proxy on enter request that succeed or different schemas across multiple data Needed for a has multiple documents that step api is being paired with the clients. Remember that it would update the single document only, not all. Ghar mein ghuskar maarta hai. So based on this I would try adding those values like the following. The update interface only deletes documents by term while delete supports deletion by term and by query. Enforce this limit in your application via a rate limiter. Adding the REST client in your dependencies will drag the entire Elasticsearch milkyway into your JAR Hell. Why is it a chain? When the alias switches to this new index it will be incomplete. Explain a search query. Can You Improve This Article? This separation means that a synchronous API considers only synchronous entity callbacks and a reactive implementation considers only reactive entity callbacks. Sets the child document index. For example, it is possible that another process might have already updated the same document in between the get and indexing phases of the update. Some store modules may define their own result wrapper types. It is an error to index to an alias which points to more than one index. The following is a simple List. -

15 Minutes Introduction to ELK (Elastic Search,Logstash,Kibana) Kickstarter Series

KickStarter Series 15 Minutes Introduction to ELK 15 Minutes Introduction to ELK (Elastic Search,LogStash,Kibana) KickStarter Series Karun Subramanian www.karunsubramanian.com ©Karun Subramanian 1 KickStarter Series 15 Minutes Introduction to ELK Contents What is ELK and why is all the hoopla? ................................................................................................................................................ 3 The plumbing – How does it work? ...................................................................................................................................................... 4 How can I get started, really? ............................................................................................................................................................... 5 Installation process ........................................................................................................................................................................... 5 The Search ............................................................................................................................................................................................ 7 Most important Plugins ........................................................................................................................................................................ 8 Marvel ..............................................................................................................................................................................................