The 5th Annual North American Conference on Video Game Music

Recommended publications

TITLE Integrating Technology Into the K-12 Music Curriculum. INSTITUTION Washington Office of the State Superintendent of Public Instruction, Olympia

DOCUMENT RESUME
ED 343 837 SO 022 027
TITLE: Integrating Technology Into the K-12 Music Curriculum.
INSTITUTION: Washington Office of the State Superintendent of Public Instruction, Olympia.
PUB DATE: Jun 90
NOTE: 247p.
PUB TYPE: Guides - Non-Classroom Use (055)
EDRS PRICE: MF01/PC10 Plus Postage.
DESCRIPTORS: Computer Assisted Instruction; *Computer Software; Computer Uses in Education; Curriculum Development; Educational Resources; *Educational Technology; Elementary Secondary Education; *Music Education; *State Curriculum Guides; Student Educational Objectives
IDENTIFIERS: *Musical Instrument Digital Interface; *Washington
ABSTRACT: This guide is intended to provide resources for integrating technology into the K-12 music curriculum. The focus of the guide is on computer software and the use of MIDI (Musical Instrument Digital Interface) in the music classroom. The guide gives two examples of commercially available curricula that integrate the technology, as well as lesson plans that incorporate technology on the subjects of music fundamentals, aural literacy, composition, and music history. The guide provides ERIC listings and literature concerning computer assisted instruction. Ten appendices containing information on music-related technology are provided, including lists of software, software publishers, books and videos, MIDI equipment manufacturers, and book and periodical publishers. (DB)
Reproductions supplied by EDRS are the best that can be made from the original document.

Frédéric Chopin, from Wikipedia, the Free Encyclopedia (Redirected from «Chopin»)

Frédéric Chopin. From Wikipedia, the free encyclopedia (Redirected from «Chopin»)

Fryderyk Franciszek Chopin (Szopen; in French, Frédéric François Chopin; born Żelazowa Wola, Grand Duchy of Warsaw, 1 March or 22 February 1810; died Paris, 17 October 1849) was a Polish composer and virtuoso pianist, considered one of the most important in history and one of the greatest representatives of musical Romanticism. His perfect technique, stylistic refinement, and harmonic elaboration have historically been compared, for their lasting influence on the music of later times, with those of Johann Sebastian Bach, Wolfgang Amadeus Mozart, Ludwig van Beethoven, and Franz Liszt.

Photograph caption: The only photograph of Chopin (see below for a daguerreotype from 1846). It is believed to have been taken in 1849, shortly before his death, by the photographer Louis-Auguste Bisson.

General data
Real name: Fryderyk Franciszek Chopin
Birth: 1 March 1810, Żelazowa Wola, Grand Duchy of Warsaw
Death: 17 October 1849 (aged 39), Paris, French Second Republic
Occupation: Pianist, composer
Artistic information
Genre(s): Musical Romanticism

Contents
1 Biography
1.1 Childhood
1.2 Adolescence
1.3 Vienna and the Uprising in Poland
1.4 Paris
1.5 Success in Europe
1.6 Chopin as a teacher
1.7 Love and engagement
1.8 George Sand
1.9 Mallorca
1.10 Paris again
1.11 The beginning of the end
1.12 The swan song
1.13 Death
2 His music
2.1 Chopin and the piano
2.2 Chopin and Romanticism
2.3 Concertante works
2.4 Polish music: polonaises, mazurkas, and others
2.5 Waltzes and other dances
2.6 Other works
3 Chopin in popular culture
3.1 Chopin in film
4 See also
5 References
5.1 Notes
6 Bibliography
7 External links

Biography
Childhood
Frédéric Chopin was born in the village of Żelazowa Wola, in the Masovian Voivodeship, 60 kilometres from Warsaw in central Poland, on a small estate owned by Count Skarbek, which was part of the Grand Duchy of Warsaw.

THIEF PS4 Manual

See important health and safety warnings in the system Settings menu.

GETTING STARTED
PlayStation®4 system

Starting a game: Before use, carefully read the instructions supplied with the PS4™ computer entertainment system. The documentation contains information on setting up and using your system as well as important safety information. Touch the (power) button of the PS4™ system to turn the system on. The power indicator blinks in blue, and then lights up in white. Insert the Thief disc with the label facing up into the disc slot. The game appears in the content area of the home screen. Select the software title in the PS4™ system’s home screen, and then press the S button. Refer to this manual for information on using the software.

Quitting a game: Press and hold the p button, and then select [Close Application] on the screen that is displayed.

Returning to the home screen from a game: To return to the home screen without quitting a game, press the p button. To resume playing the game, select it from the content area.

Removing a disc: Touch the (eject) button after quitting the game.

Trophies: Earn, compare and share trophies that you earn by making specific in-game accomplishments. Trophies access requires a Sony Entertainment Network account.

Default controls:
Xxxxxx xxxxxx: left stick
Xxxxxx xxxxxx: R button
Xxxxxx xxxxxx: E button
Xxxxxx xxxxxx: W button
Xxxxxx xxxxxx: K button
Xxxxxx xxxxxx: right stick
Xxxxxx xxxxxx: N button
Xxxxxx xxxxxx (Xxxxxx xxxxxxx): H button
Xxxxxx xxxxxx (Xxxxxx xxxxxxx): J button
Xxxxxx xxxxxx (Xxxxxx xxxxxxx): Q button
Xxxxxx xxxxxx (Xxxxxx xxxxxxx): Click touchpad

Chiptuning Intellectual Property: Digital Culture Between Creative Commons and Moral Economy

Chiptuning Intellectual Property: Digital Culture Between Creative Commons and Moral Economy
Martin J. Zeilinger, York University, Canada
[email protected]

Abstract
This essay considers how chipmusic, a fairly recent form of alternative electronic music, deals with the impact of contemporary intellectual property regimes on creative practices. I survey chipmusicians’ reusing of technology and content invoking the era of 8-bit video games, and highlight points of contention between critical perspectives embodied in this art form and intellectual property policy. Exploring current chipmusic dissemination strategies, I contrast the art form’s links to appropriation-based creative techniques and the ‘demoscene’ amateur hacking culture of the 1980s with the chiptune community’s currently prevailing reliance on Creative Commons licenses for regulating access. Questioning whether consideration of this alternative licensing scheme can adequately describe shared cultural norms and values that motivate chiptune practices, I conclude by offering the concept of a moral economy of appropriation-based creative techniques as a new framework for understanding digital creative practices that resist conventional intellectual property policy both in form and in content.

Keywords: Chipmusic, Creative Commons, Moral Economy, Intellectual Property, Demoscene

Introduction
The chipmusic community, like many other born-digital creative communities, has a rich tradition of embracing and encouraging open access, collaboration, and sharing. It does not like to operate according to the logic of informational capital and the restrictive enclosure movements this logic engenders. The creation of chipmusic, a form of electronic music based on the repurposing of outdated sound chip technology found in video gaming devices and old home computers, centrally involves the reworking of proprietary cultural materials.

Videogame Music: Chiptunes Byte Back?

Videogame Music: chiptunes byte back?

Grethe Mitchell, University of East London and Institute of Education, University of East London, Docklands Campus, 4-6 University Way, London E16 2RD, [email protected]
Andrew Clarke, Unaffiliated Researcher, 78 West Kensington Court, Edith Villas, London W14 9AB, [email protected]

ABSTRACT
This paper will explore the sonic subcultures of videogame art and videogame-related fan art. It will look at the work of videogame musicians – not those producing the music for commercial games – but artists and hobbyists who produce music by hacking and reprogramming videogame hardware, or by sampling in-game sound effects and music for use in their own compositions. It will discuss the motivations and methodologies behind some of this work. It will explore the tools that are used and the communities that have grown up around these tools. It will also examine differences between the videogame music community and those which exist around other videogame-related practices such as modding or machinima.

Musicians and sonic artists who use videogames as their medium or raw material have, however, received comparatively little interest. This mirrors the situation in art as a whole where sonic artists are similarly neglected and the emphasis is likewise on the visual art/artists.

It was curious to us that most (if not all) of the writing about videogame art had ignored videogame music, especially given the overlap between the two communities of artists and the parallels between them. For example, two of the major videogame artists – Tobias Bernstrup and Cory Archangel – have both produced music in addition to their gallery-oriented work, but this area of their activity has

Legal-Process Guidelines for Law Enforcement

Legal Process Guidelines
Government & Law Enforcement within the United States

These guidelines are provided for use by government and law enforcement agencies within the United States when seeking information from Apple Inc. (“Apple”) about customers of Apple’s devices, products and services. Apple will update these Guidelines as necessary. All other requests for information regarding Apple customers, including customer questions about information disclosure, should be directed to https://www.apple.com/privacy/contact/. These Guidelines do not apply to requests made by government and law enforcement agencies outside the United States to Apple’s relevant local entities.

For government and law enforcement information requests, Apple complies with the laws pertaining to global entities that control our data and we provide details as legally required. For all requests from government and law enforcement agencies within the United States for content, with the exception of emergency circumstances (defined in the Electronic Communications Privacy Act 1986, as amended), Apple will only provide content in response to a search warrant issued upon a showing of probable cause, or customer consent.

All requests from government and law enforcement agencies outside of the United States for content, with the exception of emergency circumstances (defined below in Emergency Requests), must comply with applicable laws, including the United States Electronic Communications Privacy Act (ECPA). A request under a Mutual Legal Assistance Treaty or the Clarifying Lawful Overseas Use of Data Act (“CLOUD Act”) is in compliance with ECPA. Apple will provide customer content, as it exists in the customer’s account, only in response to such legally valid process.

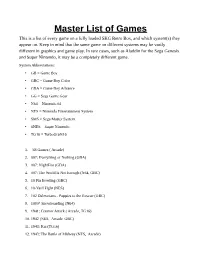

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games

This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game.

System Abbreviations:
• GB = Game Boy
• GBC = Game Boy Color
• GBA = Game Boy Advance
• GG = Sega Game Gear
• N64 = Nintendo 64
• NES = Nintendo Entertainment System
• SMS = Sega Master System
• SNES = Super Nintendo
• TG16 = TurboGrafx16

1. '88 Games (Arcade)
2. 007: Everything or Nothing (GBA)
3. 007: NightFire (GBA)
4. 007: The World Is Not Enough (N64, GBC)
5. 10 Pin Bowling (GBC)
6. 10-Yard Fight (NES)
7. 102 Dalmatians - Puppies to the Rescue (GBC)
8. 1080° Snowboarding (N64)
9. 1941: Counter Attack (Arcade, TG16)
10. 1942 (NES, Arcade, GBC)
11. 1943: Kai (TG16)
12. 1943: The Battle of Midway (NES, Arcade)
13. 1944: The Loop Master (Arcade)
14. 1999: Hore, Mitakotoka! Seikimatsu (NES)
15. 19XX: The War Against Destiny (Arcade)
16. 2 on 2 Open Ice Challenge (Arcade)
17. 2010: The Graphic Action Game (Colecovision)
18. 2020 Super Baseball (Arcade, SNES)
19. 21-Emon (TG16)
20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB)
21. 3 Count Bout (Arcade)
22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD)
23. 3-D Tic-Tac-Toe (Atari 2600)
24. 3-D Ultra Pinball: Thrillride (GBC)
25. 3-D WorldRunner (NES)
26. 3D Asteroids (Atari 7800)

Well Known TCP and UDP Ports Used by Apple Software Products

Well known TCP and UDP ports used by Apple software products

Symptoms
Learn more about TCP and UDP ports used by Apple products, such as OS X, OS X Server, Apple Remote Desktop, and iCloud. Many of these are referred to as "well known" industry standard ports.

Resolution
About this table
The Service or Protocol Name column lists services registered with the Internet Assigned Numbers Authority (http://www.iana.org/), except where noted as "unregistered use." The names of Apple products that use these services or protocols appear in the Used By/Additional Information column. The RFC column lists the number of the Request For Comment document that defines the particular service or protocol, which may be used for reference. RFC documents are maintained by RFC Editor (http://www.rfc-editor.org/). If multiple RFCs define a protocol, there may only be one listed here.

This article is updated periodically and contains information that is available at time of publication. This document is intended as a quick reference and should not be regarded as comprehensive. Apple products listed in the table are the most commonly used examples, not a comprehensive list. For more information, review the Notes below the table.

Tip: Some services may use two or more ports. It is recommended that once you've found an instance of a product in this list, you search on the name (Command-F) and then repeat (Command-G) to locate all occurrences of the product. For example, VPN service may use up to four different ports: 500, 1701, 1723, and 4500.

The Cross Over Talk

Programming Composers and Composing Programmers
Victoria Dorn – Sony Interactive Entertainment

About Me
• Berklee College of Music (2013) – Sound Design/Composition
• Oregon State University (2018) – Computer Science
• Audio Engineering Intern -> Audio Engineer -> Software Engineer
• Associate Software Engineer in Research and Development at PlayStation
• 3D Audio for PS4 (PlayStation VR, Platinum Wireless Headset)
• Testing, general research, recording, and developer support

Agenda
• Programming tips/tricks for the audio person
• Audio and sound tips/tricks for the programming person
• Creating a dialog and establishing vocabulary
• Raise the level of common understanding between sound people and programmers
• Q&A

Media Files Used in This Presentation
• Can be found here
• https://drive.google.com/drive/folders/1FdHR4e3R4p59t7ZxAU7pyMkCdaxPqbKl?usp=sharing

Programming Tips for the Audio Folks
"Binary Code" by Cncplayer is licensed under CC BY-SA 3.0

Music/Audio Programming
DAWs = Programming Language(s)
Musical Motives = Programming Logic
Instruments = APIs or Libraries

Where to Start??
• Learning the Language
• Pseudocode
• Scripting

Learning the Language
• Programming Fundamentals
• Variables (a value with a name)
    soundVolume = 10
• Loops (works just like looping a sound actually)
    for (loopCount = 0; while loopCount < 10; increase loopCount by 1){
        play audio file one time
    }
• If/else logic (if this is happening do this, else do something different)
    if (the sky is blue){
        play bird sounds
    } else{
        play rain sounds
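The three fundamentals named on the slide (a variable, a loop, and if/else logic) can be sketched as runnable Python. This is a minimal illustration rather than code from the talk: the helper names `play_audio_file`, `loop_sound`, and `choose_ambience`, the `sky_is_blue` flag, and the file name `footstep.wav` are all hypothetical, and "playing" a sound is simulated by recording a string instead of calling any audio library.

```python
# Hypothetical sketch of the slide's pseudocode.
# No real audio API is used; "playing" a sound just records a string.

sound_volume = 10  # a variable: a value with a name


def play_audio_file(name, volume, log):
    """Pretend to play a sound by recording what would have been played."""
    log.append(f"{name} at volume {volume}")


def loop_sound(name, times, volume):
    """Loop: play the same file a fixed number of times."""
    log = []
    for _ in range(times):
        play_audio_file(name, volume, log)
    return log


def choose_ambience(sky_is_blue):
    """If/else: pick one sound or the other depending on a condition."""
    if sky_is_blue:
        return "bird sounds"
    else:
        return "rain sounds"


# "footstep.wav" is an invented file name used only for illustration.
played = loop_sound("footstep.wav", 10, sound_volume)
ambience = choose_ambience(sky_is_blue=False)
```

Here `played` holds one entry per loop pass, which mirrors how a looped sample repeats a fixed number of times, and `ambience` takes whichever branch the condition selects.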

Technical Essays for Electronic Music Peter Elsea Fall 2002 Introduction

Technical Essays For Electronic Music
Peter Elsea
Fall 2002

Introduction
The Propagation Of Sound
Acoustics For Music
The Numbers (and Initials) of Acoustics
Hearing And Perception
Taking The Waveform Apart
Some Basic Electronics
Decibels And Dynamic Range
Analog Sound Processors
The Analog Synthesizer
Sampled Sound Processors
An Overview of Computer Music
The Mathematics Of Electronic Music

NAIT Unveils New Diploma Program in Nanotechnology – Story, Page 4

THE NUGGET
YOUR STUDENT NEWSPAPER
EDMONTON, ALBERTA, CANADA
Thursday, March 25, 2010
Volume 47, Issue 23

U-PASS – YEA OR NAY?

Please recycle this newspaper when you are finished with it.

NANO IS NOW!
NAIT unveils new diploma program in nanotechnology – story, page 4

THE ADJEY TOUCH
NAIT Culinary students have a little fun while they learn during a recent visit to campus by Chef David Adjey, right. Chef Adjey’s time here was made possible by the institute’s Chef-in-Residence program. Story, page 2. (NAIT photo)

NEWS & FEATURES
Adjey adds his flavour
By LINDA HOANG, Assistant Issues Editor

Last week, NAIT Culinary Arts students received a recipe for success as a celebrity chef stopped into the school for a week of guest teaching. Recognized from Iron Chef and Restaurant Makeover, Food Network celebrity chef David Adjey spent five days teaching Culinary Arts students, as part of NAIT’s Hokanson Chef-in-Residence program.

Adjey was involved in various lecture sessions, discussing “Life as a Celebrity Chef” and how “Great Chefs are Great Communicators,” as well as teaching students in cooking labs ranging from desserts to soup and vegetable labs, wine and food preparation and meat-cutting. Students also prepared a lunch service for 120 special guests using Adjey’s own recipes, cooking under his guidance.

Adjey said the chance for students to learn from a celebrity chef through the Chef-in-Residence program is a great experience for up-and-coming chefs.

The Role of Audio for Immersion in Computer Games

CAPTIVATING SOUND
THE ROLE OF AUDIO FOR IMMERSION IN COMPUTER GAMES
by Sander Huiberts

Thesis submitted in fulfilment of the requirements for the degree of PhD at the Utrecht School of the Arts (HKU), Utrecht, The Netherlands, and the University of Portsmouth, Portsmouth, United Kingdom
November 2010

© 2002-2010 S.C. Huiberts
Supervisor: Jan IJzermans
Director of Studies: Tony Kalus
Examiners: Dick Rijken, Dan Pinchbeck

Whilst registered as a candidate for the above degree, I have not been registered for any other research award. The results and conclusions embodied in this thesis are the work of the named candidate and have not been submitted for any other academic award.

Contents
Abstract
Preface
1. Introduction
1.1 Motivation and background
1.2 Definition of research area and methodology
Approach
Survey methods
2. Game audio: the IEZA model
2.1 Understanding the structure