CONTROL STRUCTURES FOR PROGRAMMING LANGUAGES, by David A. Fisher, Computer Science Department, Carnegie-Mellon University

Total Page:16


Recommended publications


At—At, Batch—Execute Commands at a Later Time

at, batch: execute commands at a later time
    at [-csm] [-f script] [-qqueue] time [date] [+ increment]
    at -l [job...]
    at -r job...
    batch
at and batch read commands from standard input to be executed at a later time. at allows you to specify when the commands should be executed, while jobs queued with batch will execute when system load level permits. Executes commands read from stdin or a file at some later time. Unless redirected, the output is mailed to the user.
Example A.1
    1 at 6:30am Dec 12 < program
    2 at noon tomorrow < program
    3 at 1945 August 9 < program
    4 at now + 3 hours < program
    5 at 8:30am Jan 4 < program
    6 at -r 83883555320.a
EXPLANATION
    1. At 6:30 in the morning on December 12th, start the job.
    2. At noon tomorrow start the job.
    3. At 7:45 in the evening on August 9th, start the job.
    4. In three hours start the job.
    5. At 8:30 in the morning of January 4th, start the job.
    6. Removes previously scheduled job 83883555320.a.
awk: pattern scanning and processing language
    awk [-fprogram-file] [-Fc] [prog] [parameters] [filename...]
awk scans each input filename for lines that match any of a set of patterns specified in prog.
Example A.2
    1 awk '{print $1, $2}' file
    2 awk '/John/{print $3, $4}' file
    3 awk -F: '{print $3}' /etc/passwd
    4 date | awk '{print $6}'
EXPLANATION
    1. Prints the first two fields of file where fields are separated by whitespace.
    2. Prints fields 3 and 4 if the pattern John is found.
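The awk invocations in Example A.2 can be tried on a small input. The following is a minimal sketch, not part of the excerpt: the sample records and the variable names are assumptions made up for illustration, and a temporary file stands in for `file`.

```shell
#!/bin/sh
# Hypothetical sample data; the two records below are invented for this sketch.
data=$(mktemp)
printf '%s\n' 'John Smith 42 Boston' 'Mary Jones 37 Denver' > "$data"

# Print the first two whitespace-separated fields of every line.
first_two=$(awk '{print $1, $2}' "$data")

# Print fields 3 and 4 only for lines that match the pattern John.
john_fields=$(awk '/John/{print $3, $4}' "$data")

# Use ':' as the field separator, as with /etc/passwd-style records.
third_colon=$(printf 'root:x:0:0\n' | awk -F: '{print $3}')

echo "$first_two"
echo "$john_fields"
echo "$third_colon"
rm -f "$data"
```

Only the first record matches `/John/`, so the second command prints a single line, while the first command prints one line per record.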

Technical Data Sheet (TDS) EDEN PG MV® Pigment Ink for Textile

Technical Data Sheet (TDS) EDEN PG MV® Pigment Ink for Textile
Product Description: EDEN PG MV water based pigment ink is ideal for high-speed direct digital printing on all standard fabrics available on the market. The ink prints on all types of fabrics with equal quality and coverage without color shifts or patches. EDEN PG MV Pigment Ink may be used on cotton, cotton blends, polyester, polyamide (nylon) and treated & untreated fabrics. EDEN PG MV is ideal for a wide range of applications including sportswear, footwear, fashion, home décor and home textile and is designed for indoor and outdoor applications. This ink is manufactured using cutting edge technology and high-performance pigments, for optimal ink fluidity and printability through piezo printheads without bleeding or migration.
Applications: • Home textile • Bags • Indoor furnishing • Décor • Sport apparel • Fashion & apparel • Footwear
Compatible Print Head Technology: Ricoh Gen 4 & Gen 5 industrial print heads based printers.
Pre-treatment: The use of Bordeaux EDEN PG™ OS® (pretreatment liquid) is recommended in order to achieve higher chemical attributes (see table below for comparison). The pretreatment process for fabrics is done offline using a standard textile padder.
Product Line: Cyan, Magenta, Yellow, Black, Red Magenta, Cleaning Liquid
Print procedure: The printing process can be done either inline or in individual segments. Inline printing allows the printed fabric to be fed through a standard textile dryer directly. Alternatively, if the printing stage is separated from the drying stage, drying is required before rolling the fabric.
Material Compatibility: • Cotton • Viscose • Polyester • Lycra • Silk • Polyamide • Leather • Synthetic leather
Drying Working Parameters: Recommended drying time: 3 minutes. Recommended temperature: 150°C (302°F).
Post-treatment: No chemical post treatment is needed.

Relational Constraint Driven Test Case Synthesis for Web Applications

Relational Constraint Driven Test Case Synthesis for Web Applications. Xiang Fu, Hofstra University, Hempstead, NY 11549.
This paper proposes a relational constraint driven technique that synthesizes test cases automatically for web applications. Using a static analysis, servlets can be modeled as relational transducers, which manipulate backend databases. We present a synthesis algorithm that generates a sequence of HTTP requests for simulating a user session. The algorithm relies on backward symbolic image computation for reaching a certain database state, given a code coverage objective. With a slight adaptation, the technique can be used for discovering workflow attacks on web applications.
1 Introduction
Modern web applications usually rely on backend database systems for storing important system information or supporting business decisions. The complexity of database queries, however, often complicates the task of thoroughly testing a web application. To manually design test cases involves labor intensive initialization of database systems, even with the help of unit testing tools such as SQLUnit [25] and DBUnit [8]. It is desirable to automatically synthesize test cases for web applications. There has been a strong interest recently in testing database driven applications and database management systems (see e.g., [12, 4, 20]). Many of them are query aware, i.e., given a SQL query, an initial database (DB) instance is generated to make that query satisfiable. The DB instance is fed to the target web application as input, so that a certain code coverage goal is achieved. The problem we are trying to tackle is one step further: it is a synthesis problem. Given a certain database state (or a relational constraint), a call sequence of web servlets is synthesized to reach the given DB state.

LINUX INTERNALS LABORATORY

LINUX INTERNALS LABORATORY
VI Semester: IT
Course Code: AIT105. Category: Core. Hours / Week: L -, T -, P 3. Credits (C): 2. Maximum Marks: CIA 30, SEE 70, Total 100.
Contact Classes: Nil. Tutorial Classes: Nil. Practical Classes: 36. Total Classes: 36.
OBJECTIVES: The course should enable the students to:
I. Become familiar with the Linux command-line environment.
II. Understand system administration processes by providing a hands-on experience.
III. Understand process management and inter-process communication techniques.
LIST OF EXPERIMENTS
Week-1 BASIC COMMANDS I: Study and practice various commands like man, passwd, tty, script, clear, date, cal, cp, mv, ln, rm, unlink, mkdir, rmdir, du, df, mount, umount, find, umask, ulimit, ps, who, w.
Week-2 BASIC COMMANDS II: Study and practice various commands like cat, tail, head, sort, nl, uniq, grep, egrep, fgrep, cut, paste, join, tee, pg, comm, cmp, diff, tr, awk, tar, cpio.
Week-3 SHELL PROGRAMMING I
a) Write a shell program to print all .txt files and .c files.
b) Write a shell program to move a set of files to a specified directory.
c) Write a shell program to display all the users who are currently logged in after a specified time.
d) Write a shell program to wish the user based on the login time.
Week-4 SHELL PROGRAMMING II
a) Write a shell program to pass a message to a group of members, an individual member and all.
b) Write a shell program to count the number of words in a file.
c) Write a shell program to calculate the factorial of a given number.

Unix Programming

P.G DEPARTMENT OF COMPUTER APPLICATIONS 18PMC532 UNIX PROGRAMMING K1 QUESTIONS WITH ANSWERS UNIT-1
1) Define Unix. Unix was originally written in assembler, but it was rewritten in 1973 in C, which was principally authored by Dennis Ritchie (C is based on the B language developed by Ken Thompson).
2) Discuss communication. Excellent communication with users and the network. Users can easily exchange mail, data and programs in the network.
3) Discuss security. Login names; passwords; access rights; file level (R W X); file encryption.
4) Define portability. UNIX runs on any type of hardware and configuration. Credit for this flexibility goes to Dennis Ritchie (C programs). Ported from the IBM PC to the Cray 2.
5) Define open system. Everything in Unix is treated as a file (source program, floppy disk, printer, terminal, etc.). Modification of the system is easy because the source code is always available.
6) The file system breaks the disk into four segments: the boot block, the super block, the inode table and the data blocks.
7) Command used to find out the block size on your file system: $cmchk BSIZE=1024
8) Define boot block. Generally the first block, numbered 0, is called the boot block. It consists of a hardware-specific boot program that loads the file known as the kernel of the system.
9) Define super block. It describes the state of the file system, i.e., how large it is and the maximum number of files it can accommodate. This is the second block, numbered 1, and it is used to control the allocation of disk blocks.
10) Define inode table. The third segment, which includes blocks 2 to n of the file system, is called the inode table.

Products for Totally Integrated Automation

© Siemens AG 2015. Siemens ST 70 · 2015
Introduction
Contents: • LOGO! logic module • SIMATIC basic controller • SIMATIC S7-1200 • SIMATIC advanced controller • SIMATIC S7-1500 • SIMATIC S7-300 • SIMATIC S7-400 • SIMATIC distributed controllers • SIMATIC software controllers • SIMATIC WinAC RTX (F) • SIMATIC programming devices • SIMATIC Field PG M4 • SIMATIC Industrial PCs • SIMATIC software • SIMATIC ET 200 • SIMATIC HMI • SIMATIC PCS 7 • SIMATIC NET
Brochures: For brochures serving as selection guides for SIMATIC products refer to: www.siemens.com/simatic/printmaterial
LOGO! logic module
Overview
LOGO!: Easy-to-use technology with a future. The compact, easy-to-use and low-cost solution for simple control tasks. Universally applicable in industry, and in functional or residential buildings. Replaces wiring by linking functions. Operates in a similar way to a programmable logic controller. With integrated operating and display unit for direct input on the device and display of message texts/variables, or as a version without display or keys.
Simple operation: • Interconnection of functions by mouse click on the PC or at the press of a button on the device
Minimum time requirements: • Wiring solely of the inputs and outputs • Parallel creation of circuit diagram and assembly of control cabinet
Reduced costs: • Many integral functions of switching technology
High level of flexibility: • Simple modification of functionality at the press of a button • Versions for different operating voltages • Modular design, therefore expandable at any time
For further information, refer to: www.siemens.com/logo
LOGO! 8 versions: • Ethernet interface for programming and communication with SIMATIC Controllers, SIMATIC Panels and PCs • Networking of max.

Unix Login Profile

Unix login Profile A general discussion of shell processes, shell scripts, shell functions and aliases is a natural lead in for examining the characteristics of the login profile. The term “shell” is used to describe the command interpreter that a user runs to interact with the Unix operating system. When you login, a shell process is initiated for you, called your login shell. There are a number of "standard" command interpreters available on most Unix systems. On the UNF system, the default command interpreter is the Korn shell which is determined by the user’s entry in the /etc/passwd file. From within the login environment, the user can run Unix commands, which are just predefined processes, most of which are within the system directory named /usr/bin. A shell script is just a file of commands, normally executed at startup for a shell process that was spawned to run the script. The contents of this file can just be ordinary commands as would be entered at the command prompt, but all standard command interpreters also support a scripting language to provide control flow and other capabilities analogous to those of high level languages. A shell function is like a shell script in its use of commands and the scripting language, but it is maintained in the active shell, rather than in a file. The typically used definition syntax is: <function-name> () { <commands> } It is important to remember that a shell function only applies within the shell in which it is defined (not its children). Functions are usually defined within a shell script, but may also be entered directly at the command prompt. -
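The scoping rule for shell functions can be checked directly. The following is a minimal sketch in POSIX sh rather than anything taken from the excerpt: the function name `greet_demo` and the variable `child_result` are hypothetical, and the sketch assumes no command called `greet_demo` exists on the PATH.

```shell
#!/bin/sh
# Define a function in the current shell, using the syntax described above.
# greet_demo is a made-up name for illustration.
greet_demo () {
    echo "Hello, $1"
}

# The function is available in the shell that defined it.
greet_demo world

# A separately started child shell does not inherit the definition,
# so the command lookup fails there (assuming no greet_demo on PATH).
if sh -c 'greet_demo world' 2>/dev/null; then
    child_result="visible in child"
else
    child_result="not visible in child"
fi
echo "$child_result"
```

Placing the definition in a startup file that each new shell reads is one common way to make a function available beyond the shell where it was first typed.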

Penndot Fact Sheet

FACT SHEET: Instructions for Completing Form MV-1, “Application for Certificate of Title” (FOR PENNDOT AUTHORIZED AGENTS USE ONLY)
This form is used to obtain a Pennsylvania Certificate of Title for vehicles not currently titled in Pennsylvania, or which were repossessed (1. Judgment and Execution; 2. Non-Judicial Sale or Repossession and Retention (U.C.C.); 3. Court Order), and vehicles which are reconstructed, specially constructed or recovered thefts.
DISTRIBUTION OF FORM COPIES
1. The white copy is always submitted to PennDOT.
2. The yellow copy is always retained by the dealer or full agent and is to be retained for at least three years. If a temporary registration card or registration plate is issued, the yellow copy becomes the dealer/agent’s record of such issuance.
3. The pink copy is always retained by the individual or business in whose name the certificate of title is to be issued.
REPOSSESSIONS AND COURT ORDERS
In the case of repossessions, Form MV-217, "Application for Certificate of Title After Default By Owner," must be completed by the repossessor and attached to the completed Form MV-1. Only the following repossessions may be done using the Form MV-1 and Form MV-217: 1) Judgment and Execution; 2) Non-Judicial Sale or Repossession and Retention (U.C.C.); and 3) Court Order. The lienholder in these cases must follow the instructions listed on the Form MV-217 and use Form MV-1. Upon receipt of the certificate of title in the lienholder’s name, the lienholder may then assign ownership to a dealer or individual on the reverse side of the certificate of title and use Form MV-4ST.

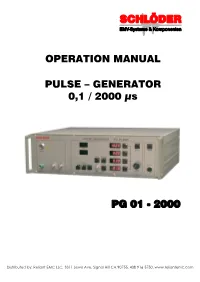

Schlöder PG 01-2000 Pulse Generator Operations Manual

OPERATION MANUAL PULSE-GENERATOR 0,1 / 2000 µs PG 01 - 2000
Distributed by: Reliant EMC LLC, 3311 Lewis Ave, Signal Hill CA 90755, 4089165750, www.reliantemc.com
Warning: This unit generates high voltages and currents and is inherently dangerous. The local, national and all applicable safety regulations must be obeyed for operation of this unit. The device must only be used by experienced electric technicians, see VDE 0140. People using cardiac pacemakers should be excluded from the test area.
Important: Please read this manual carefully before putting the PG 01-2000 into operation and observe the described safety instructions.
Contents
1. INTRODUCTION
2. PULSE DEFINITION
3. DESCRIPTION OF FUNCTIONS
3.1. GENERAL
3.2. SECURITY INSTRUCTIONS

SIMATIC Field PG P4 Manual

SIMATIC Field PG P4 Manual. Edition 08/2004, A5E00180080-04
Contents: Preface, Contents • 1 Important Notes • 2 Getting Familiarized with the SIMATIC Field PG P4 • 3 Configuring and Operating the Field PG P4 • 4 SIMATIC Field PG P4 Expansions • 5 Configuring the SIMATIC Field PG P4 • 6 Error Diagnostics • 7 Hardware Information • 8 Reinstallation of the Software • Appendices: A ESD Guidelines, B Technical Specifications • Glossary, Index
Safety Guidelines
This manual contains notices intended to ensure personal safety, as well as to protect the products and connected equipment against damage. These notices are highlighted by the symbols shown below and graded according to severity by the following texts:
Danger! Indicates that death, severe personal injury or substantial property damage will result if proper precautions are not taken.
Warning! Indicates that death, severe personal injury or substantial property damage can result if proper precautions are not taken.
Caution! Indicates that minor personal injury can result if proper precautions are not taken.
Caution: Indicates that property damage can result if proper precautions are not taken.
Notice: Draws your attention to particularly important information on the product, handling the product, or to a particular part of the documentation.
Qualified Personnel
Repair, maintenance and servicing of the device are only to be carried out by qualified personnel. Qualified persons are defined as persons who are authorized to commission, to ground and to tag circuits, equipment, and systems in accordance with established safety practices and standards.
Correct Usage
Note the following: Warning! This device and its components may only be used for the applications described in the catalog or the technical description, and only in connection with devices or components from other manufacturers which have been approved or recommended by Siemens.

Programmer's Guide, Release 13.1 Copyright © 2009, Oracle And/Or Its Affiliates

Oracle® Retail Extract, Transform, and Load Programmer’s Guide Release 13.1 June 2009 Oracle Retail Extract, Transform, and Load Programmer's Guide, Release 13.1 Copyright © 2009, Oracle and/or its affiliates. All rights reserved. Primary Author: Susan McKibbon This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this software or related documentation is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are "commercial computer software" or "commercial technical data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007). -

The Effects of Learning a Computer Programming Language on the Logical Reasoning of School Children

DOCUMENT RESUME
ED 205 206. IR 009 510.
AUTHOR: Seidman, Robert H.
TITLE: The Effects of Learning a Computer Programming Language on the Logical Reasoning of School Children.
PUB DATE: Apr 81
NOTE: 63p.; Paper presented at the Annual Meeting of the American Educational Research Association (Los Angeles, CA, April 1981).
EDRS PRICE: MF01/PC03 Plus Postage.
DESCRIPTORS: Achievement [word illegible]; Analysis of Covariance; Analysis of Variance; Cognitive Processes; Computer Science Education; Elementary Education; Grade 5; Learning Processes; *Logical Thinking; *Microcomputers; *Programing Languages
IDENTIFIERS: *LOGO System
ABSTRACT: The research reported in this paper explores the syntactical and semantic link between computer programming statements and logical principles, and addresses the effects of learning a programming language on logical reasoning ability. Fifth grade students in a public school in Syracuse, New York, were randomly selected as subjects, and then randomly placed in either the experimental or the control group. The experimental group was taught LOGO, a programming language developed for use with young children, while the control group received no special instruction. At the end of the period, both groups were administered a series of tests measuring their conditional reasoning abilities. Tests were scored in two distinct ways, and the two groups were statistically compared within both scoring schemes by split-plot, two-factor repeated measures and one-way analysis of variance. It was found that students in the experimental group who interpreted conditional logic statements biconditionally performed significantly better on the inversion fallacy principle than the control group; no significant difference was found when test items were scored in the traditional manner. Comparison of pre- and post-experiment achievement test scores showed a significant improvement in reading only for the control group.