Activation Functions: Comparison of Trends in Practice and Research for Deep Learning

Total pages: 16


Recommended publications

Training Autoencoders by Alternating Minimization

Under review as a conference paper at ICLR 2018. TRAINING AUTOENCODERS BY ALTERNATING MINIMIZATION. Anonymous authors. Paper under double-blind review. ABSTRACT: We present DANTE, a novel method for training neural networks, in particular autoencoders, using the alternating minimization principle. DANTE provides a distinct perspective in lieu of traditional gradient-based backpropagation techniques commonly used to train deep networks. It utilizes an adaptation of quasi-convex optimization techniques to cast autoencoder training as a bi-quasi-convex optimization problem. We show that for autoencoder configurations with both differentiable (e.g. sigmoid) and non-differentiable (e.g. ReLU) activation functions, we can perform the alternations very effectively. DANTE effortlessly extends to networks with multiple hidden layers and varying network configurations. In experiments on standard datasets, autoencoders trained using the proposed method were found to be very promising and competitive to traditional backpropagation techniques, both in terms of quality of solution, as well as training speed. 1 INTRODUCTION: For much of the recent march of deep learning, gradient-based backpropagation methods, e.g. Stochastic Gradient Descent (SGD) and its variants, have been the mainstay of practitioners. The use of these methods, especially on vast amounts of data, has led to unprecedented progress in several areas of artificial intelligence. On one hand, the intense focus on these techniques has led to an intimate understanding of hardware requirements and code optimizations needed to execute these routines on large datasets in a scalable manner. Today, myriad off-the-shelf and highly optimized packages exist that can churn reasonably large datasets on GPU architectures with relatively mild human involvement and little bootstrap effort.

Lecture 4 Feedforward Neural Networks, Backpropagation

CS7015 (Deep Learning): Lecture 4. Feedforward Neural Networks, Backpropagation. Mitesh M. Khapra, Department of Computer Science and Engineering, Indian Institute of Technology Madras. References/Acknowledgments: See the excellent videos by Hugo Larochelle on Backpropagation. Module 4.1: Feedforward Neural Networks (a.k.a. multilayered network of neurons). (Figure: network diagram with inputs $x_1, x_2, \dots, x_n$, pre-activations $a_1, a_2, a_3$, activations $h_1, h_2$, weights and biases $W_i, b_i$, and output $h_L = \hat{y} = f(x)$.) The input to the network is an n-dimensional vector. The network contains $L - 1$ hidden layers (2, in this case) having n neurons each. Finally, there is one output layer containing k neurons (say, corresponding to k classes). Each neuron in the hidden layer and output layer can be split into two parts: pre-activation and activation ($a_i$ and $h_i$ are vectors). The input layer can be called the 0-th layer and the output layer can be called the L-th layer. $W_i \in \mathbb{R}^{n \times n}$ and $b_i \in \mathbb{R}^{n}$ are the weight and bias between layers $i - 1$ and $i$ ($0 < i < L$). $W_L \in \mathbb{R}^{n \times k}$ and $b_L \in \mathbb{R}^{k}$ are the weight and bias between the last hidden layer and the output layer ($L = 3$ in this case). The pre-activation at layer i is given by $a_i(x) = b_i + W_i h_{i-1}(x)$. The activation at layer i is given by $h_i(x) = g(a_i(x))$, where g is called the activation function (for example, logistic, tanh, linear, etc.). The activation at the output layer is given by $f(x) = h_L(x) = O(a_L(x))$, where O is the output activation function (for example, softmax, linear, etc.). To simplify notation we will refer to $a_i(x)$ as $a_i$ and $h_i(x)$ as $h_i$.
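The pre-activation and activation steps in this excerpt can be sketched in a few lines of plain Python. This is only an illustrative sketch, not code from the lecture: the helper names (`affine`, `logistic`, `softmax`, `forward`), the toy weights, and the choice to store each weight matrix as a list of rows (one row per neuron of the receiving layer) are all assumptions made here. The logistic hidden activation and softmax output are two of the examples the excerpt lists for g and O.

```python
import math

def logistic(z):
    """Logistic activation g(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, h):
    """Pre-activation a = b + W h; W is a list of rows (assumed layout)."""
    return [b_j + sum(w * x for w, x in zip(row, h)) for row, b_j in zip(W, b)]

def softmax(a):
    """Output activation O; the max is subtracted for numerical stability."""
    m = max(a)
    exps = [math.exp(v - m) for v in a]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, hidden_layers, output_layer):
    """Compute f(x) = O(a_L) with h_0 = x and h_i = g(b_i + W_i h_{i-1})."""
    h = x
    for W, b in hidden_layers:
        h = [logistic(v) for v in affine(W, b, h)]
    W_L, b_L = output_layer
    return softmax(affine(W_L, b_L, h))

# Hypothetical toy network: n = 2 inputs, two hidden layers, k = 3 classes.
hidden = [
    ([[0.5, -0.2], [0.1, 0.3]], [0.0, 0.1]),
    ([[0.4, 0.6], [-0.3, 0.2]], [0.05, -0.05]),
]
output = ([[1.0, -1.0], [0.5, 0.5], [-0.2, 0.8]], [0.0, 0.0, 0.0])
y_hat = forward([1.0, 2.0], hidden, output)
```

With a softmax output, `y_hat` has one entry per class and the entries sum to 1, so it can be read as a vector of class probabilities.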

Revisiting the Softmax Bellman Operator: New Benefits and New Perspective

Revisiting the Softmax Bellman Operator: New Benefits and New Perspective. Zhao Song, Ronald E. Parr, Lawrence Carin. Abstract: The impact of softmax on the value function itself in reinforcement learning (RL) is often viewed as problematic because it leads to sub-optimal value (or Q) functions and interferes with the contraction properties of the Bellman operator. Surprisingly, despite these concerns, and independent of its effect on exploration, the softmax Bellman operator when combined with Deep Q-learning, leads to Q-functions with superior policies in practice, even outperforming its double Q-learning counterpart. To better understand how and why this occurs, we revisit theoretical properties of the softmax Bellman operator, and prove that (i) it converges to the standard Bellman operator exponentially fast in the inverse temperature parameter, and (ii) the distance of its Q function from the ... Introduction (excerpt): ... motivates the use of exploratory and potentially sub-optimal actions during learning, and one commonly-used strategy is to add randomness by replacing the max function with the softmax function, as in Boltzmann exploration (Sutton & Barto, 1998). Furthermore, the softmax function is a differentiable approximation to the max function, and hence can facilitate analysis (Reverdy & Leonard, 2016). The beneficial properties of the softmax Bellman operator are in contrast to its potentially negative effect on the accuracy of the resulting value or Q-functions. For example, it has been demonstrated that the softmax Bellman operator is not a contraction, for certain temperature parameters (Littman, 1996, Page 205). Given this, one might expect that the convenient properties of the softmax Bellman operator would come at the expense of the accuracy of the resulting value or Q-functions, or the quality of the resulting policies.
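The idea of replacing the max with a softmax can be illustrated numerically. The excerpt does not spell out the operator, so the sketch below assumes one common form: a softmax-weighted average of the Q-values with inverse temperature `tau`. The function name `softmax_weighted_value` and the toy Q-values are hypothetical.

```python
import math

def softmax_weighted_value(q_values, tau):
    """Average of q_values weighted by softmax(tau * q); assumed operator form."""
    m = max(q_values)
    # Subtracting the max keeps the exponentials from overflowing.
    weights = [math.exp(tau * (q - m)) for q in q_values]
    total = sum(weights)
    return sum(w * q for w, q in zip(weights, q_values)) / total

q = [1.0, 2.0, 3.0]  # hypothetical Q-values for three actions
taus = (0.0, 1.0, 5.0, 20.0)
# Gap between the hard max and the softmax-weighted value at each tau.
gaps = [max(q) - softmax_weighted_value(q, tau) for tau in taus]
```

At `tau = 0` the weights are uniform, so the value is the plain mean of the Q-values. As `tau` grows, the weight concentrates on the largest Q-value and the gap to the hard max shrinks toward zero.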

Improvements on Activation Functions in ANN: an Overview

Management Science and Engineering, Vol. 14, No. 1, 2020, pp. 53-58. ISSN 1913-0341 [Print], ISSN 1913-035X [Online]. DOI:10.3968/11667. www.cscanada.net www.cscanada.org. Improvements on Activation Functions in ANN: An Overview. YANG Lexuan, Hangzhou No.2 High School of Zhejiang Province; Hangzhou, China (corresponding author). Received 9 April 2020; accepted 21 May 2020; published online 26 June 2020. Abstract: Activation functions are an essential part of artificial neural networks. Over the years, research has been done to seek new functions that perform better. There are several mainstream activation functions, such as sigmoid and ReLU, which have been widely used across the decades. In the meantime, many modified versions of these functions have also been proposed by researchers in order to further improve performance. In this paper, limitations of the mainstream activation functions, as well as the main characteristics and relative performances of their modifications, are discussed. An ANN is comprised of computable units called neurons (or nodes). The activation function in a neuron processes the input signals and determines the output. Activation functions are particularly important in the ANN algorithm; a change in activation functions can have a significant impact on network performance. Therefore, in recent years, in-depth research has been done on the improvement of activation functions to solve or alleviate some problems encountered in practice with "classic" activation functions. This paper will give some brief examples and discussions on the modifications and improvements of mainstream activation functions. 1. INTRODUCTION OF ACTIVATION FUNCTIONS

6.5 Applications of Exponential and Logarithmic Functions

6.5 Applications of Exponential and Logarithmic Functions. As we mentioned in Section 6.1, exponential and logarithmic functions are used to model a wide variety of behaviors in the real world. In the examples that follow, note that while the applications are drawn from many different disciplines, the mathematics remains essentially the same. Due to the applied nature of the problems we will examine in this section, the calculator is often used to express our answers as decimal approximations. 6.5.1 Applications of Exponential Functions. Perhaps the most well-known application of exponential functions comes from the financial world. Suppose you have $100 to invest at your local bank and they are offering a whopping 5% annual percentage interest rate. This means that after one year, the bank will pay you 5% of that $100, or $100(0.05) = $5 in interest, so you now have $105. This is in accordance with the formula for simple interest which you have undoubtedly run across at some point before. Equation 6.1. Simple Interest: The amount of interest I accrued at an annual rate r on an investment P (called the principal) after t years is I = Prt. The amount A in the account after t years is given by A = P + I = P + Prt = P(1 + rt). Suppose, however, that six months into the year, you hear of a better deal at a rival bank. Naturally, you withdraw your money and try to invest it at the higher rate there.
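The simple interest formula in Equation 6.1 can be checked against the $100 example from the excerpt with a short sketch. The function names `simple_interest` and `amount_after` are hypothetical and chosen only for this illustration.

```python
def simple_interest(principal, rate, years):
    """Interest I = P r t accrued at annual rate r after t years."""
    return principal * rate * years

def amount_after(principal, rate, years):
    """Account balance A = P (1 + r t) after t years of simple interest."""
    return principal * (1 + rate * years)

# The excerpt's example: $100 invested at 5% for one year.
interest = simple_interest(100, 0.05, 1)
balance = amount_after(100, 0.05, 1)
```

Up to floating-point rounding, `interest` and `balance` reproduce the $5 and $105 from the text, and `balance` equals the principal plus `interest`, matching A = P + I.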

CS 189 Introduction to Machine Learning Spring 2021 Jonathan Shewchuk HW6

CS 189 Introduction to Machine Learning, Spring 2021, Jonathan Shewchuk. HW6. Due: Wednesday, April 21 at 11:59 pm. Deliverables: 1. Submit your predictions for the test sets to Kaggle as early as possible. Include your Kaggle scores in your write-up (see below). The Kaggle competition for this assignment can be found at https://www.kaggle.com/c/spring21-cs189-hw6-cifar10 2. The written portion: • Submit a PDF of your homework, with an appendix listing all your code, to the Gradescope assignment titled “Homework 6 Write-Up”. Please see section 3.3 for an easy way to gather all your code for the submission (you are not required to use it, but we would strongly recommend using it). • In addition, please include, as your solutions to each coding problem, the specific subset of code relevant to that part of the problem. Whenever we say “include code”, that means you can either include a screenshot of your code, or typeset your code in your submission (using markdown or LaTeX). • You may typeset your homework in LaTeX or Word (submit PDF format, not .doc/.docx format) or submit neatly handwritten and scanned solutions. Please start each question on a new page. • If there are graphs, include those graphs in the correct sections. Do not put them in an appendix. We need each solution to be self-contained on pages of its own. • In your write-up, please state with whom you worked on the homework. • In your write-up, please copy the following statement and sign your signature next to it.

Can Temporal-Difference and Q-Learning Learn Representation? a Mean-Field Analysis

Can Temporal-Difference and Q-Learning Learn Representation? A Mean-Field Analysis. Yufeng Zhang (Northwestern University, Evanston, IL 60208), Qi Cai (Northwestern University, Evanston, IL 60208), Zhuoran Yang (Princeton University, Princeton, NJ 08544), Yongxin Chen (Georgia Institute of Technology, Atlanta, GA 30332), Zhaoran Wang (Northwestern University, Evanston, IL 60208). Abstract: Temporal-difference and Q-learning play a key role in deep reinforcement learning, where they are empowered by expressive nonlinear function approximators such as neural networks. At the core of their empirical successes is the learned feature representation, which embeds rich observations, e.g., images and texts, into the latent space that encodes semantic structures. Meanwhile, the evolution of such a feature representation is crucial to the convergence of temporal-difference and Q-learning. In particular, temporal-difference learning converges when the function approximator is linear in a feature representation, which is fixed throughout learning, and possibly diverges otherwise. We aim to answer the following questions: When the function approximator is a neural network, how does the associated feature representation evolve? If it converges, does it converge to the optimal one? We prove that, utilizing an overparameterized two-layer neural network, temporal-difference and Q-learning globally minimize the mean-squared projected Bellman error at a sublinear rate. Moreover, the associated feature representation converges to the optimal one, generalizing the previous analysis of [21] in the neural tangent kernel regime, where the associated feature representation stabilizes at the initial one. The key to our analysis is a mean-field perspective, which connects the evolution of a finite-dimensional parameter to its limiting counterpart over an infinite-dimensional Wasserstein space.

On the Learning Property of Logistic and Softmax Losses for Deep Neural Networks

The Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI-20). On the Learning Property of Logistic and Softmax Losses for Deep Neural Networks. Xiangrui Li, Xin Li, Deng Pan, Dongxiao Zhu. Department of Computer Science, Wayne State University. {xiangruili, xinlee, pan.deng, dzhu}@wayne.edu. Abstract: Deep convolutional neural networks (CNNs) trained with logistic and softmax losses have made significant advancement in visual recognition tasks in computer vision. When training data exhibit class imbalances, the class-wise reweighted version of logistic and softmax losses are often used to boost performance of the unweighted version. In this paper, motivated to explain the reweighting mechanism, we explicate the learning property of those two loss functions by analyzing the necessary condition (e.g., gradient equals to zero) after training CNNs to converge to a local minimum. The analysis immediately provides us explanations for understanding (1) quantitative effects of the class-wise reweighting mechanism: deterministic effectiveness for binary classification using logistic ... Introduction (excerpt): ... (unweighted) loss, resulting in performance degradation for minority classes. To remedy this issue, the class-wise reweighted loss is often used to emphasize the minority classes that can boost the predictive performance without introducing much additional difficulty in model training (Cui et al. 2019; Huang et al. 2016; Mahajan et al. 2018; Wang, Ramanan, and Hebert 2017). A typical choice of weights for each class is the inverse-class frequency. A natural question then to ask is what roles are those class-wise weights playing in CNN training using LGL or SML that lead to performance gain? Intuitively, those weights make tradeoffs on the predictive performance among different classes.

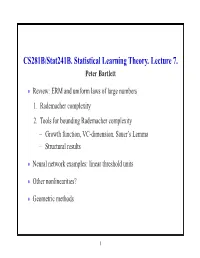

CS281B/Stat241b. Statistical Learning Theory. Lecture 7. Peter Bartlett

CS281B/Stat241B. Statistical Learning Theory. Lecture 7. Peter Bartlett. Outline: • Review: ERM and uniform laws of large numbers • 1. Rademacher complexity • 2. Tools for bounding Rademacher complexity: growth function, VC-dimension, Sauer's Lemma; structural results • Neural network examples: linear threshold units • Other nonlinearities? • Geometric methods. ERM and uniform laws of large numbers. Empirical risk minimization: choose $f_n \in F$ to minimize $\hat{R}(f)$. How does $R(f_n)$ behave? For $f^* = \arg\min_{f \in F} R(f)$, $R(f_n) - R(f^*) = \underbrace{R(f_n) - \hat{R}(f_n)}_{\text{ULLN for } F} + \underbrace{\hat{R}(f_n) - \hat{R}(f^*)}_{\le 0 \text{ for ERM}} + \underbrace{\hat{R}(f^*) - R(f^*)}_{\text{LLN for } f^*} \le \sup_{f \in F} \left( R(f) - \hat{R}(f) \right) + O(1/\sqrt{n})$. Uniform laws and Rademacher complexity. Definition: The Rademacher complexity of $F$ is $\mathbb{E}\|R_n\|_F$, where the empirical process $R_n$ is defined as $R_n(f) = \frac{1}{n} \sum_{i=1}^{n} \epsilon_i f(X_i)$, and the $\epsilon_1, \dots, \epsilon_n$ are Rademacher random variables: i.i.d. uniform on $\{\pm 1\}$. Theorem: For any $F \subset [0, 1]^{\mathcal{X}}$, $\frac{1}{2} \mathbb{E}\|R_n\|_F - O\left(\sqrt{1/n}\right) \le \mathbb{E}\|P - P_n\|_F \le 2\, \mathbb{E}\|R_n\|_F$, and, with probability at least $1 - 2\exp(-2\epsilon^2 n)$, $\mathbb{E}\|P - P_n\|_F - \epsilon \le \|P - P_n\|_F \le \mathbb{E}\|P - P_n\|_F + \epsilon$. Thus, $\|P - P_n\|_F \approx \mathbb{E}\|R_n\|_F$, and $R(f_n) - \inf_{f \in F} R(f) = O\left(\mathbb{E}\|R_n\|_F\right)$. Tools for controlling Rademacher complexity: 1.
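The Rademacher complexity defined in this excerpt can be approximated by simulation for a small function class. The sketch below is an illustration under stated assumptions, not part of the lecture: it fixes the sample (so it estimates the empirical version, conditional on the data points), represents each function by its list of values on that sample, reads the norm over F as a supremum of absolute values, and uses hypothetical names (`rademacher_estimate`, `threshold_class`).

```python
import random

def rademacher_estimate(function_values, num_draws=2000, seed=0):
    """Monte Carlo estimate of E sup_f |(1/n) sum_i eps_i f(X_i)| on a fixed sample.

    function_values holds one list per function f, giving f(X_1), ..., f(X_n).
    """
    rng = random.Random(seed)
    n = len(function_values[0])
    total = 0.0
    for _ in range(num_draws):
        # Rademacher variables: independent and uniform on {-1, +1}.
        eps = [rng.choice((-1, 1)) for _ in range(n)]
        total += max(
            abs(sum(e * v for e, v in zip(eps, values))) / n
            for values in function_values
        )
    return total / num_draws

# A class containing only the zero function, on a sample of size 8.
zero_class = [[0.0] * 8]
# Threshold functions f_t(i) = 1 if i >= t else 0, for t = 0, ..., 8.
threshold_class = [[1.0 if i >= t else 0.0 for i in range(8)] for t in range(9)]

zero_est = rademacher_estimate(zero_class)
threshold_est = rademacher_estimate(threshold_class)
```

The zero class cannot correlate with random signs, so its estimate is exactly zero, while the richer threshold class gives a strictly positive value bounded by 1 because its functions take values in [0, 1].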

Neural Network in Hardware

UNLV Theses, Dissertations, Professional Papers, and Capstones, 12-15-2019. Neural Network in Hardware. Jiong Si. Part of the Electrical and Computer Engineering Commons. Repository Citation: Si, Jiong, "Neural Network in Hardware" (2019). UNLV Theses, Dissertations, Professional Papers, and Capstones. 3845. http://dx.doi.org/10.34917/18608784 This Dissertation is protected by copyright and/or related rights. It has been brought to you by Digital Scholarship@UNLV with permission from the rights-holder(s). You are free to use this Dissertation in any way that is permitted by the copyright and related rights legislation that applies to your use. For other uses you need to obtain permission from the rights-holder(s) directly, unless additional rights are indicated by a Creative Commons license in the record and/or on the work itself. This Dissertation has been accepted for inclusion in UNLV Theses, Dissertations, Professional Papers, and Capstones by an authorized administrator of Digital Scholarship@UNLV. NEURAL NETWORKS IN HARDWARE. By Jiong Si. Bachelor of Engineering – Automation, Chongqing University of Science and Technology, 2008. Master of Engineering – Precision Instrument and Machinery, Hefei University of Technology, 2011. A dissertation submitted in partial fulfillment of the requirements for the Doctor of Philosophy – Electrical Engineering, Department of Electrical and Computer Engineering, Howard R. Hughes College of Engineering, The Graduate College, University of Nevada, Las Vegas, December 2019. Copyright 2019 by Jiong Si. All Rights Reserved. Dissertation Approval: The Graduate College, The University of Nevada, Las Vegas, November 6, 2019. This dissertation prepared by Jiong Si entitled Neural Networks in Hardware is approved in partial fulfillment of the requirements for the degree of Doctor of Philosophy – Electrical Engineering, Department of Electrical and Computer Engineering. Sarah Harris, Ph.D.

Population Dynamics: Variance and the Sigmoid Activation Function

www.elsevier.com/locate/ynimg NeuroImage 42 (2008) 147–157. Technical Note. Population dynamics: Variance and the sigmoid activation function. André C. Marreiros, Jean Daunizeau, Stefan J. Kiebel, and Karl J. Friston. Wellcome Trust Centre for Neuroimaging, University College London, UK. Received 24 January 2008; revised 8 April 2008; accepted 16 April 2008. Available online 29 April 2008. Abstract: This paper demonstrates how the sigmoid activation function of neural-mass models can be understood in terms of the variance or dispersion of neuronal states. We use this relationship to estimate the probability density on hidden neuronal states, using non-invasive electrophysiological (EEG) measures and dynamic causal modelling. The importance of implicit variance in neuronal states for neural-mass models of cortical dynamics is illustrated using both synthetic data and real EEG measurements of sensory evoked responses. © 2008 Elsevier Inc. All rights reserved. Keywords: Neural-mass models; Nonlinear; Modelling. Introduction (excerpt): ... (David et al., 2006a,b; Kiebel et al., 2006; Garrido et al., 2007; Moran et al., 2007). All these models embed key nonlinearities that characterise real neuronal interactions. The most prevalent models are called neural-mass models and are generally formulated as a convolution of inputs to a neuronal ensemble or population to produce an output. Critically, the outputs of one ensemble serve as input to another, after some static transformation. Usually, the convolution operator is linear, whereas the transformation of outputs (e.g., mean depolarisation of pyramidal cells) to inputs (firing rates in presynaptic inputs) is a nonlinear sigmoidal function. This function generates the nonlinear behaviours that are critical for modelling and understanding neuronal activity.

Neural Networks Shuai Li John Hopcroft Center, Shanghai Jiao Tong University

Lecture 6: Neural Networks. Shuai Li, John Hopcroft Center, Shanghai Jiao Tong University. https://shuaili8.github.io https://shuaili8.github.io/Teaching/VE445/index.html Outline: • Perceptron • Activation functions • Multilayer perceptron networks • Training: backpropagation • Examples • Overfitting • Applications. Brief history of artificial neural nets: • The first wave • 1943: McCulloch and Pitts proposed the McCulloch-Pitts neuron model • 1958: Rosenblatt introduced the simple single-layer networks now called Perceptrons • 1969: Minsky and Papert's book Perceptrons demonstrated the limitation of single-layer perceptrons, and almost the whole field went into hibernation • The second wave • 1986: The back-propagation learning algorithm for multi-layer perceptrons was rediscovered and the whole field took off again • The third wave • 2006: Deep (neural network) learning gains popularity • 2012: Deep learning made significant breakthroughs in many applications. Biological neuron structure: • A neuron receives signals from its dendrites and sends its own signal to the axon terminal. Biological neural communication: • Electrical potential across the cell membrane exhibits spikes called action potentials • A spike originates in the cell body, travels down the axon, and causes synaptic terminals to release neurotransmitters • The chemical diffuses across the synapse to dendrites of other neurons • Neurotransmitters can be excitatory or inhibitory • If the net input of neurotransmitters to a neuron from other neurons is excitatory and exceeds some threshold, it fires an action potential. Perceptron: • Inspired by the biological neuron in humans and animals, researchers built a simple model called the Perceptron • It receives signals $x_i$, multiplies them by different weights $w_i$, and passes the sum of the weighted signals through an activation function, the step function, to produce its output. Neuron vs.
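The perceptron described in this excerpt, a weighted sum of input signals passed through a step function, can be sketched as follows. The bias term, the threshold at zero, and the hand-picked AND-gate weights are assumptions added for illustration. They do not appear in the excerpt.

```python
def step(z):
    """Step activation: output 1 once the input reaches zero, else 0."""
    return 1 if z >= 0 else 0

def perceptron(inputs, weights, bias=0.0):
    """Weighted sum of the input signals followed by the step function."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return step(weighted_sum + bias)

# Hypothetical weights that make the perceptron behave like a logical AND.
and_weights = [1.0, 1.0]
and_bias = -1.5
and_outputs = [
    perceptron([a, b], and_weights, and_bias)
    for a in (0, 1)
    for b in (0, 1)
]
```

With these weights the weighted sum only reaches the threshold when both inputs are 1, so the unit fires for exactly one of the four input pairs.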