MDB: a Memory-Mapped Database and Backend for Openldap

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Open Directory & Openldap

Open Directory & OpenLDAP David M. O’Rourke Engineering Manager Overview • Background on Apple’s Open Directory Technology (8 minutes) – What is it – What is Directory Services • How has Apple integrated OpenLDAP (20 minutes or less) – what has Apple added to OpenLDAP? • Questions and Answers (remaining time) Open Directory • Open Directory is a technology name – Covers both client access technologies and server technologies – Integrates and promotes industry standard technologies • Open Directory is built into Mac OS X & Mac OS X Server – Been there since 10.0 • Open Sourced as part of Darwin – http://developer.apple.com/darwin/projects/ opendirectory/ What Is Directory Services • Abstraction API for read/write access to system configuration and management data – Users, groups, mount records and others – Authentication abstraction Mac OS X Software Directory Services NetInfo LDAP BSD Files Other… Directory Services in 10.3 • Includes – LDAPv3 (read/write), Native Active Directory, NetInfo, NIS, BSD/etc files – Service Discovery: Rendezvous, SMB, AppleTalk, and SLP – LDAPv3 client support replication fail over • Documented Access API and plug-in API – SDK posted – Sample code, sample plug-in, notes – Directory Services Headers are installed in – /System/Library/Frameworks/DirectoryService.framework – Command line tool for directory editing ‘dscl’ 10.3 Usage of Directory Services • Login Window uses Directory Services for all user authentication – Managed Desktop • All Security Framework authentication uses Directory Services • Legacy Unix -

The Lightning Memory-Mapped Database

The Lightning Memory-Mapped Database Howard Chu CTO, Symas Corp. [email protected] Chief Architect, OpenLDAP [email protected] 2015-09-21 OpenLDAP Project ● Open source code project ● Founded 1998 ● Three core team members ● A dozen or so contributors ● Feature releases every 12-18 months ● Maintenance releases as needed 2 A Word About Symas ● Founded 1999 ● Founders from Enterprise Software world – platinum Technology (Locus Computing) – IBM ● Howard joined OpenLDAP in 1999 – One of the Core Team members – Appointed Chief Architect January 2007 ● No debt, no VC investments: self-funded 3 Intro ● Howard Chu – Founder and CTO Symas Corp. – Developing Free/Open Source software since 1980s ● GNU compiler toolchain, e.g. "gmake -j", etc. ● Many other projects... – Worked for NASA/JPL, wrote software for Space Shuttle, etc. 4 Topics (1) Background (2) Features (3) Design Approach (4) Internals (5) Special Features (6) Results 5 (1) Background ● API inspired by Berkeley DB (BDB) – OpenLDAP has used BDB extensively since 1999 – Deep experience with pros and cons of BDB design and implementation – Omits BDB features that were found to be of no benefit ● e.g. extensible hashing – Avoids BDB characteristics that were problematic ● e.g. cache tuning, complex locking, transaction logs, recovery 6 (2) Features LMDB At A Glance ● Key/Value store using B+trees ● Fully transactional, ACID compliant ● MVCC, readers never block ● Uses memory-mapped files, needs no tuning ● Crash-proof, no recovery needed after restart ● Highly optimized, extremely compact – under 40KB object code, fits in CPU L1 I$ ● Runs on most modern OSs – Linux, Android, *BSD, MacOSX, iOS, Solaris, Windows, etc.. -

Overview and Frequently Asked Questions

Overview and Frequently Asked Questions OVERVIEW Oracle Buys Open Source Software Company Sleepycat - Expands Embedded Database Product Line with Berkeley DB Oracle has expanded its embedded Oracle’s portfolio of embedded database technologies provide database offerings through the customers with a range of technical options to meet their diverse needs, with the backing of a global support organization. Oracle acquisition of Sleepycat Software, Inc., and Sleepycat’s complementary products, expertise and resources a privately held supplier of open source will help accelerate innovation and provide customers with richer, database software for developers of more complete solutions. Oracle currently intends to continue embedded applications. Sleepycat’s dual license model and plans to continue to invest in the Berkeley DB products and support offerings, and to utilize Oracle’s global channel reach to service even more customers. With the proliferation of new applications, devices, appliances, Web Services and highly available Software as a Service (SaaS), In conjunction with the expansion of available embedded there is significant and growing demand for embedded databases. database options, Oracle has formed a global initiative focused In addition to Oracle Database Lite Edition for mobile devices on marketing, selling and servicing the Oracle Lite, TimesTen and Oracle TimesTen In-Memory Database for highly dynamic, and Berkeley DB products. This will include support procedures standards-compliant applications, Oracle now adds Berkeley DB and product release cycles tuned to the rapid-response needs database products for developers of tightly embedded, highly of embedded systems developers, and packaging and pricing customized applications. Berkeley DB is added to the Oracle flexibility that adjusts to the needs of Oracle’s partners. -

Mac OS X Server Administrator's Guide

034-9285.S4AdminPDF 6/27/02 2:07 PM Page 1 Mac OS X Server Administrator’s Guide K Apple Computer, Inc. © 2002 Apple Computer, Inc. All rights reserved. Under the copyright laws, this publication may not be copied, in whole or in part, without the written consent of Apple. The Apple logo is a trademark of Apple Computer, Inc., registered in the U.S. and other countries. Use of the “keyboard” Apple logo (Option-Shift-K) for commercial purposes without the prior written consent of Apple may constitute trademark infringement and unfair competition in violation of federal and state laws. Apple, the Apple logo, AppleScript, AppleShare, AppleTalk, ColorSync, FireWire, Keychain, Mac, Macintosh, Power Macintosh, QuickTime, Sherlock, and WebObjects are trademarks of Apple Computer, Inc., registered in the U.S. and other countries. AirPort, Extensions Manager, Finder, iMac, and Power Mac are trademarks of Apple Computer, Inc. Adobe and PostScript are trademarks of Adobe Systems Incorporated. Java and all Java-based trademarks and logos are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. Netscape Navigator is a trademark of Netscape Communications Corporation. RealAudio is a trademark of Progressive Networks, Inc. © 1995–2001 The Apache Group. All rights reserved. UNIX is a registered trademark in the United States and other countries, licensed exclusively through X/Open Company, Ltd. 062-9285/7-26-02 LL9285.Book Page 3 Tuesday, June 25, 2002 3:59 PM Contents Preface How to Use This Guide 39 What’s Included -

Brave Gnu World

LINUXCOVERCOMMUNITY USERSTORY SchlagwortSchlagwortBrave GNU sollte Worldsollte hier hier stehen stehen Schlagwort sollte hier stehen COVER STORY The Monthly GNU Column BRAVE GNU WORLD This column looks into projects and current affairs in the world of free software from the perspective of the GNU Project and the FSF. In this issue, I’ll focus on Comspari and the EU decision on software patents. BY GEORG C.F. GREVE n the past, the German government the Kroupware project in 2002. The pub- principles back to front. For example, has often caused a (positive) stir due lic tender by the BSI was aimed to pro- many other solutions are based on the Ito its activities with free software. duce a groupware solution that would principle that the server is the king of The Federal Ministry of the Economy support strong cryptography and inte- the hill and the users have to bow down (BMWi) sponsored Gnupg [1], for exam- grate seamlessly into a heterogeneous and worship it. The Kolab server does ple. Add to this a number of accompany- environment. things differently. The program makes ing activities, such as a report by the The government office gave a consor- the user king, and gives the user power Bavarian Accounts Office, the KBST let- tium comprising Erfrakon, Klarälvdalens to do things. The software simply helps ter, and the highly acclaimed migration Datakonsult [2], and Intevation [3] the coordinate things as a central intermedi- guide by the Federal Ministry of the Inte- task of developing the software. Inter- ary. rior (BMI). nally, the design and the software was Kolab 1 combined so-called partial referred to as Kolab 1. -

Working with Oracle® Solaris 11.4 Directory and Naming Services: LDAP

® Working With Oracle Solaris 11.4 Directory and Naming Services: LDAP Part No: E61012 November 2020 Working With Oracle Solaris 11.4 Directory and Naming Services: LDAP Part No: E61012 Copyright © 2002, 2020, Oracle and/or its affiliates. License Restrictions Warranty/Consequential Damages Disclaimer This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. Warranty Disclaimer The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. Restricted Rights Notice If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users -

Managing Secure Shell Access in Oracle® Solaris 11.4

® Managing Secure Shell Access in Oracle Solaris 11.4 Part No: E61025 June 2019 Managing Secure Shell Access in Oracle Solaris 11.4 Part No: E61025 Copyright © 2002, 2019, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. -

Administering Avaya Aura® Device Services

Administering Avaya Aura® Device Services Release 7.1.5 Issue 7 April 2019 © 2016-2019, Avaya Inc. USES AND/OR INSTALLS AVAYA SOFTWARE, PURCHASED All Rights Reserved. FROM AVAYA INC., ANY AVAYA AFFILIATE, OR AN AVAYA CHANNEL PARTNER (AS APPLICABLE) UNDER A COMMERCIAL Notice AGREEMENT WITH AVAYA OR AN AVAYA CHANNEL PARTNER. While reasonable efforts have been made to ensure that the UNLESS OTHERWISE AGREED TO BY AVAYA IN WRITING, information in this document is complete and accurate at the time of AVAYA DOES NOT EXTEND THIS LICENSE IF THE SOFTWARE printing, Avaya assumes no liability for any errors. Avaya reserves WAS OBTAINED FROM ANYONE OTHER THAN AVAYA, AN AVAYA the right to make changes and corrections to the information in this AFFILIATE OR AN AVAYA CHANNEL PARTNER; AVAYA document without the obligation to notify any person or organization RESERVES THE RIGHT TO TAKE LEGAL ACTION AGAINST YOU of such changes. AND ANYONE ELSE USING OR SELLING THE SOFTWARE WITHOUT A LICENSE. BY INSTALLING, DOWNLOADING OR Documentation disclaimer USING THE SOFTWARE, OR AUTHORIZING OTHERS TO DO SO, “Documentation” means information published in varying mediums YOU, ON BEHALF OF YOURSELF AND THE ENTITY FOR WHOM which may include product information, operating instructions and YOU ARE INSTALLING, DOWNLOADING OR USING THE performance specifications that are generally made available to users SOFTWARE (HEREINAFTER REFERRED TO of products. Documentation does not include marketing materials. INTERCHANGEABLY AS “YOU” AND “END USER”), AGREE TO Avaya shall not be responsible for any modifications, additions, or THESE TERMS AND CONDITIONS AND CREATE A BINDING deletions to the original published version of Documentation unless CONTRACT BETWEEN YOU AND AVAYA INC. -

LDAP-HOWTO.Pdf

LDAP Linux HOWTO Luiz Ernesto Pinheiro Malère <malere _at_ yahoo.com> v1.10, 2007−03−18 Revision History Revision 1.10 2007/03/18 Pointer to updated documentation Revision 1.09 2004/03/05 OpenLDAP 2.2 and general corrections. Revision 1.08 2003/04/02 SASL with DIGEST−MD5 authentication. Revision 1.07 2002/09/16 Typo correction. Revision 1.06 2002/07/17 Migration to DocBook XML standard, revision of the role document. Introducing OpenLDAP 2.1. Revision 1.05 2001/06/22 Revised by: lepm Correction of long lines that were causing inconsistences on the PDF version of the document. Revision 1.04 2001/02/28 Revised by: lepm Correction of more typos and update on the following sections: Roaming Access, Authentication using LDAP. Revision 1.03 2000/09/28 Revised by: lepm Presenting OpenLDAP 2.0, which comprises LDAPv3, as defined on RFC2251 Revision 1.02 2000/09/13 Revised by: lepm Correction of typos and addition of the section History of Releases. Revision 1.01 2000/02/15 Revised by: lepm Added the following sections: LDAP Migration Tools, Authentication using LDAP, Graphical LDAP tools, RFCs. Revision 1.00 1999/06/20 Revised by: lepm Initial version. Information about installing, configuring, running and maintaining a LDAP (Lightweight Directory Access Protocol) Server on a Linux machine is presented on this document. The document also presents details about how to create LDAP databases, how to add, how to update and how to delete information on the directory. This paper is mostly based on the University of Michigan LDAP information pages and on the OpenLDAP Administrator's Guide. -

Openldap Documentation Release 2.4.42

OpenLDAP Documentation Release 2.4.42 OpenLDAP Foundation May 10, 2017 Contents 1 Preface 1 1.1 Preface..................................................2 1.2 Introduction to OpenLDAP Directory Services.............................3 1.3 Quick Start................................................ 11 1.4 The Big Picture.............................................. 15 1.5 Building and Installing OpenLDAP Software.............................. 16 1.6 Configuring slapd............................................ 16 1.7 Running slapd.............................................. 16 1.8 Access Control.............................................. 16 1.9 Database Creation and Maintenance Tools............................... 16 1.10 Backends................................................. 16 1.11 Schema Specification........................................... 16 1.12 Security Considerations......................................... 16 1.13 Using SASL............................................... 16 1.14 Using TLS................................................ 16 1.15 OpenLDAP Software Copyright Notices................................ 16 1.16 OpenLDAP Public License....................................... 18 1.17 Glossary................................................. 18 2 Indices and tables 19 i ii CHAPTER 1 Preface This document is considered a part of OpenLDAP Software. This document is subject to terms of conditions set forth in OpenLDAP Software Copyright Notices and the OpenLDAP Public License. Complete copies of the notices and associated -

Enhancing the Performance of Openldap Directory Server with Multiple Caching

Enhancing the Performance of OpenLDAP Directory Server with Multiple Caching Jong Hyuk Choi and Hubertus Franke Kurt Zeilenga IBM Thomas J. Watson Research Center The OpenLDAP Foundation P.O. Box 218, Yorktown Heights, NY 10598 [email protected] jongchoi,frankeh¡ @us.ibm.com Keywords—Directory, LDAP, Caching, Linux, Profiling. This paper presents our efforts to enhance OpenLDAP [2], Abstract— Directory is a specialized data store optimized for ef- an open-source directory software suite. More specifically, this ficient information retrieval which has standard information model, paper focuses on the performance and reliability of slapd, the naming scheme, and access protocol for interoperability over net- standalone LDAP server of OpenLDAP. We participated in the work. It stores critical data such as user, resource, and policy infor- mation in the enterprise computing environment. This paper presents development of back-bdb, a transactional backend of OpenL- a performance driven design of a transactional backend of the OpenL- DAP, which directly utilizes the underlying Berkeley DB with- DAP open-source directory server which provides improved reliabil- out the general backend API layer used in the existing OpenL- ity with high performance. Based on a detailed system-level profiling DAP backend, back-ldbm. Back-bdb provides a higher level of of OpenLDAP on the Linux OS, we identify major performance bot- reliability and concurrency. The transaction support of back- tlenecks. After investigating the characteristics of each bottleneck, we propose a set of caching techniques in order to eliminate them: bdb makes directory recoverable from temporary failures and directory entry cache, search candidate cache, IDL (ID List) cache, disasters, while the page level locking enables concurrent ac- IDL stack slab cache, and BER (Basic Encoding Rule) transfer cache. -

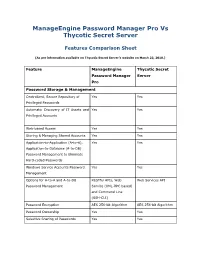

Manageengine Password Manager Pro Vs Thycotic Secret Server

ManageEngine Password Manager Pro Vs Thycotic Secret Server Features Comparison Sheet (As per information available on Thycotic Secret Server’s website on March 23, 2018.) Feature ManageEngine Thycotic Secret Password Manager Server Pro Password Storage & Management Centralized, Secure Repository of Yes Yes Privileged Passwords Automatic Discovery of IT Assets and Yes Yes Privileged Accounts Web-based Access Yes Yes Storing & Managing Shared Accounts Yes Yes Application-to-Application (A-to-A), Yes Yes Application-to-Database (A-to-DB) Password Management to Eliminate Hard-coded Passwords Windows Service Accounts Password Yes Yes Management Options for A-to-A and A-to-DB RESTful APIs, Web Web Services API Password Management Service (XML-RPC based) and Command Line (SSH-CLI) Password Encryption AES 256-bit Algorithm AES 256-bit Algorithm Password Ownership Yes Yes Selective Sharing of Passwords Yes Yes Fine-grained, role-based permission to Yes Yes view/ edit/manage Passwords Grouping Resources / Passwords Bulk Yes Yes Operation Storing Files, Images, Digital Identities Yes Yes Remote Password Reset Synchronizing Passwords of Remote Yes Yes Resources Deploying Agents for Remote Yes Yes Password Reset Agent-less Remote Password Reset Yes Yes Scheduled, Automatic Password Yes Yes Rotation Verifying the Integrity of the Yes Yes Passwords in Target Systems Windows Service Account Reset Yes Yes Provision for triggering any follow-up Yes Yes action after Password Reset Direct Connection to Remote Yes Yes Resources in Data Centers by Configuring Landing Servers (Jump Servers) Platforms Support for Remote Password Reset Supported Platforms for Remote Operating Systems ● Active Directory Password Reset Windows, Windows ● Blue Coat Domain, Flavors of UNIX ● LDAP (Active and Linux, Solaris, Mac Directory, OS, HP iLO OpenLDAP, LDAPS) Windows Applications ● Unix/Linux/Mac Service accounts, (incl.