Naturalness and Emergence



Recommended publications


Naturalness and New Approaches to the Hierarchy Problem

PiTP 2017. Nathaniel Craig, Department of Physics, University of California, Santa Barbara, CA 93106.

No warranty expressed or implied. This will eventually grow into a more polished public document, so please don't disseminate beyond the PiTP community, but please do enjoy. Suggestions, clarifications, and comments are welcome.

Contents: 1 Introduction (1.0.1 The proton mass; 1.0.2 Flavor hierarchies); 2 The Electroweak Hierarchy Problem (2.1 A toy model; 2.2 The naturalness strategy); 3 Old Hierarchy Solutions (3.1 Lowered cutoff; 3.2 Symmetries, with 3.2.1 Supersymmetry and 3.2.2 Global symmetry; 3.3 Vacuum selection); 4 New Hierarchy Solutions (4.1 Twin Higgs / Neutral naturalness; 4.2 Relaxion, with 4.2.1 QCD/QCD′ Relaxion and 4.2.2 Interactive Relaxion; 4.3 NNaturalness); 5 Rampant Speculation (5.1 UV/IR mixing); 6 Conclusion

1 Introduction

What are the natural sizes of parameters in a quantum field theory? The original notion is the result of an aggregation of different ideas, starting with Dirac's Large Numbers Hypothesis (“Any two of the very large dimensionless numbers occurring in Nature are connected by a simple mathematical relation, in which the coefficients are of the order of magnitude unity” [1]), which was not quantum in nature, to Gell-Mann's Totalitarian Principle (“Anything that is not compulsory is forbidden.” [2]), to refinements by Wilson and 't Hooft in more modern language.

Solving the Hierarchy Problem

Joseph Lykken, Fermilab/U. Chicago

Puzzle of the day: why is gravity so weak? Answer: because there are large or warped extra dimensions about to be discovered at colliders.

Puzzle of the day: why is gravity so weak? Real answer: don’t know. Many possibilities; may not even be a well-posed question.

Outline of this lecture
• what is the hierarchy problem of the Standard Model
• is it really a problem?
• what are the ways to solve it?
• how is this related to gravity?

What is the hierarchy problem of the Standard Model?
• discuss concepts of naturalness and UV sensitivity in field theory
• discuss Higgs naturalness problem in SM
• discuss extra assumptions that lead to the hierarchy problem of SM

UV sensitivity
• Ken Wilson taught us how to think about field theory. Diagram, along an energy axis: “UV completion” = high energy effective field theory; matching scale, Λ; low energy effective field theory, e.g. SM.

UV sensitivity
• how much do physical parameters of the low energy theory depend on details of the UV matching (i.e. short distance physics)?
• if you know both the low and high energy theories, can answer this question precisely
• if you don’t know the high energy theory, use a crude estimate: how much do the low energy observables change if, e.g. you let Λ → 2Λ?

Degrees of UV sensitivity (parameter: UV sensitivity)
• “finite” quantities: none -- UV insensitive
• dimensionless couplings, e.g. gauge or Yukawa couplings: logarithmic -- UV insensitive
• dimension-full coefs of higher dimension operators: inverse power of cutoff -- “irrelevant”, e.g. …
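The crude estimate described in the excerpt above (ask how much a low-energy observable changes when Λ → 2Λ) can be illustrated with a minimal Python sketch. The three toy functions, their normalizations, and the reference scale `MU` are hypothetical, chosen only to mirror the three rows of the excerpt's list of UV sensitivities; they are not taken from the lecture.

```python
import math

MU = 100.0  # hypothetical low-energy reference scale (arbitrary units)


def finite_quantity(cutoff):
    """A "finite" quantity: no dependence on the cutoff at all."""
    return 1.0


def log_sensitive(cutoff, coefficient=0.01):
    """Toy dimensionless coupling with a logarithmic cutoff dependence."""
    return 1.0 + coefficient * math.log(cutoff / MU)


def inverse_power(cutoff, power=2):
    """Toy coefficient of a higher-dimension operator, scaling as 1/cutoff**power."""
    return 1.0 / cutoff**power


def doubling_response(observable, cutoff):
    """Relative change of `observable` when the cutoff goes from L to 2L."""
    before = observable(cutoff)
    after = observable(2.0 * cutoff)
    return (after - before) / before


if __name__ == "__main__":
    cutoff = 1.0e4  # hypothetical matching scale, same units as MU
    for name, obs in [
        ("finite", finite_quantity),
        ("logarithmic", log_sensitive),
        ("inverse power", inverse_power),
    ]:
        print(f"{name:>13s}: {doubling_response(obs, cutoff):+.4f}")
```

In this toy setup the finite quantity does not move, the logarithmic one shifts by well under a percent, and the inverse-power coefficient changes by a large fraction while its absolute size shrinks as the cutoff grows, which is the sense in which such operators are called “irrelevant”.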

A Modified Naturalness Principle and Its Experimental Tests

Marco Farina (a), Duccio Pappadopulo (b,c) and Alessandro Strumia (d,e)

(a) Department of Physics, LEPP, Cornell University, Ithaca, NY 14853, USA; (b) Department of Physics, University of California, Berkeley, CA 94720, USA; (c) Theoretical Physics Group, Lawrence Berkeley National Laboratory, Berkeley, USA; (d) Dipartimento di Fisica dell’Università di Pisa and INFN, Italia; (e) National Institute of Chemical Physics and Biophysics, Tallinn, Estonia

arXiv:1303.7244v3 [hep-ph] 29 Apr 2014

Abstract: Motivated by LHC results, we modify the usual criterion for naturalness by ignoring the uncomputable power divergences. The Standard Model satisfies the modified criterion (‘finite naturalness’) for the measured values of its parameters. Extensions of the SM motivated by observations (Dark Matter, neutrino masses, the strong CP problem, vacuum instability, inflation) satisfy finite naturalness in special ranges of their parameter spaces which often imply new particles below a few TeV. Finite naturalness bounds are weaker than usual naturalness bounds because any new particle with SM gauge interactions gives a finite contribution to the Higgs mass at two loop order.

Contents: 1 Introduction; 2 The Standard Model; 3 Finite naturalness, neutrino masses and leptogenesis (3.1 Type-I see saw; 3.2 Type-III see saw; 3.3 Type-II see saw); 4 Finite naturalness and Dark Matter (4.1 Minimal Dark Matter; 4.2 The scalar singlet DM model; 4.3 The fermion singlet model); 5 Finite naturalness and axions (5.1 KSVZ axions; 5.2 DFSZ axions); 6 Finite naturalness, vacuum decay and inflation; 7 Conclusions

1 Introduction

The naturalness principle strongly influenced high-energy physics in the past decades [1], leading to the belief that physics beyond the Standard Model (SM) must exist at a scale $\Lambda_{\rm NP}$ such that quadratically divergent quantum corrections to the Higgs squared mass are made finite (presumably up to a log divergence) and not much larger than the Higgs mass $M_h$ itself.

![The Discreet Charm of Higgsino Dark Matter: A Pocket Review](https://docslib.b-cdn.net/cover/4850/hep-ph-19-nov-2018-784850.webp)

The Discreet Charm of Higgsino Dark Matter: A Pocket Review

Kamila Kowalska and Enrico Maria Sessolo, National Centre for Nuclear Research, Hoża 69, 00-681 Warsaw, Poland

arXiv:1802.04097v3 [hep-ph] 19 Nov 2018

Abstract: We give a brief review of the current constraints and prospects for detection of higgsino dark matter in low-scale supersymmetry. In the first part we argue, after performing a survey of all potential dark matter particles in the MSSM, that the (nearly) pure higgsino is the only candidate emerging virtually unscathed from the wealth of observational data of recent years. In doing so by virtue of its gauge quantum numbers and electroweak symmetry breaking only, it maintains at the same time a relatively high degree of model-independence. In the second part we properly review the prospects for detection of a higgsino-like neutralino in direct underground dark matter searches, collider searches, and indirect astrophysical signals. We provide estimates for the typical scale of the superpartners and fine tuning in the context of traditional scenarios where the breaking of supersymmetry is mediated at about the scale of Grand Unification and where strong expectations for a timely detection of higgsinos in underground detectors are closely related to the measured 125 GeV mass of the Higgs boson at the LHC.

Contents: 1 Introduction; 2 Dark matter in the MSSM (2.1 SU(2) singlets; 2.2 SU(2) doublets; 2.3 SU(2) adjoint triplet; 2.4 Mixed cases); 3 Phenomenology of higgsino dark matter (3.1 Prospects for detection in direct and indirect searches; …)

A Natural Introduction to Fine-Tuning

Julian De Vuyst, Department of Physics & Astronomy, Ghent University, Krijgslaan, S9, 9000 Ghent, Belgium

arXiv:2012.05617v1 [physics.hist-ph] 10 Dec 2020

Abstract: A well-known topic within the philosophy of physics is the problem of fine-tuning: the fact that the universal constants seem to take non-arbitrary values in order for life to thrive in our Universe. In this paper we will talk about this problem in general, giving some examples from physics. We will review some solutions like the design argument, logical probability, cosmological natural selection, etc. Moreover, we will also discuss why it's dangerous to uphold the Principle of Naturalness as a scientific principle. After going through this paper, the reader should have a general idea of what this problem exactly entails whenever it is mentioned in other sources, and we recommend that the reader think critically about these concepts.

Contents: 1 Introduction; 2 A Take on Constants (2.I The Role of Units; 2.II Derived vs Fundamental Constants); 3 The Concept of Naturalness (3.I Technical Naturalness, with 3.I.a A Wilsonian Perspective and 3.I.b A Brief History; 3.II Numerical/General Naturalness); 4 The Fine-Tuning Problem; 5 Some Examples from Physics (5.I The Cosmological Constant Problem; 5.II The Flatness Problem; 5.III The Higgs Mass; 5.IV The Strong CP Problem); 6 Resolutions to Fine-Tuning (6.I We are here, full stop; 6.II Designed like Clockwork; …)

Naturalness and Mixed Axion-Higgsino Dark Matter

Howard Baer, University of Oklahoma. UCLA DM meeting, Feb. 18, 2016.

ADMX, LZ: what is the connection?

The naturalness issue: naturalness = no large unnatural tuning in W, Z, h masses. Labelled terms: up-Higgs soft term, radiative corrections, superpotential mu term. Well-mixed TeV-scale stops suppress Sigma while lifting m(h)~125 GeV.

What about other measures? EENZ/BG: $\Delta_{\rm EENZ/BG} = \max_i \left| \frac{\partial \log m_Z^2}{\partial \log p_i} \right|$, where $p_i$ are parameters.
* in past, applied to multi-param. effective theories;
* in fundamental theory, param’s dependent
* e.g. in gravity-mediation, apply to mu, m_3/2
* then agrees with Delta_EW

What about large logs and light stops?
* overzealous use of ~ symbol
* combine dependent terms
* cancellations possible
* then agrees with Delta_EW

SUSY mu problem: mu term is SUSY, not SUSY breaking: expect mu~M(Pl) but phenomenology requires mu~m(Z)
• NMSSM: mu~m(3/2); beware singlets!
• Giudice-Masiero: mu forbidden by some symmetry: generate via Higgs coupling to hidden sector
• Kim-Nilles!: invoke SUSY version of DFSZ axion solution to strong CP

KN: PQ symmetry forbids mu term, but then it is generated via PQ breaking.

Little Hierarchy due to mismatch between PQ breaking and SUSY breaking scales? $m_{3/2} \sim m_{\rm hid}^2 / M_P$, $f_a \ll m_{\rm hid}$

Higgs mass tells us where to look for axion! $m_a \sim 6.2\,\mu{\rm eV} \left( \frac{10^{12}\,{\rm GeV}}{f_a} \right)$

Simple mechanism to suppress up-Higgs soft term: radiative EWSB => radiatively-driven naturalness. But what about SUSY mu term?

There is a Little Hierarchy, but it is no problem: $\mu \ll m_{3/2}$

Mainly higgsino-like WIMPs thermally

Exploring Supersymmetry and Naturalness in Light of New Experimental Data

by Kevin Earl. A thesis submitted to the Faculty of Graduate and Postdoctoral Affairs in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Physics. Department of Physics, Carleton University, Ottawa-Carleton Institute for Physics, Ottawa, Canada. August 27, 2019. Copyright © 2019 Kevin Earl

Abstract: This thesis investigates extensions of the Standard Model (SM) that are based on either supersymmetry or the Twin Higgs model. New experimental data, primarily collected at the Large Hadron Collider (LHC), play an important role in these investigations. Specifically, we examine the following five cases.

We first consider Mini-Split models of supersymmetry. These types of models can be generated by both anomaly and gauge mediation and we examine both cases. LHC searches are used to constrain the relevant parameter spaces, and future prospects at LHC 14 and a 100 TeV proton proton collider are investigated.

Next, we study a scenario where Higgsino neutralinos and charginos are pair produced at the LHC and promptly decay due to the baryonic R-parity violating superpotential operator $\lambda'' U^c D^c D^c$. More precisely, we examine this phenomenology in the case of a single non-zero $\lambda''_{3jk}$ coupling. By recasting an experimental search, we derive novel constraints on this scenario.

We then introduce an R-symmetric model of supersymmetry where the R-symmetry can be identified with baryon number. This allows the operator $\lambda'' U^c D^c D^c$ in the superpotential without breaking baryon number. However, the R-symmetry will be broken by at least anomaly mediation and this reintroduces baryon number violation.

![Two Notions of Naturalness](https://docslib.b-cdn.net/cover/1623/arxiv-1812-08975v1-physics-hist-ph-21-dec-2018-lhc-and-many-particle-physicists-expected-bsm-physics-to-be-detected-1471623.webp)

Two Notions of Naturalness

Porter Williams

arXiv:1812.08975v1 [physics.hist-ph] 21 Dec 2018

Abstract: My aim in this paper is twofold: (i) to distinguish two notions of naturalness employed in Beyond the Standard Model (BSM) physics and (ii) to argue that recognizing this distinction has methodological consequences. One notion of naturalness is an “autonomy of scales” requirement: it prohibits sensitive dependence of an effective field theory's low-energy observables on precise specification of the theory's description of cutoff-scale physics. I will argue that considerations from the general structure of effective field theory provide justification for the role this notion of naturalness has played in BSM model construction. A second, distinct notion construes naturalness as a statistical principle requiring that the values of the parameters in an effective field theory be “likely” given some appropriately chosen measure on some appropriately circumscribed space of models. I argue that these two notions are historically and conceptually related but are motivated by distinct theoretical considerations and admit of distinct kinds of solution.

1 Introduction

Since the late 1970s, attempting to satisfy a principle of “naturalness” has been an influential guide for particle physicists engaged in constructing speculative models of Beyond the Standard Model (BSM) physics. This principle has both been used as a constraint on the properties that models of BSM physics must possess and shaped expectations about the energy scales at which BSM physics will be detected by experiments.

The most pressing problem of naturalness in the Standard Model is the Hierarchy Problem: the problem of maintaining a scale of electroweak symmetry breaking (EWSB) many orders of magnitude lower than the scale at which physics not included in the Standard Model becomes important. Models that provided natural solutions to the Hierarchy Problem predicted BSM physics at energy scales that would be probed by the LHC, and many particle physicists expected BSM physics to be detected.

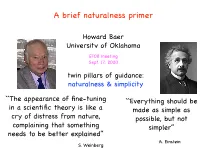

A Brief Naturalness Primer

Howard Baer, University of Oklahoma. EF08 meeting, Sept. 17, 2020.

Twin pillars of guidance: naturalness & simplicity
“The appearance of fine-tuning in a scientific theory is like a cry of distress from nature, complaining that something needs to be better explained” (S. Weinberg)
“Everything should be made as simple as possible, but not simpler” (A. Einstein)

Where are the sparticles? BG naturalness: m(gluino, stop) <~ 400 GeV; $m_{\tilde g} > 2.25$ TeV, $m_{\tilde t_1} > 1.1$ TeV. Is SUSY a failed enterprise (as is often claimed in popular press)?

Putting Dirac and ’t Hooft naturalness aside, what we usually refer to as natural is practical naturalness: an observable $\mathcal{O} \equiv o_1 + \cdots + o_n$ is natural if all independent contributions $o_i$ to $\mathcal{O}$ are comparable to or less than $\mathcal{O}$.

$\frac{m_Z^2}{2} = \frac{m_{H_d}^2 + \Sigma_d^d - (m_{H_u}^2 + \Sigma_u^u)\tan^2\beta}{\tan^2\beta - 1} - \mu^2 \simeq -m_{H_u}^2 - \Sigma_u^u(\tilde t_{1,2}) - \mu^2$

HB, Barger, Huang, Mustafayev, Tata, arXiv:1207.3343

The bigger the soft term, the more natural is its weak scale value, until EW symmetry no longer broken: living dangerously!

EENZ/BG naturalness: $\Delta_{\rm EENZ/BG} \equiv \max_i \left| \frac{\partial \log m_Z^2}{\partial \log p_i} \right|$
• depends on input parameters of model
• different answers for same inputs assuming different models

Parameters introduced to parametrize our ignorance of SUSY breaking; not expected to be fundamental. E.g. SUSY with dilaton-dominated breaking: $m_0^2 = m_{3/2}^2$ with $m_{1/2} = -A_0 = \sqrt{3}\, m_{3/2}$ (doesn’t make sense to use independent m0, mhf, A0).

While $\Delta_{\rm BG}$ tells us about fine-tuning in our computer codes, what we really want to know is: is nature fine-tuned or natural? For correlated soft terms, then $\Delta_{\rm BG} \to \Delta_{\rm EW}$. Alternatively, only place independent soft terms makes sense is in multiverse: but then selection effects in action.

High scale (HS, stop mass) measure: $m_h^2 \simeq \mu^2 + m_{H_u}^2({\rm weak}) = \mu^2 + m_{H_u}^2(\Lambda) + \delta m_{H_u}^2$

Implies three 3rd generation squarks < 500 GeV: SUSY ruled out under $\Delta_{\rm HS} \equiv \delta m_{H_u}^2 / m_h^2$. BUT! too many terms ignored! NOT VALID!

The bigger $m_{H_u}^2(\Lambda)$ is, the bigger is the cancelling correction: these terms are not independent.
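The EENZ/BG measure quoted in the slides above, the largest logarithmic sensitivity of $m_Z^2$ to a set of parameters $p_i$, can be evaluated numerically. The following minimal Python sketch does so under loud assumptions: it uses the approximate relation $m_Z^2/2 \simeq -m_{H_u}^2 - \mu^2$ with the radiative correction $\Sigma_u^u$ dropped, it treats the weak-scale $m_{H_u}^2$ and $\mu^2$ as the independent parameters, and the value $\mu = 150$ GeV is a hypothetical illustration. As the slides stress, the answer depends on which parameters are taken as independent, so the number produced here is not a statement about any real model.

```python
import math

M_Z = 91.19  # GeV, Z boson mass


def mz_squared(params):
    """Toy weak-scale relation m_Z^2 = -2 * (m_Hu^2 + mu^2); Sigma terms dropped."""
    return -2.0 * (params["mHu2"] + params["mu2"])


def log_sensitivities(observable, params, rel_step=1e-6):
    """Return |d log(observable) / d log(p_i)| for each p_i by central differences."""
    result = {}
    for name, value in params.items():
        up = dict(params, **{name: value * (1.0 + rel_step)})
        down = dict(params, **{name: value * (1.0 - rel_step)})
        dlog_obs = math.log(observable(up) / observable(down))
        dlog_p = math.log((1.0 + rel_step) / (1.0 - rel_step))
        result[name] = abs(dlog_obs / dlog_p)
    return result


def delta_bg(observable, params):
    """Largest logarithmic sensitivity over the chosen parameter set."""
    return max(log_sensitivities(observable, params).values())


# Hypothetical toy point: mu = 150 GeV, with m_Hu^2 fixed so that m_Z comes out right.
mu2 = 150.0**2
toy_point = {"mu2": mu2, "mHu2": -(mu2 + 0.5 * M_Z**2)}

if __name__ == "__main__":
    for name, value in log_sensitivities(mz_squared, toy_point).items():
        print(f"{name}: {value:.2f}")
    print(f"Delta_BG (toy): {delta_bg(mz_squared, toy_point):.2f}")
```

For this linear toy relation the sensitivities reduce to $2|p_i|/m_Z^2$, so raising the hypothetical $\mu$ raises the measure; correlating the parameters, as the slides argue one should for dependent soft terms, would change the result.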

Naturalness As a Reasonable Scientific Principle in Fundamental Physics

Candidate: Casper Daniël Dijkstra. Student Number: s2026104. Master's thesis for Philosophy of a Specific Discipline. Specialization: Natural Sciences. Supervisor: Prof. Dr. Simon Friederich. Second examiner: Prof. Dr. Leah Henderson. Third examiner: Prof. Dr. Frank Hindriks. Academic year: 2017/2018.

arXiv:1906.03036v1 [physics.hist-ph] 19 Apr 2019

Abstract: Underdetermination by data hinders experimental physicists from testing legions of fundamental theories in high energy physics, since these theories predict modifications of well-entrenched theories in the deep UV and these high energies cannot be probed in the near future. Several heuristics have proven successful in order to assess models non-empirically and “naturalness” has become an oft-used heuristic since the late 1970s. The utility of naturalness is becoming progressively more contested, for several reasons. The fact that many notions of the principle have been put forward in the literature (some of which are discordant) has obscured the physical content of the principle, causing confusion as to what naturalness actually imposes. Additionally, naturalness has been criticized as a “sociological instrument” (Grinbaum 2007, p.18), as an ill-defined dogma (e.g. by Hossenfelder (2018a, p.15)) and as having an “aesthetic character” which is fundamentally different from other scientific principles (e.g. by Donoghue (2008, p.1)). I aim to clarify the physical content and significance of naturalness. Physicists' earliest understanding of naturalness, as an autonomy of scales (AoS) requirement, provides the most cogent definition of naturalness and I will assert that i) this provides a uniform notion which undergirds a myriad of prominent naturalness conditions, ii) this is a reasonable criterion to impose on EFTs and iii) the successes and violations of naturalness are best understood when adhering to this notion of naturalness.

SUSY Naturalness, PQ Symmetry and the Landscape

Howard Baer, University of Oklahoma. Is SUSY Alive and Well? Madrid, Sept. 2016.

Twin pillars of guidance: naturalness & simplicity
“The appearance of fine-tuning in a scientific theory is like a cry of distress from nature, complaining that something needs to be better explained” (S. Weinberg)
“Everything should be made as simple as possible, but not simpler” (A. Einstein)

Nature sure looks like SUSY
• stabilize Higgs mass
• measured gauge couplings
• m(t)~173 GeV for REWSB
• mh(125): squarely within SUSY window

Recent search results from ATLAS run 2 @ 13 TeV: evidently $m_{\tilde g} > 1.9$ TeV. Compare: BG naturalness (1987): $m_{\tilde g} < 0.35$ TeV. Or is SUSY dead? How to disprove SUSY? When it becomes “unnatural”? This brings up naturalness issue.

Mark Twain, 1835-1910 (or SUSY) 1897

Oft-repeated myths about naturalness
• requires m(t1,t2,b1)<500 GeV
• requires small At parameter
• requires m(gluino)<400, no 1000, no 1500 GeV?
• MSSM is fine-tuned to .1% - needs modification
• naturalness is subjective/non-predictive
• different measures predict different things

This talk will refute all these points! HB, Barger, Savoy, arXiv:1502.04127

And present a beautiful alternative: radiatively-driven naturalness

Most claims against SUSY stem from overestimates of EW fine-tuning. These arise from violations of the Prime directive on fine-tuning: “Thou shalt not claim fine-tuning of dependent quantities one against another!” HB, Barger, Mickelson, Padeffke-Kirkland, arXiv:1404.2277

Is $\mathcal{O} = \mathcal{O} + b - b$ fine-tuned for $b > \mathcal{O}$?

Reminder:

![Naturally Speaking: The Naturalness Criterion and Physics at the LHC](https://docslib.b-cdn.net/cover/2314/arxiv-0801-2562v2-hep-ph-2262314.webp)

Naturally Speaking: The Naturalness Criterion and Physics at the LHC

Gian Francesco Giudice, CERN, Theoretical Physics Division, Geneva, Switzerland

arXiv:0801.2562v2 [hep-ph] 30 Mar 2008

1 Naturalness in Scientific Thought

“Everything is natural: if it weren’t, it wouldn’t be.” Mary Catherine Bateson [1]

Almost every branch of science has its own version of the “naturalness criterion”. In environmental sciences, it refers to the degree to which an area is pristine, free from human influence, and characterized by native species [2]. In mathematics, its meaning is associated with the intuitiveness of certain fundamental concepts, viewed as an intrinsic part of our thinking [3]. One can find the use of naturalness criterions in computer science (as a measure of adaptability), in agriculture (as an acceptable level of product manipulation), in linguistics (as translation quality assessment of sentences that do not reflect the natural and idiomatic forms of the receptor language). But certainly nowhere else but in particle physics has the mutable concept of naturalness taken a form which has become so influential in the development of the field.

The role of naturalness in the sense of “æsthetic beauty” is a powerful guiding principle for physicists as they try to construct new theories. This may appear surprising since the final product is often a mathematically sophisticated theory based on deep fundamental principles, and one could believe that subjective æsthetic arguments have no place in it. Nevertheless, this is not true and often theoretical physicists formulate their theories inspired by criteria of simplicity and beauty, i.e. by what Nelson [4] defines as “structural naturalness”.