The Maxwell Relations

Recommended publications

ENERGY, ENTROPY, and INFORMATION Jean Thoma June

ENERGY, ENTROPY, AND INFORMATION. Jean Thoma, June 1977. Research Memoranda are interim reports on research being conducted by the International Institute for Applied Systems Analysis, and as such receive only limited scientific review. Views or opinions contained herein do not necessarily represent those of the Institute or of the National Member Organizations supporting the Institute.

PREFACE: This Research Memorandum contains the work done during the stay of Professor Dr.Sc. Jean Thoma, Zug, Switzerland, at IIASA in November 1976. It is based on extensive discussions with Professor Häfele and other members of the Energy Program. Although the content of this report is not yet very uniform because of the different starting points on the subject under consideration, its publication is considered a necessary step in fostering the related discussion at IIASA revolving around the problem of energy demand.

ABSTRACT: Thermodynamical considerations of energy and entropy are being pursued in order to arrive at a general starting point for relating entropy, negentropy, and information. Thus one hopes to ultimately arrive at a common denominator for quantities of a more general nature, including economic parameters. The report closes with the description of various heating applications and related efficiencies. Such considerations are important in order to understand in greater depth the nature and composition of energy demand. This may be highlighted by the observation that it is, of course, not the energy that is consumed or demanded but the information that goes along with it.

TABLE OF CONTENTS: 1. Introduction; 2. Various Aspects of Entropy; 2.1 Phenomenological Entropy

Lecture 4: 09.16.05 Temperature, Heat, and Entropy

3.012 Fundamentals of Materials Science, Fall 2005. Lecture 4: 09.16.05 Temperature, heat, and entropy. Today:
• Last time: State functions; Path dependent variables: heat and work
• Defining temperature: The zeroth law of thermodynamics; The absolute temperature scale
• Consequences of the relation between temperature, heat, and entropy: heat capacity: The difference between heat and temperature; Defining heat capacity

Chapter 3. Second and Third Law of Thermodynamics

Chapter 3. Second and third law of thermodynamics

Important concepts (Entropy; Gibbs free energy): • Entropy (S): definitions • Entropy changes in reversible and irreversible processes • Entropy of mixing of ideal gases • 2nd law of thermodynamics • 3rd law of thermodynamics • Free energy • Maxwell relations • Dependence of free energy on P, V, T • Thermodynamic functions of mixtures • Partial molar quantities and chemical potential

Review: Law of corresponding states (ch 1 notes): reduced pressure, temperatures, volumes. Math: Numerical integration by computer (trapezoidal integration, https://en.wikipedia.org/wiki/Trapezoidal_rule); Properties of partial differential equations; Rules for inequalities

Major concept review:
• Adiabats vs. isotherms; for a reversible adiabat of an ideal gas, $p_1V_1^{\gamma} = p_2V_2^{\gamma}$ with $\gamma = C_p/C_V$, and $V_1T_1^{c} = V_2T_2^{c}$ with $c = C_{V,m}/R$
• Sign convention for work and heat: w done on system, q supplied to system: +; w done by system, q removed from system: −
• Joule-Thomson expansion ($\Delta H = 0$); Joule-Thomson coefficient $\mu_{JT} = \left(\frac{\partial T}{\partial p}\right)_H = \frac{T(\partial V/\partial T)_p - V}{C_p}$; inversion temperature
• State variables depend on final and initial state, not on path
• Reversible change occurs in a series of equilibrium states
• Adiabatic: q = 0; isothermal: ΔT = 0
• Equations of state for enthalpy, H, and internal energy, U
• Formation reaction; enthalpies of reaction; Hess's law, $\Delta_{rxn}H = \sum_i \nu_i \Delta_f H_i$; other changes, $\Delta_{rxn}H(T) = \Delta_{rxn}H(T_{ref}) + \int_{T_{ref}}^{T} \Delta_{rxn}C_p\,dT$
• Calorimetry

Spontaneous and nonspontaneous changes. First Law: when one form of energy is converted to another, the total energy in the universe is conserved.
• Does not give any other restriction on a process
• But many processes have a natural direction
Examples:
• gas expands into a vacuum, not the reverse
• can burn paper; can't unburn paper
• heat never flows spontaneously from cold to hot
Changes that proceed in the natural direction are called spontaneous changes; their reverses are nonspontaneous.
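The notes pair numerical integration by computer (the trapezoidal rule) with the temperature dependence of reaction enthalpies. A minimal sketch, assuming a made-up reference enthalpy and a made-up linear $\Delta_{rxn}C_p(T)$ chosen only for illustration (not data from the notes), applies the composite trapezoidal rule to $\Delta_{rxn}H(T) = \Delta_{rxn}H(T_{ref}) + \int_{T_{ref}}^{T} \Delta_{rxn}C_p\,dT$:

```python
def trapezoid(f, a, b, n=1000):
    """Composite trapezoidal rule: approximate the integral of f from a to b with n panels."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


def reaction_enthalpy(dH_ref, dCp, T_ref, T, n=1000):
    """ΔrxnH(T) = ΔrxnH(T_ref) + integral of ΔrxnCp from T_ref to T (via trapezoid())."""
    return dH_ref + trapezoid(dCp, T_ref, T, n)


# Illustrative (made-up) data: ΔrxnH(298.15 K) = -100 kJ/mol and
# ΔrxnCp(T) = 10 + 0.02 T in J/(mol K).
dH_500 = reaction_enthalpy(-100_000.0, lambda T: 10.0 + 0.02 * T, 298.15, 500.0)
print(f"{dH_500:.2f} J/mol")  # -96370.43 J/mol
```

Because the assumed $\Delta_{rxn}C_p$ is linear in $T$, the trapezoidal rule is exact here apart from rounding; for tabulated, curved $C_p$ data the panel count `n` controls the error.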

A Simple Method to Estimate Entropy and Free Energy of Atmospheric Gases from Their Action

Article: A Simple Method to Estimate Entropy and Free Energy of Atmospheric Gases from Their Action. Ivan Kennedy 1,2,*, Harold Geering 2, Michael Rose 3 and Angus Crossan 2. 1 Sydney Institute of Agriculture, University of Sydney, NSW 2006, Australia; 2 QuickTest Technologies, PO Box 6285 North Ryde, NSW 2113, Australia; 3 NSW Department of Primary Industries, Wollongbar NSW 2447, Australia. * Correspondence (Tel.: +61-4-0794-9622). Received: 23 March 2019; Accepted: 26 April 2019; Published: 1 May 2019.

Abstract: A convenient practical model for accurately estimating the total entropy (ΣS_i) of atmospheric gases based on physical action is proposed. This realistic approach is fully consistent with statistical mechanics, but reinterprets its partition functions as measures of translational, rotational, and vibrational action or quantum states, to estimate the entropy. With all kinds of molecular action expressed as logarithmic functions, the total heat required for warming a chemical system from 0 K to a given temperature and pressure (ΣS_iT) can be computed, yielding results identical with published experimental third-law values of entropy. All thermodynamic properties of gases, including entropy, enthalpy, Gibbs energy, and Helmholtz energy, are directly estimated using simple algorithms based on simple molecular and physical properties, without recourse to tables of standard values; both free energies are measures of quantum field states and of minimal statistical degeneracy, decreasing with temperature and declining density. We propose that this more realistic approach has heuristic value for thermodynamic computation of atmospheric profiles, based on steady-state heat flows equilibrating with gravity.

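$\frac{\partial}{\partial V}\left(\frac{\partial U}{\partial T}\right)_V = \left(\frac{\partial C_V}{\partial V}\right)_T = 0$, Which Shows That $C_V$ Is Independent of $V$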

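so we have

$\frac{\partial}{\partial T}\left(\frac{\partial U}{\partial V}\right)_T = 0 \;\Rightarrow\; \frac{\partial}{\partial V}\left(\frac{\partial U}{\partial T}\right)_V = \left(\frac{\partial C_V}{\partial V}\right)_T = 0,$

which shows that $C_V$ is independent of $V$.

4. Using Maxwell's relations. Show that $(\partial H/\partial p)_T = V - T(\partial V/\partial T)_p$.

Start with $dH = T\,dS + V\,dp$. Now divide by $dp$, holding $T$ constant:

$\left(\frac{dH}{dp}\right)_{T} = \left(\frac{\partial H}{\partial p}\right)_T = T\left(\frac{\partial S}{\partial p}\right)_T + V.$

Use the Maxwell relation (Table 9.1 of the text), $\left(\frac{\partial S}{\partial p}\right)_T = -\left(\frac{\partial V}{\partial T}\right)_p$, to get the result

$\left(\frac{\partial H}{\partial p}\right)_T = -T\left(\frac{\partial V}{\partial T}\right)_p + V.$

5. Pressure dependence of the heat capacity. (a) Show that, in general, for quasi-static processes,

$\left(\frac{\partial C_p}{\partial p}\right)_T = -T\left(\frac{\partial^2 V}{\partial T^2}\right)_p.$

(b) Based on (a), show that $(\partial C_p/\partial p)_T = 0$ for an ideal gas.

(a) Begin with the definition of the heat capacity, $C_p = \left(\frac{\delta q}{dT}\right)_p = T\left(\frac{\partial S}{\partial T}\right)_p$, for a quasi-static process. Take the derivative:

$\left(\frac{\partial C_p}{\partial p}\right)_T = T\frac{\partial^2 S}{\partial p\,\partial T} = T\frac{\partial^2 S}{\partial T\,\partial p} \qquad (1)$

since $S$ is a state function. Substitute the Maxwell relation $\left(\frac{\partial S}{\partial p}\right)_T = -\left(\frac{\partial V}{\partial T}\right)_p$ into Equation (1) to get

$\left(\frac{\partial C_p}{\partial p}\right)_T = -T\left(\frac{\partial^2 V}{\partial T^2}\right)_p.$

(b) For an ideal gas, $V(T) = NkT/p$, so $\left(\frac{\partial V}{\partial T}\right)_p = \frac{Nk}{p}$ and $\left(\frac{\partial^2 V}{\partial T^2}\right)_p = 0$, and therefore, from part (a), $\left(\frac{\partial C_p}{\partial p}\right)_T = 0$.

(a) $dU = T\,dS - P\,dV + \mu\,dN + F\,dx$ and $dG = -S\,dT + V\,dP + F\,dx$, so $F = \left(\frac{\partial G}{\partial x}\right)_{T,P}$ and $G(x) = \int (aT + b)x\,dx = \frac{1}{2}(aT + b)x^2$.

(b) $\left(\frac{\partial S}{\partial x}\right)_{T,P} = -\left(\frac{\partial F}{\partial T}\right)_{x,P}$.

(c) $\left(\frac{\partial S}{\partial x}\right)_{T,P} = -\left(\frac{\partial F}{\partial T}\right)_{x,P} = -ax$, so $S(x) = -\int ax\,dx = -\frac{1}{2}ax^2$.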
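The two Maxwell-relation results in problems 4 and 5 can be checked numerically. A minimal sketch, assuming a hypothetical per-mole equation of state $V = RT/p + b$ (not from the text; $b$ is an illustrative constant), evaluates $(\partial H/\partial p)_T = V - T(\partial V/\partial T)_p$ and $(\partial C_p/\partial p)_T = -T(\partial^2 V/\partial T^2)_p$ with central finite differences. For this equation of state the first should come out equal to $b$ and the second zero; setting $b = 0$ recovers the ideal-gas result of part (b).

```python
R = 8.314  # gas constant, J/(mol K)


def molar_volume(T, p, b=3.0e-5):
    """Hypothetical equation of state V = RT/p + b (b in m^3/mol is illustrative)."""
    return R * T / p + b


def dV_dT(V, T, p, h=1e-3):
    """(dV/dT)_p by central difference."""
    return (V(T + h, p) - V(T - h, p)) / (2 * h)


def d2V_dT2(V, T, p, h=1e-2):
    """(d^2V/dT^2)_p by central second difference."""
    return (V(T + h, p) - 2 * V(T, p) + V(T - h, p)) / h**2


def dH_dp(V, T, p):
    """(dH/dp)_T = V - T (dV/dT)_p, from dH = T dS + V dp plus the Maxwell relation."""
    return V(T, p) - T * dV_dT(V, T, p)


def dCp_dp(V, T, p):
    """(dCp/dp)_T = -T (d^2V/dT^2)_p."""
    return -T * d2V_dT2(V, T, p)


T, p = 300.0, 1.0e5  # K, Pa
print(dH_dp(molar_volume, T, p))   # close to b = 3e-05 m^3/mol
print(dCp_dp(molar_volume, T, p))  # close to 0
ideal = lambda T, p: molar_volume(T, p, b=0.0)
print(dH_dp(ideal, T, p))          # close to 0 for the ideal gas
```

Finite differences are used so the check does not depend on any symbolic-algebra package; any other equation of state `V(T, p)` can be passed in the same way.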

Thermodynamics

ME346A Introduction to Statistical Mechanics, Wei Cai, Stanford University, Winter 2011
Handout 6. Thermodynamics, January 26, 2011

Contents
1 Laws of thermodynamics: 1.1 The zeroth law; 1.2 The first law; 1.3 The second law (1.3.1 Efficiency of Carnot engine; 1.3.2 Alternative statements of the second law); 1.4 The third law
2 Mathematics of thermodynamics: 2.1 Equation of state; 2.2 Gibbs-Duhem relation (2.2.1 Homogeneous function; 2.2.2 Virial theorem / Euler theorem); 2.3 Maxwell relations; 2.4 Legendre transform; 2.5 Thermodynamic potentials
3 Worked examples: 3.1 Thermodynamic potentials and Maxwell's relation; 3.2 Properties of ideal gas; 3.3 Gas expansion
4 Irreversible processes: 4.1 Entropy and irreversibility; 4.2 Variational statement of second law

In the 1st lecture, we will discuss the concepts of thermodynamics, namely its 4 laws. The most important concepts are the second law and the notion of entropy. (Reading assignment: Reif §3.10, 3.11)

In the 2nd lecture, we will discuss the mathematics of thermodynamics, i.e., the machinery to make quantitative predictions. We will deal with partial derivatives and Legendre transforms. (Reading assignment: Reif §4.1-4.7, 5.1-5.12)

1 Laws of thermodynamics
Thermodynamics is a branch of science connected with the nature of heat and its conversion to mechanical, electrical and chemical energy. (The Webster pocket dictionary defines thermodynamics as the physics of heat.) Historically, it grew out of efforts to construct more efficient heat engines, devices for extracting useful work from expanding hot gases (http://www.answers.com/thermodynamics).

7 Apr 2021 Thermodynamic Response Functions . L03–1 Review Of

7 Apr 2021, Thermodynamic Response Functions, L03-1
Review of Thermodynamics. 3: Second-Order Quantities and Relationships

Maxwell Relations
• Idea: Each thermodynamic potential gives rise to several identities among its second derivatives, known as Maxwell relations, which express the integrability of the fundamental identity of thermodynamics for that potential, or equivalently the fact that the potential really is a thermodynamical state function.
• Example: From the fundamental identity of thermodynamics written in terms of the Helmholtz free energy, $dF = -S\,dT - p\,dV + \ldots$, the fact that $dF$ really is the differential of a state function implies that $\frac{\partial}{\partial V}\frac{\partial F}{\partial T} = \frac{\partial}{\partial T}\frac{\partial F}{\partial V}$, or $\left(\frac{\partial S}{\partial V}\right)_{T,N} = \left(\frac{\partial p}{\partial T}\right)_{V,N}$.
• Other Maxwell relations: From the same identity, if $F = F(T, V, N)$ we get two more relations. Other potentials and/or pairs of variables can be used to obtain additional relations. For example, from the Gibbs free energy and the identity $dG = -S\,dT + V\,dp + \mu\,dN$, we get three relations, including $\frac{\partial}{\partial p}\frac{\partial G}{\partial T} = \frac{\partial}{\partial T}\frac{\partial G}{\partial p}$, or $\left(\frac{\partial S}{\partial p}\right)_{T,N} = -\left(\frac{\partial V}{\partial T}\right)_{p,N}$.
• Applications: Some measurable quantities, response functions such as heat capacities and compressibilities, are second-order thermodynamical quantities (i.e., their definitions contain derivatives up to second order of thermodynamic potentials), and the Maxwell relations provide useful equations among them.

Heat Capacities
• Definitions: A heat capacity is a response function expressing how much a system's temperature changes when heat is transferred to it, or equivalently how much $\delta Q$ is needed to obtain a given $dT$. The general definition is $C = \delta Q/dT$, where for any reversible transformation $\delta Q = T\,dS$, but the value of this quantity depends on the details of the transformation.
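To see the Helmholtz-based Maxwell relation at work, the sketch below differentiates an assumed free energy numerically. It uses a monatomic ideal-gas form $F(T,V) = -NkT[\ln V + \tfrac{3}{2}\ln T + C_0]$ in reduced units with $Nk = 1$, $N$ fixed, and an arbitrary constant $C_0$ (all assumptions for illustration), obtains $S = -(\partial F/\partial T)_V$ and $p = -(\partial F/\partial V)_T$ by central differences, and compares the two sides of $(\partial S/\partial V)_{T,N} = (\partial p/\partial T)_{V,N}$. Both should approach $Nk/V$.

```python
import math

NK = 1.0  # N k_B in reduced units (assumption for the sketch)
C0 = 2.5  # arbitrary additive constant (assumption); it cancels in the check


def helmholtz(T, V):
    """Assumed monatomic ideal-gas form F(T, V) = -Nk T [ln V + (3/2) ln T + C0], N fixed."""
    return -NK * T * (math.log(V) + 1.5 * math.log(T) + C0)


def entropy(T, V, h=1e-5):
    """S = -(dF/dT)_V by central difference."""
    return -(helmholtz(T + h, V) - helmholtz(T - h, V)) / (2 * h)


def pressure(T, V, h=1e-5):
    """p = -(dF/dV)_T by central difference."""
    return -(helmholtz(T, V + h) - helmholtz(T, V - h)) / (2 * h)


def maxwell_sides(T, V, h=1e-3):
    """Return ((dS/dV)_T, (dp/dT)_V); they agree when F is a state function."""
    dS_dV = (entropy(T, V + h) - entropy(T, V - h)) / (2 * h)
    dp_dT = (pressure(T + h, V) - pressure(T - h, V)) / (2 * h)
    return dS_dV, dp_dT


lhs, rhs = maxwell_sides(T=2.0, V=3.0)
print(lhs, rhs)  # both close to Nk/V = 1/3
```

The agreement reflects the equality of mixed second derivatives of $F$; swapping in any other smooth `helmholtz(T, V)` should give the same equality, while the common value changes.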

Lecture 6: Entropy

Matthew Schwartz, Statistical Mechanics, Spring 2019. Lecture 6: Entropy

1 Introduction
In this lecture, we discuss many ways to think about entropy. The most important and most famous property of entropy is that it never decreases:
$\Delta S_{tot} \geq 0 \qquad (1)$
Here, $\Delta S_{tot}$ means the change in entropy of a system plus the change in entropy of the surroundings. This is the second law of thermodynamics that we met in the previous lecture. There's a great quote from Sir Arthur Eddington from 1927 summarizing the importance of the second law:
"If someone points out to you that your pet theory of the universe is in disagreement with Maxwell's equations, then so much the worse for Maxwell's equations. If it is found to be contradicted by observation, well, these experimentalists do bungle things sometimes. But if your theory is found to be against the second law of thermodynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation."
Another possibly relevant quote, from the introduction to the statistical mechanics book by David Goodstein:
"Ludwig Boltzmann, who spent much of his life studying statistical mechanics, died in 1906, by his own hand. Paul Ehrenfest, carrying on the work, died similarly in 1933. Now it is our turn to study statistical mechanics."
There are many ways to define entropy. All of them are equivalent, although it can be hard to see. In this lecture we will compare and contrast different definitions, building up intuition for how to think about entropy in different contexts. The original definition of entropy, due to Clausius, was thermodynamic.

Thermodynamics

...Thermodynamics (October 14, 2019)
Entropy: the state function for the Second Law
• Entropy: $\int dS = \int \bar{d}Q/T$
• Central equation: $dU = T\,dS - P\,dV$
• Ideal gas entropy: $\Delta s = c_v \ln(T/T_0) + R\ln(v/v_0)$
• Boltzmann entropy: $S = k\log W$
• Statistical entropy: $S = -k_B\sum_i p_i \ln p_i$
Fewer entropy with chickens. Counting things: You can't count a continuum. This includes states which may never be actually realised. This is where the ideal gas entropy breaks down. Boltzmann's entropy requires quantum mechanics.
Equilibrium and the thermodynamic potentials. Second Law: entropy increases in any isolated system. Entropy of system+surroundings must increase. A system can reduce its entropy, provided the entropy of the surroundings increases by more.
Equilibrium and boundary conditions. Main postulate of thermodynamics: systems tend to "equilibrium". Equilibrium depends on boundary conditions.
A function for every boundary condition. Another statement of the 2nd Law: system+surroundings maximise S. Compare with mechanical equilibrium: system minimises energy. In thermodynamic equilibrium, system minimises ... free energy.
Free energy. Willard Gibbs (1839-1903): A Method of Geometrical Representation of the Thermodynamic Properties of Substances by Means of Surfaces, 1873. Equation of state plotted as a surface of energy vs T and P. Volume, entropy are slopes of this surface. Heat capacity, compressibility are curvatures.
Surfaces and slopes: not like this. Surfaces and slopes: more like something from Lovecraft. H. P. Lovecraft, 1928, "The Call of Cthulhu". PV or TS "space" is completely non-euclidean.
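The slide's entropy formulas can be compared directly. A minimal Python sketch (working in units of $k_B = 1$ by default, an assumption for readability, and reading "log" as the natural logarithm) shows that the statistical entropy $-k_B\sum_i p_i\ln p_i$ reduces to Boltzmann's $k\log W$ when all $W$ states are equally likely, is smaller for a non-uniform distribution over the same number of states, and evaluates the per-mole ideal-gas $\Delta s$:

```python
import math


def gibbs_entropy(probs, k=1.0):
    """Statistical entropy S = -k sum_i p_i ln p_i; states with p_i = 0 contribute nothing."""
    return -k * sum(p * math.log(p) for p in probs if p > 0)


def boltzmann_entropy(W, k=1.0):
    """Boltzmann entropy S = k ln W for W equally likely microstates."""
    return k * math.log(W)


def ideal_gas_delta_s(T0, T, v0, v, cv, R=8.314):
    """Per-mole ideal-gas entropy change: cv ln(T/T0) + R ln(v/v0), in J/(mol K)."""
    return cv * math.log(T / T0) + R * math.log(v / v0)


W = 8
print(gibbs_entropy([1.0 / W] * W), boltzmann_entropy(W))  # both ≈ ln 8 ≈ 2.0794
print(gibbs_entropy([0.5, 0.25, 0.25]) < boltzmann_entropy(3))  # True
# Isothermal doubling of the molar volume: Δs = R ln 2
print(ideal_gas_delta_s(300.0, 300.0, 1.0, 2.0, cv=1.5 * 8.314))
```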

Thermodynamic Potentials

THERMODYNAMIC POTENTIALS, PHASE TRANSITION AND LOW TEMPERATURE PHYSICS
Syllabus: Unit II - Thermodynamic potentials: Internal energy; Enthalpy; Helmholtz free energy; Gibbs free energy and their significance; Maxwell's thermodynamic relations (using thermodynamic potentials) and their significance; TdS relations; Energy equations and heat capacity equations; Third law of thermodynamics (Nernst heat theorem)

Thermodynamics deals in general with the conversion of heat energy to other forms of energy or vice versa. A thermodynamic system is the quantity of matter under study, which is in general macroscopic in nature. Examples: gas, vapour, vapour in contact with liquid, etc. The thermodynamic state or condition of a system is described by macroscopic physical quantities like pressure (P), volume (V), temperature (T) and entropy (S). The physical quantities like P, V, T and S are called thermodynamic variables. For a simple system of fixed composition, any two of these variables can be chosen as independent variables, and the rest are then dependent variables. A general relation between the thermodynamic variables is called an equation of state. The relations between the variables that describe a thermodynamic system are given by the first and second laws of thermodynamics.

According to the first law of thermodynamics, when a substance absorbs an amount of heat dQ at constant pressure P, its internal energy increases by dU and the substance does work dW by increasing its volume by dV. Mathematically,
$dQ = dU + dW = dU + P\,dV \qquad (1)$
If a substance absorbs an amount of heat dQ at a temperature T and all changes that take place are perfectly reversible, then the change in entropy from the second law of thermodynamics is
$dS = \frac{dQ}{T} \quad \text{or} \quad dQ = T\,dS \qquad (2)$
From equations (1) and (2),
$T\,dS = dU + P\,dV$
This is the basic equation that connects the first and second laws of thermodynamics.
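Because $T\,dS = dU + P\,dV$ expresses $dS$ in terms of state functions, the entropy change between two states should not depend on the path taken. A minimal sketch, assuming one mole of a monatomic ideal gas ($dU = nC_V\,dT$ with $C_V = \tfrac{3}{2}R$ and $P = nRT/V$; these are assumptions, not stated in the syllabus excerpt), integrates $dS = (dU + P\,dV)/T$ with the midpoint rule along two different paths and compares both to $nC_V\ln(T_2/T_1) + nR\ln(V_2/V_1)$:

```python
import math

R = 8.314  # gas constant, J/(mol K)


def delta_S_along(path, n_mol, Cv, steps=20000):
    """Integrate dS = (dU + P dV)/T along path(t) -> (T, V), t in [0, 1].

    Assumes an ideal gas: dU = n Cv dT and P = n R T / V. Uses the midpoint rule.
    """
    total = 0.0
    for i in range(steps):
        T0, V0 = path(i / steps)
        T1, V1 = path((i + 1) / steps)
        Tm, Vm = 0.5 * (T0 + T1), 0.5 * (V0 + V1)
        dU = n_mol * Cv * (T1 - T0)
        P = n_mol * R * Tm / Vm
        total += (dU + P * (V1 - V0)) / Tm
    return total


def straight(T1, V1, T2, V2):
    """Straight line in the (T, V) plane."""
    return lambda t: (T1 + (T2 - T1) * t, V1 + (V2 - V1) * t)


def two_leg(T1, V1, T2, V2):
    """Heat at constant V1 up to T2, then expand at constant T2 to V2."""
    def path(t):
        if t <= 0.5:
            return T1 + (T2 - T1) * 2 * t, V1
        return T2, V1 + (V2 - V1) * (2 * t - 1)
    return path


n, Cv = 1.0, 1.5 * R                        # one mole, monatomic (assumed)
T1, V1, T2, V2 = 300.0, 0.01, 600.0, 0.03   # K, m^3
exact = n * Cv * math.log(T2 / T1) + n * R * math.log(V2 / V1)
print(delta_S_along(straight(T1, V1, T2, V2), n, Cv), exact)
print(delta_S_along(two_leg(T1, V1, T2, V2), n, Cv), exact)
```

Both paths should agree with the closed form to within the small midpoint-rule error; the heat $\int dQ$ along the two paths, by contrast, differs, which is the distinction between a state function and a path-dependent quantity.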

Where and How Is Entropy Generated in Solar Energy

Where and How is Entropy Generated in Solar Energy Conversion Systems? Bolin Liao 1,*. 1 Department of Mechanical Engineering, University of California, Santa Barbara, CA, 93106, USA. * To whom correspondence should be addressed.

Abstract: The hotness of the sun and the coldness of outer space are inexhaustible thermodynamic resources for human beings. From a thermodynamic point of view, any energy conversion system that receives energy from the sun and/or dissipates energy to the universe is a heat engine with photons as the "working fluid" and can be analyzed using the concept of entropy. While entropy analysis provides a particularly convenient way to understand the efficiency limits, it is typically taught in the context of thermodynamic cycles among quasi-equilibrium states, and its generalization to solar energy conversion systems running in a continuous and non-equilibrium fashion is not straightforward. In this educational article, we present a few examples to illustrate how the concept of photon entropy, combined with the radiative transfer equation, can be used to analyze the local entropy generation processes and the efficiency limits of different solar energy conversion systems. We provide explicit calculations for the local and total entropy generation rates for simple emitters and absorbers, as well as photovoltaic cells, which can be readily reproduced by students. We further discuss the connection between the entropy generation and the device efficiency, particularly the exact spectral matching condition that is shared by infinite-junction photovoltaic cells and reversible thermoelectric materials to approach their theoretical efficiency limit.

I. Introduction
In the context of radiative energy transfer, the Sun can be approximated as a blackbody at a temperature of 6000 K [1], and outer space is filled with the cosmic microwave background at an effective temperature of 2.7 K.
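As a rough orientation for the efficiency limits the abstract mentions, the Carnot bound $1 - T_{cold}/T_{hot}$ can be evaluated for the two temperatures quoted in the introduction. This sketch treats the sun and space as ideal heat reservoirs and ignores the entropy carried by the radiation itself, which the article's photon-entropy analysis accounts for, so it is only an upper bound and not the article's result. The 300 K terrestrial sink in the second call is an assumption added for comparison.

```python
def carnot_efficiency(T_hot, T_cold):
    """Carnot bound 1 - T_cold/T_hot for an engine between two ideal reservoirs (K)."""
    if not 0.0 < T_cold < T_hot:
        raise ValueError("need 0 < T_cold < T_hot, in kelvin")
    return 1.0 - T_cold / T_hot


# Temperatures quoted in the introduction: sun ~6000 K, space 2.7 K.
print(round(carnot_efficiency(6000.0, 2.7), 5))    # 0.99955
# Rejecting heat at an assumed terrestrial 300 K instead of to space:
print(round(carnot_efficiency(6000.0, 300.0), 3))  # 0.95
```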

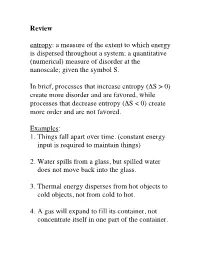

Review Entropy: a Measure of the Extent to Which Energy Is Dispersed

Review
entropy: a measure of the extent to which energy is dispersed throughout a system; a quantitative (numerical) measure of disorder at the nanoscale; given the symbol S. In brief, processes that increase entropy (ΔS > 0) create more disorder and are favored, while processes that decrease entropy (ΔS < 0) create more order and are not favored.
Examples:
1. Things fall apart over time (constant energy input is required to maintain things).
2. Water spills from a glass, but spilled water does not move back into the glass.
3. Thermal energy disperses from hot objects to cold objects, not from cold to hot.
4. A gas will expand to fill its container, not concentrate itself in one part of the container.
Chemistry 103, Spring 2011
Energy disperses (spreads out) over a larger number of particles. Ex: exothermic reaction, hot object losing thermal energy to cold object.
Energy disperses over a larger space (volume) by particles moving to occupy more space. Ex: water spilling, gas expanding.
Consider gas, liquid, and solid, Fig. 17.2, p. 618.
Example: Predict whether the entropy increases, decreases, or stays about the same for the process: 2 CO2(g) → 2 CO(g) + O2(g).
Practice: Predict whether ΔS > 0, ΔS < 0, or ΔS ≈ 0 for: NaCl(s) → NaCl(aq).
Guidelines on pp. 617-618 summarize some important factors when considering entropy.
Measuring and calculating entropy
At absolute zero, 0 K (−273.15 °C), a perfect crystal of a pure substance has zero entropy (S = 0). At 0 K, thermal motion ceases, so no energy dispersal occurs.
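One common rule of thumb for these prediction exercises is to compare moles of gas on each side of the equation, since gases have far higher molar entropies than solids or liquids. The sketch below encodes only that single heuristic (a simplification, not the full set of textbook guidelines cited above; the dictionary layout is a hypothetical choice for illustration) and returns "unclear" when the gas count does not change, as for dissolving NaCl, where other factors decide:

```python
def gas_moles(side):
    """Sum stoichiometric coefficients of gaseous species.

    `side` maps a species name to (coefficient, phase), e.g. {"O2": (1, "g")}.
    This data layout is an assumption made for the sketch.
    """
    return sum(coef for coef, phase in side.values() if phase == "g")


def predict_entropy_change(reactants, products):
    """Heuristic only: an increase in moles of gas usually means ΔS > 0."""
    dn = gas_moles(products) - gas_moles(reactants)
    if dn > 0:
        return "increase"
    if dn < 0:
        return "decrease"
    return "unclear"


# 2 CO2(g) -> 2 CO(g) + O2(g): 2 mol of gas become 3 mol of gas
print(predict_entropy_change({"CO2": (2, "g")},
                             {"CO": (2, "g"), "O2": (1, "g")}))  # increase
# NaCl(s) -> NaCl(aq): no gas on either side
print(predict_entropy_change({"NaCl": (1, "s")}, {"NaCl": (1, "aq")}))  # unclear
```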