An Improved Multi-Objective Evolutionary Algorithm with Adaptable Parameters

Khoa Duc Tran, Nova Southeastern University, [email protected]

Total Page:16

File Type:pdf, Size:1020Kb


Earth-717: Avengers Vol 1 Chapter 10: Suicide Mission “My, My, My! to Have So Many of Our Friends Together in the Same Place!

Earth-717: Avengers Vol 1 Chapter 10: Suicide Mission “My, my, my! To have so many of our friends together in the same place! I know that it is under quite distressing circumstances, but still, it's wonderful to have such a congregation!” Steve, Tasha, Thor, Bruce, Carol, Reed, Susan, Johnny, Ben and Herbie were all in one of the hangars on board the Valiant. The Rogue One had been moved from the Senatorium to the Valiant earlier that day, and Tasha had finished installing her new upgrade to the ship. Hundreds of other Nova pilots and officers were moving around the hangar, preparing for the battle ahead. Herbie bounced up and down as he looked around at the group. “To see the Fantastic Four ready to go into battle alongside such brave and noble heroes like yourselves! It is truly remarkable, is it not, Doctor Richards?” “It sure is,” said Reed. “You've got quite a team.” “Put it together at the last minute,” said Tasha. “Since somebody decided that they wanted an interstellar vacation at the worst possible time.” “Hey!” said Johnny. “Wasn't my fault! Blame these guys! I just went along for the ride!” Ben gave Johnny a light smack on the back of the head. “Nobody asked you, junior.” Johnny grumbled as he tried to fix his hair. Reed laughed before looking back Steve. “Don't suppose you'd like to tell me how I'm standing across from Captain America?” “I guess that a man of science like yourself would be interested in that sort of thing,” said Steve. -

Brad's September Newsletter

Wagon Days and September Events September is here and August has come to an end, but this doesn’t mean that Sun Valley’s lineup of summer events is over! September brings the Big Hitch Parade, antique fairs and phenomenal performances throughout our Valley. Wagon Days starts off with the Cowboy Poets Recital at the Ore Wagon Museum on Sept. 2nd at 11:00 am and culminates with the iconic Big Hitch Parade at 1:00 pm on Sept. 3rd, the largest non- motorized parade in the west. See over one hundred museum quality wagons, stage coaches, buggies, carriages and the six enormous Lewis Ore Wagons, pulled by a 20 mule jerkline. Antique fairs will also abound throughout the Valley from Sept. 2nd - 5th, at these locations: Hailey’s Antique Market, at Roberta McKercher Park, Hailey Sept 1 - 4 all day Ketchum Antique and Art Show, nexStage Theater, 120 n Main Street, Sept 2 - 4 all day. The California-based rock group Lukas Nelson and the Promise of the Real will conclude Sun Valley’s 2016 summer concert series on Labor Day, Sept. 5th. The show will open at the Sun Valley Pavilion at 6:00pm, with tickets ranging from $25 for the lawn to $100 for pavilion seats. The Sun Valley Center for the Arts will begin its 2016-17 performing arts series starting September 16th with award winning cabaret performer, Sharron Matthews. Her performance spans a mix of storytelling and mash-up pop songs. Her 2010 “Sharron Matthews Superstar: World Domination Tour 2010” was named “#1 Cabaret in New York City’ by Nightlife Exchange and WPAT Radio, and she was also nominated Touring Artist of the Year by the BC touring council in 2015. -

Club Add 2 Page Designoct07.Pub

H M. ADVS. HULK V. 1 collects #1-4, $7 H M. ADVS FF V. 7 SILVER SURFER collects #25-28, $7 H IRR. ANT-MAN V. 2 DIGEST collects #7-12,, $10 H POWERS DEF. HC V. 2 H ULT FF V. 9 SILVER SURFER collects #12-24, $30 collects #42-46, $14 H C RIMINAL V. 2 LAWLESS H ULTIMATE VISON TP collects #6-10, $15 collects #0-5, $15 H SPIDEY FAMILY UNTOLD TALES H UNCLE X-MEN EXTREMISTS collects Spidey Family $5 collects #487-491, $14 Cut (Original Graphic Novel) H AVENGERS BIZARRE ADVS H X-MEN MARAUDERS TP The latest addition to the Dark Horse horror line is this chilling OGN from writer and collects Marvel Advs. Avengers, $5 collects #200-204, $15 Mike Richardson (The Secret). 20-something Meagan Walters regains consciousness H H NEW X-MEN v5 and finds herself locked in an empty room of an old house. She's bleeding from the IRON MAN HULK back of her head, and has no memory of where the wound came from-she'd been at a collects Marvel Advs.. Hulk & Tony , $5 collects #37-43, $18 club with some friends . left angrily . was she abducted? H SPIDEY BLACK COSTUME H NEW EXCALIBUR V. 3 ETERNITY collects Back in Black $5 collects #16-24, $25 (on-going) H The End League H X-MEN 1ST CLASS TOMORROW NOVA V. 1 ANNIHILATION A thematic merging of The Lord of the Rings and Watchmen, The End League follows collects #1-8, $5 collects #1-7, $18 a cast of the last remaining supermen and women as they embark on a desperate and H SPIDEY POWER PACK H HEROES FOR HIRE V. -

![[N 1] Adrian Toomes and His Salvage Company Are Contracted](https://docslib.b-cdn.net/cover/7225/n-1-adrian-toomes-and-his-salvage-company-are-contracted-357225.webp)

[N 1] Adrian Toomes and His Salvage Company Are Contracted

SECRETARIA MUNICIPAL DE EDUCAÇÃO LÍNGUA INGLESA Name: 6º ANO: LEIA O TEXTO ABAIXO E RESPONDA AS QUESTÕES NO CADERNO. SPIDERMAN: HOMECOMING Plot Following the Battle of New York,[N 1] Adrian Toomes and his salvage company are contracted to clean up the city, but their operation is taken over by the Department of Damage Control (D.O.D.C.), a partnership between Tony Stark and the U.S. government. Enraged at being driven out of business, Toomes persuades his employees to keep the Chitauri technology they have already scavenged and use it to create and sell advanced weapons. Eight years later, Peter Parker is drafted into the Avengers by Stark to help with an internal dispute in Berlin,[N 2] but resumes his studies at the Midtown School of Science and Technology when Stark tells him he is not yet ready to become a full Avenger. Parker quits his school's academic decathlon team to spend more time focusing on his crime-fighting activities as Spider-Man. One night, after preventing criminals from robbing an ATM with their advanced weapons Toomes sold them, Parker returns to his Queens apartment, where his best friend Ned discovers his secret identity. On another night, Parker comes across Toomes' associates Jackson Brice / Shocker and Herman Schultz selling weapons to local criminal Aaron Davis. Parker saves Davis before being caught by Toomes and dropped in a lake, nearly drowning after becoming tangled in a parachute built into his suit. He is rescued by Stark, who is monitoring the Spider-Man suit he gave Parker and warns him against further involvement with the criminals. -

Visa Marvel Comic Fact Sheet

Visa Marvel Comic Fact Sheet About Visa and Marvel’s Partnership Visa Inc. understands that teaching consumers about money through “edutainment” or “gamification” is effective in making what can be a dull subject, exciting. By utilizing a compelling and familiar medium — comic books — Visa enables children to learn while having fun. Visa has teamed up with Marvel Custom Solutions to create financial literacy comic books and recently introduced a new global resource, the Guardians of the Galaxy: Rocket’s Powerful Plan comic. Released in May 2016, it follows the popular Avengers: Saving the Day comic book from Visa and Marvel, of which 497,000 print copies have been distributed worldwide since 2012. The Avengers comic has also been co-branded by Visa clients, including HSBC in Mexico and Navy Federal Credit Union and Zions Bank in the U.S. About Guardians of the Galaxy: Rocket’s Powerful Plan Children love comic books and knowing that children look up to super heroes, Visa and Marvel collaborated to create Guardians of the Galaxy: Rocket’s Powerful Plan. The comic uses Marvel’s iconic Guardians of the Galaxy and Avengers characters to make financial education entertaining and engaging for readers. The new resource provides educators and parents with a tool to teach students about financial basics such as wants versus needs, the importance of saving for a rainy day, and setting aside funds for emergencies. To increase the availability of the comic in communities across America, Visa and the Public Library Association announced a partnership to distribute the new comic book to consumers through U.S. -

Avengers Vol 1 Chapter 7: Space Pirates Within Seconds, the Entire

Earth-717: Avengers Vol 1 Chapter 7: Space Pirates Within seconds, the entire Senatorium was in chaos. The Dark Revenant opened fire on the Nova cruisers surrounding the space station, completely taking them by surprise. The two Pariahs also fired their main laser cannons, and together managed to effortlessly slice apart a Nova warship. The smaller Skrull vessels moved in on the defensive fleet, and the Skrull starfighters began swarming around any targets within range. Adora immediately gathered the Senate members and had them surrounded by Nova guards. The crowds began screaming and panicking, fleeing in all directions. Adora looked at the heroes as the Supreme Intelligence's hologram faded. “I'm getting the Senate out of here! If you want to help . then help.” Before any of the heroes could respond, the Dark Revenant launched multiple interceptor craft. These were cylindrical shuttles that struck the hull of the Senatorium and started drilling through them. While a handful were shot down by the Senatorium's turrets, a few of them still managed to latch on to the exterior of the station. Once holes were pierced by their drills, Skrull and Mekkan troops began flooding inside. Steve turned back to Adora. “We'll help your people fight them off however we can. Get the Senate to safety!” Adora hurriedly nodded before rushing off after the Senate and their guards. Steve held up his shield. Tasha equipped her repulsors and locked her helmet in place. Carol charged both of her hands with energy and started to hover. Thor held his hammer at the ready. -

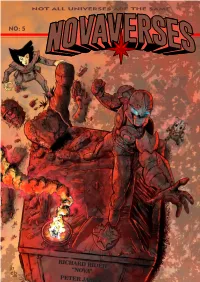

NOVAVERSES ISSUE 5D

NOT ALL UNIVERSES ARE THE SAME NO: 5 NOT ALL UNIVERSES ARE THE SAME RISE OF THE CORPS Arc 1: Whatever Happened to Richard Rider? Part 1 WRITER - GORDON FERNANDEZ ILLUSTRATION - JASON HEICHEL and DAZ RED DRAGON PART 3 WRITER - BRYAN DYKE ILLUSTRATION - FERNANDO ARGÜELLO STARSCREAM PART 5 WRITER - DAZ BLACKBURN ILLUSTRATION - EMILIANO CORREA, JOE SINGLETON and DAZ DREAM OF LIVING JUSTICE PART 2 WRITER - BYRON BREWER ILLUSTRATION - JASON HEICHEL Edited by Daz Blackburn, Doug Smith & Byron Brewer Front Cover by JASON HEICHEL and DAZ BLACKBURN Next Cover by JOHN GARRETSON Novaverses logo designed by CHRIS ANDERSON NOVA AND RELATED MARVEL CHARACTERS ARE DULY RECOGNIZED AS PROPERTY AND COPYRIGHT OF MARVEL COMICS AND MARVEL CHARACTERS INC. FANS PRODUCING NOVAVERSES DULY RECOGNIZE THE ABOVE AND DENOTE THAT NOVAVERSES IS A FAN-FICTION ANTHOLOGY PRODUCED BY FANS OF NOVA AND MARVEL COSMIC VIA NOVA PRIME PAGE AND TEAM619 FACEBOOK GROUP. NOVAVERSES IS A NON-PROFIT MAKING VENTURE AND IS INTENDED PURELY FOR THE ENJOYMENT OF FANS WITH ALL RESPECT DUE TO MARVEL. NOVAVERSES IS KINDLY HOSTED BY NOVA PRIME PAGE! ORIGINAL CHARACTERS CREATED FOR NOVAVERSES ARE THE PSYCHOLOGICAL COSMIC CONSTANT OF INDIVIDUAL CREATORS AND THEIR CENTURION IMAGINATIONS. DOWNLOAD A PDF VERSION AT www.novaprimepage.com/619.asp READ ONLINE AT novaprime.deviantart.com Rise of the Nova Corps obert Rider walked somberly through the city. It was a dark, bleak, night, and there weren't many people left on the streets. His parents and friends all warned him about the dangers of 1 Rwalking in this neighborhood, especially at this hour, but Robert didn't care. -

College of Letters 1

College of Letters 1 Kari Weil BA, Cornell University; MA, Princeton University; PHD, Princeton University COLLEGE OF LETTERS University Professor of Letters; University Professor, Environmental Studies; The College of Letters (COL) is a three-year interdisciplinary major for the study University Professor, College of the Environment; University Professor, Feminist, of European literature, history, and philosophy, from antiquity to the present. Gender, and Sexuality Studies; Co-Coordinator, Animal Studies During these three years, students participate as a cohort in a series of five colloquia in which they read and discuss (in English) major literary, philosophical, and historical texts and concepts drawn from the three disciplinary fields, and AFFILIATED FACULTY also from monotheistic religious traditions. Majors are invited to think critically about texts in relation to their contexts and influences—both European and non- Ulrich Plass European—and in relation to the disciplines that shape and are shaped by those MA, University of Michigan; PHD, New York University texts. Majors also become proficient in a foreign language and study abroad Professor of German Studies; Professor, Letters to deepen their knowledge of another culture. As a unique college within the University, the COL has its own library and workspace where students can study together, attend talks, and meet informally with their professors, whose offices VISITING FACULTY surround the library. Ryan Fics BA, University of Manitoba; MA, University of Manitoba; PHD, Emory -

Run Date: 08/30/21 12Th District Court Page

RUN DATE: 09/27/21 12TH DISTRICT COURT PAGE: 1 312 S. JACKSON STREET JACKSON MI 49201 OUTSTANDING WARRANTS DATE STATUS -WRNT WARRANT DT NAME CUR CHARGE C/M/F DOB 5/15/2018 ABBAS MIAN/ZAHEE OVER CMV V C 1/01/1961 9/03/2021 ABBEY STEVEN/JOH TEL/HARASS M 7/09/1990 9/11/2020 ABBOTT JESSICA/MA CS USE NAR M 3/03/1983 11/06/2020 ABDULLAH ASANI/HASA DIST. PEAC M 11/04/1998 12/04/2020 ABDULLAH ASANI/HASA HOME INV 2 F 11/04/1998 11/06/2020 ABDULLAH ASANI/HASA DRUG PARAP M 11/04/1998 11/06/2020 ABDULLAH ASANI/HASA TRESPASSIN M 11/04/1998 10/20/2017 ABERNATHY DAMIAN/DEN CITYDOMEST M 1/23/1990 8/23/2021 ABREGO JAIME/SANT SPD 1-5 OV C 8/23/1993 8/23/2021 ABREGO JAIME/SANT IMPR PLATE M 8/23/1993 2/16/2021 ABSTON CHERICE/KI SUSPEND OP M 9/06/1968 2/16/2021 ABSTON CHERICE/KI NO PROOF I C 9/06/1968 2/16/2021 ABSTON CHERICE/KI SUSPEND OP M 9/06/1968 2/16/2021 ABSTON CHERICE/KI NO PROOF I C 9/06/1968 2/16/2021 ABSTON CHERICE/KI SUSPEND OP M 9/06/1968 8/04/2021 ABSTON CHERICE/KI OPERATING M 9/06/1968 2/16/2021 ABSTON CHERICE/KI REGISTRATI C 9/06/1968 8/09/2021 ABSTON TYLER/RENA DRUGPARAPH M 7/16/1988 8/09/2021 ABSTON TYLER/RENA OPERATING M 7/16/1988 8/09/2021 ABSTON TYLER/RENA OPERATING M 7/16/1988 8/09/2021 ABSTON TYLER/RENA USE MARIJ M 7/16/1988 8/09/2021 ABSTON TYLER/RENA OWPD M 7/16/1988 8/09/2021 ABSTON TYLER/RENA SUSPEND OP M 7/16/1988 8/09/2021 ABSTON TYLER/RENA IMPR PLATE M 7/16/1988 8/09/2021 ABSTON TYLER/RENA SEAT BELT C 7/16/1988 8/09/2021 ABSTON TYLER/RENA SUSPEND OP M 7/16/1988 8/09/2021 ABSTON TYLER/RENA SUSPEND OP M 7/16/1988 8/09/2021 ABSTON -

Culture War, Rhetorical Education, and Democratic Virtue Beth Jorgensen Iowa State University

Iowa State University Capstones, Theses and Retrospective Theses and Dissertations Dissertations 2002 Takin' it to the streets: culture war, rhetorical education, and democratic virtue Beth Jorgensen Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/rtd Part of the Philosophy Commons, Rhetoric and Composition Commons, Social and Philosophical Foundations of Education Commons, and the Speech and Rhetorical Studies Commons Recommended Citation Jorgensen, Beth, "Takin' it to the streets: culture war, rhetorical education, and democratic virtue " (2002). Retrospective Theses and Dissertations. 969. https://lib.dr.iastate.edu/rtd/969 This Dissertation is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. INFORMATION TO USERS This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, white others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print colored or poor quality illustrations and photographs, print bieedthrough, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. -

Werewere Liking, Sony Labou Tansi, and Tchicaya U Tam'si

WEREWERE LIKING, SONY LABOU TANSI, AND TCHICAYA U TAM’SI: PIONEERS OF “NEW THEATER” IN FRANCOPHONE AFRICA DISSERTATION Presented in Partial Fulfillment of the Requirements for The Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Salome Wekisa Fouts, B.A., M.A ***** The Ohio State University 2004 Dissertation Committee Approved by Professor John-Conteh-Morgan, Adviser _________________________________ Professor Karlis Racevskis, Adviser _________________________________ Advisers Professor Jennifer Willging Department of French and Italian Copyright by Salome Wekisa Fouts 2004 ABSTRACT This dissertation is an analysis of “new African theater” as illustrated in the works of three francophone African writers: the late Congolese Sony Labou Tansi (formerly known as Marcel Ntsony), the late Congolese Félix Tchicaya, who wrote under the pseudonym Tchicaya U Tam’si, and the Cameroonian Werewere Liking. The dissertation examines plays by these authors and illustrates how the plays clearly stand apart from mainstream modern French-language African theater. The introduction, in Chapter 1 provides an explanation of the meaning that has been assigned to the term “new African theater.” It presents an overview of the various innovative structural and linguistic techniques new theater playwrights use that render their work avant-garde. The chapter also studies how new theater playwrights differ from mainstream ones, especially in the way they address and present their concerns regarding the themes of oppression, rebellion, and the outcome of rebellion. The information provided in this chapter serves as a springboard to the primary focus of the dissertation, which is a detailed theoretical and textual analysis of experimental strategies used by the three playwrights in the areas of theme, form, and language. -

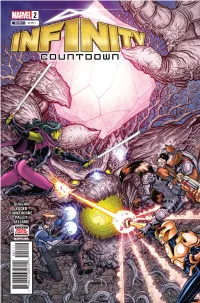

Read the Preview of INFINITY COUNTDOWN #2

HAWTHORNE BELLAIRE DUGGAN MARVEL.COM RATED T+ RATED PALLOT 0 0 2 1 1 KUDER $4.99 7 59606 08965 9 US 2 THE INFINITY STONES WERE REBORN AND SCATTERED, PASSING FROM HAND TO HAND ACROSS THE UNIVERSE, THROUGH TIME, TRANSGRESSING THE BOUNDARIES BETWEEN WORLDS... TO PROTECT THE POWER STONE, HIDDEN ON THE PLANETOID XITAUNG, DRAX AND THE NOVA CORPS FACE DOWN THE FRATERNITY OF RAPTORS AND THE CHITAURI ARMY. MEANWHILE, AS THE GUARDIANS WORK TO DEFEAT A RACE OF EVIL TREE-CREATURES, A NEWLY TRANSFORMED GROOT FLEXES HIS MUSCLES... WRITER PENCILERS INKERS COLOR ARTIST GERRY AARON KUDER & AARON KUDER & JORDIE DUGGAN MIKE HAWTHORNE TERRY PALLOT BELLAIRE LETTERER COVER ARTISTS ‘FORGING THE ARMOR’ PAGE ARTISTS VC’s CORY PETIT NICK BRADSHAW & MORRY HOLLOWELL MIKE DEODATO JR. & FRANK MARTIN VARIANT COVER ARTISTS ADI GRANOV, AARON KUDER & JORDIE BELLAIRE, RON LIM & RACHELLE ROSENBERG ASSISTANT EDITOR EDITOR EDITOR IN CHIEF CHIEF CREATIVE OFFICER PRESIDENT EXECUTIVE PRODUCER ANNALISE BISSA JORDAN D. WHITE C.B. CEBULSKI JOE QUESADA DAN BUCKLEY ALAN FINE INFINITY COUNTDOWN No. 2, June 2018. Published Monthly by MARVEL WORLDWIDE, INC., a subsidiary of MARVEL ENTERTAINMENT, LLC. OFFICE OF PUBLICATION: 135 West 50th Street, New York, NY 10020. BULK MAIL POSTAGE PAID AT NEW YORK, NY AND AT ADDITIONAL MAILING OFFICES. © 2018 MARVEL No similarity between any of the names, characters, persons, and/or institutions in this magazine with those of any living or dead person or institution is intended, and any such similarity which may exist is purely coincidental. $4.99 per copy in the U.S. (GST #R127032852) in the direct market; Canadian Agreement #40668537.