Dependability and Security Models* (Keynote Paper)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Balancing Dependability Quality Attributes Relationships for Increased Embedded Systems Dependability

Master Thesis, Software Engineering. Thesis no: MSE-2009:17, September 2009. Balancing Dependability Quality Attributes Relationships for Increased Embedded Systems Dependability. Saleh Al-Daajeh. Supervisor: Professor Mikael Svahnberg. School of Engineering, Blekinge Institute of Technology, Box 520, SE – 372 25 Ronneby, Sweden. This thesis is submitted to the School of Engineering at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Software Engineering. The thesis is equivalent to 2 x 20 weeks of full time studies. Contact Information: Author: Saleh Al-Daajeh, E-mail: [email protected]. University advisor: Prof. Mikael Svahnberg, School of Engineering. Internet: www.bth.se/tek, Phone: +46 457 385 000, Fax: +46 457 271 25. Blekinge Institute of Technology, Box 520, SE – 372 25 Ronneby, Sweden. Abstract: Embedded systems are used in many critical applications of our daily life. The increased complexity of embedded systems and the tightened safety regulations posed on them and the scope of the environment in which they operate are driving the need for more dependable embedded systems. Therefore, achieving a high level of dependability to embedded systems is an ultimate goal. In order to achieve this goal we are in need of understanding the interrelationships between the different dependability quality attributes and other embedded systems’ quality attributes. This research study provides indicators of the relationship between the dependability quality attributes and other quality attributes for embedded systems by identifying the impact of architectural tactics as the candidate solutions to construct dependable embedded systems. Acknowledgment: I would like to express my gratitude to all those who gave me the possibility to complete this thesis. -

A Reasoning Framework for Dependability in Software Architectures Tacksoo Im Clemson University, [email protected]

Clemson University TigerPrints All Dissertations Dissertations 12-2010 A Reasoning Framework for Dependability in Software Architectures Tacksoo Im Clemson University, [email protected] Follow this and additional works at: https://tigerprints.clemson.edu/all_dissertations Part of the Computer Sciences Commons Recommended Citation Im, Tacksoo, "A Reasoning Framework for Dependability in Software Architectures" (2010). All Dissertations. 618. https://tigerprints.clemson.edu/all_dissertations/618 This Dissertation is brought to you for free and open access by the Dissertations at TigerPrints. It has been accepted for inclusion in All Dissertations by an authorized administrator of TigerPrints. For more information, please contact [email protected]. A Reasoning Framework for Dependability in Software Architectures A Dissertation Presented to the Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy Computer Science by Tacksoo Im August 2010 Accepted by: Dr. John D. McGregor, Committee Chair Dr. Harold C. Grossman Dr. Jason O. Hallstrom Dr. Pradip K. Srimani Abstract The degree to which a software system possesses specified levels of software quality attributes, such as performance and modifiability, often has more influence on the success and failure of those systems than the functional requirements. One method of improving the level of a software quality that a product possesses is to reason about the structure of the software architecture in terms of how well the structure supports the quality. This is accomplished by reasoning through software quality attribute scenarios while designing the software architecture of the system. As society relies more heavily on software systems, the dependability of those systems becomes critical. -

Fundamental Concepts of Dependability

Fundamental Concepts of Dependability. Algirdas Avižienis, UCLA Computer Science Dept., Univ. of California, Los Angeles, USA; Jean-Claude Laprie, LAAS-CNRS, Toulouse, France; Brian Randell, Dept. of Computing Science, Univ. of Newcastle upon Tyne, U.K. UCLA CSD Report no. 010028; LAAS Report no. 01-145; Newcastle University Report no. CS-TR-739. LIMITED DISTRIBUTION NOTICE: This report has been submitted for publication. It has been issued as a research report for early peer distribution. Abstract: Dependability is the system property that integrates such attributes as reliability, availability, safety, security, survivability, maintainability. The aim of the presentation is to summarize the fundamental concepts of dependability. After a historical perspective, definitions of dependability are given. A structured view of dependability follows, according to a) the threats, i.e., faults, errors and failures, b) the attributes, and c) the means for dependability, that are fault prevention, fault tolerance, fault removal and fault forecasting. The protection and survival of complex information systems that are embedded in the infrastructure supporting advanced society has become a national and world-wide concern of the highest priority. Increasingly, individuals and organizations are developing or procuring sophisticated computing systems on whose services they need to place great reliance, whether to service a set of cash dispensers, control a satellite constellation, an airplane, a nuclear plant, or a radiation therapy device, or to maintain the confidentiality of a sensitive data base. In differing circumstances, the focus will be on differing properties of such services, e.g., on the average real-time response achieved, the likelihood of producing the required results, the ability to avoid failures that could be catastrophic to the system's environment, or the degree to which deliberate intrusions can be prevented. -

FUNDAMENTALS of COMPUTING (2019-20) COURSE CODE: 5023 502800CH (Grade 7 for ½ High School Credit) 502900CH (Grade 8 for ½ High School Credit)

EXPLORING COMPUTER SCIENCE NEW NAME: FUNDAMENTALS OF COMPUTING (2019-20) COURSE CODE: 5023 502800CH (grade 7 for ½ high school credit) 502900CH (grade 8 for ½ high school credit) COURSE DESCRIPTION: Fundamentals of Computing is designed to introduce students to the field of computer science through an exploration of engaging and accessible topics. Through creativity and innovation, students will use critical thinking and problem solving skills to implement projects that are relevant to students’ lives. They will create a variety of computing artifacts while collaborating in teams. Students will gain a fundamental understanding of the history and operation of computers, programming, and web design. Students will also be introduced to computing careers and will examine societal and ethical issues of computing. OBJECTIVE: Given the necessary equipment, software, supplies, and facilities, the student will be able to successfully complete the following core standards for courses that grant one unit of credit. RECOMMENDED GRADE LEVELS: 9-12 (Preference 9-10) COURSE CREDIT: 1 unit (120 hours) COMPUTER REQUIREMENTS: One computer per student with Internet access RESOURCES: See attached Resource List A. SAFETY Effective professionals know the academic subject matter, including safety as required for proficiency within their area. They will use this knowledge as needed in their role. The following accountability criteria are considered essential for students in any program of study. 1. Review school safety policies and procedures. 2. Review classroom safety rules and procedures. 3. Review safety procedures for using equipment in the classroom. 4. Identify major causes of work-related accidents in office environments. 5. Demonstrate safety skills in an office/work environment. -

Dependability Assessment of Software-Based Systems: State of the Art

Dependability Assessment of Software-based Systems: State of the Art Bev Littlewood Centre for Software Reliability, City University, London [email protected] You can pick up a copy of my presentation here, if you have a lap-top ICSE2005, St Louis, May 2005 - slide 1 Do you remember 10⁻⁹ and all that? • Twenty years ago: much controversy about need for 10⁻⁹ probability of failure per hour for flight control software – could you achieve it? could you measure it? – have things changed since then? ICSE2005, St Louis, May 2005 - slide 2 Issues I want to address in this talk • Why is dependability assessment still an important problem? (why haven’t we cracked it by now?) • What is the present position? (what can we do now?) • Where do we go from here? ICSE2005, St Louis, May 2005 - slide 3 Why do we need to assess reliability? Because all software needs to be sufficiently reliable • This is obvious for some applications - e.g. safety-critical ones where failures can result in loss of life • But it’s also true for more ‘ordinary’ applications – e.g. commercial applications such as banking - the new Basel II accords impose risk assessment obligations on banks, and these include IT risks – e.g. what is the cost of failures, world-wide, in MS products such as Office? • Gloomy personal view: where it’s obvious we should do it (e.g. safety) it’s (sometimes) too difficult; where we can do it, we don’t… ICSE2005, St Louis, May 2005 - slide 4 What reliability levels are required? • Most quantitative requirements are from safety-critical systems. -

Florida Course Code Directory Computer Science Course Information 2019-2020

FLORIDA COURSE CODE DIRECTORY COMPUTER SCIENCE COURSE INFORMATION 2019-2020 Section 1007.2616, Florida Statutes, was amended by the Florida Legislature to include the definition of computer science and a requirement that computer science courses be identified in the Course Code Directory published on the Department of Education’s website. DEFINITION: The study of computers and algorithmic processes, including their principles, hardware and software designs, applications, and their impact on society, and includes computer coding and computer programming. SECONDARY COMPUTER SCIENCE COURSES Middle and high schools in each district, including combination schools in which any of grades 6-12 are taught, must provide an opportunity for students to enroll in a computer science course. If a school district does not offer an identified computer science course the district must provide students access to the course through the Florida Virtual School (FLVS) or through other means. COURSE NUMBER COURSE TITLE 0200000 M/J Computer Science Discoveries 0200010 M/J Computer Science Discoveries 1 0200020 M/J Computer Science Discoveries 2 0200305 Computer Science Discoveries 0200315 Computer Science Principles 0200320 AP Computer Science A 0200325 AP Computer Science A Innovation 0200335 AP Computer Science Principles 0200435 PRE-AICE Computer Studies IGCSE Level 0200480 AICE Computer Science 1 AS Level 0200485 AICE Computer Science 2 A Level 0200490 AICE Information Technology 1 AS Level 0200495 AICE Information Technology 2 A Level 0200800 IB Computer -

Software Reliability and Dependability: a Roadmap Bev Littlewood & Lorenzo Strigini

Software Reliability and Dependability: a Roadmap Bev Littlewood & Lorenzo Strigini Key Research Pointers Shifting the focus from software reliability to user-centred measures of dependability in complete software-based systems. Influencing design practice to facilitate dependability assessment. Propagating awareness of dependability issues and the use of existing, useful methods. Injecting some rigour in the use of process-related evidence for dependability assessment. Better understanding issues of diversity and variation as drivers of dependability. The Authors Bev Littlewood is founder-Director of the Centre for Software Reliability, and Professor of Software Engineering at City University, London. Prof Littlewood has worked for many years on problems associated with the modelling and evaluation of the dependability of software-based systems; he has published many papers in international journals and conference proceedings and has edited several books. Much of this work has been carried out in collaborative projects, including the successful EC-funded projects SHIP, PDCS, PDCS2, DeVa. He has been employed as a consultant to industrial companies in France, Germany, Italy, the USA and the UK. He is a member of the UK Nuclear Safety Advisory Committee, of IFIP Working Group 10.4 on Dependable Computing and Fault Tolerance, and of the BCS Safety-Critical Systems Task Force. He is on the editorial boards of several international scientific journals. Lorenzo Strigini is Professor of Systems Engineering in the Centre for Software Reliability at City University, London, which he joined in 1995. In 1985-1995 he was a researcher with the Institute for Information Processing of the National Research Council of Italy (IEI-CNR), Pisa, Italy, and spent several periods as a research visitor with the Computer Science Department at the University of California, Los Angeles, and the Bell Communication Research laboratories in Morristown, New Jersey. -

Safety and Security Challenge

SAFETY AND SECURITY CHALLENGE TOP SUPERCOMPUTERS IN THE WORLD - FEATURING TWO of DOE’S!! Summary: The U.S. Department of Energy (DOE) plays a very special role in keeping you safe. DOE has two supercomputers in the top ten supercomputers in the whole world. Titan is the name of the supercomputer at the Oak Ridge National Laboratory (ORNL) in Oak Ridge, Tennessee. Sequoia is the name of the supercomputer at Lawrence Livermore National Laboratory (LLNL) in Livermore, California. How do supercomputers keep us safe and what makes them in the Top Ten in the world? In fields where scientists deal with issues from disaster relief to the electric grid, simulations provide real-time situational awareness to inform decisions. DOE supercomputers have helped the Federal Bureau of Investigation find criminals, and the Department of Defense assess terrorist threats. Currently, ORNL is building a computing infrastructure to help the Centers for Medicare and Medicaid Services combat fraud. An important focus lab-wide is managing the tsunamis of data generated by supercomputers and facilities like ORNL’s Spallation Neutron Source. In terms of national security, ORNL plays an important role in national and global security due to its expertise in advanced materials, nuclear science, supercomputing and other scientific specialties. Discovery and innovation in these areas are essential for protecting US citizens and advancing national and global security priorities. [Figure caption: Titan Supercomputer at Oak Ridge National Laboratory] Background: ORNL is using computing to tackle national challenges such as safe nuclear energy systems and running simulations for lower costs for vehicle [Figure caption: Lawrence Livermore's Sequoia ranked No. -

Critical Systems

Critical Systems ©Ian Sommerville 2004 Software Engineering, 7th edition. Chapter 3 Slide 1 Objectives ● To explain what is meant by a critical system where system failure can have severe human or economic consequence. ● To explain four dimensions of dependability - availability, reliability, safety and security. ● To explain that, to achieve dependability, you need to avoid mistakes, detect and remove errors and limit damage caused by failure. ©Ian Sommerville 2004 Software Engineering, 7th edition. Chapter 3 Slide 2 Topics covered ● A simple safety-critical system ● System dependability ● Availability and reliability ● Safety ● Security ©Ian Sommerville 2004 Software Engineering, 7th edition. Chapter 3 Slide 3 Critical Systems ● Safety-critical systems • Failure results in loss of life, injury or damage to the environment; • Chemical plant protection system; ● Mission-critical systems • Failure results in failure of some goal-directed activity; • Spacecraft navigation system; ● Business-critical systems • Failure results in high economic losses; • Customer accounting system in a bank; ©Ian Sommerville 2004 Software Engineering, 7th edition. Chapter 3 Slide 4 System dependability ● For critical systems, it is usually the case that the most important system property is the dependability of the system. ● The dependability of a system reflects the user’s degree of trust in that system. It reflects the extent of the user’s confidence that it will operate as users expect and that it will not ‘fail’ in normal use. ● Usefulness and trustworthiness are not the same thing. A system does not have to be trusted to be useful. ©Ian Sommerville 2004 Software Engineering, 7th edition. Chapter 3 Slide 5 Importance of dependability ● Systems that are not dependable and are unreliable, unsafe or insecure may be rejected by their users. -

A Practical Framework for Eliciting and Modeling System Dependability Requirements: Experience from the NASA High Dependability Computing Project

The Journal of Systems and Software 79 (2006) 107–119 www.elsevier.com/locate/jss A practical framework for eliciting and modeling system dependability requirements: Experience from the NASA high dependability computing project Paolo Donzelli a,*, Victor Basili a,b a Department of Computer Science, University of Maryland, College Park, MD 20742, USA b Fraunhofer Center for Experimental Software Engineering, College Park, MD 20742, USA Received 9 December 2004; received in revised form 21 March 2005; accepted 21 March 2005 Available online 29 April 2005 Abstract The dependability of a system is contextually subjective and reflects the particular stakeholder's needs. In different circumstances, the focus will be on different system properties, e.g., availability, real-time response, ability to avoid catastrophic failures, and prevention of deliberate intrusions, as well as different levels of adherence to such properties. Close involvement from stakeholders is thus crucial during the elicitation and definition of dependability requirements. In this paper, we suggest a practical framework for eliciting and modeling dependability requirements devised to support and improve stakeholders' participation. The framework is designed around a basic modeling language that analysts and stakeholders can adopt as a common tool for discussing dependability, and adapt for precise (possibly measurable) requirements. An air traffic control system, adopted as testbed within the NASA High Dependability Computing Project, is used as a case study. © 2005 Elsevier Inc. All rights reserved. Keywords: System dependability; Requirements elicitation; Non-functional requirements 1. Introduction Individuals and organizations increasingly use sophisticated software systems from which they demand great reliance. [...] absence of failures (with higher costs, longer time to market and slower innovations) (Knight, 2002; Littlewood and Stringini, 2000), everyday software (mobile phones, PDAs, etc.) must provide cost effective service -

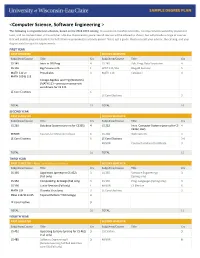

<Computer Science, Software Engineering >

<Computer Science, Software Engineering > The following is a hypothetical schedule, based on the 2018-2019 catalog. It assumes no transferred credits, no requirements waived by placement tests, and no courses taken in the summer. UW-Eau Claire cannot guarantee all courses will be offered as shown, but will provide a range of courses that will enable prepared students to fulfill their requirements in a timely period. This is just a guide. Please consult your advisor, the catalog, and your degree audit for specific requirements. FIRST YEAR FIRST SEMESTER SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 145 Intro to OO Prog 4 CS 245 Adv. Prog. Data Structures 4 CS 146 Big Picture in CS 1 WRIT 114/116 Blugold Seminar 5 MATH 112 or Precalculus 4 MATH 114 Calculus I 4 MATH 109 & 113 College Algebra and Trig (Winterim) (MATH 113 – prereq or concurrent enrollment for CS 245 LE Core Electives 6 LE Core Electives 3 TOTAL 15 TOTAL 16 SECOND YEAR FIRST SEMESTER SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 260 Database Systems (prereq for CS355) 4 CS 252 Intro. Computer Systems (prereq for CS 4 CS352, 452) MINOR Courses for Minor/Certificate 6 CS 268 Web Systems 3 LE Core Electives 6 LE Core Electives 3-6 MINOR Courses for Minor/Certificate 3 TOTAL 16 TOTAL 15 THIRD YEAR FIRST SEMESTER – Apply for Admission to Major SECOND SEMESTER Subj/Area/Course Title Crs Subj/Area/Course Title Crs CS 335 Algorithms (prereq for CS 452) 3 CS 355 Software Engineering I 3 (Fall only) (Spring only) CS 352 ComputeOrg. -

Artificial Intelligence Program 1

Artificial Intelligence Program. Reid Simmons, Director of the BSAI program (NSH 3213); Jean Harpley, Program Coordinator (NSH 1517). www.cs.cmu.edu/bs-in-artificial-intelligence (http://www.cs.cmu.edu/bs-in-artificial-intelligence/)

Overview: Carnegie Mellon University has led the world in artificial intelligence education and innovation since the field was created. It's only natural, then, that the School of Computer Science would offer the nation's first bachelor's degree in Artificial Intelligence, which started in Fall 2018. The new BSAI program gives students the in-depth knowledge needed to transform large amounts of data into actionable decisions. The program and its curriculum focus on how complex inputs (such as vision, language and huge databases) can be used to make decisions or enhance human capabilities. The curriculum includes coursework in computer science, math, statistics, computational modeling, machine learning and symbolic computation. Because Carnegie Mellon is devoted to AI for social good, students will also take courses in ethics and social responsibility, with the option to participate in independent study projects that change the world for the better, in areas like healthcare, transportation and education.

Courses (units): 15-122 Principles of Imperative Computation, 10 (students without credit or a waiver for 15-112, Fundamentals of Programming and Computer Science, must take 15-112 before 15-122); 15-150 Principles of Functional Programming, 10; 15-210 Parallel and Sequential Data Structures and Algorithms, 12; 15-213 Introduction to Computer Systems, 12; 15-251 Great Ideas in Theoretical Computer Science, 12.

Artificial Intelligence. All of the following three AI core courses (units): 07-180 Concepts in Artificial Intelligence, 5; 15-281 Artificial Intelligence: Representation and Problem Solving, 12; 10-315 Introduction to Machine Learning (SCS Majors), 12; plus one of the following AI core courses: 16-385 Computer Vision, 12