A Motion Capture Framework to Encourage Correct Execution of Sport Exercises

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
|---|---|---|---|---|---|
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |

008888345435 -

Research Article Exergaming Can Be a Health-Related Aerobic Physical Activity

Hindawi BioMed Research International Volume 2019, Article ID 1890527, 7 pages https://doi.org/10.1155/2019/1890527 Research Article Exergaming Can Be a Health-Related Aerobic Physical Activity Jacek Polechoński,1 Małgorzata Dębska,1 and Paweł G. Dębski2 1Department of Tourism and Health-Oriented Physical Activity, The Jerzy Kukuczka Academy of Physical Education, Katowice, 40-065, Poland 2Chair and Clinical Department of Psychiatry, School of Medicine with the Division of Dentistry in Zabrze, Medical University of Silesia in Katowice, Poland Correspondence should be addressed to Małgorzata Dębska; [email protected] Received 14 March 2019; Revised 10 May 2019; Accepted 20 May 2019; Published 4 June 2019 Academic Editor: Germán Vicente-Rodriguez Copyright © 2019 Jacek Polechoński et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. The purpose of the study was to assess the intensity of aerobic physical activity during exergame training sessions with a moderate (MLD) and high (HLD) level of difficulty of the interactive program “Your Shape Fitness Evolved 2012” for Xbox 360 Kinect in the context of health benefits. The study involved 30 healthy and physically fit students. During the game, the HR of the participants was monitored using the Polar M400 heart rate monitor. The average percentage of maximum heart rate (%HRmax) and heart rate reserve (%HRR) during the game was calculated and referred to the criterion of intensity of aerobic physical activity of American College of Sports Medicine and World Health Organization health recommendations. -
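The two intensity measures named in the abstract above can be illustrated with a minimal sketch. It assumes the commonly used definitions (%HRmax as exercise heart rate divided by maximum heart rate, and %HRR as the share of the reserve between resting and maximum heart rate); the function names and sample values are hypothetical and are not taken from the study.

```python
def percent_hr_max(hr_exercise: float, hr_max: float) -> float:
    """Exercise heart rate as a percentage of maximum heart rate."""
    if hr_max <= 0:
        raise ValueError("hr_max must be positive")
    return 100.0 * hr_exercise / hr_max


def percent_hr_reserve(hr_exercise: float, hr_rest: float, hr_max: float) -> float:
    """Exercise heart rate as a percentage of heart rate reserve.

    Heart rate reserve is assumed here to be hr_max - hr_rest.
    """
    reserve = hr_max - hr_rest
    if reserve <= 0:
        raise ValueError("hr_max must exceed hr_rest")
    return 100.0 * (hr_exercise - hr_rest) / reserve


if __name__ == "__main__":
    # Hypothetical participant: resting 60 bpm, maximum 200 bpm, 150 bpm in game.
    print(percent_hr_max(150, 200))
    print(percent_hr_reserve(150, 60, 200))
```

How the study obtained maximum and resting heart rate is not stated in the excerpt, so both are left as inputs rather than estimated.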

Assessing Older Adults' Usability Challenges Using Kinect-Based

Assessing Older Adults’ Usability Challenges Using Kinect-Based Exergames Christina N. Harrington1, Jordan Q. Hartley1, Tracy L. Mitzner1, and Wendy A. Rogers1 1Georgia Institute of Technology Human Factors and Aging Laboratory, Atlanta, GA {[email protected], [email protected], [email protected], [email protected]} Abstract. Exergames have been growing in popularity as a means to get physical exercise. Although these systems have many potential benefits both physically and cognitively, there may be barriers to their use by older adults due to a lack of design consideration for age-related changes in motor and perceptual capabilities. In this paper we evaluate the usability challenges of Kinect-based exergames for older adults. Older adults rated their interaction with the exergames system based on their perceived usefulness and ease-of-use of these systems. Although many of the participants felt that these systems could be potentially beneficial, particularly for exercise, there were several challenges experienced. We discuss the implications for design guidelines based on the usability challenges assessed. Keywords: older adults, exergames, usability, interface evaluation 1 Introduction The use of commercially designed exergames for physical activity has become widely accepted among a large audience of “wellness gamers” as a means of cognitive and physical stimulation [1]. Exergames are defined as interactive, exercise-based video games where players engage in physical activity via an onscreen interface [2]. These games can be played at home via a player interacting with a computer-simulated competitor, or in a multiplayer mode to encourage social connectivity. Exergames use body movement and gestures as input to responsive motion-detection consoles such as the Nintendo Wii and Balance Board, or Microsoft Xbox 360 with Kinect. -

Exergames and the “Ideal Woman”

Make Room for Video Games: Exergames and the “Ideal Woman” by Julia Golden Raz A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Communication) in the University of Michigan 2015 Doctoral Committee: Associate Professor Christian Sandvig, Chair Professor Susan Douglas Associate Professor Sheila C. Murphy Professor Lisa Nakamura © Julia Golden Raz 2015 For my mother ii Acknowledgements Words cannot fully articulate the gratitude I have for everyone who has believed in me throughout my graduate school journey. Special thanks to my advisor and dissertation chair, Dr. Christian Sandvig: for taking me on as an advisee, for invaluable feedback and mentoring, and for introducing me to the lab’s holiday white elephant exchange. To Dr. Sheila Murphy: you have believed in me from day one, and that means the world to me. You are an excellent mentor and friend, and I am truly grateful for everything you have done for me over the years. To Dr. Susan Douglas: it was such a pleasure teaching for you in COMM 101. You have taught me so much about scholarship and teaching. To Dr. Lisa Nakamura: thank you for your candid feedback and for pushing me as a game studies scholar. To Amy Eaton: for all of your assistance and guidance over the years. To Robin Means Coleman: for believing in me. To Dave Carter and Val Waldren at the Computer and Video Game Archive: thank you for supporting my research over the years. I feel so fortunate to have attended a school that has such an amazing video game archive. -

Exergames Experience in Physical Education: a Review

PHYSICAL CULTURE AND SPORT. STUDIES AND RESEARCH DOI: 10.2478/pcssr-2018-0010 Exergames Experience in Physical Education: A Review Cesar Augusto Otero Vaghetti1A-E, Renato Sobral Monteiro-Junior2A-E, Mateus David Finco1A-E, Eliseo Reategui3A-E, Silvia Silva da Costa Botelho4A-E Authors’ contribution: A) conception and design of the study B) acquisition of data C) analysis and interpretation of data D) manuscript preparation E) obtaining funding 1Federal University of Pelotas, Brazil 2State University of Montes Carlos, Brazil 3Federal University of Rio Grande do Sul, Brazil 4Federal University of Rio Grande, USA ABSTRACT Exergames are consoles that require a higher physical effort to play when compared to traditional video games. Active video games, active gaming, interactive games, movement-controlled video games, exertion games, and exergaming are terms used to define the kinds of video games in which the exertion interface enables a new experience. Exergames have added a component of physical activity to the otherwise motionless video game environment and have the potential to contribute to physical education classes by supplementing the current activity options and increasing student enjoyment. The use of exergames in schools has already shown positive results in the past through their potential to fight obesity. As for the pedagogical aspects of exergames, they have attracted educators’ attention due to the large number of games and activities that can be incorporated into the curriculum. In this way, the school must consider the development of a new physical education curriculum in which the key to promoting healthy physical activity in children and youth is enjoyment, using video games as a tool. -

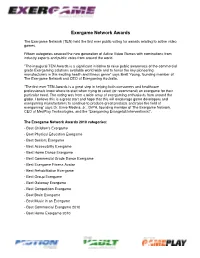

Exergame Network Awards

Exergame Network Awards The Exergame Network (TEN) held the first ever public voting for awards relating to active video games. Fifteen categories covered the new generation of Active Video Games with nominations from industry experts and public votes from around the world. “The inaugural TEN Awards is a significant initiative to raise public awareness of the commercial grade Exergaming solutions available worldwide and to honor the key pioneering manufacturers in this exciting health and fitness genre” says Brett Young, founding member of The Exergame Network and CEO of Exergaming Australia. “The first ever TEN Awards is a great step in helping both consumers and healthcare professionals know where to start when trying to select (or recommend) an exergame for their particular need. The voting was from a wide array of exergaming enthusiasts from around the globe. I believe this is a great start and hope that this will encourage game developers and exergaming manufacturers to continue to produce great products and raise the field of exergaming” says Dr. Ernie Medina, Jr., DrPH, founding member of The Exergame Network, CEO of MedPlay Technologies, and the “Exergaming Evangelist/Interventionist”. The Exergame Network Awards 2010 categories: - Best Children's Exergame - Best Physical Education Exergame - Best Seniors Exergame - Best Accessibility Exergame - Best Home Dance Exergame - Best Commercial Grade Dance Exergame - Best Exergame Fitness Avatar - Best Rehabilitation Exergame - Best Group Exergame - Best Gateway Exergame - Best Competition Exergame - Best Brain Exergame - Best Music in an Exergame - Best Commercial Exergame 2010 - Best Home Exergame 2010 1. Best Children’s Exergame Award that gets younger kids moving with active video gaming - Dance Dance Revolution Disney Grooves by Konami - Wild Planet Hyper Dash - Atari Family Trainer - Just Dance Kids by Ubisoft - Nickelodeon Fit by 2K Play Dance Dance Revolution Disney Grooves by Konami 2. -

An Assessment of Mobile Fitness Games

An Assessment of Mobile Fitness Games An Interactive Qualifying Project by Alex Carli-Dorsey James Jackman Nicholas Massa Date: 01/30/2015 Report Submitted to: Bengisu Tulu and Emmanuel Agu Worcester Polytechnic Institute This report represents work of WPI undergraduate students submitted to the faculty as evidence of a degree requirement. WPI routinely publishes these reports on its web site without editorial or peer review. For more information about the projects program at WPI, see http://www.wpi.edu/Academics/Projects. Abstract The purpose of this project was to find, document, and review mobile fitness games from the Android and iTunes marketplace, with a focus on those that encourage physical exercise. Using a variety of marketplaces and web searches, we, among a group of recruited and willing participants under study, played and reviewed Android and iPhone games that featured movement as an integral part of the game. We reported how effective the game is for fitness, and how engaging the content is for players. We provided reviews that can be used by: 1) Individuals seeking an enjoyable method of achieving a certain level of fitness, and 2) Game developers hoping to produce a compelling mobile fitness game of their own. Table of Contents Abstract 1. Introduction -

The Advantages of Exergaming

The Advantages of Exergaming Richard de Bock Vrije Universiteit Amsterdam [email protected] February 2016 Introduction It is well accepted that physical movement and activity lead to a healthier life. Performing physical exercises on a regular basis has proven to be healthy for people. For example, exercise science showed that physical activity can reduce the risk of coronary heart disease, obesity, type 2 diabetes and other chronic diseases. Moreover, a recent study estimated that at least 16% of all deaths could be avoided by improving people's cardio-respiratory fitness. An effective way of improving cardio-respiratory fitness is to regularly perform muscle strengthening exercises. Such exercises are recommended even for healthy adults as they were shown to lower blood pressure, improve glucose metabolism, and reduce cardiovascular disease risk. [Velloso et al., 2013] Exergames make people perform physical activity, but are they as effective as normal exercises and sports? In this paper we will identify the main advantages and positive effects of exergaming by performing a literature study on the subject of exergaming. This paper consists of 5 sections. First we look into the background of exergaming, followed by an overview of the main publications. Next we examine two case studies and future developments, and we conclude in section 5. 1 Background The word 'exergaming' is a combination of 'exercise' and 'gaming'. Exergames are video games that are also a form of physical activity. Exergaming has become popular in the last couple of years, but it is not new: exergames were introduced in the 1980s with the Atari 2600's foot pad controller and became more popular in the 1990s with Konami's Dance Dance Revolution product. -

Why Do Wii Teach PE

http://www.diva-portal.org This is the published version of a paper published in Swedish Journal of Sport Research. Citation for the original published paper (version of record): Quennerstedt, M., Almqvist, J., Meckbach, J., Öhman, M. (2013) Why do Wii teach physical education in school?. Swedish Journal of Sport Research, 2 Access to the published version may require subscription. N.B. When citing this work, cite the original published paper. Permanent link to this version: http://urn.kb.se/resolve?urn=urn:nbn:se:oru:diva-33883 Swedish Journal of Sport Research 2: 55-81. Why do Wii teach physical education in school? Mikael Quennerstedt Jonas Almqvist Jane Meckbach Marie Öhman Abstract: Videogames including bodily movement have recently been promoted as tools to be used in school to encourage young people to be more physically active. The purpose of this systematic review has been to explore the arguments for and against using exergames in school settings and thus facilitate new insights into this field. Most of the arguments for and/or against the use of exergames can be organised in relation to health and sport. In relation to health, the dominant theme is about fitness and obesity. In relation to sport, the two main themes were skill acquisition, and exergames as an alternative to traditional PE. The idea why Wii teach PE in schools is that children are becoming more obese, which in turn threatens the health of the population. Schools seem to be an appropriate arena for this intervention, and by using exergames as an energy consuming and enjoyable physical activity a behaviour modification and the establishment of good healthy habits is argued to be achieved. -

Measurement of Energy Expenditure While Playing Exergames at a Self-Selected Intensity

The Open Sports Sciences Journal, 2012, 5, 1-6 Open Access Measurement of Energy Expenditure while Playing Exergames at a Self-selected Intensity Bryan L. Haddock*, Sarah Jarvis, Nicholas R. Klug, Tarah Gonzalez, Bryan Barsaga, Shannon R. Siegel and Linda D. Wilkin California State University, San Bernardino, CA 92407, USA Abstract: Exergames have been suggested as a possible alternative to traditional exercise in the general population. The purpose of this study was to examine the heart rate (HR) and energy expenditure (EE) of young adults playing several different exergames, while self-selecting the component of the game to play and the intensity. A total of 117 participants, 18-35 years of age, were evaluated on one of four active video games. Participants were free to choose any component of the given game to play and they played at a self-selected intensity. The average HR and EE during the individual games were compared to resting conditions and to the American College of Sports Medicine (ACSM) guidelines. The HR and EE increased above resting conditions during each game (p<0.05). When the results of all games were combined, the HR was 125.4 ± 20.0 bpm and the average EE was 6.7 ± 2.1 kcal/min. This HR represents an average percent of heart rate reserve of 44.6 ± 14.1, high enough to be considered moderate intensity exercise. If performed for 30 minutes a day, five days per week, the average EE would be 1,005 kcals, enough to meet the ACSM recommendations for weekly EE. Therefore, at least some exergames could be a component of an exercise program. -
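The weekly figure quoted in the abstract above follows from simple multiplication of the reported mean rate by session length and frequency. The short sketch below reproduces that arithmetic; the function name is hypothetical, and only the three input values (6.7 kcal/min, 30 minutes, 5 days) come from the abstract.

```python
def weekly_energy_expenditure(kcal_per_min: float,
                              minutes_per_day: float,
                              days_per_week: int) -> float:
    """Total weekly energy expenditure in kcal for a fixed daily session."""
    return kcal_per_min * minutes_per_day * days_per_week


if __name__ == "__main__":
    # Values reported in the abstract: mean EE 6.7 kcal/min, 30 min/day, 5 days/week.
    total = weekly_energy_expenditure(6.7, 30, 5)
    print(f"{total:.0f} kcal per week")
```

The reported standard deviation (± 2.1 kcal/min) is ignored here, so the result describes the average participant only.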

Time Spent in MVPA During Exergaming with Xbox Kinect in Sedentary College Students

Original Research Time Spent in MVPA during Exergaming with Xbox Kinect in Sedentary College Students CHIE YANG*1, ZACHARY WICKERT*1, SAMANTHA ROEDEL*1, ALEXANDRIA BERG*1, ALEX ROTHBAUER*1, MARQUELL JOHNSON‡1 and DON BREDLE‡1 1University of Wisconsin-Eau Claire *Denotes undergraduate student author, ‡Denotes professional author ABSTRACT International Journal of Exercise Science 7(4) : 286-294, 2014. The primary purpose of this study was to determine the amount of time spent in moderate-to-vigorous physical activity (MVPA) during a 30-minute bout of exergaming with the Xbox Kinect game console in sedentary college-aged students. A secondary purpose was to examine enjoyment level of participation in the selected exergame. Twenty college-aged students (14 females and 6 males) who self-reported being physically inactive and having no prior experience with the Xbox Kinect game “Your Shape Fitness Evolved 2012” Break a Sweat activity participated in the study. Participants came into the lab on two separate occasions. The first visit involved baseline testing and an 11-minute familiarization session with the game and physical activity (PA) assessment equipment. On the second visit, participants wore the same equipment and completed two 15-minute sessions of the full game. After the first 15-minute session, participants rested for 5 minutes before beginning the next 15-minute session. A 5-minute warm-up and cool-down was completed before and after the testing sessions on a treadmill. Time spent in MVPA was determined via portable indirect calorimetry and accelerometry worn at the wrist and waist. 30 minutes, 29.95±0.22, and 27.90±1.37 minutes of the 30 minutes of exergaming were spent in MVPA according to activity monitors and indirect calorimetry, respectively. -

Success Factors of Video Game Consoles

Success factors of video game consoles Seminar Paper as part of the Marketing Executive Program at the University of Münster Submitted by Dipl.-Ing. (FH) Lars Bartschat Matriculation No: WBPA140000250 Email: [email protected] Chair: Department of Marketing and Media Research Issued by: Prof. Dr. T. Hennig-Thurau Supervisor: M. Sc. R. Behrens Start date: 17.10.2016 Submission date: 28.11.2016 Contents: List of Abbreviations; List of Figures; 1 Introduction; 2 Foundations; 3 Developing a conceptual framework; 3.1 Overview; 3.2 Hardware; 3.2.1 Immersion powered by high-performance hardware; 3.2.2 Game Flow enabling control interface design; 3.3 Software; 3.3.1 Software variety and network effects; 3.3.2 Looking for the system sellers - The need for high quality software; 3.4 Value added digital services; 3.5 Framework summary; 4 Framework Application - The Console War of Generation Seven; 4.1 Positioning of the platforms; 4.2 Hardware; 4.3 Software; 4.4 Value added digital services; 4.5 Conclusions; 5 Discussion, limitations and future research; A Additional figures and tables; B Declaration of Academic Integrity. List of Abbreviations B billion(s) e.g. exempli gratia (for example) et al. et alia (and others) etc. et cetera (and so on) f., ff. following page(s) Ed. Editor i.e. id est (that is) M million(s) no. number p. page Vol. Volume Declaration of Brand Name Usage Company, product, and brand names mentioned in this seminar paper may be brand names or registered trademarks of their respective owners.