Netlink Engine Issues, and Ways to Fix Those Andrei Vagin, Linux Kernel Summit, 2016

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Flexible Lustre Management

Flexible Lustre management: Making less work for Admins. ORNL is managed by UT-Battelle for the US Department of Energy. How do we know Lustre condition today • Polling proc / sysfs files – The knocking on the door model – Parse stats, rpc info, etc. for performance deviations. • Constant collection of debug logs – Heavy parsing for common problems. • The death of a node – Have to examine kdumps and/or lustre dump. Origins of a new approach • Requirements for Linux kernel integration. – No more proc usage – Migration to sysfs and debugfs – Used to configure your file system. – Started in Lustre 2.9 and still ongoing. • Two ways to configure your file system. – On MGS server run lctl conf_param … • Directly accessed proc seq_files. – On MGS server run lctl set_param -P • Originally used an upcall to lctl for configuration • Introduced in Lustre 2.4 but was broken until Lustre 2.12 (LU-7004) – Configuring file system works transparently before and after sysfs migration. Changes introduced with sysfs / debugfs migration • sysfs has a one item per file rule. • Complex proc files moved to debugfs • Moving to debugfs introduced permission problems – Only debugging files should be there. – Both debugfs and procfs have scaling issues. • Moving to sysfs introduced the ability to send uevents – Item of most interest from LUG 2018 Linux Lustre client talk. – Both lctl conf_param and lctl set_param -P use this approach • lctl conf_param can set sysfs attributes without uevents. See class_modify_config() – We get life cycle events for free – udev is now involved. What do we get by using udev?
• Under the hood – uevents are collected by systemd and then processed by udev rules – /etc/udev/rules.d/99-lustre.rules – SUBSYSTEM=="lustre", ACTION=="change", ENV{PARAM}=="?*", RUN+="/usr/sbin/lctl set_param '$env{PARAM}=$env{SETTING}'" • You can create your own udev rule – http://reactivated.net/writing_udev_rules.html – /lib/udev/rules.d/* for examples – Add udev_log="debug" to /etc/udev.conf if you have problems • Using systemd for long tasks.

Reducing Power Consumption in Mobile Devices by Using a Kernel

IEEE TRANSACTIONS ON MOBILE COMPUTING, VOL. Z, NO. B, AUGUST 2017 1 Reducing Event Latency and Power Consumption in Mobile Devices by Using a Kernel-Level Display Server Stephen Marz, Member, IEEE and Brad Vander Zanden and Wei Gao, Member, IEEE E-mail: [email protected], [email protected], [email protected] Abstract—Mobile devices differ from desktop computers in that they have a limited power source, a battery, and they tend to spend more CPU time on the graphical user interface (GUI). These two facts force us to consider different software approaches in the mobile device kernel that can conserve battery life and reduce latency, which is the duration of time between the inception of an event and the reaction to the event. One area to consider is a software package called the display server. The display server is middleware that handles all GUI activities between an application and the operating system, such as event handling and drawing to the screen. In both desktop and mobile devices, the display server is located in the application layer. However, the kernel layer contains most of the information needed for handling events and drawing graphics, which forces the application-level display server to make a series of system calls in order to coordinate events and to draw graphics. These calls interrupt the CPU which can increase both latency and power consumption, and also require the kernel to maintain event queues that duplicate event queues in the display server. A further drawback of placing the display server in the application layer is that the display server contains most of the information required to efficiently schedule the application and this information is not communicated to existing kernels, meaning that GUI-oriented applications are scheduled less efficiently than they might be, which further increases power consumption. -

Beej's Guide to Unix IPC

Beej's Guide to Unix IPC Brian “Beej Jorgensen” Hall [email protected] Version 1.1.3 December 1, 2015 Copyright © 2015 Brian “Beej Jorgensen” Hall This guide is written in XML using the vim editor on a Slackware Linux box loaded with GNU tools. The cover “art” and diagrams are produced with Inkscape. The XML is converted into HTML and XSL-FO by custom Python scripts. The XSL-FO output is then munged by Apache FOP to produce PDF documents, using Liberation fonts. The toolchain is composed of 100% Free and Open Source Software. Unless otherwise mutually agreed by the parties in writing, the author offers the work as-is and makes no representations or warranties of any kind concerning the work, express, implied, statutory or otherwise, including, without limitation, warranties of title, merchantibility, fitness for a particular purpose, noninfringement, or the absence of latent or other defects, accuracy, or the presence of absence of errors, whether or not discoverable. Except to the extent required by applicable law, in no event will the author be liable to you on any legal theory for any special, incidental, consequential, punitive or exemplary damages arising out of the use of the work, even if the author has been advised of the possibility of such damages. This document is freely distributable under the terms of the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 License. See the Copyright and Distribution section for details. Copyright © 2015 Brian “Beej Jorgensen” Hall Contents 1. Intro................................................................................................................................................................1 1.1. Audience 1 1.2. Platform and Compiler 1 1.3. -

Mmap and Dma

CHAPTER THIRTEEN MMAP AND DMA This chapter delves into the area of Linux memory management, with an emphasis on techniques that are useful to the device driver writer. The material in this chapter is somewhat advanced, and not everybody will need a grasp of it. Nonetheless, many tasks can only be done through digging more deeply into the memory management subsystem; it also provides an interesting look into how an important part of the kernel works. The material in this chapter is divided into three sections. The first covers the implementation of the mmap system call, which allows the mapping of device memory directly into a user process's address space. We then cover the kernel kiobuf mechanism, which provides direct access to user memory from kernel space. The kiobuf system may be used to implement "raw I/O" for certain kinds of devices. The final section covers direct memory access (DMA) I/O operations, which essentially provide peripherals with direct access to system memory. Of course, all of these techniques require an understanding of how Linux memory management works, so we start with an overview of that subsystem. Memory Management in Linux Rather than describing the theory of memory management in operating systems, this section tries to pinpoint the main features of the Linux implementation of the theory. Although you do not need to be a Linux virtual memory guru to implement mmap, a basic overview of how things work is useful. What follows is a fairly lengthy description of the data structures used by the kernel to manage memory.

An Introduction to Linux IPC

An introduction to Linux IPC Michael Kerrisk © 2013 linux.conf.au 2013, Canberra, Australia, 2013-01-30 http://man7.org/ http://lwn.net/ [email protected] [email protected] Goal ● Limited time! ● Get a flavor of main IPC methods Me ● Programming on UNIX & Linux since 1987 ● Linux man-pages maintainer ● http://www.kernel.org/doc/man-pages/ ● Kernel + glibc API ● Author of: Further info: http://man7.org/tlpi/ You ● Can read a bit of C ● Have a passing familiarity with common syscalls ● fork(), open(), read(), write() There's a lot of IPC ● Pipes ● FIFOs ● Pseudoterminals ● Sockets ● Stream vs Datagram (vs Seq. packet) ● UNIX vs Internet domain ● POSIX message queues ● POSIX shared memory ● POSIX semaphores ● Named, Unnamed ● System V message queues ● System V shared memory ● System V semaphores ● Shared memory mappings ● File vs Anonymous ● Cross-memory attach ● proc_vm_readv() / proc_vm_writev() ● Signals ● Standard, Realtime ● Eventfd ● Futexes ● Record locks ● File locks ● Mutexes ● Condition variables ● Barriers ● Read-write locks It helps to classify

I.MX Linux® Reference Manual

i.MX Linux® Reference Manual Document Number: IMXLXRM Rev. 1, 01/2017 NXP Semiconductors
Contents
Chapter 1 About this Book
1.1 Audience
1.1.1 Conventions
1.1.2 Definitions, Acronyms, and Abbreviations
Chapter 2 Introduction
2.1 Overview
2.1.1 Software Base
2.1.2 Features
Chapter 3 Machine-Specific Layer (MSL)
3.1 Introduction
3.2 Interrupts (Operation)

Networking with Wicked in SUSE® Linux Enterprise 12

Networking with Wicked in SUSE® Linux Enterprise 12: Something Wicked This Way Comes. Solution Guide, Server. www.suse.com Wicked QuickStart Guide Abstract: Introduced with SUSE® Linux Enterprise 12, Wicked is the new network management tool for Linux, largely replacing the sysconfig package to manage the ever-more-complicated network configurations. Wicked provides network configuration as a service, enabling you to change your configuration dynamically. This paper covers the basics of Wicked with an emphasis on providing correlations between how things were done previously and how they need to be done now. Introduction When S.u.S.E. first introduced its Linux distribution, networking requirements were relatively simple and static. Over time networking evolved to become far more complex and dynamic. For example, automatic address configuration protocols such as DHCP or IPv6 auto-configuration entered the picture along with a plethora of new classes of network devices. While this evolution was happening, the concepts behind managing a Linux system's network configuration didn't change much. The basic idea of storing a configuration in some files and applying it at system boot up using a collection of scripts and system programs was pretty much the same. Recently, new technologies have accelerated the trend toward complexity. Virtualization requires on-demand provisioning of resources, including networks. Converged networks that mix data and storage traffic on a shared link require a tighter integration between stacks that were previously mostly independent. Today, more than 20 years after the first SUSE distribution, network configurations are very difficult to manage properly, let alone easily (see Figure 1). Modern Network Landscape

O'reilly Linux Kernel in a Nutshell.Pdf

,title.4229 Page i Friday, December 1, 2006 9:52 AM LINUX KERNEL IN A NUTSHELL Other Linux resources from O'Reilly. Related titles: Building Embedded Linux Systems; Linux Device Drivers; Linux in a Nutshell; Linux Pocket Guide; Running Linux; Understanding Linux Network Internals; Understanding the Linux Kernel. Linux Books Resource Center: linux.oreilly.com is a complete catalog of O'Reilly's books on Linux and Unix and related technologies, including sample chapters and code examples. Conferences: O'Reilly brings diverse innovators together to nurture the ideas that spark revolutionary industries. We specialize in documenting the latest tools and systems, translating the innovator's knowledge into useful skills for those in the trenches. Visit conferences.oreilly.com for our upcoming events. Safari Bookshelf (safari.oreilly.com) is the premier online reference library for programmers and IT professionals. Conduct searches across more than 1,000 books. Subscribers can zero in on answers to time-critical questions in a matter of seconds. Read the books on your Bookshelf from cover to cover or simply flip to the page you need. Try it today for free. LINUX KERNEL IN A NUTSHELL Greg Kroah-Hartman Beijing • Cambridge • Farnham • Köln • Paris • Sebastopol • Taipei • Tokyo Table of Contents Preface Part I. Building the Kernel 1. Introduction Using This Book 2. Requirements for Building and Using the Kernel

Table of Contents

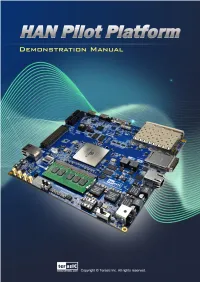

TABLE OF CONTENTS
Chapter 1 Introduction
Chapter 2 Examples for FPGA
2.1 Factory Default Code
2.2 Nios II Control for Programmable PLL/ Temperature/ Power/ 9-axis
2.3 Nios DDR4 SDRAM Test
2.4 RTL DDR4 SDRAM Test
2.5 USB Type-C DisplayPort Alternate Mode
2.6 USB Type-C FX3 Loopback
2.7 HDMI TX and RX in 4K Resolution
2.8 HDMI TX in 4K Resolution
2.9 Low Latency Ethernet 10G MAC Demo
2.10 Socket

Communicating Between the Kernel and User-Space in Linux Using Netlink Sockets

SOFTWARE—PRACTICE AND EXPERIENCE Softw. Pract. Exper. 2010; 00:1–7 Prepared using speauth.cls [Version: 2002/09/23 v2.2] Communicating between the kernel and user-space in Linux using Netlink sockets Pablo Neira Ayuso (1), Rafael M. Gasca (1) and Laurent Lefevre (2). (1) QUIVIR Research Group, Department of Computer Languages and Systems, University of Seville, Spain. (2) RESO/LIP team, INRIA, University of Lyon, France. Correspondence to: Pablo Neira Ayuso, ETS Ingenieria Informatica, Department of Computer Languages and Systems. SUMMARY When developing Linux kernel features, it is a good practise to expose the necessary details to user-space to enable extensibility. This allows the development of new features and sophisticated configurations from user-space. Commonly, software developers have to face the task of looking for a good way to communicate between kernel and user-space in Linux. This tutorial introduces you to Netlink sockets, a flexible and extensible messaging system that provides communication between kernel and user-space. In this tutorial, we provide fundamental guidelines for practitioners who wish to develop Netlink-based interfaces. key words: kernel interfaces, netlink, linux 1. INTRODUCTION Portable open-source operating systems like Linux [1] provide a good environment to develop applications for the real world since they can be used on very different platforms: from very small embedded devices, like smartphones and PDAs, to standalone computers and large scale clusters. Moreover, the availability of the source code also allows its study and modification; this renders Linux useful for both industry and academia. The core of Linux, like many modern operating systems, follows a monolithic design for performance reasons. The main bricks that compose the operating system are implemented
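To make the "messaging system" idea in the excerpt above concrete, here is a minimal Python sketch of how a single Netlink message is framed. It is not taken from the paper. It assumes the 16-byte `struct nlmsghdr` layout from `<linux/netlink.h>` (length, type, flags, sequence number, port ID) and 4-byte message alignment. `DEMO_TYPE`, `pack_nlmsg` and `unpack_nlmsg` are hypothetical names chosen for illustration, and no socket is opened, so the sketch runs on any platform.

```python
import struct

# Assumed layout of struct nlmsghdr: u32 len, u16 type, u16 flags, u32 seq, u32 pid.
# "=" selects native byte order with standard sizes, giving 16 bytes in total.
NLMSG_HDR = struct.Struct("=IHHII")

NLM_F_REQUEST = 0x01  # flag marking a request message
DEMO_TYPE = 0x10      # hypothetical message type, used only in this sketch


def nlmsg_align(length):
    """Round a length up to the 4-byte boundary Netlink messages use."""
    return (length + 3) & ~3


def pack_nlmsg(msg_type, flags, seq, payload=b"", pid=0):
    """Frame a payload: header first, then payload, then zero padding."""
    length = NLMSG_HDR.size + len(payload)  # the length field excludes padding
    padding = b"\0" * (nlmsg_align(length) - length)
    return NLMSG_HDR.pack(length, msg_type, flags, seq, pid) + payload + padding


def unpack_nlmsg(data):
    """Split one framed message back into its header fields and payload."""
    length, msg_type, flags, seq, pid = NLMSG_HDR.unpack_from(data)
    return msg_type, flags, seq, pid, data[NLMSG_HDR.size:length]


if __name__ == "__main__":
    message = pack_nlmsg(DEMO_TYPE, NLM_F_REQUEST, seq=1, payload=b"hi!")
    print(len(message), unpack_nlmsg(message))
```

On Linux, a buffer framed like this would typically be sent over a socket created with `socket.socket(socket.AF_NETLINK, socket.SOCK_RAW, protocol)`. The payload expected by any real Netlink family is defined by that family and is not shown here.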

IPC: Mmap and Pipes

Interprocess Communication: Memory mapped files and pipes CS 241 April 4, 2014 University of Illinois Shared Memory Private address space (Process A) / OS address space with shared segment / Private address space (Process B) Processes request the segment OS maintains the segment Processes can attach/detach the segment Shared Memory Can mark segment for deletion on last detach Shared Memory example /* make the key: */ if ((key = ftok("shmdemo.c", 'R')) == -1) { perror("ftok"); exit(1); } /* connect to (and possibly create) the segment: */ if ((shmid = shmget(key, SHM_SIZE, 0644 | IPC_CREAT)) == -1) { perror("shmget"); exit(1); } /* attach to the segment to get a pointer to it: */ data = shmat(shmid, (void *)0, 0); if (data == (char *)(-1)) { perror("shmat"); exit(1); } Shared Memory example /* read or modify the segment, based on the command line: */ if (argc == 2) { printf("writing to segment: \"%s\"\n", argv[1]); strncpy(data, argv[1], SHM_SIZE); } else printf("segment contains: \"%s\"\n", data); /* detach from the segment: */ if (shmdt(data) == -1) { perror("shmdt"); exit(1); } return 0; } Run demo Memory Mapped Files Memory-mapped file I/O • Map a disk block to a page in memory • Allows file I/O to be treated as routine memory access Use • File is initially read using demand paging – i.e., loaded from disk to memory only at the moment it's needed • When needed, a page-sized portion of the file is read from the file system
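The memory-mapped file idea in the excerpt above (file I/O treated as routine memory access) can be sketched with Python's standard `mmap` module. This is an illustrative sketch, not code from the slides. `patch_file_via_mmap` and `demo` are hypothetical helper names, and the temporary file stands in for whatever file a real program would map.

```python
import mmap
import os
import tempfile


def patch_file_via_mmap(path, offset, data):
    """Overwrite bytes in a file by assigning into its memory mapping."""
    with open(path, "r+b") as f:
        # Length 0 maps the whole file; the file must not be empty.
        with mmap.mmap(f.fileno(), 0) as mapped:
            # Slice assignment must keep the length unchanged.
            mapped[offset:offset + len(data)] = data
            mapped.flush()  # push the modified pages back to the file


def demo():
    """Create a scratch file, patch it through a mapping, read it back."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(b"hello world")
        patch_file_via_mmap(path, 6, b"mmap!")
        with open(path, "rb") as f:
            return f.read()
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

No explicit `write()` call touches the file after it is created: the change is made with an ordinary slice assignment, which is the property the slides describe.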

A Machine-Independent DMA Framework for Netbsd

A Machine-Independent DMA Framework for NetBSD Jason R. Thorpe, Numerical Aerospace Simulation Facility, NASA Ames Research Center Abstract One of the challenges in implementing a portable kernel is finding good abstractions for semantically-similar operations which often have very machine-dependent implementations. This is especially important on modern machines which share common architectural features, e.g. the PCI bus. This paper describes why a machine-independent DMA mapping abstraction is needed, the design considerations for such an abstraction, and the implementation of this abstraction in the NetBSD/alpha and NetBSD/i386 kernels. 1. Introduction NetBSD is a portable, modern UNIX-like operating system which currently runs on eighteen platforms covering nine processor architectures. Some of these platforms, including the Alpha and i386, share the PCI bus as a common architectural feature. In order to share device drivers for PCI devices between different platforms, abstractions that hide the details of bus access must be invented. The details that must be hidden can be broken down into two classes: CPU access to devices on the bus (bus_space) and device access to host memory (bus_dma). Here we will discuss the latter; bus_space is a complicated topic in and of itself, and is beyond the scope of this paper. 1.1. Host platform details In the example platforms listed above, there are at least three different mechanisms used to perform DMA. The first is used by the i386 platform. This mechanism can be described as "what you see is what you get": the address that the device uses to perform the DMA transfer is the same address that the host CPU uses to access the memory location in question. [Figure 1 - WYSIWYG DMA: DMA address, Host address] The second mechanism, employed by the Alpha, is very similar to the first; the address the host CPU