Ability of Influenza Case Detection for Disease Surveillance

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Intelligence and Security Informatics

CHAPTER 6 Intelligence and Security Informatics Hsinchun Chen and Jennifer Xu University of Arizona ISI: Challenges and Research Framework The tragic events of September 11, 2001, and the subsequent anthrax scare had profound effects on many aspects of society. Terrorism has become the most significant threat to domestic security because of its potential to bring massive damage to the nation’s infrastructure and economy. In response to this challenge, federal authorities are actively implementing comprehensive strategies and measures to achieve the three objectives identified in the “National Strategy for Homeland Security” report (U.S. Office of Homeland Security, 2002): (1) preventing future terrorist attacks, (2) reducing the nation’s vulnerability, and (3) minimizing the damage and expediting recovery from attacks that occur. State and local law enforcement agencies, likewise, have become more vigilant about criminal activities that can threaten public safety and national security. Academics in the natural sciences, computational science, information science, social sciences, engineering, medicine, and many other fields have also been called upon to help enhance the government’s capabilities to fight terrorism and other crime. Science and technology have been identified in the “National Strategy for Homeland Security” report as the keys to winning the new counter-terrorism war (U.S. Office of Homeland Security, 2002). In particular, it is believed that information technology and information management will play indispensable roles in making the nation safer (Cronin, 2005; Davies, 2002; National Research Council, 2002) by supporting intelligence and knowledge discovery through collecting, processing, analyzing, and utilizing terrorism- and crime-related data (Badiru, Karasz, & Holloway, 1988; Chen, Miranda, Zeng, Demchak, Schroeder, & Madhusudan, 2003; Chen, Moore, Zeng, & Leavitt, 2004). -

Visualization and Analytics Tools for Infectious Disease Epidemiology: A Systematic Review

YJBIN 2161, No. of Pages 12, Model 5G, 18 April 2014. Journal of Biomedical Informatics xxx (2014) xxx–xxx. Contents lists available at ScienceDirect. Journal of Biomedical Informatics, journal homepage: www.elsevier.com/locate/yjbin. Methodological Review. Visualization and analytics tools for infectious disease epidemiology: A systematic review. Lauren N. Carroll (a), Alan P. Au (a), Landon Todd Detwiler (b), Tsung-chieh Fu (c), Ian S. Painter (d), Neil F. Abernethy (a,d,⇑). (a) Department of Biomedical Informatics and Medical Education, University of Washington, 850 Republican St., Box 358047, Seattle, WA 98109, United States; (b) Department of Biological Structure, University of Washington, 1959 NE Pacific St., Box 357420, United States; (c) Department of Epidemiology, University of Washington, 850 Republican St., Box 358047, Seattle, WA 98109, United States; (d) Department of Health Services, University of Washington, 1959 NE Pacific St., Box 359442, Seattle, WA 98195, United States. Article history: Received 13 September 2013; Accepted 3 April 2014; Available online xxxx. Abstract. Background: A myriad of new tools and algorithms have been developed to help public health professionals analyze and visualize the complex data used in infectious disease control. To better understand approaches to meet these users’ information needs, we conducted a systematic literature review focused on the landscape of infectious disease visualization tools for public health professionals, with a special emphasis on geographic information systems (GIS), molecular epidemiology, and social network analysis. -

Component 5: History of Health Information Technology in the U.S

Component 5: History of Health Information Technology in the U.S. Instructor Manual Version 3.0/Spring 2012 Notes to Instructors This Instructor Manual is a resource for instructors using this component. Each component is broken down into units, which include the following elements: • Learning objectives • Suggested student readings, texts, reference links to supplement the narrated PowerPoint slides • Lectures (voiceover PowerPoint in Flash format); PowerPoint slides (Microsoft PowerPoint format), lecture transcripts (Microsoft Word format); and audio files (MP3 format) for each lecture • Self-assessment questions reflecting Unit Objectives with answer keys and/or expected outcomes • Application Activities (e.g., discussion questions, assignments, projects) with instructor guidelines, answer keys and/or expected outcomes Health IT Workforce Curriculum, History of Health Information Technology in the U.S., Version 3.0/Spring 2012. This material was developed by The University of Alabama at Birmingham, funded by the Department of Health and Human Services, Office of the National Coordinator for Health Information Technology under Award Number 1U24OC000023. Contents: Notes to Instructors; Disclaimer; Component Overview; Component Objectives -

Medical Informatics

MEDICAL INFORMATICS Knowledge Management and Data Mining in Biomedicine INTEGRATED SERIES IN INFORMATION SYSTEMS Series Editors: Professor Ramesh Sharda, Oklahoma State University; Prof. Dr. Stefan Voß, Universität Hamburg. Other published titles in the series: E-BUSINESS MANAGEMENT: Integration of Web Technologies with Business Models / Michael J. Shaw; VIRTUAL CORPORATE UNIVERSITIES: A Matrix of Knowledge and Learning for the New Digital Dawn / Walter R.J. Baets & Gert Van der Linden; SCALABLE ENTERPRISE SYSTEMS: An Introduction to Recent Advances / edited by Vittal Prabhu, Soundar Kumara, Manjunath Kamath; LEGAL PROGRAMMING: Legal Compliance for RFID and Software Agent Ecosystems in Retail Processes and Beyond / Brian Subirana and Malcolm Bain; LOGICAL DATA MODELING: What It Is and How To Do It / Alan Chmura and J. Mark Heumann; DESIGNING AND EVALUATING E-MANAGEMENT DECISION TOOLS: The Integration of Decision and Negotiation Models into Internet-Multimedia Technologies / Giampiero E.G. Beroggi; INFORMATION AND MANAGEMENT SYSTEMS FOR PRODUCT CUSTOMIZATION / Blecker, Friedrich, Kaluza, Abdelkafi & Kreutler. MEDICAL INFORMATICS Knowledge Management and Data Mining in Biomedicine, edited by Hsinchun Chen, Sherrilynne S. Fuller, Carol Friedman, William Hersh. Springer. Hsinchun Chen, The University of Arizona, USA; Sherrilynne S. Fuller, University of Washington, USA; Carol Friedman, Columbia University, USA; William Hersh, Oregon Health & Science Univ., USA. Library of Congress Cataloging-in-Publication Data: A C.I.P. Catalogue record for this book is available from the Library of Congress. ISBN-10: 0-387-24381-X (HB); ISBN-10: 0-387-25739-X (e-book); ISBN-13: 978-0387-24381-8 (HB); ISBN-13: 978-0387-25739-6 (e-book). © 2005 by Springer Science+Business Media, Inc. -

Development and Evaluation of Leveraging Biomedical

DEVELOPMENT AND EVALUATION OF LEVERAGING BIOMEDICAL INFORMATICS TECHNIQUES TO ENHANCE PUBLIC HEALTH SURVEILLANCE by Kailah T. Davis. A dissertation submitted to the faculty of The University of Utah in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Department of Biomedical Informatics, The University of Utah, August 2014. Copyright © Kailah T. Davis 2014. All Rights Reserved. The University of Utah Graduate School STATEMENT OF DISSERTATION APPROVAL The dissertation of Kailah T. Davis has been approved by the following supervisory committee members: Julio Facelli, Chair (date approved 1/27/2014); Catherine Staes, Member (date approved 2/06/2014); Leslie Lenert, Member (date approved 2/03/2014); Per Gesteland, Member (date approved 2/12/2014); Robert Kessler, Member (date approved 6/10/2014); and by Wendy Chapman, Chair of the Department of Biomedical Informatics, and by David B. Kieda, Dean of The Graduate School. ABSTRACT Public health surveillance systems are crucial for the timely detection and response to public health threats. Since the terrorist attacks of September 11, 2001, and the release of anthrax in the following month, there has been a heightened interest in public health surveillance. The years immediately following these attacks were -

Continuous Artificial Prediction Markets As a Syndromic

Continuous Artificial Prediction Markets as a Syndromic Surveillance Technique Fatemeh Jahedpari 1 Syndromic Surveillance Appearance of highly virulent viruses warrant early detection of outbreaks to protect community health. The main goal of public health surveillance and more specifically `syndromic surveillance systems' is early detection of an outbreak in a society using available data sources. In this paper, we discuss what are the challenges of syndromic surveillance systems and how continuous Artificial Prediction Market (c-APM) [Jahedpari et al., 2017] can effectively be applied to the problem of syndromic surveillance. c-APM can effectively be applied to the problem of syndromic surveillance by analysing each data source with a selection of algorithms and integrating their results according to an adaptive weighting scheme. Section 2 provides an introduction and explains syndromic surveillance. Then, we discuss the syndromic surveillance data sources in Section 3 and present some syndromic surveillance systems in Section 4. The statement of the problem in this field is covered in Section 5. After that, we discuss Google Flu Trends (GFT) and GP model [Lampos et al., 2015], which is proposed by Google Flu Trends team to improve GFT engine performance, in Section 6 and 7 respectively. Also, in these sections, we evaluate the performance of c-APM as a syndromic surveillance system. Finally, Section 8 provides the conclusion of this paper. 2 Introduction According to the World Health Organisation (WHO) [World Health Organization, 2013], the United Nations directing and coordinating health authority, public health surveillance is: The continuous, systematic collection, analysis and interpretation of health-related data needed for the planning, implementation, and evaluation of public health practice. -

An Educational & Analytical Investigation

International Journal of Applied Science and Engineering, 8(1): 01-09, June 2020 DOI: ©2020 New Delhi Publishers. All rights reserved Informatics: Foundation, Nature, Types and Allied Areas - An Educational & Analytical Investigation P. K. Paul1* and P.S. Aithal2 1Executive Director, MCIS, Department of CIS, Information Scientist (Offg.), Raiganj University (RGU), West Bengal, India 2Vice Chancellor, Srinivas University, Karnataka, India *Corresponding author: [email protected] ABSTRACT Informatics is an emerging subject concerned with both Information Technology and Management Science. It is closer to Information Science, Information Systems, and Information Technology than to Computer Science, Computer Engineering, Computer Application, etc. The term Informatics is widely used as an alternative to Information Science and IT in many countries. Apart from the IT and Computing stream, the field ‘Informatics’ is also available in Departments, Units, etc. of, viz., Management Sciences, Health Sciences, Environmental Sciences, Social Sciences, etc., based on the nature of the Informatics. Informatics can be seen as technology based or as domain or field specific, depending upon its nature, and thus it is emerging as an interdisciplinary science. A few dimensions of Informatics can be noted, viz. Bio Sciences, Pure & Mathematical Sciences, Social Sciences, Management Sciences, Legal & Educational Sciences, etc. This conceptual paper deals with an academic investigation of the Informatics branch, both internationally and in India. Keywords: Informatics, IT, Informatics Systems, Biological Informatics, Emerging Subjects, Academics, Development Informatics is a developing field that cares about both technologies and information.
Initially, it was treated as a practicing nomenclature; it has now become a field of study and is growing internationally in different higher educational institutes, which are offering programs on simple ‘Informatics’ or on any domain-specific variant, viz. -

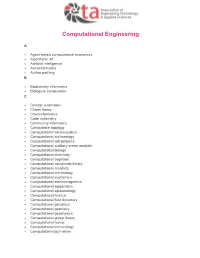

AETA-Computational-Engineering.Pdf

Computational Engineering A Agent-based computational economics Algorithmic art Artificial intelligence Astroinformatics Author profiling B Biodiversity informatics Biological computation C Cellular automaton Chaos theory Cheminformatics Code stylometry Community informatics Computable topology Computational aeroacoustics Computational archaeology Computational astrophysics Computational auditory scene analysis Computational biology Computational chemistry Computational cognition Computational complexity theory Computational creativity Computational criminology Computational economics Computational electromagnetics Computational epigenetics Computational epistemology Computational finance Computational fluid dynamics Computational genomics Computational geometry Computational geophysics Computational group theory Computational humor Computational immunology Computational journalism Computational law Computational learning theory Computational lexicology Computational linguistics Computational lithography Computational logic Computational magnetohydrodynamics Computational Materials Science Computational mechanics Computational musicology Computational neurogenetic modeling Computational neuroscience Computational number theory Computational particle physics Computational photography Computational physics Computational science Computational engineering Computational scientist Computational semantics Computational semiotics Computational social science Computational sociology Computational -

Development of Guidance on Ethics and Governance of Artificial Intelligence for Health

WHO Consultation Towards the Development of guidance on ethics and governance of artificial intelligence for health Geneva, Switzerland, 2–4 October 2019 Meeting report WHO consultation towards the development of guidance on ethics and governance of artificial intelligence for health: meeting report, Geneva, Switzerland, 2–4 October 2019 ISBN 978-92-4-001275-2 (electronic version) ISBN 978-92-4-001276-9 (print version) © World Health Organization 2021 Some rights reserved. This work is available under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 IGO licence (CC BY-NC-SA 3.0 IGO; https://creativecommons.org/licenses/by-nc-sa/3.0/igo). Under the terms of this licence, you may copy, redistribute and adapt the work for non-commercial purposes, provided the work is appropriately cited, as indicated below. In any use of this work, there should be no suggestion that WHO endorses any specific organization, products or services. The use of the WHO logo is not permitted. If you adapt the work, then you must license your work under the same or equivalent Creative Commons licence. If you create a translation of this work, you should add the following disclaimer along with the suggested citation: “This translation was not created by the World Health Organization (WHO). WHO is not responsible for the content or accuracy of this translation. The original English edition shall be the binding and authentic edition”. Any mediation relating to disputes arising under the licence shall be conducted in accordance with the mediation rules of the World Intellectual Property Organization (http://www.wipo.int/amc/en/mediation/rules/). -

Dmitry Korkin, Ph.D. E-Mail: [email protected] WWW

Bioinformatics and Computational Biology Program Department of Computer Science Worcester Polytechnic Institute, Worcester, MA Dmitry Korkin, Ph.D. e-mail: [email protected] WWW: http://korkinlab.org Education • Postdoc in Bioinformatics and Computational Biology University of California San Francisco: 2003-2007; Rockefeller University: 2002 Research topic: Structural bioinformatics and systems biology of protein-protein interactions • Ph.D. in Computer Science Faculty of Computer Science, University of New Brunswick, NB, Canada: 2003 Research topic: Machine learning with applications to computer-aided drug design • M.Sc. in Mathematics, Applied Mathematics Faculty of Mechanics and Mathematics, Moscow State University, Moscow, Russia: 1999 Research Topic: Application of information theory to Security Policy • B.Sc. in Mathematics, Applied Mathematics Faculty of Mechanics and Mathematics, Moscow State University, Moscow, Russia: 1997 Academic positions • Director, Bioinformatics and Computational Biology Program 2017-present Worcester Polytechnic Institute, Worcester, MA, USA • Associate Professor in Bioinformatics and Computer Science with tenure 2014–present Dept. of Computer Science and Program in Bioinformatics and Computational Biology Courtesy appointment with Data Science Program and Depts. of Applied Mathematics, Biology and Biotechnology, Worcester Polytechnic Institute, MA, USA • Associate Professor in Bioinformatics and Computer Science with tenure 2013–2014 Dept. of Computer Science and Informatics Institute, University of Missouri-Columbia -

Infectious Disease Informatics and Outbreak Detection

Chapter 13 INFECTIOUS DISEASE INFORMATICS AND OUTBREAK DETECTION Daniel Zeng (1), Hsinchun Chen (1), Cecil Lynch (2), Millicent Eidson (3), and Ivan Gotham (3). (1) Management Information Systems Department, Eller College of Management, University of Arizona, Tucson, Arizona 85721; (2) Division of Medical Informatics, School of Medicine, University of California, Davis, California 95616; also with California Department of Health Services; (3) New York State Department of Health, Albany, New York 12220; also with School of Public Health, University at Albany. Chapter Overview Infectious disease informatics is an emerging field that studies data collection, sharing, modeling, and management issues in the domain of infectious diseases. This chapter provides an overview of this field with specific emphasis on the following two sets of topics: (a) the design and main system components of an infectious disease information infrastructure, and (b) spatio-temporal data analysis and modeling techniques used to identify possible disease outbreaks. Several case studies involving real-world applications and research prototypes are presented to illustrate the application context and relevant system design and data modeling issues. Keywords: infectious disease informatics; data and messaging standards; outbreak detection; hotspot analysis; data visualization. 1. INTRODUCTION Infectious or communicable diseases have been a risk for human society since the onset of the human race. The large-scale spread of infectious diseases often has a major impact on the society and individuals alike and sometimes determines the course of history (McNeill, 1976). Infectious diseases are still a fact of modern life. Outbreaks of new diseases such as AIDS are causing major problems across the world and known, treatable diseases in developing countries still pose serious threats to human life and exact heavy tolls from nations' economies (Pinner et al., 2003). -

Mobile Communications for Medical Care a Study of Current and Future Healthcare and Health Promotion Applications, and Their Use in China and Elsewhere

Mobile Communications for Medical Care: a study of current and future healthcare and health promotion applications, and their use in China and elsewhere. Final Report, 21 April 2011. Contents: Executive Summary; About the Authors; Foreword by China Mobile; 1. Objectives, Approach and Overview of the mHealth Market; 2. Overview of Existing Applications of mHealth; 3. Overview of Potential Next-Generation Applications of mHealth; 4. Illustrative Business Cases for mHealth Provision; 5. Realising the Health Benefits to Society; 6. Deployment of mHealth Applications; Special Feature: Summary of Case Studies of mHealth Deployments in China; Annexes: A. mHealth Applications Identified in the Course of the Project; B. Participants in the Workshop and the Interview Programme; References. Executive Summary This report examines the use of mobile networks to enhance healthcare (so-called “mHealth”), as an example of how mobile communications can contribute to sustainable development. We define mHealth as “a service or application that involves voice or data communication for health purposes between a central point and remote locations. It includes telehealth (or eHealth) applications if delivery over a mobile network adds utility to the application. It also includes the use of mobile phones and other devices as platforms for local health-related purposes as long as there is some use of a network.” Innovative mHealth applications have the potential to transform healthcare in both the developing and the developed world.