Small-Set Expansion in Shortcode Graph and the 2-to-2 Conjecture

Total Page:16



Recommended publications


The Strongish Planted Clique Hypothesis and Its Consequences

The Strongish Planted Clique Hypothesis and Its Consequences. Pasin Manurangsi (Google Research, Mountain View, CA, USA), Aviad Rubinstein (Stanford University, CA, USA), Tselil Schramm (Stanford University, CA, USA). Abstract: We formulate a new hardness assumption, the Strongish Planted Clique Hypothesis (SPCH), which postulates that any algorithm for planted clique must run in time $n^{\Omega(\log n)}$ (so that the state-of-the-art running time of $n^{O(\log n)}$ is optimal up to a constant in the exponent). We provide two sets of applications of the new hypothesis. First, we show that SPCH implies (nearly) tight inapproximability results for the following well-studied problems in terms of the parameter k: Densest k-Subgraph, Smallest k-Edge Subgraph, Densest k-Subhypergraph, Steiner k-Forest, and Directed Steiner Network with k terminal pairs. For example, we show, under SPCH, that no polynomial time algorithm achieves o(k)-approximation for Densest k-Subgraph. This inapproximability ratio improves upon the previous best $k^{o(1)}$ factor from (Chalermsook et al., FOCS 2017). Furthermore, our lower bounds hold even against fixed-parameter tractable algorithms with parameter k. Our second application focuses on the complexity of graph pattern detection. For both induced and non-induced graph pattern detection, we prove hardness results under SPCH, improving the running time lower bounds obtained by (Dalirrooyfard et al., STOC 2019) under the Exponential Time Hypothesis. 2012 ACM Subject Classification: Theory of computation → Problems, reductions and completeness; Theory of computation → Fixed parameter tractability. Keywords and phrases: Planted Clique, Densest k-Subgraph, Hardness of Approximation. Digital Object Identifier: 10.4230/LIPIcs.ITCS.2021.10. Related Version: A full version of the paper is available at https://arxiv.org/abs/2011.05555.

Approximating Cumulative Pebbling Cost Is Unique Games Hard

Approximating Cumulative Pebbling Cost Is Unique Games Hard. Jeremiah Blocki (Department of Computer Science, Purdue University, West Lafayette, IN, USA, https://www.cs.purdue.edu/homes/jblocki), Seunghoon Lee (Department of Computer Science, Purdue University, West Lafayette, IN, USA, https://www.cs.purdue.edu/homes/lee2856), Samson Zhou (School of Computer Science, Carnegie Mellon University, Pittsburgh, PA, USA, https://samsonzhou.github.io/). Abstract: The cumulative pebbling complexity of a directed acyclic graph G is defined as $\mathrm{cc}(G) = \min_P \sum_i |P_i|$, where the minimum is taken over all legal (parallel) black pebblings of G and $|P_i|$ denotes the number of pebbles on the graph during round i. Intuitively, cc(G) captures the amortized Space-Time complexity of pebbling m copies of G in parallel. The cumulative pebbling complexity of a graph G is of particular interest in the field of cryptography as cc(G) is tightly related to the amortized Area-Time complexity of the Data-Independent Memory-Hard Function (iMHF) $f_{G,H}$ [7] defined using a constant indegree directed acyclic graph (DAG) G and a random oracle H(·). A secure iMHF should have amortized Space-Time complexity as high as possible, e.g., to deter a brute-force password attacker who wants to find x such that $f_{G,H}(x) = h$. Thus, to analyze the (in)security of a candidate iMHF $f_{G,H}$, it is crucial to estimate the value cc(G), but currently, upper and lower bounds for leading iMHF candidates differ by several orders of magnitude. Blocki and Zhou recently showed that it is NP-Hard to compute cc(G), but their techniques do not even rule out an efficient (1 + ε)-approximation algorithm for any constant ε > 0.
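The quantity $\sum_i |P_i|$ in the abstract above can be illustrated with a small Python sketch. It only evaluates the cost of one given pebbling and does not attempt the minimization over all pebblings, which the abstract notes is NP-Hard. The legality rule used here (a pebble may be newly placed on a node only if all of its parents carried pebbles in the previous round, and every sink must be pebbled at some point) is the standard parallel black pebbling rule and is an assumption, since the snippet does not spell it out; the function names and the `parents`/`sinks` encoding are hypothetical.

```python
from typing import Dict, List, Set


def is_legal_pebbling(parents: Dict[int, Set[int]],
                      sinks: Set[int],
                      rounds: List[Set[int]]) -> bool:
    """Check the (assumed) parallel black pebbling rule for a DAG.

    parents[v] is the set of predecessors of node v; rounds[i] is the
    set of pebbled nodes P_{i+1}. The configuration before round 1 is empty.
    """
    previous: Set[int] = set()
    pebbled_ever: Set[int] = set()
    for current in rounds:
        # A newly placed pebble needs all parents pebbled in the previous round.
        for node in current - previous:
            if not parents.get(node, set()) <= previous:
                return False
        pebbled_ever |= current
        previous = current
    # Every sink must have carried a pebble in some round.
    return sinks <= pebbled_ever


def cumulative_cost(rounds: List[Set[int]]) -> int:
    """Cumulative cost of one pebbling: the sum of |P_i| over all rounds."""
    return sum(len(pebbled) for pebbled in rounds)


if __name__ == "__main__":
    # Path graph 1 -> 2 -> 3 with sink 3.
    path_parents = {1: set(), 2: {1}, 3: {2}}
    pebbling = [{1}, {2}, {3}]
    print(is_legal_pebbling(path_parents, {3}, pebbling))  # True
    print(cumulative_cost(pebbling))                       # 3
```

On the three-node path, keeping only one pebble per round is legal and costs 3; cc(G) is the minimum of this cost over all legal pebblings of G.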

A New Point of NP-Hardness for Unique Games

A new point of NP-hardness for Unique Games. Ryan O'Donnell∗, John Wright†. September 30, 2012. Abstract: We show that distinguishing $\frac{1}{2}$-satisfiable Unique-Games instances from $(\frac{3}{8} + \epsilon)$-satisfiable instances is NP-hard (for all $\epsilon > 0$). A consequence is that we match or improve the best known c vs. s NP-hardness result for Unique-Games for all values of c (except for c very close to 0). For these c, ours is the first hardness result showing that it helps to take the alphabet size larger than 2. Our NP-hardness reductions are quasilinear-size and thus show nearly full exponential time is required, assuming the ETH. ∗ Department of Computer Science, Carnegie Mellon University. Supported by NSF grants CCF-0747250 and CCF-0915893, and by a Sloan fellowship. Some of this research performed while the author was a von Neumann Fellow at the Institute for Advanced Study, supported by NSF grants DMS-083537 and CCF-0832797. † Department of Computer Science, Carnegie Mellon University. 1 Introduction. Thanks largely to the groundbreaking work of Håstad [Hås01], we have optimal NP-hardness of approximation results for several constraint satisfaction problems (CSPs), including 3Lin($\mathbb{Z}_2$) and 3Sat. But for many others, including most interesting CSPs with 2-variable constraints, we lack matching algorithmic and NP-hardness results. Take the 2Lin($\mathbb{Z}_2$) problem for example, in which there are Boolean variables with constraints of the form "$x_i = x_j$" and "$x_i \neq x_j$". The largest approximation ratio known to be achievable in polynomial time is roughly 0.878 [GW95], whereas it is only known that achieving ratios above $\frac{11}{12} \approx 0.917$ is NP-hard [Hås01, TSSW00].

Research Statement

RESEARCH STATEMENT. SAM HOPKINS. Introduction: The last decade has seen enormous improvements in the practice, prevalence, and usefulness of machine learning. Deep nets give our phones ears; matrix completion gives our televisions good taste. Data-driven artificial intelligence has become capable of the difficult (driving a car on a highway) and the nearly impossible (distinguishing cat pictures without human intervention) [18]. This is the stuff of science fiction. But we are far behind in explaining why many algorithms, even now-commonplace ones for relatively elementary tasks, work so stunningly well as they do in the wild. In general, we can prove very little about the performance of these algorithms; in many cases provable performance guarantees for them appear to be beyond attack by our best proof techniques. This means that our current-best explanations for how such algorithms can be so successful resort eventually to (intelligent) guesswork and heuristic analysis. This is not only a problem for our intellectual honesty. As machine learning shows up in more and more mission-critical applications it is essential that we understand the guarantees and limits of our approaches with the certainty afforded only by rigorous mathematical analysis. The theory-of-computing community is only beginning to equip itself with the tools to make rigorous attacks on learning problems. The sophisticated learning pipelines used in practice are built layer-by-layer from simpler fundamental statistical inference algorithms. We can address these statistical inference problems rigorously, but only by first landing on tractable problem definitions. In their traditional theory-of-computing formulations, many of these inference problems have intractable worst-case instances.

Limits of Approximation Algorithms: PCPs and Unique Games (DIMACS Tutorial Lecture Notes)

Limits of Approximation Algorithms: PCPs and Unique Games (DIMACS Tutorial Lecture Notes)¹. Organisers: Prahladh Harsha & Moses Charikar. arXiv:1002.3864v1 [cs.CC] 20 Feb 2010. ¹ Jointly sponsored by the DIMACS Special Focus on Hardness of Approximation, the DIMACS Special Focus on Algorithmic Foundations of the Internet, and the Center for Computational Intractability with support from the National Security Agency and the National Science Foundation. Preface: These are the lecture notes for the DIMACS Tutorial Limits of Approximation Algorithms: PCPs and Unique Games held at the DIMACS Center, CoRE Building, Rutgers University on 20-21 July, 2009. This tutorial was jointly sponsored by the DIMACS Special Focus on Hardness of Approximation, the DIMACS Special Focus on Algorithmic Foundations of the Internet, and the Center for Computational Intractability with support from the National Security Agency and the National Science Foundation. The speakers at the tutorial were Matthew Andrews, Sanjeev Arora, Moses Charikar, Prahladh Harsha, Subhash Khot, Dana Moshkovitz and Lisa Zhang. We thank the scribes (Ashkan Aazami, Dev Desai, Igor Gorodezky, Geetha Jagannathan, Alexander S. Kulikov, Darakhshan J. Mir, Alantha Newman, Aleksandar Nikolov, David Pritchard and Gwen Spencer) for their thorough and meticulous work. Special thanks to Rebecca Wright and Tami Carpenter at DIMACS, but for whose organizational support and help this workshop would have been impossible. We thank Alantha Newman, a phone conversation with whom sparked the idea of this workshop. We thank Imdadullah Khan and Aleksandar Nikolov for video recording the lectures. The video recordings of the lectures will be posted at the DIMACS tutorial webpage http://dimacs.rutgers.edu/Workshops/Limits/. Any comments on these notes are always appreciated.

How to Play Unique Games Against a Semi-Random Adversary

How to Play Unique Games against a Semi-Random Adversary: Study of Semi-Random Models of Unique Games. Alexandra Kolla (Microsoft Research), Konstantin Makarychev (IBM Research), Yury Makarychev (TTIC). Abstract: In this paper, we study the average case complexity of the Unique Games problem. We propose a natural semi-random model, in which a unique game instance is generated in several steps. First an adversary selects a completely satisfiable instance of Unique Games, then she chooses an ε-fraction of all edges, and finally replaces ("corrupts") the constraints corresponding to these edges with new constraints. If all steps are adversarial, the adversary can obtain any (1 − ε) satisfiable instance, so then the problem is as hard as in the worst case. In our semi-random model, one of the steps is random, and all other steps are adversarial. We show that known algorithms for unique games (in particular, all algorithms that use the standard SDP relaxation) fail to solve semi-random instances of Unique Games. We present an algorithm that with high probability finds a solution satisfying a (1 − δ) fraction of all constraints in semi-random instances (we require that the average degree of the graph is $\tilde{\Omega}(\log k)$). To this end, we consider a new non-standard SDP program for Unique Games, which is not a relaxation for the problem, and show how to analyze it. We present a new rounding scheme that simultaneously uses SDP and LP solutions, which we believe is of independent interest. Our result holds only for ε less than some absolute constant. We prove that if ε ≥ 1/2, then the problem is hard in one of the models, that is, no polynomial-time algorithm can distinguish between the following two cases: (i) the instance is a (1 − ε) satisfiable semi-random instance and (ii) the instance is at most δ satisfiable (for every δ > 0); the result assumes the 2-to-2 conjecture.

Lecture 17: The Strong Exponential Time Hypothesis (1 Introduction; 2 ETH, SETH, and the Sparsification Lemma)

CS 354: Unfulfilled Algorithmic Fantasies, May 29, 2019. Lecture 17: The Strong Exponential Time Hypothesis. Lecturer: Joshua Brakensiek. Scribe: Weiyun Ma. 1 Introduction. We start with a recap of where we are. One of the biggest hardness assumptions in history is P ≠ NP, which came around in the 1970s. This assumption is useful for a number of hardness results, but often people need to make stronger assumptions. For example, in the last few weeks of this course, we have used average-case hardness assumptions (e.g. hardness of random 3-SAT or planted clique) to obtain distributional results. Another kind of result has implications for hardness of approximation. For instance, the PCP Theorem is known to be equivalent to the statement that approximately solving 3-SAT is hard unless P = NP. We have also seen conjectures in this category that are still open, even assuming P ≠ NP, such as the Unique Games Conjecture and the 2-to-2 Conjecture. Today, we are going to focus on hardness assumptions on time complexity. For example, for 3-SAT, P ≠ NP only implies that it cannot be solved in polynomial time. On the other hand, the best known algorithms run in exponential time in n (the number of variables), which motivates the hardness assumption that better algorithms do not exist. This leads to conjectures known as the Exponential Time Hypothesis (ETH) and a stronger version called the Strong Exponential Time Hypothesis (SETH). Informally, ETH says that 3-SAT cannot be solved in $2^{o(n)}$ time, and SETH says that k-SAT needs roughly $2^n$ time for large k.
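For reference, the two informal statements closing the lecture snippet above are commonly made precise as follows. This is the usual textbook formulation rather than text from the notes; n is the number of variables, and the constants $\delta$ and $\varepsilon$ are notation introduced here for illustration.

```latex
% ETH: some fixed exponential rate is unavoidable for 3-SAT.
\[
  \textbf{ETH:}\quad \exists\, \delta > 0 \ \text{such that 3-SAT cannot be solved in time } O\!\left(2^{\delta n}\right).
\]
% SETH: as k grows, k-SAT admits no savings over exhaustive search in the exponent.
\[
  \textbf{SETH:}\quad \forall\, \varepsilon > 0 \ \ \exists\, k \ge 3 \ \text{such that } k\text{-SAT cannot be solved in time } O\!\left(2^{(1-\varepsilon) n}\right).
\]
```

Read this way, ETH rules out any $2^{o(n)}$-time algorithm for 3-SAT, while SETH says the base of the exponent approaches 2 as k grows.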

Lecture 24: Hardness Assumptions (1 Overview; 2 NP-Hardness; 3 Learning Parity with Noise)

A Theorist's Toolkit (CMU 18-859T, Fall 2013). Lecture 24: Hardness Assumptions. December 2, 2013. Lecturer: Ryan O'Donnell. Scribe: Jeremy Karp. 1 Overview. This lecture is about hardness and computational problems that seem hard. Almost all of the theory of hardness is based on assumptions. We make assumptions about some problems, then we do reductions from one problem to another. Then, we want to make the minimal number of assumptions necessary to show computational hardness. In fact, all work on computational complexity and hardness is essentially in designing efficient algorithms. 2 NP-Hardness. A traditional assumption to make is that 3SAT is not in P, or equivalently P ≠ NP. From this assumption, we also see that Maximum Independent Set, for example, is not in P through a reduction. Such a reduction takes as input a 3CNF formula φ. The reduction itself is an algorithm that runs in polynomial time and outputs a graph G. For the reduction to work as we want it to, we prove that if φ is satisfiable then G has an independent set of size at least some value k, and if φ is not satisfiable then the maximum independent set of G has size less than k. This reduction algorithm will be deterministic. This is the basic idea behind proofs of NP-hardness. There are a few downsides to this approach. This only gives you worst-case hardness of a problem. For cryptographic purposes, it would be much better to have average-case hardness. We can think of average-case hardness as having an efficiently sampleable distribution of instances that are hard for polynomial time algorithms.
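The reduction described in the lecture snippet above can be sketched in Python. The snippet does not say which construction the notes use, so this sketch assumes the textbook one: one vertex per literal occurrence, edges inside each clause and between contradictory literals, and threshold k equal to the number of clauses. The function names and the integer encoding of literals are hypothetical, and the brute-force checker exists only to illustrate the two cases on tiny inputs.

```python
from itertools import combinations
from typing import List, Set, Tuple

Clause = Tuple[int, int, int]   # nonzero ints; -v denotes the negation of variable v
Vertex = Tuple[int, int]        # (clause index, position of the literal in the clause)


def reduce_3sat_to_independent_set(
    clauses: List[Clause],
) -> Tuple[List[Vertex], Set[Tuple[Vertex, Vertex]], int]:
    """Map a 3CNF formula to (vertices, edges, k) in polynomial time."""
    vertices = [(c, p) for c in range(len(clauses)) for p in range(3)]
    edges: Set[Tuple[Vertex, Vertex]] = set()
    for u, v in combinations(vertices, 2):
        same_clause = u[0] == v[0]
        contradictory = clauses[u[0]][u[1]] == -clauses[v[0]][v[1]]
        if same_clause or contradictory:
            edges.add((u, v))
    # The formula is satisfiable iff the graph has an independent set of size k.
    return vertices, edges, len(clauses)


def has_independent_set(vertices: List[Vertex],
                        edges: Set[Tuple[Vertex, Vertex]],
                        k: int) -> bool:
    """Exponential-time check, for demonstration on very small graphs only."""
    for subset in combinations(vertices, k):
        chosen = set(subset)
        if not any(u in chosen and v in chosen for u, v in edges):
            return True
    return False


if __name__ == "__main__":
    satisfiable = [(1, 2, 3), (-1, -2, 3)]        # x3 = True satisfies both clauses
    unsatisfiable = [(1, 1, 1), (-1, -1, -1)]     # forces x1 and not x1
    print(has_independent_set(*reduce_3sat_to_independent_set(satisfiable)))    # True
    print(has_independent_set(*reduce_3sat_to_independent_set(unsatisfiable)))  # False
```

The unsatisfiable example repeats a literal within a clause to keep the graph tiny; this is a convenience of the sketch, not something the lecture notes specify.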

Counterexamples to the Low-Degree Conjecture

Counterexamples to the Low-Degree Conjecture. Justin Holmgren (NTT Research, Palo Alto, CA, USA), Alexander S. Wein (Courant Institute of Mathematical Sciences, New York University, NY, USA). Abstract: A conjecture of Hopkins (2018) posits that for certain high-dimensional hypothesis testing problems, no polynomial-time algorithm can outperform so-called "simple statistics", which are low-degree polynomials in the data. This conjecture formalizes the beliefs surrounding a line of recent work that seeks to understand statistical-versus-computational tradeoffs via the low-degree likelihood ratio. In this work, we refute the conjecture of Hopkins. However, our counterexample crucially exploits the specifics of the noise operator used in the conjecture, and we point out a simple way to modify the conjecture to rule out our counterexample. We also give an example illustrating that (even after the above modification), the symmetry assumption in the conjecture is necessary. These results do not undermine the low-degree framework for computational lower bounds, but rather aim to better understand what class of problems it is applicable to. 2012 ACM Subject Classification: Theory of computation → Computational complexity and cryptography. Keywords and phrases: Low-degree likelihood ratio, error-correcting codes. Digital Object Identifier: 10.4230/LIPIcs.ITCS.2021.75. Funding: Justin Holmgren: Most of this work was done while with the Simons Institute for the Theory of Computing. Alexander S. Wein: Partially supported by NSF grant DMS-1712730 and by the Simons Collaboration on Algorithms and Geometry. Acknowledgements: We thank Sam Hopkins and Tim Kunisky for comments on an earlier draft.

Inapproximability of Maximum Biclique Problems, Minimum $k$-Cut and

Inapproximability of Maximum Biclique Problems, Minimum k-Cut and Densest At-Least-k-Subgraph from the Small Set Expansion Hypothesis. Pasin Manurangsi (UC Berkeley). May 11, 2017. Abstract: The Small Set Expansion Hypothesis is a conjecture which roughly states that it is NP-hard to distinguish between a graph with a small subset of vertices whose (edge) expansion is almost zero and one in which all small subsets of vertices have expansion almost one. In this work, we prove conditional inapproximability results for the following graph problems based on this hypothesis: • Maximum Edge Biclique: given a bipartite graph G, find a complete bipartite subgraph of G that contains maximum number of edges. We show that, assuming the Small Set Expansion Hypothesis and that NP ⊈ BPP, no polynomial time algorithm approximates Maximum Edge Biclique to within a factor of $n^{1-\varepsilon}$ of the optimum for every constant ε > 0. • Maximum Balanced Biclique: given a bipartite graph G, find a balanced complete bipartite subgraph of G that contains maximum number of vertices. Similar to Maximum Edge Biclique, we prove that this problem is inapproximable in polynomial time to within a factor of $n^{1-\varepsilon}$ of the optimum for every constant ε > 0, assuming the Small Set Expansion Hypothesis and that NP ⊈ BPP. • Minimum k-Cut: given a weighted graph G, find a set of edges with minimum total weight whose removal partitions the graph into (at least) k connected components. For this problem, we prove that it is NP-hard to approximate it to within (2 − ε) factor of the optimum for every constant ε > 0, assuming the Small Set Expansion Hypothesis.

Current Events Bulletin

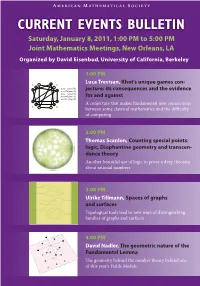

American Mathematical Society, 2011 Current Events Bulletin. Saturday, January 8, 2011, 1:00 PM to 5:00 PM, Joint Mathematics Meetings, New Orleans, LA. Organized by David Eisenbud, University of California, Berkeley.
Current Events Bulletin Committee: David Eisenbud, University of California, Berkeley, Chair; David Aldous, University of California, Berkeley; David Auckly, Mathematical Sciences Research Institute; Hélène Barcelo, Mathematical Sciences Research Institute; Laura DeMarco, University of Illinois at Chicago; Matthew Emerton, Northwestern University; Susan Friedlander, University of Southern California; Robert Ghrist, University of Pennsylvania; Andrew Granville, University of Montreal; Ben Green, Cambridge University; Michael Hopkins, Harvard University; László Lovász, Eotvos Lorand University; John Morgan, State University of New York, Stony Brook; George Papanicolaou, Stanford University; Frank Sottile, Texas A & M University; Richard Taylor, Harvard University; Akshay Venkatesh, Stanford University; David Wagner, University of Waterloo; Avi Wigderson, Institute for Advanced Study; Carol Wood, Wesleyan University; Andrei Zelevinsky, Northeastern University.
1:00 PM: Luca Trevisan, Khot's unique games conjecture: its consequences and the evidence for and against. A conjecture that makes fundamental new connections between some classical mathematics and the difficulty of computing.
[Figure: a Unique Games instance on variables x1, x2, x3, x4 with constraints x1 − x3 = 1, x1 − x2 = 2, x2 − x3 = 1, x4 − x2 = 0, x3 − x4 = 1 (all mod 3).]
2:00 PM: Thomas Scanlon, Counting special points: logic, Diophantine geometry and transcendence theory. Another beautiful use of logic to prove a deep theorem about rational numbers.
3:00 PM: Ulrike Tillmann, Spaces of graphs and surfaces. Topological tools lead to new ways of distinguishing families of graphs and surfaces.
4:00 PM: David Nadler, The geometric nature of the
Cover graphic associated with Nadler's talk courtesy of David Jordan.

On the Mysteries of MAX NAE-SAT

On the Mysteries of MAX NAE-SAT. Joshua Brakensiek, Neng Huang, Aaron Potechin, Uri Zwick. Abstract: MAX NAE-SAT is a natural optimization problem, closely related to its better-known relative MAX SAT. The approximability status of MAX NAE-SAT is almost completely understood if all clauses have the same size k, for some k ≥ 2. We refer to this problem as MAX NAE-{k}-SAT. For k = 2, it is essentially the celebrated MAX CUT problem. For k = 3, it is related to the MAX CUT problem in graphs that can be fractionally covered by triangles. For k ≥ 4, it is known that an approximation ratio of $1 - \frac{1}{2^{k-1}}$, obtained by choosing a random assignment, is optimal, assuming P ≠ NP. For every k ≥ 2, an approximation ratio of at least $\frac{7}{8}$ can be obtained for MAX NAE-{k}-SAT. There was some hope, therefore, that there is also a $\frac{7}{8}$-approximation algorithm for MAX NAE-SAT, where clauses of all sizes are allowed simultaneously. Our main result is that there is no $\frac{7}{8}$-approximation algorithm for MAX NAE-SAT, assuming the unique games conjecture (UGC). In fact, even for almost satisfiable instances of MAX NAE-{3,5}-SAT (i.e., MAX NAE-SAT where all clauses have size 3 or 5), the best approximation ratio that can be achieved, assuming UGC, is at most $\frac{3(\sqrt{21}-4)}{2} \approx 0.8739$. Using calculus of variations, we extend the analysis of O'Donnell and Wu for MAX CUT to MAX NAE-{3}-SAT. We obtain an optimal algorithm, assuming UGC, for MAX NAE-{3}-SAT, slightly improving on previous algorithms.