Observing the Virtual Universes of Cosmological N-Body Simulations

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Gravitational Waves from Resolvable Massive Black Hole Binary Systems

Mon. Not. R. Astron. Soc. 000, 000–000 (0000); printed 22 October 2018 (MN LaTeX style file v2.2). A. Sesana (1), A. Vecchio (2) and M. Volonteri (3). (1) Center for Gravitational Wave Physics, The Pennsylvania State University, University Park, PA 16802, USA; (2) School of Physics and Astronomy, University of Birmingham, Edgbaston, Birmingham, B15 2TT, UK; (3) Dept. of Astronomy, University of Michigan, Ann Arbor, MI 48109, USA.

ABSTRACT: Massive black holes are key components of the assembly and evolution of cosmic structures, and a number of surveys are currently ongoing or planned to probe the demographics of these objects and to gain insight into the relevant physical processes. Pulsar Timing Arrays (PTAs) currently provide the only means to observe gravitational radiation from massive black hole binary systems with masses ≳ 10^7 M⊙. The whole cosmic population produces a stochastic background that could be detectable with upcoming Pulsar Timing Arrays. Sources that are sufficiently close and/or massive generate gravitational radiation that significantly exceeds the level of the background and could be individually resolved. We consider a wide range of massive black hole binary assembly scenarios, investigate the distribution of the main physical parameters of the sources, such as masses and redshift, and explore the consequences for Pulsar Timing Array observations. Depending on the specific massive black hole population model, we estimate that on average at least one resolvable source produces timing residuals in the range ∼5–50 ns. Pulsar Timing Arrays, and in particular the future Square Kilometre Array (SKA), can plausibly detect these unique systems, although such events are likely to be rare.
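To get a feel for the numbers, the strain of a circular massive black hole binary and the resulting timing residual can be estimated to order of magnitude. This is a rough sketch, not the paper's calculation: it assumes the leading-order strain amplitude h = 4(GM_c)^{5/3}(πf)^{2/3}/(c^4 D) and the crude scaling r ≈ h/(2πf), and it ignores antenna-pattern, polarisation, pulsar-term and cosmological-redshift factors. The chirp mass, frequency and distance in the usage block are hypothetical inputs.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8          # speed of light, m/s
M_SUN = 1.989e30     # solar mass, kg
MPC = 3.086e22       # megaparsec, m

def strain_amplitude(chirp_mass_msun, f_gw_hz, distance_mpc):
    """Leading-order GW strain amplitude of a circular binary (orientation factors omitted)."""
    gm_c = G * chirp_mass_msun * M_SUN
    d = distance_mpc * MPC
    return 4.0 * gm_c ** (5.0 / 3.0) * (math.pi * f_gw_hz) ** (2.0 / 3.0) / (C ** 4 * d)

def timing_residual(chirp_mass_msun, f_gw_hz, distance_mpc):
    """Order-of-magnitude timing residual r ~ h / (2 pi f), in seconds (assumed scaling)."""
    h = strain_amplitude(chirp_mass_msun, f_gw_hz, distance_mpc)
    return h / (2.0 * math.pi * f_gw_hz)

if __name__ == "__main__":
    # Hypothetical source: chirp mass 1e9 Msun, GW frequency 10 nHz, distance 100 Mpc
    r = timing_residual(1e9, 1e-8, 100.0)
    print(f"residual ~ {r * 1e9:.0f} ns")
```

For these inputs the estimate is about 90 ns, i.e. of order 10^-7 s, the regime in which the abstract places the loudest resolvable sources.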

The IllustrisTNG Simulations: Public Data Release

Nelson et al., Computational Astrophysics and Cosmology (2019) 6:2, https://doi.org/10.1186/s40668-019-0028-x. Research, Open Access. Dylan Nelson, Volker Springel, Annalisa Pillepich, Vicente Rodriguez-Gomez, Paul Torrey, Shy Genel, Mark Vogelsberger, Ruediger Pakmor, Federico Marinacci, Rainer Weinberger, Luke Kelley, Mark Lovell, Benedikt Diemer and Lars Hernquist.

Abstract: We present the full public release of all data from the TNG100 and TNG300 simulations of the IllustrisTNG project. IllustrisTNG is a suite of large-volume, cosmological, gravo-magnetohydrodynamical simulations run with the moving-mesh code AREPO. TNG includes a comprehensive model for galaxy formation physics, and each TNG simulation self-consistently solves for the coupled evolution of dark matter, cosmic gas, luminous stars, and supermassive black holes from early times to the present day, z = 0. Each of the flagship runs (TNG50, TNG100, and TNG300) is accompanied by halo/subhalo catalogs, merger trees, and lower-resolution and dark-matter-only counterparts, all available with 100 snapshots. We discuss scientific and numerical cautions and caveats relevant when using TNG. The data volume now directly accessible online is ∼750 TB, including 1200 full-volume snapshots and ∼80,000 high time-resolution subbox snapshots. This will increase to ∼1.1 PB with the future release of TNG50. Data access and analysis examples are available in IDL, Python, and Matlab. We describe improvements and new functionality in the web-based API, including on-demand visualization and analysis of galaxies and halos, exploratory plotting of scaling relations and other relationships between galactic and halo properties, and a new JupyterLab interface.
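The abstract mentions a web-based API. As a hedged sketch of what programmatic access can look like, the snippet below builds an authenticated HTTP request using only the Python standard library. The base URL `https://www.tng-project.org/api/`, the `api-key` header name, the simulation name `TNG100-1` and the `snapshots/<n>/subhalos/<id>/` path layout are our understanding of the project's public API and should be checked against its documentation; a personal key obtained by registering is required, and `YOUR_KEY` is a placeholder.

```python
import json
import urllib.request

BASE_URL = "https://www.tng-project.org/api/"  # assumed base URL; verify in the TNG API docs

def subhalo_url(simulation: str, snapshot: int, subhalo_id: int) -> str:
    """Build the (assumed) URL of one subhalo's JSON record."""
    return f"{BASE_URL}{simulation}/snapshots/{snapshot}/subhalos/{subhalo_id}/"

def make_request(url: str, api_key: str) -> urllib.request.Request:
    """Attach the API key as an HTTP header (header name assumed to be 'api-key')."""
    return urllib.request.Request(url, headers={"api-key": api_key})

def fetch_json(url: str, api_key: str) -> dict:
    """Perform the request and decode the JSON body (needs network access and a valid key)."""
    with urllib.request.urlopen(make_request(url, api_key)) as resp:
        return json.loads(resp.read().decode("utf-8"))

if __name__ == "__main__":
    # Snapshot 99 is the z = 0 output of the 100-snapshot TNG runs.
    url = subhalo_url("TNG100-1", 99, 0)
    print(url)
    # record = fetch_json(url, "YOUR_KEY")  # uncomment with a real key
```

Keeping URL construction separate from the network call makes the path logic easy to check offline before spending any of the data transfer budget.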

Machine Learning and Cosmological Simulations I: Semi-Analytical Models

MNRAS 000, 1–18 (2015); preprint 20 August 2018, compiled using MNRAS LaTeX style file v3.0. Harshil M. Kamdar (1, 2), Matthew J. Turk (2, 4) and Robert J. Brunner (2, 3, 4, 5). (1) Department of Physics, University of Illinois, Urbana, IL 61801 USA; (2) Department of Astronomy, University of Illinois, Urbana, IL 61801 USA; (3) Department of Statistics, University of Illinois, Champaign, IL 61820 USA; (4) National Center for Supercomputing Applications, Urbana, IL 61801 USA; (5) Beckman Institute For Advanced Science and Technology, University of Illinois, Urbana, IL, 61801 USA. Accepted 2015 October 1. Received 2015 September 30; in original form 2015 July 2.

ABSTRACT: We present a new exploratory framework to model galaxy formation and evolution in a hierarchical universe by using machine learning (ML). Our motivations are twofold: (1) presenting a new, promising technique to study galaxy formation, and (2) quantitatively analyzing the extent of the influence of dark matter halo properties on galaxies against the backdrop of semi-analytical models (SAMs). We use the influential Millennium Simulation and the corresponding Munich SAM to train and test various sophisticated machine learning algorithms (k-Nearest Neighbors, decision trees, random forests and extremely randomized trees). By using only essential dark matter halo physical properties for haloes of M > 10^12 M⊙ and a partial merger tree, our model predicts the hot gas mass, cold gas mass, bulge mass, total stellar mass, black hole mass and cooling radius at z = 0 for each central galaxy in a dark matter halo for the Millennium run. Our results provide a unique and powerful phenomenological framework to explore the galaxy-halo connection that is built upon SAMs, and demonstrably place ML as a promising and computationally efficient tool to study small-scale structure formation.
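k-Nearest Neighbors is the simplest of the algorithms listed. Below is a minimal pure-Python sketch of kNN regression, assuming the halo features have already been put on comparable scales (for example log masses). The feature choice and the toy numbers are hypothetical and are not the paper's Millennium/SAM training set.

```python
import math
from typing import Sequence

def knn_predict(train_x: Sequence[Sequence[float]],
                train_y: Sequence[float],
                query: Sequence[float],
                k: int = 5) -> float:
    """Predict a galaxy property as the mean target of the k nearest training haloes.

    Distances are Euclidean in feature space, so features should be scaled
    comparably beforehand (e.g. log10 of masses).
    """
    if not 1 <= k <= len(train_x):
        raise ValueError("k must be between 1 and the training-set size")
    # Pair each training halo's distance with its target, then sort by distance.
    ranked = sorted(zip((math.dist(x, query) for x in train_x), train_y))
    return sum(y for _, y in ranked[:k]) / k

if __name__ == "__main__":
    # Toy data: feature = log10 halo mass, target = log10 stellar mass (made up).
    halos = [(12.0,), (12.5,), (13.0,), (13.5,)]
    mstar = [10.0, 10.5, 11.0, 11.5]
    print(knn_predict(halos, mstar, (12.4,), k=2))
```

Tree ensembles such as random forests replace the distance ranking with learned axis-aligned splits, which makes them less sensitive to feature scaling than kNN.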

VirtU: The Virtual Universe

VirtU: The Virtual Universe, comprising the Theoretical Virtual Observatory and the Theory/Observations Interface. A collaborative e-science proposal between Carlos Frenk (University of Durham), Ofer Lahav (University College London), Nicholas Walton (Cambridge University), Carlton Baugh (University of Durham), Greg Bryan (University of Oxford), Shaun Cole (University of Durham), Jean-Christophe Desplat (Edinburgh Parallel Computing Centre), Gavin Dalton (University of Oxford), George Efstathiou (Cambridge University), Nick Holliman (University of Durham), Adrian Jenkins (University of Durham), Andrew King (University of Leicester), Simon Morris (University of Durham), Jerry Ostriker (Cambridge University), John Peacock (University of Edinburgh), Frazer Pearce (University of Nottingham), Jim Pringle (Cambridge University), Chris Rudge (University of Leicester), Joe Silk (University of Oxford), Chris Simpson (University of Durham), Linda Smith (University College London), Iain Stewart (University of Durham), Tom Theuns (University of Durham), Peter Thomas (University of Sussex), Gavin Pringle (Edinburgh Parallel Computing Centre), Jie Xu (University of Durham) and Sukyoung Yi (University of Oxford). Overseas collaborators: Joerg Colberg (University of Pittsburgh), Hugh Couchman (McMaster University), August Evrard (University of Michigan), Guinevere Kauffmann (Max-Planck-Institut für Astrophysik), Volker Springel (Max-Planck-Institut für Astrophysik), Rob Thacker (McMaster University) and Simon White (Max-Planck-Institut für Astrophysik).

1 Executive Summary. 1. We propose to construct a Virtual Universe (VirtU) consisting of the “Theoretical Virtual…

Mocking the Universe: the Jubilee Cosmological Simulations

Mocking the Universe: The Jubilee cosmological simulations. Deep galaxy surveys, large-scale structure and dark energy: Spanish participation in future projects, Valencia, 29–30 March 2012. JUBILEE (Juropa Hubble Volume Simulation Project). Participants: S. Gottlöber (AIP, PI), I. Iliev (Sussex), G. Yepes (UAM), J.M. Diego (IFCA), E. Martínez González (IFCA).

Why do we need large particle simulations? Large-volume galaxy surveys (DES, KIDS, BOSS, JPAS, LSST, BigBOSS, Euclid) will probe volumes of 10–100 Gpc^3.
• We need to resolve the halos hosting the faintest galaxies of these surveys to produce realistic mock catalogues. Higher-z surveys imply smaller galaxies and smaller halos, and therefore require more mass resolution.
• Simulations are a fundamental tool to compare the clustering properties of galaxies with theoretical predictions from cosmological models at the few-per-cent level. This is not possible with linear theory alone: the full non-linear evolution must be followed on scales of 100+ Mpc (BAO, zero crossing of the correlation function). [Figures: zero crossing of the correlation function at ≈130 Mpc (Prada et al. 2012); BAO in BOSS on a ≈100 Mpc scale.]
• Galaxy biases: large mass resolution is needed if the internal substructure of DM halos has to be properly resolved to map halos to galaxies, e.g. using the Halo Abundance Matching technique (e.g. Trujillo-Gómez et al. 2011; Nuza et al. 2012).
We will need trillion+ particle simulations.

A typical example: BOSS (z = 0.1–0.7). The box size needed to host the BOSS survey is 3.5 h^-1 Gpc. BOSS is complete down to galaxies with V_circ > 350 km/s, i.e. M_vir ~ 5 × 10^12 M⊙. To properly resolve the peak of the rotation curve (V_rot) in a dark matter halo we need a minimum of 500–1000 particles.
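The step from the BOSS requirements to “trillion+ particle simulations” can be checked with back-of-the-envelope arithmetic. The number of particles is the total matter mass in the box divided by the particle mass, and the particle mass is the smallest halo mass to be resolved divided by the required number of particles per halo. The sketch assumes Ω_m = 0.27 and h = 0.7 (neither is stated on the slide) and a critical density ρ_crit ≈ 2.775 × 10^11 h^2 M⊙ Mpc^-3.

```python
RHO_CRIT = 2.775e11  # critical density in h^2 Msun Mpc^-3, i.e. h^-1 Msun per (h^-1 Mpc)^3

def particles_needed(box_mpc_h: float, halo_mass_msun: float, n_per_halo: int,
                     omega_m: float = 0.27, h: float = 0.7) -> float:
    """Particles needed so that a halo of the given mass contains n_per_halo particles.

    box_mpc_h: box side in h^-1 Mpc; halo_mass_msun: halo mass in Msun (no h factor).
    omega_m and h are assumed cosmological parameters.
    """
    halo_mass_h = halo_mass_msun * h            # Msun -> h^-1 Msun
    particle_mass = halo_mass_h / n_per_halo    # h^-1 Msun per particle
    total_mass = omega_m * RHO_CRIT * box_mpc_h ** 3
    return total_mass / particle_mass

if __name__ == "__main__":
    for n in (500, 1000):
        n_part = particles_needed(3500.0, 5e12, n)
        print(f"{n} particles per halo -> {n_part:.2e} particles ({n_part ** (1 / 3):.0f}^3)")
```

Under these assumptions the estimate lands at roughly 0.5 to 1 × 10^12 particles, consistent with the slide's conclusion.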

Numerical Cosmology: Recreating the Universe in a Supercomputer

The Alexandria Lectures, March 2006. Simon White, Max Planck Institute for Astrophysics.

The three-fold way to astrophysical truth: observation, theory and simulation.

Ingredients for simulations:
● The physical contents of the Universe: ordinary (baryonic) matter (protons, neutrons, electrons); radiation (photons, neutrinos...); dark matter; dark energy
● The laws of physics: general relativity; electromagnetism; the Standard Model of particle physics; thermodynamics
● Initial and boundary conditions: the global cosmological context; the creation of “initial” structure
● Astrophysical phenomenology (“subgrid” physics): star formation and evolution

The COBE satellite (1989–1993) carried three instruments: the Far Infrared Absolute Spectrophotometer (FIRAS), the Differential Microwave Radiometer (DMR) and the Diffuse InfraRed Background Experiment (DIRBE).

The spectrum of the cosmic microwave background (data from COBE/FIRAS) is a near-perfect black body. What do we learn from the COBE spectrum?
● The microwave background radiation looks like thermal radiation from a Planckian black body. This determines its temperature, T = 2.73 K.
● In the past the Universe was hot and almost without structure (“void and without form”); at that time it was nearly in thermal equilibrium.
● There has been no substantial heating of the Universe since a few months after the Big Bang itself.

COBE's temperature map of the entire sky: T = 2.728 K, shown with a colour range of ΔT = 0.1 K.
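The claim that the spectrum “determines its temperature” can be made concrete. For a Planck spectrum B_ν(T) = (2hν^3/c^2)/(e^{hν/kT} − 1), the peak frequency depends on T alone (Wien's law in frequency form, ν_peak ≈ 2.821 kT/h). The sketch below locates the peak numerically for T = 2.73 K and compares it with that formula; the brute-force grid search is chosen for transparency, not efficiency.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
K_B = 1.380649e-23   # Boltzmann constant, J/K
C = 2.99792458e8     # speed of light, m/s

def planck_bnu(nu: float, temp: float) -> float:
    """Black-body spectral radiance B_nu(T) in W m^-2 Hz^-1 sr^-1."""
    x = H * nu / (K_B * temp)
    return 2.0 * H * nu ** 3 / C ** 2 / math.expm1(x)  # expm1 keeps precision at small x

def peak_frequency(temp: float, nu_min: float = 1e9, nu_max: float = 1e12,
                   steps: int = 100_000) -> float:
    """Frequency of maximum B_nu found by scanning a uniform grid."""
    best_nu, best_b = nu_min, -1.0
    for i in range(steps + 1):
        nu = nu_min + (nu_max - nu_min) * i / steps
        b = planck_bnu(nu, temp)
        if b > best_b:
            best_nu, best_b = nu, b
    return best_nu

def wien_peak(temp: float) -> float:
    """Wien displacement law in frequency form: nu_peak = 2.821439 k T / h."""
    return 2.821439 * K_B * temp / H

if __name__ == "__main__":
    t_cmb = 2.73
    print(f"grid search: {peak_frequency(t_cmb) / 1e9:.1f} GHz")
    print(f"Wien's law:  {wien_peak(t_cmb) / 1e9:.1f} GHz")
```

Both approaches put the peak near 160 GHz, in the microwave band, which is why a single temperature fit to the FIRAS spectrum pins down T.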

The Millennium Simulation: Cosmic Evolution in a Supercomputer (Simon White, Max Planck Institute for Astrophysics)

The COBE satellite (1989–1993):
● Two instruments made maps of the whole sky in microwaves and in infrared radiation
● One instrument took a precise spectrum of the sky in microwaves

COBE's temperature map of the entire sky (T = 2.728 K), shown with successively narrower colour ranges: ΔT = 0.1 K, 0.0034 K and 0.00002 K.

Structure in the COBE map:
● One side of the sky is 'hot', the other is 'cold': the Earth's motion through the cosmos (V = 600 km/s)
● Radiation from hot gas and dust in our own Milky Way
● Structure in the microwave background itself

Structure in the microwave background:
Where is the structure? In the cosmic “clouds”, 40 billion light-years away.
What are we seeing? Weak gravito-sound waves in the clouds.
When do we see these clouds? When the Universe was 400,000 years old, and was 1,000 times smaller and 1,000 times hotter than today.
How big are the structures? At least a billion light-years across (in the COBE maps).
When were they made? During inflation, perhaps 10^-30 s after the Big Bang.
What did they turn into? Everything we see in the present Universe.

The WMAP satellite at Lagrange point L2. The WMAP map of the whole CMB sky (Bennett et al. 2003).

What can we learn from these structures? The pattern of the structures is influenced by several things:
-- the geometry of the Universe: finite or infinite, eternal or doomed to end;
-- the content of the Universe: …
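Two of the numbers on this slide follow from simple cosmology: the CMB temperature scales as T(z) = T_0(1 + z), and the age at redshift z is t(z) = ∫ da′ / (a′ H(a′)) from 0 to a = 1/(1 + z). The sketch below assumes a flat model with H_0 = 70 km/s/Mpc, Ω_m = 0.3 and Ω_r = 8.4 × 10^-5 (assumed values, not taken from the slides) and integrates with a simple midpoint rule.

```python
import math

T0 = 2.728                          # present CMB temperature in K (COBE value above)
H0_KM_S_MPC = 70.0                  # assumed Hubble constant
OMEGA_M = 0.3                       # assumed matter density parameter
OMEGA_R = 8.4e-5                    # assumed radiation density (photons + neutrinos)
OMEGA_L = 1.0 - OMEGA_M - OMEGA_R   # flatness fixes the dark-energy density
MPC_KM = 3.0857e19                  # kilometres per megaparsec
YEAR_S = 3.156e7                    # seconds per year

def cmb_temperature(z: float) -> float:
    """CMB temperature at redshift z, T = T0 (1 + z)."""
    return T0 * (1.0 + z)

def age_years(z: float, steps: int = 20000) -> float:
    """Cosmic age at redshift z: midpoint-rule integral of da / (a H(a))."""
    h0_per_s = H0_KM_S_MPC / MPC_KM
    a_end = 1.0 / (1.0 + z)
    da = a_end / steps
    total = 0.0
    for i in range(steps):
        a = (i + 0.5) * da
        # a H(a) / H0 = sqrt(Omega_r a^-2 + Omega_m a^-1 + Omega_L a^2)
        a_h = math.sqrt(OMEGA_R / a ** 2 + OMEGA_M / a + OMEGA_L * a ** 2)
        total += da / a_h
    return total / h0_per_s / YEAR_S

if __name__ == "__main__":
    z_dec = 1090.0
    print(f"T(z={z_dec:.0f}) = {cmb_temperature(z_dec):.0f} K")
    print(f"age at z={z_dec:.0f}: {age_years(z_dec):.3g} yr")
    print(f"age today: {age_years(0.0):.3g} yr")
```

With these inputs z ≈ 1090 gives T ≈ 3000 K and an age of roughly 370,000 years, in line with the slide's “400,000 years old” and “1,000 times smaller and 1,000 times hotter”.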

Exploring the Millennium Run: Scalable Rendering of Large-Scale Cosmological Datasets

Roland Fraedrich, Jens Schneider, and Rüdiger Westermann.

Fig. 1. Visualizations of the Millennium Simulation with more than 10 billion particles at different scales and a screen-space error below one pixel. On a 1200×800 viewport the average frame rate is 11 fps.

Abstract: In this paper we investigate scalability limitations in the visualization of large-scale particle-based cosmological simulations, and we present methods to reduce these limitations on current PC architectures. To minimize the amount of data to be streamed from disk to the graphics subsystem, we propose a visually continuous level-of-detail (LOD) particle representation based on a hierarchical quantization scheme for particle coordinates and rules for generating coarse particle distributions. Given the maximal world-space error per level, our LOD selection technique guarantees a sub-pixel screen-space error during rendering. A brick-based page tree further reduces the number of disk seek operations to be performed. Additional particle quantities like density, velocity dispersion, and radius are compressed at no visible loss using vector quantization of logarithmically encoded floating-point values. By fine-grain view-frustum culling and presence acceleration in a geometry shader, the required geometry throughput on the GPU can be significantly reduced. We validate the quality and scalability of our method by presenting visualizations of a particle-based cosmological dark-matter simulation exceeding 10 billion elements.

Index Terms: Particle Visualization, Scalability, Cosmology.

1 Introduction. Particle-based cosmological simulations play a prominent role in reproducing the large-scale structure of the Universe. By today, for the largest available cosmological simulations an in…
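The LOD selection idea in the abstract can be sketched by projecting each level's maximal world-space error to the screen and picking the coarsest level that stays under one pixel. This is an illustration under stated assumptions, not the paper's implementation: the symmetric perspective projection, the coarse-to-fine list of per-level errors and the 1-pixel threshold are chosen here for clarity.

```python
import math
from typing import Sequence

def projected_error_px(world_error: float, distance: float,
                       fov_y_rad: float, viewport_h_px: int) -> float:
    """Screen-space size, in pixels, of a world-space error at a given view distance."""
    return world_error * viewport_h_px / (2.0 * distance * math.tan(fov_y_rad / 2.0))

def select_lod(level_errors: Sequence[float], distance: float,
               fov_y_rad: float, viewport_h_px: int, max_px: float = 1.0) -> int:
    """Return the coarsest level whose projected error is below max_px.

    level_errors: maximal world-space error per level, ordered coarse -> fine
    (hypothetical layout; errors are assumed to decrease with level).
    """
    for level, err in enumerate(level_errors):
        if projected_error_px(err, distance, fov_y_rad, viewport_h_px) < max_px:
            return level
    return len(level_errors) - 1  # fall back to the finest level available

if __name__ == "__main__":
    errors = [8.0, 4.0, 2.0, 1.0, 0.5, 0.25]  # made-up per-level errors
    for d in (10.0, 1000.0, 10000.0):
        print(d, select_lod(errors, d, math.pi / 2, 800))
```

Because the projected error shrinks linearly with distance, distant bricks can be served from coarse levels, which is what keeps disk traffic bounded as the view zooms out. With the made-up errors above and an 800-pixel viewport, a distance of 1000 selects level 2.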

The Environment of Radio Galaxies and Quasars (Roderik Overzier)

The environment of radio galaxies and quasars: a new perspective using the Millennium Simulations. Roderik Overzier (JHU/MPA), Seeon, June 8 2007.

Q1: What are the richest structures present at z > 2?
Q2: Is this the typical environment of z > 2 radio galaxies and z = 6 quasars?
Q3: Can we find/detect these structures?

Motivation 1: the environment of high-redshift radio galaxies. TNJ1338: a “protocluster” of Lyman break galaxies, Lyα emitters and a luminous radio galaxy at z = 4.1 (?) (imaging: Subaru 30′×30′, VLT 2×7′×7′, ACS 3.4′×3.4′; Venemans et al. 2002; Miley et al. 2004; Overzier et al. 2006; Intema et al. 2006).
- How representative is it of HzRGs in general?
- How representative is it of forming clusters in general?
- What is its relation to protoclusters without a radio galaxy?
- The derived cluster evolution relies on several critical assumptions that are not directly measurable (needed: bias, volume, mass overdensity, cluster mass, virialization redshift, etc.).

Motivation 2: luminous quasars at z ~ 6.
• SDSS found ~20 QSOs at z > 5.7 (Fan et al. 2006)
• Near the end of the reionization epoch
• Black hole masses of ~10^9 M⊙
• Spectral properties similar to luminous quasars at low redshift
• Metallicity implies rapid formation of a massive host galaxy
• Rarest objects in the early Universe (~1 Gpc^-3; Hopkins et al. 2006)

How can we estimate the quasar host halo mass and its z = 0 descendant?
- Stellar mass: the quasar host galaxy is inaccessible
- Clustering: not feasible with ~20 objects

“The Fate of the First Quasars”:
• z = 6: stellar mass = 6.8 × 10^10 h^-1 M⊙, the largest in the entire simulation at z = 6.2
• z = 0: the centre of the ninth most massive cluster, M = 1.46 × 10^15 h^-1 M⊙
• The quasar progenitor can be traced back to z = 17
Millennium Run (V. …
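The “rarest objects” bullet can be turned into an expected count for the simulation volume by multiplying the comoving space density by the box volume. The sketch uses the Millennium Run's box of 500 h^-1 Mpc with h = 0.73 together with the slide's ~1 Gpc^-3; treating that rough density as exact is, of course, an approximation.

```python
def expected_count(density_per_gpc3: float, box_mpc_h: float, h: float) -> float:
    """Expected number of objects with the given comoving density in a cubic box.

    box_mpc_h is the box side in h^-1 Mpc; h converts it to physical Mpc.
    """
    box_gpc = box_mpc_h / h / 1000.0   # h^-1 Mpc -> Mpc -> Gpc
    return density_per_gpc3 * box_gpc ** 3

if __name__ == "__main__":
    n = expected_count(1.0, 500.0, 0.73)
    print(f"expected z~6 luminous quasars in the Millennium volume: {n:.2f}")
```

The result is about 0.3, so on average the box should hold fewer than one such quasar. This is one way to see why the single most massive galaxy at z ≈ 6 is a reasonable candidate for a quasar host.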

Cosmological Simulations Volker Springel

Cosmological Simulations. Volker Springel. Unsolved Problems in Cosmology and Astrophysics, Benasque, February 2011.

Outline: simulation predictions for the dark sector of ΛCDM; can we falsify ΛCDM with simulations?; exaflop computing; beyond the dark sector: hydrodynamic simulations; challenges for galaxy formation simulations.

The first slice in the CfA redshift survey: 1100 galaxies in a wedge, 6 degrees wide and 110 degrees long (de Lapparent, Geller & Huchra 1986). Current galaxy redshift surveys map the Universe with several hundred thousand galaxies. The initial conditions for cosmic structure formation are directly observable: the microwave sky (WMAP Science Team 2003, 2006, 2008, 2010).

The basic dynamics of structure formation in the dark matter. Basic equations and their discretization:
● Gravitation: the Friedmann–Lemaître model (Newtonian approximation to GR in an expanding space-time)
● Dark matter is collisionless: collisionless Boltzmann equation with self-gravity; Hamiltonian dynamics in an expanding space-time
● Monte-Carlo integration as an N-body system: 3N coupled, non-linear differential equations of second order
● Problems: N is very large; all equations are coupled with each other

The 'Millennium' simulation (Springel et al. 2005): CDM, 10,077,696,000 particles, m = 8.6 × 10^8 h^-1 M⊙.

Why are cosmological simulations of structure formation useful for studying the dark universe? Simulations are the theoretical tool of choice for calculations in the non-linear regime. They connect the (simple) cosmological initial conditions with the (complex) present-day universe.

Predictions from N-body simulations: the abundance of objects (as a function of mass and time); their spatial distribution; the internal structure of halos (e.g. density profiles, spin); mean formation epochs; merger rates; the detailed dark matter distribution on large and fairly small scales; galaxy formation models; gravitational lensing; baryonic acoustic oscillations in the matter distribution; the integrated Sachs-Wolfe effect; the dark matter annihilation rate; the morphology of large-scale structure (“cosmic web”); ...
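The discretization described above, in which the dark matter is sampled by N particles obeying 3N coupled second-order equations, can be illustrated with a toy integrator. This sketch uses direct O(N^2) summation with Plummer softening and a kick-drift-kick leapfrog in static (non-expanding) space with G = 1. It illustrates the idea only and is not the TreePM scheme or the comoving equations of motion used for production runs such as the Millennium simulation.

```python
import math

def accelerations(pos, mass, G=1.0, eps=1e-3):
    """Softened pairwise gravitational accelerations by direct O(N^2) summation."""
    n = len(pos)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            dx = [pos[j][k] - pos[i][k] for k in range(3)]
            r2 = dx[0] ** 2 + dx[1] ** 2 + dx[2] ** 2 + eps ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            for k in range(3):
                acc[i][k] += G * mass[j] * dx[k] * inv_r3  # pull of j on i
                acc[j][k] -= G * mass[i] * dx[k] * inv_r3  # equal and opposite
    return acc

def total_energy(pos, vel, mass, G=1.0, eps=1e-3):
    """Kinetic plus softened potential energy, consistent with accelerations()."""
    kin = sum(0.5 * m * sum(c * c for c in v) for m, v in zip(mass, vel))
    pot = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            r2 = sum((pos[j][k] - pos[i][k]) ** 2 for k in range(3)) + eps ** 2
            pot -= G * mass[i] * mass[j] / math.sqrt(r2)
    return kin + pot

def leapfrog_kdk(pos, vel, mass, dt, n_steps, G=1.0, eps=1e-3):
    """Kick-drift-kick leapfrog; returns new lists and leaves the inputs untouched."""
    pos = [list(p) for p in pos]
    vel = [list(v) for v in vel]
    acc = accelerations(pos, mass, G, eps)
    for _ in range(n_steps):
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
                pos[i][k] += dt * vel[i][k]        # full drift
        acc = accelerations(pos, mass, G, eps)
        for i in range(len(pos)):
            for k in range(3):
                vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
    return pos, vel

if __name__ == "__main__":
    # Two equal masses on a circular orbit (separation 1, relative speed 1).
    mass = [0.5, 0.5]
    pos0 = [[-0.5, 0.0, 0.0], [0.5, 0.0, 0.0]]
    vel0 = [[0.0, -0.5, 0.0], [0.0, 0.5, 0.0]]
    pos1, vel1 = leapfrog_kdk(pos0, vel0, mass, dt=0.01, n_steps=628)  # about one period
    print(total_energy(pos0, vel0, mass), total_energy(pos1, vel1, mass))
```

Leapfrog is symplectic, so for the two-body test the total energy stays close to its initial value over the orbit rather than drifting steadily, which is one reason this family of integrators is used for Hamiltonian N-body problems.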

MINING VIRTUAL UNIVERSES (ONLINE) Lectures and Hands-On Sessions at ISSAC 2012 Gerard Lemson MPA, Garching, Germany

ISSAC 2012, SDSC, San Diego, USA.

Why me? Millennium Run Database (online): arXiv:astro-ph/0608019, gavo.mpa-garching.mpg.de/Millennium, also multidark.org. Millennium Run Observatory (online): arXiv:1206.6923, galformod.mpa-garching.mpg.de/mrobs. With (thanks to): Raul Angulo, Jeremy Blaizot, Emmanuel Bertin, Tamas Budavari, Darren Croton, Gabriella DeLucia, Matthias Egger, GAVO, Bruno Henriques, Gabriel-Dominique Marleau, Roderik Overzier, Guo Qi, Volker Springel, Alex Szalay, Virgo Consortium, Simon White.

Why here? “Moore's law” for N-body simulations (courtesy Simon White).

Lectures and hands-on sessions:
L1: Overview + Phenomenology
L2: Virtual Universes in a Database
L3: Virtual Observatory and Theory
H1: Querying databases
H2: Filling databases and publishing them

Data products: raw data (particles), FOF groups and subhalos, subhalo merger trees, density fields, synthetic galaxies (SAM), mock catalogues and mock images.

Some typical questions, easily posed and answered with a database: environmental dependencies; conditional statistics of galaxies in halos; comparison of galaxy formation algorithms; time evolution of halos and galaxies; mock catalogues.
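As an illustration of the “conditional statistics of galaxies in halos” type of question, here is a SQL sketch run against an in-memory SQLite database. The two-table schema (`halos`, `galaxies`) and its column names are hypothetical simplifications, not the actual Millennium database tables, and the rows are made up. Snapshot 63 is used because it is the z = 0 output of the Millennium Run.

```python
import sqlite3

# Hypothetical, much-simplified schema: one row per halo and per galaxy.
SCHEMA = """
CREATE TABLE halos (
    halo_id  INTEGER PRIMARY KEY,
    snapnum  INTEGER NOT NULL,
    log_mvir REAL NOT NULL
);
CREATE TABLE galaxies (
    galaxy_id INTEGER PRIMARY KEY,
    halo_id   INTEGER NOT NULL REFERENCES halos(halo_id),
    log_mstar REAL NOT NULL
);
"""

# Galaxy count and mean stellar mass per halo-mass bin at one snapshot.
# CAST(... AS INTEGER) truncates toward zero, which equals floor() for positive log masses.
QUERY = """
SELECT CAST(h.log_mvir / :width AS INTEGER) * :width AS mass_bin,
       COUNT(*)         AS n_gal,
       AVG(g.log_mstar) AS mean_log_mstar
FROM galaxies AS g
JOIN halos AS h ON g.halo_id = h.halo_id
WHERE h.snapnum = :snap
GROUP BY mass_bin
ORDER BY mass_bin
"""

def make_demo_db() -> sqlite3.Connection:
    """In-memory database filled with a few made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO halos VALUES (?, ?, ?)",
                     [(1, 63, 12.2), (2, 63, 12.4), (3, 63, 13.1), (4, 62, 12.3)])
    conn.executemany("INSERT INTO galaxies VALUES (?, ?, ?)",
                     [(1, 1, 10.0), (2, 2, 10.4), (3, 3, 11.0), (4, 3, 9.0), (5, 4, 10.0)])
    return conn

def mass_binned_stats(conn: sqlite3.Connection, snap: int, width: float = 0.5):
    """Run the conditional-statistics query with bound parameters."""
    return conn.execute(QUERY, {"width": width, "snap": snap}).fetchall()

if __name__ == "__main__":
    for row in mass_binned_stats(make_demo_db(), snap=63):
        print(row)
```

Pushing the join and aggregation into the database, rather than downloading every galaxy, is the point the lectures make about posing such questions in SQL.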

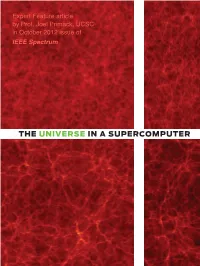

The Universe in a Supercomputer

WWW.SPECTRUM.IEEE.ORG

COSMIC WEB: The Bolshoi simulation models the evolution of dark matter, which is responsible for the large-scale structure of the universe. Here, snapshots from the simulation show the dark matter distribution at 500 million and 2.2 billion years [top] and 6 billion and 13.7 billion years [bottom] after the big bang. These images are 50-million-light-year-thick slices of a cube of simulated universe that today would measure roughly 1 billion light-years on a side and encompass about 100 galaxy clusters. Sources: simulation, Anatoly Klypin and Joel R. Primack; visualization, Stefan Gottlöber/Leibniz Institute for Astrophysics Potsdam.

To understand the cosmos, we must evolve it all over again. By Joel R. Primack.

When it comes to reconstructing the past, you might think that astrophysicists have it easy. After all, the sky is awash with evidence. For most of the universe's history, space has been largely transparent, so much so that light emitted by distant galaxies can travel for billions of years before finally reaching Earth. It might seem that all researchers have to do to find out what the universe looked like, say, 10 billion years ago is to build a telescope sensitive enough to pick up that ancient light. Actually, it's more complicated than that.