Xbase Working Document

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


A Definition of Database Design Standards for Human Rights Agencies

by Patrick Ball, et al.
AMERICAN ASSOCIATION FOR THE ADVANCEMENT OF SCIENCE, 1994

TABLE OF CONTENTS
1. Introduction
2. Definition of an Entity
3. Entity versus Role versus Link
4. What is a Link?
5. Presentation of a Data Model
6. Rule-types with Rule Examples and Instance Examples
   6.1 Type of Rule: Act
   6.2 Type of Rule: Relationship
   6.3 Rule-type Name: Biography
7. Rule Parsimony: the Trade between Precision and Simplicity
8. xBase Table Design Implementation Example
   8.1 xBase Implementation Example
   8.2 Abstract Fields & Data Types
9. SQL Database Design Implementation Example
   9.1 Querying & Performance
   9.2 Main Difference
   9.3 Storage of the Rules in SQL Model

1. Introduction

In 1993, HURIDOCS published their Standard Human Rights Event Formats (Dueck et al. 1993a), which describe standards for the collection and exchange of information on human rights abuses. These formats represent a major step forward in enabling human rights organizations to develop manual and computerized systems for collecting and exchanging data. The formats define common fields for the collection of human rights event, victim, source, perpetrator and agency intervention data, and a common vocabulary for many of the fields in the formats, for example occupation, type of event and geographical location. The formats are designed as a tool leading toward both manual and computerized systems of human rights violation documentation. Before organizations implement documentation systems that will meet their needs, a wide range of issues must be considered. One of these issues is the structural problem that some data have complex relations to other data.

Xbase++ Language Concepts for Newbies Geek Gatherings Roger Donnay

Introduction

Xbase++ has extended the capabilities of the language beyond what is available in FoxPro and Clipper. For FoxPro developers and Clipper developers who are new to Xbase++, there are new variable types and language concepts that can enhance the programmer's ability to create more powerful and more supportable applications. The flexibility of the Xbase++ language is what makes it possible to create libraries of functions that can be used dynamically across multiple applications. The preprocessor, code blocks, ragged arrays and objects combine to give the programmer the ability to create their own language of commands and functions and all the advantages of a 4th generation language. This session will also show how these language concepts can be employed to create 3rd party add-on products to Xbase++ that will integrate seamlessly into Xbase++ applications.

The Xbase++ language is incredibly robust and it could take years to understand most of its capabilities; however, when migrating Clipper and FoxPro applications, it is not necessary to know all of this. I have aided many Clipper and FoxPro developers with the migration process over the years and I have found that only a basic introduction to the following concepts is necessary to get off to a great start:

* The Xbase++ Project. Creation of EXEs and DLLs.
* The compiler, linker and project builder.
* Console mode for quick migration of Clipper and Fox 2.6 apps.
* INIT and EXIT procedures, DBESYS, APPSYS and MAIN.
* The DBE (Database engine)
* LOCALS, STATICS, PRIVATE and PUBLIC variables.
* STATIC functions.

Course Description

6/20/2018 Syllabus

Course Description

MET CS669 Database Design and Implementation for Business

This course uses the latest database tools and techniques for persistent data and object-modeling and management. Students gain extensive hands-on experience with exercises and a term project using Oracle, SQL Server, and other leading database management systems. Students learn to model persistent data using the standard Entity-Relationship model (ERM) and how to diagram those models using Entity-Relationship Diagrams (ERDs), Extended Entity-Relationship Diagrams (EERDs), and UML diagrams. Students learn the standards-based Structured Query Language (SQL) and the extensions to the SQL standards implemented in Oracle and SQL Server. Students learn the basics of database programming, and write simple stored procedures and triggers.

The Role of this Course in the MSCIS Online Curriculum

This is a core course in the MSCIS online curriculum. It provides students with an understanding of, and experience with, database technology, database design, SQL, and the roles of databases in enterprises. This course is a prerequisite for the three additional database courses in the MSCIS online curriculum, which are CS674 Database Security, CS699 Data Mining and Business Intelligence and CS779 Advanced Database Management. By taking these three courses you can obtain the Concentration in Database Management and Business Intelligence. CS674 Database Security also satisfies an elective requirement for the Concentration in Security.

Xbase PDF Class

Xbase++ PDF Class
User guide 2020

Created by Softsupply Informatica
Rua Alagoas, 48
01242-000 São Paulo, SP Brazil
Tel (5511) 3159-1997
Email: [email protected]
Contact: Edgar Borger

Table of Contents
Overview
Installing
Changes
Acknowledgements
Demo
GraDemo
Charts
Notes
Class Methods

Design and Development of a Thermodynamic Properties Database Using the Relational Data Model

DESIGN AND DEVELOPMENT OF A THERMODYNAMIC PROPERTIES DATABASE USING THE RELATIONAL DATA MODEL

By PARIKSHIT SANGHAVI
Bachelor of Engineering, Birla Institute of Technology and Science, Pilani, India, 1992

Submitted to the Faculty of the Graduate College of the Oklahoma State University in partial fulfillment of the requirements for the Degree of MASTER OF SCIENCE, December, 1995

ACKNOWLEDGMENTS

I would like to thank my adviser Dr. Jan Wagner, for his expert guidance and criticism towards the design and implementation of the GPA Database. I would like to express my appreciation to Dr. Martin S. High, for his encouragement and support on the GPA Database project. I am grateful to Dr. Khaled Gasem, for helping me gain a better understanding of the aspects pertaining to thermodynamics in the GPA Database project. I would also like to thank Dr. James R. Whiteley for taking the time to provide constructive criticism for this thesis as a member of my thesis committee.

I would like to thank the Enthalpy and Phase Equilibria Steering Committees of the Gas Processors Association for their support. The GPA Database project would not have been possible without their confidence in the faculty and graduate research assistants at Oklahoma State University. I wish to acknowledge Abhishek Rastogi, Heather Collins, C. S. Krishnan, Srikant, Nhi Lu, and Eric Maase for working with me on the GPA Database. I am grateful for the emotional support provided by my family.

R&R Report Writer Version 9.0, Xbase & SQL Editions New Release

R&R Report Writer Version 9.0, xBase & SQL Editions
New Release Enhancements and New Capabilities

Liveware Publishing is pleased to announce an exciting set of enhancements to R&R Report Writer!! This is the first MAJOR update to R&R in almost five years, but Liveware has made up for lost time with substantial improvements for every R&R user. Developers building reports for their applications, end-users creating their own ad-hoc and production reports, and even casual R&R runtime users will all find something in this release to streamline their reporting activities. For those who have purchased and implemented every update, and particularly Liveware's Version 8.1 release licensees, you will be rewarded for your loyalty with a reduced upgrade price and a wide range of capabilities that will make R&R even more indispensable. For others who waited, this is the upgrade to buy since it contains so many unique and compelling ideas about reporting -- and ones you can use right away!!

Version 9.0 includes 5 new "modules" -- what we consider significant extensions of R&R capabilities -- and many convenience and usability improvements to open reporting to database users at all levels. The 5 new modules are (with page number in parentheses):

* Email Report Bursting & Distribution (2)
* Report Librarian™ (4)
* ParameteRR™ Field Prompting (9)
* FlexLink™ Indexing-on-the-fly (11)
* Rapid Runner™ Runtime Control (16)

Among the other improvements, the most significant are:

* Pervasive Band Color-coding (18)
* Page X of Y Functions (20)
* Redesigned Table Join Dialog (21)
* Print Preview Band Identification (23)
* Drill-down in Result Set Browser (24)
* Visual dBase 7 Support (25)

This document will summarize each of these modules and improvements and describe how they expand R&R's capabilities to an ever-wider range of reporting needs.

Building Permit/Code Enforcement Instruction Manual

Building Permit/Code Enforcement Instruction Manual

Table of contents:

Section 1 - Introduction
Section 2
    Page 1 - File setup/Screen layout
    Page 2 - Screen Layout/Menu Buttons
Section 3
    Page 1 - File Preferences
    Page 2 - File Preference Data
    Page 3 - File Preferences User Definition
    Page 4 - File Preferences Detail Information
Section 4
    Page 1 - File Parcel Locate
    Page 2 - Assessment Information
    Page 3 - Folder Selection
Section 5
    Page 1 - Applicant Folder
    Page 2 - Permit Folder
    Page 3 - Action Folder
    Page 4 - Site Folder
    Page 5 - Building Folder
    Page 6 - Inspections Folder
    Page 7 - Inspections Folder Information
    Page 8 - Contractor Folder
    Page 9 - Contractor Folder Information
    Page 10 - Notes Folder
    Page 11 - Notes Folder Information
Section 6
    Page 1 - Change Permit Information
    Page 2 - Change Permit Folder Location
Section 7
    Page 1 - Housing Code Folder
    Page 2 - Housing Code Folder Information
Section 8
    Page 1 - Complaint Folder
    Page 2 - Complaint Folder Information
Section 9
    Page 1 - Report Writer System
    Page 2 - Report Writer Report Selection
    Page 3 - Report Writer Report Filter

Section 1 Page 1
Introduction

The Building Permit/Code Enforcement System has been designed to meet the needs of today's Code Enforcement Office. The software was developed in a Relational Database format (DB4) and compiled with a Windows version that is used in combination with the Assessment System files. The program can accommodate a wide variety of machines and operating systems and can be used with a Laptop in the field and files transferred to the Desktop back in the office. The file structure has complete compatibility with the Assessment System software and Assessor files can be used to establish base information on applications for all pertinent data relating to the Assessment Roll.

What Is It and Why Should VFP Developers Care?

The X-Sharp Project
What is it and why should VFP developers care?
X# Vendor Session October 2019

X#: a new incarnation of an old development language

Agenda
• A bit of history
• Why X#
• What is X#
• X# and Visual FoxPro
• New Features
• Where can I get it

xBase languages, a bit of History -1
• Started at JPL with a project called Vulcan, by Wayne Ratliff (PTDOS, later CP/M) (1978)
• Ashton Tate bought it and released it under the name dBASE II for Apple II and DOS (1980)
• An improved version, dBASE III, in 1984
• In the 80's several competitors appeared on the market: Clipper, FoxBASE, DBXL, QuickSilver, Arago, Force, FlagShip and many more. Together these products were called "xBase". They share the programming language, many functions, and the use of DBF files
• Some were faster, others allowed royalty-free sale of compiled versions of apps
• Then Microsoft launched Windows in 1990

xBase languages, a bit of History -2
• The move to Windows resulted in several product changes; products were also sold and resold:
  • dBase (AT) -> (Borland, Inprise, Borland)
  • QuickSilver -> Borland, then vanished
  • FlagShip -> MultiSoft (Various Unix versions, Windows)
  • Clipper (Nantucket) -> Visual Objects (Computer Associates)
  • FoxBASE (Fox Software) -> FoxPro (Microsoft)
• Some products "died"
• New products appeared, especially as successors of Clipper
  • (x)Harbour (open source, also for Unix)
  • Xbase++

xBase languages, a bit of History -3
• Now in the 2010's most of these products are 'dead', no longer developed for various reasons.

Xbase++ the Next Generation of Xbase-Technology

Overview
• Xbase++ the language
• Meeting points with PostgreSQL
• Pass-Through-SQL (P-SQL)
• PostgreSQL does ISAM and more!
• Xbase++ as a Server-Side-Language
• Into the future...
• Summary

PGCon May 2008, Alaska Software Inc.

Xbase++ the platform
• 4GL language, 100% Clipper compatible
• With asynchronous garbage collection and automatic multi-threading: no deadlocks, no need to synchronize resource access
• OOP engine with multiple inheritance, dynamic class/object creation and full object persistence
• Lambda expressions, aka Lisp codeblocks, over the wire
• Fully dynamically loadable features and copy deployment
• The language and runtime themselves introduce no limits!
• Very powerful preprocessor to add DSL constructs!
• Supports development of Console/Textmode, Graphical User-Interface, Service, CGI, Web-Application-Server and Compiled-Web-Page application types
• Has its roots on the OS/2 platform
• Currently focuses on the Windows 32-bit and 64-bit operating systems
• Hybrid compiler, generates native code and VM code.

Data-Access in Xbase++ & xBase
• Rule-1: There is no impedance mismatch
  – Any table datatype is a valid language datatype; this includes operators, expressive behaviour and NIL-state / NULL-behaviour
  – Only tables introduce fixed typing; the language itself must be dynamically typed to adapt automatically to schema changes
• Rule-2: The language is the database and vice versa
  – Any expression of the development language is a valid expression on behalf of the database/table (index-key expressions, functions, methods)
• Rule-3: Isolation semantics of the database and language are the same
  – Different applications accessing a remote data source and different threads accessing the same field/column are subject to the same isolation principles.

Teaching OOP to FPW with Stored Procedures Dave Jinkerson and Scott Malinowski

Issue Date: FoxTalk May 1998

Teaching OOP to FPW with Stored Procedures
Dave Jinkerson and Scott Malinowski

Many FoxPro developers would like to make the transition between FPW and VFP, but they don't know where to start. Dave and Scott will show you how to use your current knowledge of FPW to build the bridge every programmer needs to cross from procedural programming into OOP. In this article, they'll look at an example that will help you move your procedural programming style one step closer to OOP and, in so doing, begin the journey from FPW to VFP.

Object-oriented programming (OOP) is a data-centered view of programming in which data and behavior are strongly linked. The proper way to view OOP is as a programming style that captures the behavior of the real world in a way that hides implementation details. With a language that supports OOP, data and behavior are conceived as classes, and instances of classes become objects. What many FPW programmers don't realize is that, in a language that doesn't support OOP, you can rely on programming style and still create an OOP-like result.

ADTs with style

A key factor in OOP is the creation of user-defined data types from the language's native data types. In languages that support OOP, user-defined extensions to the native types are called Abstract Data Types (ADT). To create an ADT, you must define a set of values and a collection of operations that can act on those values.
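The idea in the excerpt above, an ADT as a set of values plus a collection of operations, built from programming style alone, can be sketched in a few lines. The following is only an illustration in Python rather than FoxPro, and it is not taken from the article: the stack example and the names `stack_new`, `stack_push`, `stack_pop` and `stack_is_empty` are hypothetical. No class is used; callers are simply expected to touch the data only through the listed procedures.

```python
# A stack ADT written in a purely procedural style (hypothetical example).
# Values: a record holding a list of items.
# Operations: the four procedures below; by convention, callers never
# reach into the record directly.

def stack_new():
    """Create an empty stack value."""
    return {"items": []}

def stack_push(stack, value):
    """Operation: put a value on top of the stack."""
    stack["items"].append(value)

def stack_pop(stack):
    """Operation: remove and return the top value."""
    if not stack["items"]:
        raise IndexError("pop from empty stack")
    return stack["items"].pop()

def stack_is_empty(stack):
    """Operation: report whether the stack holds no values."""
    return not stack["items"]

# Usage: the caller works only through the operations.
s = stack_new()
stack_push(s, "first")
stack_push(s, "second")
top = stack_pop(s)
print(top, stack_is_empty(s))
```

Because the representation is hidden behind the procedures, the list could later be swapped for another structure without changing any calling code, which is the implementation hiding the excerpt describes.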

Big Data Solutions and Applications

P. Bellini, M. Di Claudio, P. Nesi, N. Rauch
Slides from: M. Di Claudio, N. Rauch

Big Data Solutions and applications

Course slides: Sistemi Collaborativi e di Protezione, Master's Degree Course in Engineering, 2013/2014
http://www.disit.dinfo.unifi.it
Distributed Data Intelligence and Technology Lab

Related to the following chapter in the book:
• P. Bellini, M. Di Claudio, P. Nesi, N. Rauch, "Tassonomy and Review of Big Data Solutions Navigation", in "Big Data Computing", Ed. Rajendra Akerkar, Western Norway Research Institute, Norway, Chapman and Hall/CRC press, ISBN 978-1-46-657837-1, eBook: 978-1-46-657838-8, July 2013, in press. http://www.tmrfindia.org/bigdata.html

Index
1. The Big Data Problem
   • 5V's of Big Data
   • Big Data Problem
   • CAP Principle
   • Big Data Analysis Pipeline
2. Big Data Application Fields
3. Overview of Big Data Solutions
4. Data Management Aspects
   • NoSQL Databases
   • MongoDB
5. Architectural aspects
   • Riak
6. Access/Data Rendering aspects
7. Data Analysis and Mining/Ingestion Aspects
8. Comparison and Analysis of Architectural Features
   • Data Management
   • Data Access and Visual Rendering
   • Mining/Ingestion
   • Data Analysis
   • Architecture

Part 1: The Big Data Problem

What is Big Data?
The 5V's of Big Data: Volume, Velocity, Variety, Variability, Value.

5V's of Big Data (1/2)
• Volume: Companies are amassing terabytes/petabytes of information, and they always look for faster, more efficient, and lower-cost solutions for data management.

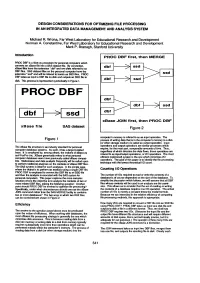

Processing in an Integrated Data Management and Analysis System

DESIGN CONSIDERATIONS FOR OPTIMIZING FILE PROCESSING IN AN INTEGRATED DATA MANAGEMENT AND ANALYSIS SYSTEM

Michael R. Wrona, Far West Laboratory for Educational Research and Development
Norman A. Constantine, Far West Laboratory for Educational Research and Development
Mark P. Branagh, Stanford University

Introduction

PROC DBF is a SAS (R) procedure for personal computers which converts an xBase file into a SAS dataset file. By convention, xBase files have the extension ".dbf" and are often referred to as DBF files. SAS dataset files on the personal computer have the extension ".ssd" and will be referred to herein as SSD files. PROC DBF takes as input a DBF file on disk and outputs an SSD file to disk. This process is represented symbolically in Figure 1.

[Figure 1: an xBase file (dbf) is converted by PROC DBF into a SAS dataset (ssd).]

The xBase file structure is an industry standard for personal computer database systems. As such, it has a large installed base. It is employed by, among others, the makers of dBase (R)

[Figure 2: two processing orders, "PROC DBF first, then MERGE" and "JOIN first, then PROC DBF".]

computer's memory is referred to as an input operation. The process of writing data that is in the computer's memory to a disk (or other storage medium) is called an output operation. Input operations and output operations are similar processes which require, for the most part, comparable amounts of time. Thus, regardless of which direction the data flows, these operations are referred to as input/output operations, or I/O operations.
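The excerpt above treats the DBF file as a fixed, widely shared layout that a converter reads from disk. As a rough illustration of what reading such a file involves, here is a minimal Python sketch. It is not the SAS procedure and is not taken from the paper. It assumes the common dBASE III-style layout (a 32-byte header, 32-byte field descriptors ending with the byte 0x0D, then fixed-width records that each start with a one-byte deletion flag), and the helper names `build_dbf` and `read_dbf` are hypothetical. To stay self-contained, it builds a tiny table in memory instead of opening a file.

```python
import struct

def build_dbf(fields, rows):
    """Build a tiny dBASE III-style table in memory (illustrative helper).

    fields: list of (name, type_char, length); rows: list of value tuples.
    Simplification: every value is stored left-justified as text.
    """
    record_len = 1 + sum(length for _, _, length in fields)
    header_len = 32 + 32 * len(fields) + 1
    # version, date (YY MM DD), record count, header size, record size, padding
    header = struct.pack("<BBBBIHH20x", 0x03, 95, 1, 1,
                         len(rows), header_len, record_len)
    descriptors = b"".join(
        struct.pack("<11sc4xBB14x", name.encode("ascii"),
                    ftype.encode("ascii"), length, 0)
        for name, ftype, length in fields
    )
    body = b""
    for row in rows:
        body += b" "  # deletion flag: space means the record is active
        for value, (_, _, length) in zip(row, fields):
            body += str(value).ljust(length)[:length].encode("ascii")
    return header + descriptors + b"\r" + body + b"\x1a"

def read_dbf(data):
    """Parse the header, field descriptors and active records."""
    _version, _yy, _mm, _dd, n_records, header_len, record_len = \
        struct.unpack_from("<BBBBIHH", data, 0)
    fields = []
    offset = 32
    while data[offset] != 0x0D:  # 0x0D terminates the descriptor array
        name, ftype, length, _decimals = struct.unpack_from(
            "<11sc4xBB", data, offset)
        fields.append((name.split(b"\x00", 1)[0].decode("ascii"),
                       ftype.decode("ascii"), length))
        offset += 32
    records = []
    for i in range(n_records):
        start = header_len + i * record_len
        raw = data[start:start + record_len]
        if raw[:1] == b"*":  # '*' marks a deleted record
            continue
        pos, row = 1, {}
        for name, _, length in fields:
            row[name] = raw[pos:pos + length].decode("ascii").strip()
            pos += length
        records.append(row)
    return fields, records

fields = [("NAME", "C", 10), ("AGE", "N", 3)]
table = build_dbf(fields, [("Ada", 36), ("Alan", 41)])
parsed_fields, records = read_dbf(table)
print(parsed_fields)
print(records)
```

Every record has the same width, so a reader can compute the position of record `i` directly from the header sizes. The whole conversion is therefore dominated by the sequential disk reads and writes that the excerpt calls I/O operations.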