Hazlitt et al. v. Apple Inc.

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Protecting Sensitive and Personal Information from Ransomware-Caused Data Breaches

Protecting Sensitive and Personal Information from Ransomware-Caused Data Breaches. OVERVIEW: Over the past several years, the Cybersecurity and Infrastructure Security Agency (CISA) and our partners have responded to a significant number of ransomware incidents, including recent attacks against a U.S. pipeline company and a U.S. software company, which affected managed service providers (MSPs) and their downstream customers. Ransomware is malware designed to encrypt files on a device, rendering files and the systems that rely on them unusable. Traditionally, malicious actors demand ransom in exchange for decryption. Over time, malicious actors have adjusted their ransomware tactics to be more destructive and impactful. Malicious actors increasingly exfiltrate data and then threaten to sell or leak it, including sensitive or personal information, if the ransom is not paid. These data breaches can cause financial loss to the victim organization and erode customer trust. All organizations are at risk of falling victim to a ransomware incident and are responsible for protecting sensitive and personal data stored on their systems. This fact sheet provides information for all government and private sector organizations, including critical infrastructure organizations, on preventing and responding to ransomware-caused data breaches. CISA encourages organizations to adopt a heightened state of awareness and implement the recommendations below. Sidebar: Ransomware is a serious and increasing threat to all government and private sector organizations, including critical infrastructure organizations. In response, the U.S. government launched StopRansomware.gov, a centralized, whole-of-government webpage providing ransomware resources, guidance, and alerts. PREVENTING RANSOMWARE ATTACKS: 1. Maintain offline, encrypted backups of data and regularly test your backups.

About the Sony Hack

All About the Sony Hack (January/February 2015). Sony Pictures Entertainment was hacked in late November by a group called the Guardians of Peace. The hackers stole a significant amount of data from Sony’s servers, including employee conversations through email and other documents, executive salaries, and copies of unreleased Sony movies. Sony’s network was down for a few days as administrators worked to assess the damage. According to the FBI, the hackers are believed to have ties with the North Korean government, which has denied any involvement with the hack and has even offered to help the United States discover the identities of the hackers. Various analysts and security experts have stated that it is unlikely that the North Korean government is involved, claiming that the government likely doesn’t have the infrastructure to succeed in a hack of this magnitude. The hackers quickly turned their focus to an upcoming Sony film, “The Interview,” a comedy about two Americans who assassinate North Korean leader Kim Jong-un. The hackers contacted reporters on Dec. 16, threatening to commit acts of terrorism towards people going to see the movie, which was scheduled to be released on Dec. 25. Despite the lack of credible evidence that attacks would take place, Sony decided to postpone the movie’s release. On Dec. 19, President Obama went on record calling the movie’s cancelation a mistake. Sidebar: All About the Sony Hack: Learn how Sony was attacked and what the potential ramifications are. Securing Your Files in Cloud Storage: Storing files in the cloud is easy and convenient, but definitely not risk-free.

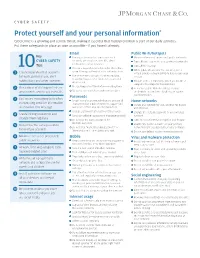

Protect Yourself and Your Personal Information*

CYBER SAFETY: Protect yourself and your personal information*. Cybercrime is a growing and serious threat, making it essential that fraud prevention is part of our daily activities. Put these safeguards in place as soon as possible, if you haven’t already. Email: Use separate email accounts, one each for work, personal use, user IDs, alert notifications, and other interests. Choose a reputable email provider that offers spam filtering and multi-factor authentication. Use secure messaging tools when replying to verified requests for financial or personal information. Encrypt important files before emailing them. Do not open emails from unknown senders. Public Wi-Fi/hotspots: Minimize the use of unsecured, public networks. Turn off auto connect to non-preferred networks. Turn off file sharing. When public Wi-Fi cannot be avoided, use a virtual private network (VPN) to help secure your session. Disable ad hoc networking, which allows direct computer-to-computer transmissions. Never use public Wi-Fi to enter personal credentials on a website; hackers can capture your keystrokes. Passwords: Create complex passwords that are at least 10 characters; use a mix of numbers, upper- and lowercase letters and special characters. Home networks: Create one network for you, another for guests and children. Key Cyber Safety 10 Tips: 1. Create separate email accounts for work, personal use, alert notifications and other interests. 2. Be cautious of clicking on links or attachments sent to you in emails. 3. Use secure messaging tools when transmitting sensitive information via email or text message.

The 2014 Sony Hack and the Role of International Law

The 2014 Sony Hack and the Role of International Law. Clare Sullivan*. INTRODUCTION: 2014 has been dubbed “the year of the hack” because of the number of hacks reported by the U.S. federal government and major U.S. corporations in businesses ranging from retail to banking and communications. According to one report there were 1,541 incidents resulting in the breach of 1,023,108,267 records, a 78 percent increase in the number of personal data records compromised compared to 2013.[1] However, the 2014 hack of Sony Pictures Entertainment Inc. (Sony) was unique in nature and in the way it was orchestrated and its effects. Based in Culver City, California, Sony is the movie making and entertainment unit of Sony Corporation of America,[2] the U.S. arm of Japanese electronics company Sony Corporation.[3] The hack, discovered in November 2014, did not follow the usual pattern of hackers attempting illicit activities against a business. It did not specifically target credit card and banking information, nor did the hackers appear to have the usual motive of personal financial gain. The nature of the wrong and the harm inflicted was more wide ranging and their motivation was apparently ideological. Identifying the source and nature of the wrong and harm is crucial for the allocation of legal consequences. Analysis of the wrong and the harm shows that the 2014 Sony hack[4] was more than a breach of privacy and a criminal act. If, as the United States maintains, the Democratic People’s Republic of Korea (hereinafter North Korea) was behind the Sony hack, the incident is governed by international law.

5 Tips for Securing Your Mobile Device for Telehealth

5 Tips for Securing Your Mobile Device for Telehealth. The Health Insurance Portability and Accountability Act (HIPAA) requires that providers protect your information and not share it without your permission. Telehealth providers are required by law to secure medical information that can be shared electronically by encrypting messages and adding other safeguards into the software they use. However, patients’ devices on the receiving end of care often do not have these safeguards, and some medical devices have been shown to be vulnerable to hackers. It is therefore the responsibility of the patient to secure personal devices. 01 Use a PIN or passcode to secure your device: Securing your mobile device is important for ensuring that others do not have access to your confidential information and applications. To protect your iPad, iPhone, or Android phone, you need to set a passcode. It is a 4- to 6-digit PIN used to grant access to the device, like the code you use for an ATM bank card or a debit card. Securing your Apple (iPhone and iPad) and Android devices: In addition to allowing you to secure your phone with a passcode, newer Apple and Android devices also use biometrics, called Touch ID and Face ID on Apple, and Face recognition, Irises, and Fingerprints on some Android devices. These tools use your face, eyes, and fingerprints as unique identifiers to help secure your devices. Face ID and Face recognition use your facial features in order to unlock your device. Touch ID, which is no longer being used on newer versions of iPhone and iPad, and Fingerprints on Android are fingerprint-scanning tools.

Legal-Process Guidelines for Law Enforcement

Legal Process Guidelines: Government & Law Enforcement within the United States. These guidelines are provided for use by government and law enforcement agencies within the United States when seeking information from Apple Inc. (“Apple”) about customers of Apple’s devices, products and services. Apple will update these Guidelines as necessary. All other requests for information regarding Apple customers, including customer questions about information disclosure, should be directed to https://www.apple.com/privacy/contact/. These Guidelines do not apply to requests made by government and law enforcement agencies outside the United States to Apple’s relevant local entities. For government and law enforcement information requests, Apple complies with the laws pertaining to global entities that control our data and we provide details as legally required. For all requests from government and law enforcement agencies within the United States for content, with the exception of emergency circumstances (defined in the Electronic Communications Privacy Act 1986, as amended), Apple will only provide content in response to a search warrant issued upon a showing of probable cause, or customer consent. All requests from government and law enforcement agencies outside of the United States for content, with the exception of emergency circumstances (defined below in Emergency Requests), must comply with applicable laws, including the United States Electronic Communications Privacy Act (ECPA). A request under a Mutual Legal Assistance Treaty or the Clarifying Lawful Overseas Use of Data Act (“CLOUD Act”) is in compliance with ECPA. Apple will provide customer content, as it exists in the customer’s account, only in response to such legally valid process.

Attack on Sony 2014 Sammy Lui

Attack on Sony 2014, Sammy Lui. Index: • Overview • Timeline • Tools • Wiper Malware • Implications • Need for physical security • Employees – Accomplices? • Dangers of Cyberterrorism • Danger to Other Companies • Damage and Repercussions • Dangers of Malware • Defense • Reparations • Aftermath • Similar Attacks • Sony Attack 2011 • Target Attack • NotPetya • Sources. Overview: • Attack led by the Guardians of Peace hacker group • Stole huge amounts of data from Sony’s network and leaked it online on WikiLeaks • Data leaks spanned a few weeks • Threatened a terrorist attack if Sony released The Interview. Timeline: • 11/24/14 - Employees find terabytes of data stolen from computers and threat messages • 11/26/14 - Hackers post 5 Sony movies to file sharing networks • 12/1/14 - Hackers leak emails and password protected files • 12/3/14 - Hackers leak files with plaintext credentials and internal and external account credentials • 12/5/14 - Hackers release invitation along with financial data from Sony • 12/07/14 - Hackers threaten several employees, demanding they sign a statement disassociating themselves from Sony • 12/08/14 - Hackers threaten Sony to not release The Interview • 12/16/14 - Hackers leak personal emails from employees. Last day of data leaks. • 12/25/14 - Sony releases The Interview to select movie theaters and online • 12/26/14 - No further messages from the hackers. Tools: • Targeted attack • Inside attack • WikiLeaks to leak data • The hackers used a Wiper malware to infiltrate and steal data from Sony employee

Certified Device List for Mobiliti – Phone Channel

Mobiliti™ Certified Device List, December 2019 - ASP Version. CONFIDENTIAL – LIMITED: Distribution restricted to Fiserv employees and clients. © 2011-2019 Fiserv, Inc. or its affiliates. All rights reserved. This work is confidential and its use is strictly limited. Use is permitted only in accordance with the terms of the agreement under which it was furnished. Any other use, duplication, or dissemination without the prior written consent of Fiserv, Inc. or its affiliates is strictly prohibited. The information contained herein is subject to change without notice. Except as specified by the agreement under which the materials are furnished, Fiserv, Inc. and its affiliates do not accept any liabilities with respect to the information contained herein and is not responsible for any direct, indirect, special, consequential or exemplary damages resulting from the use of this information. No warranties, either express or implied, are granted or extended by this document. http://www.fiserv.com Fiserv is a registered trademark of Fiserv, Inc. Other brands and their products are trademarks or registered trademarks of their respective holders and should be noted as such. Contents: Revision and History; Certified Device List for Mobiliti – Phone Channel; Scope

Apple Submission NIST RFI Privacy Framework

Apple Submission in Response to NIST Request for Information: Developing a Privacy Framework, Docket No. 181101997-8997-01. January 14, 2018. Apple appreciates this opportunity to comment on NIST’s Request For Information (RFI) regarding its proposed Privacy Framework. Apple supports the development of the Privacy Framework and NIST’s engagement on this important topic. At Apple, we believe privacy is a fundamental human right. We have long embraced the principles of privacy-by-design and privacy-by-default, not because the law requires it, but because it is the right thing for our customers. We’ve proved time and again that great experiences don’t have to come at the expense of privacy and security. Instead, they support them. Every Apple product is designed from the ground up to protect our users’ personal information. We’ve shown that protecting privacy is possible at every level of the technical stack and shown how these protections must evolve over time. Users can protect their devices with Face ID or Touch ID, where their data is converted into a mathematical representation that is encrypted and used only by the Secure Enclave[1] on their device, cannot be accessed by the operating system, and is never stored on Apple servers. In communication, we use end-to-end encryption to protect iMessage and FaceTime conversations so that no one but the participants can access them. On the web, Safari was the first browser to block third-party cookies by default, and we introduced Intelligent Tracking Protection to combat the growth of online tracking. When we use data to create better experiences for our users, we work hard to do it in a way that doesn’t compromise privacy.

Account Protections a Google Perspective

Account Protections: A Google Perspective. Elie Bursztein, Google, @elie, with the help of many Googlers. Updated March 2021. Security and Privacy Group (slides available here: https://elie.net/account). 4 in 10 US Internet users report having their online information compromised (source: “The United States of P@ssw0rd$”, Harris / Google poll). How do attackers compromise accounts? Main sources of compromised accounts: data breaches, phishing, keyloggers. The black market is fueling the account compromise ecosystem. Accounts and hacking tools are readily available on the black market. Volume of credentials stolen in 2016, a lower bound: data breach 4.3B+, phishing 12M+, keyloggers 1M+ (source: “Data Breaches, Phishing, or Malware? Understanding the Risks of Stolen Credentials”, CCS’17). [Figure: data breach, phishing, and keyloggers compared by stolen credentials volume and targeted attack risk.] Stolen credential origin takeaways: the black market fuels account compromise; password reuse is the largest source of compromise; phishing and keyloggers pose a significant risk. How can we prevent account compromise? Defense in depth leveraging many competing technologies. Increasing security comes at the expense of additional friction, including lock-out risk, monetary cost, and user education.

The Ultimate Guide to Vendor Data Breach Response

The Ultimate Guide to Vendor Data Breach Response. This paper was authored by Corvus’s VP of Smart Breach Response Lauren Winchester in collaboration with Dominic Paluzzi, Co-Chair of the Data Privacy and Cybersecurity practice at the law firm McDonald Hopkins. Commercial insurance reimagined. Cyber insurance brokers help their clients plan for what happens when they experience a data breach or ransomware incident. Something often overlooked is how that plan may change when the breach is at a vendor and the investigation is outside of their control. Most organizations are in the midst of a decade-old shift to deeper integration with managed service providers, software-as-a-service tools, and other cloud-based software solutions. Having worked with thousands of brokers and policyholders, we’ve observed an unspoken assumption that these vendors, with their highly advanced products, are also paragons of cybersecurity. That’s a misplaced assumption for three reasons. 1. The bigger and more complex an organization, generally the harder it is to keep safe. Vendors may have excellent security teams and practices, but face a Sisyphean task given their scale. 2. The adversarial question. These companies may be at greater risk because criminals see them as a rich target: if they can infiltrate the vendor, they can potentially extend their attack to hundreds or thousands of customer organizations. 3. Some providers have an air of invincibility about their exposure, likely for the same reason their customers instinctively trust them. A survey by Coveware shows a large disconnect between what MSPs believe to be the cost and consequences of an attack, and what they are in reality.

Read Apple Pay FAQs

Apple Pay FAQs. What is Apple Pay? Apple Pay is an easy, secure and private way to pay on iPhone, iPad, Apple Watch and Mac. You can make purchases in stores by holding your compatible device near a contactless reader at participating merchants, or when shopping online at participating merchants. You can find more information about Apple Pay and how to set up your card at apple.com. What do I need to use Apple Pay? • Eligible device • Supported card from Texas DPS Credit Union • Latest version of iOS, watchOS or macOS • An Apple ID signed into iCloud. What Apple devices are compatible? • iPhone models with Face ID • iPhone models with Touch ID, except for iPhone 5s • iPad Pro, iPad Air, iPad and iPad mini models with Touch ID or Face ID • Apple Watch Series 1 and 2 and later • Mac models with Touch ID. How do I add my card to Apple Pay? • On your compatible device, open the Settings app, scroll down to Wallet & Apple Pay, tap Add Card under Payment Cards, and follow the prompts. Your card information will be verified to confirm whether you can use your card with Apple Pay. If the Credit Union needs more information to verify your card, you will receive a prompt to contact us. After your card has been verified, you can start using Apple Pay. OR • Go to the Wallet app and tap the plus sign. Follow the prompts to add a new card. Tap Next. Your card information will be verified to confirm whether you can use your card with Apple Pay.