Lilypond Contributor's Guide

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Source Code Trees in the VALLEY of THE

PROGRAMMING GNOME Source code trees IN THE VALLEY OF THE CODETHORSTEN FISCHER So you’ve just like the one in Listing 1. Not too complex, eh? written yet another Unfortunately, creating a Makefile isn’t always the terrific GNOME best solution, as assumptions on programs program. Great! But locations, path names and others things may not be does it, like so many true in all cases, forcing the user to edit the file in other great programs, order to get it to work properly. lack something in terms of ease of installation? Even the Listing 1: A simple Makefile for a GNOME 1: CC=/usr/bin/gcc best and easiest to use programs 2: CFLAGS=`gnome-config —cflags gnome gnomeui` will cause headaches if you have to 3: LDFLAGS=`gnome-config —libs gnome gnomeui` type in lines like this, 4: OBJ=example.o one.o two.o 5: BINARIES=example With the help of gcc -c sourcee.c gnome-config —libs —cflags 6: gnome gnomeui gnomecanvaspixbuf -o sourcee.o 7: all: $(BINARIES) Automake and Autoconf, 8: you can create easily perhaps repeated for each of the files, and maybe 9: example: $(OBJ) with additional compiler flags too, only to then 10: $(CC) $(LDFLAGS) -o $@ $(OBJ) installed source code demand that everything is linked. And at the end, 11: do you then also have to copy the finished binary 12: .c.o: text trees. Read on to 13: $(CC) $(CFLAGS) -c $< manually into the destination directory? Instead, 14: find out how. wouldn’t you rather have an easy, portable and 15: clean: quick installation process? Well, you can – if you 16: rm -rf $(OBJ) $(BINARIES) know how. -

Red Hat Enterprise Linux 6 Developer Guide

Red Hat Enterprise Linux 6 Developer Guide An introduction to application development tools in Red Hat Enterprise Linux 6 Dave Brolley William Cohen Roland Grunberg Aldy Hernandez Karsten Hopp Jakub Jelinek Developer Guide Jeff Johnston Benjamin Kosnik Aleksander Kurtakov Chris Moller Phil Muldoon Andrew Overholt Charley Wang Kent Sebastian Red Hat Enterprise Linux 6 Developer Guide An introduction to application development tools in Red Hat Enterprise Linux 6 Edition 0 Author Dave Brolley [email protected] Author William Cohen [email protected] Author Roland Grunberg [email protected] Author Aldy Hernandez [email protected] Author Karsten Hopp [email protected] Author Jakub Jelinek [email protected] Author Jeff Johnston [email protected] Author Benjamin Kosnik [email protected] Author Aleksander Kurtakov [email protected] Author Chris Moller [email protected] Author Phil Muldoon [email protected] Author Andrew Overholt [email protected] Author Charley Wang [email protected] Author Kent Sebastian [email protected] Editor Don Domingo [email protected] Editor Jacquelynn East [email protected] Copyright © 2010 Red Hat, Inc. and others. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. -

The GNOME Desktop Environment

The GNOME desktop environment Miguel de Icaza ([email protected]) Instituto de Ciencias Nucleares, UNAM Elliot Lee ([email protected]) Federico Mena ([email protected]) Instituto de Ciencias Nucleares, UNAM Tom Tromey ([email protected]) April 27, 1998 Abstract We present an overview of the free GNU Network Object Model Environment (GNOME). GNOME is a suite of X11 GUI applications that provides joy to users and hackers alike. It has been designed for extensibility and automation by using CORBA and scripting languages throughout the code. GNOME is licensed under the terms of the GNU GPL and the GNU LGPL and has been developed on the Internet by a loosely-coupled team of programmers. 1 Motivation Free operating systems1 are excellent at providing server-class services, and so are often the ideal choice for a server machine. However, the lack of a consistent user interface and of consumer-targeted applications has prevented free operating systems from reaching the vast majority of users — the desktop users. As such, the benefits of free software have only been enjoyed by the technically savvy computer user community. Most users are still locked into proprietary solutions for their desktop environments. By using GNOME, free operating systems will have a complete, user-friendly desktop which will provide users with powerful and easy-to-use graphical applications. Many people have suggested that the cause for the lack of free user-oriented appli- cations is that these do not provide enough excitement to hackers, as opposed to system- level programming. Since most of the GNOME code had to be written by hackers, we kept them happy: the magic recipe here is to design GNOME around an adrenaline response by trying to use exciting models and ideas in the applications. -

Why and How I Use Lilypond Daniel F

Why and How I Use LilyPond Daniel F. Savarese Version 1.1 Copyright © 2018 Daniel F. Savarese1 even with an academic discount. I never got my money's Introduction worth out of it. At the time I couldn't explain exactly why, but I was never productive using it. In June of 2017, I received an email from someone using my classical guitar transcriptions inquiring about how I Years later, when I started playing piano, I upgraded to use LilyPond2 to typeset (or engrave) music. He was the latest version of Finale and suddenly found it easier dissatisfied with his existing WYSIWYG3 commercial to produce scores using the software. It had nothing to software and was looking for alternatives. He was im- do with new features in the product. After notating eight pressed with the appearance of my transcription of Lá- original piano compositions, I realized that my previous grima and wondered if I would share the source for it difficulties had to do with the idiosyncratic requirements and my other transcriptions. of guitar music that were not well-supported by the soft- ware. Nevertheless, note entry and the overall user inter- I sent the inquirer a lengthy response explaining that I'd face of Finale were tedious. I appreciated how accurate like to share the source for my transcriptions, but that it the MIDI playback could be with respect to dynamics, wouldn't be readily usable by anyone given the rather tempo changes, articulations, and so on. But I had little involved set of support files and programs I've built to need for MIDI output. -

Downloads." the Open Information Security Foundation

Performance Testing Suricata The Effect of Configuration Variables On Offline Suricata Performance A Project Completed for CS 6266 Under Jonathon T. Giffin, Assistant Professor, Georgia Institute of Technology by Winston H Messer Project Advisor: Matt Jonkman, President, Open Information Security Foundation December 2011 Messer ii Abstract The Suricata IDS/IPS engine, a viable alternative to Snort, has a multitude of potential configurations. A simplified automated testing system was devised for the purpose of performance testing Suricata in an offline environment. Of the available configuration variables, seventeen were analyzed independently by testing in fifty-six configurations. Of these, three variables were found to have a statistically significant effect on performance: Detect Engine Profile, Multi Pattern Algorithm, and CPU affinity. Acknowledgements In writing the final report on this endeavor, I would like to start by thanking four people who made this project possible: Matt Jonkman, President, Open Information Security Foundation: For allowing me the opportunity to carry out this project under his supervision. Victor Julien, Lead Programmer, Open Information Security Foundation and Anne-Fleur Koolstra, Documentation Specialist, Open Information Security Foundation: For their willingness to share their wisdom and experience of Suricata via email for the past four months. John M. Weathersby, Jr., Executive Director, Open Source Software Institute: For allowing me the use of Institute equipment for the creation of a suitable testing -

GNU Wget 1.10 the Non-Interactive Download Utility Updated for Wget 1.10, Apr 2005

GNU Wget 1.10 The non-interactive download utility Updated for Wget 1.10, Apr 2005 by Hrvoje Nikˇsi´cand the developers Copyright c 1996–2005, Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with the Invariant Sections being “GNU General Public License” and “GNU Free Documentation License”, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation License”. Chapter 1: Overview 1 1 Overview GNU Wget is a free utility for non-interactive download of files from the Web. It supports http, https, and ftp protocols, as well as retrieval through http proxies. This chapter is a partial overview of Wget’s features. • Wget is non-interactive, meaning that it can work in the background, while the user is not logged on. This allows you to start a retrieval and disconnect from the system, letting Wget finish the work. By contrast, most of the Web browsers require constant user’s presence, which can be a great hindrance when transferring a lot of data. • Wget can follow links in html and xhtml pages and create local versions of remote web sites, fully recreating the directory structure of the original site. This is sometimes referred to as “recursive downloading.” While doing that, Wget respects the Robot Exclusion Standard (‘/robots.txt’). Wget can be instructed to convert the links in downloaded html files to the local files for offline viewing. -

Autotools Tutorial

Autotools Tutorial Mengke HU ECE Department Drexel University ASPITRG Group Meeting Outline 1 Introduction 2 GNU Coding standards 3 Autoconf 4 Automake 5 Libtools 6 Demonstration The Basics of Autotools 1 The purpose of autotools I It serves the needs of your users (checking platform and libraries; compiling and installing ). I It makes your project incredibly portablefor dierent system platforms. 2 Why should we use autotools: I A lot of free softwares target Linux operating system. I Autotools allow your project to build successfully on future versions or distributions with virtually no changes to the build scripts. The Basics of Autotools 1 The purpose of autotools I It serves the needs of your users (checking platform and libraries; compiling and installing ). I It makes your project incredibly portablefor dierent system platforms. 2 Why should we use autotools: I A lot of free softwares target Linux operating system. I Autotools allow your project to build successfully on future versions or distributions with virtually no changes to the build scripts. The Basics of Autotools 1 3 GNU packages for GNU build system I Autoconf Generate a conguration script for a project I Automake Simplify the process of creating consistent and functional makeles I Libtool Provides an abstraction for the portable creation of shared libraries 2 Basic steps (commends) to build and install software I tar -zxvf package_name-version.tar.gz I cd package_name-version I ./congure I make I sudo make install The Basics of Autotools 1 3 GNU packages for GNU build -

Name Synopsis Description Options

GPGTAR(1) GNU Privacy Guard 2.2 GPGTAR(1) NAME gpgtar −Encrypt or sign files into an archive SYNOPSIS gpgtar [options] filename1 [ filename2, ... ] directory1 [ directory2, ... ] DESCRIPTION gpgtar encrypts or signs files into an archive.Itisangpg-ized tar using the same format as used by PGP’s PGP Zip. OPTIONS gpgtar understands these options: --create Put givenfiles and directories into a vanilla ‘‘ustar’’archive. --extract Extract all files from a vanilla ‘‘ustar’’archive. --encrypt -e Encrypt givenfiles and directories into an archive.This option may be combined with option --symmetric for an archive that may be decrypted via a secret key orapassphrase. --decrypt -d Extract all files from an encrypted archive. --sign -s Makeasigned archive from the givenfiles and directories. This can be combined with option --encrypt to create a signed and then encrypted archive. --list-archive -t List the contents of the specified archive. --symmetric -c Encrypt with a symmetric cipher using a passphrase. The default symmetric cipher used is AES-128, but may be chosen with the --cipher-algo option to gpg. --recipient user -r user Encrypt for user id user.For details see gpg. --local-user user -u user Use user as the key tosign with. Fordetails see gpg. --output file -o file Write the archive tothe specified file file. --verbose -v Enable extra informational output. GnuPG 2.2.12 2018-12-11 1 GPGTAR(1) GNU Privacy Guard 2.2 GPGTAR(1) --quiet -q Trytobeasquiet as possible. --skip-crypto Skip all crypto operations and create or extract vanilla ‘‘ustar’’archives. --dry-run Do not actually output the extracted files. -

With Yocto/Openembedded

PORTING NEW CODE TO RISC-V WITH YOCTO/OPENEMBEDDED Martin Maas ([email protected]) 1st RISC-V Workshop, January 15, 2015 Monterey, CA WHY WE NEED A LINUX DISTRIBUTION • To build an application for RISC-V, you need to: – Download and build the RISC-V toolchain + Linux – Download, patch and build application + dependencies – Create an image and run it in QEMU or on hardware • Problems with this approach: – Error-prone: Easy to corrupt FS or get a step wrong – Reproducibility: Others can’t easily reuse your work – Rigidity: If a dependency changes, need to do it all over • We need a Linux distribution! – Automatic build process with dependency tracking – Ability to distribute binary packages and SDKs 2 RISCV-POKY: A PORT OF THE YOCTO PROJECT • We ported the Yocto Project – Official Linux Foundation Workgroup, supported by a large number of industry partners – Part I: Collection of hundreds of recipes (scripts that describe how to build packages for different platforms), shared with OpenEmbedded project – Part II: Bitbake, a parallel build system that takes recipes and fetches, patches, cross-compiles and produces packages (RPM/DEB), images, SDKs, etc. • Focus on build process and customizability 3 GETTING STARTED WITH RISCV-POKY • Let’s build a full Linux system including the GCC toolchain, Linux, QEMU + a large set of packages (including bash, ssh, python, perl, apt, wget,…) • Step I: Clone riscv-poky: git clone [email protected]:ucb-bar/riscv-poky.git • Step II: Set up the build system: source oe-init-build-env • Step III: Build an image (may -

Replacing PGP 2.X with Gnupg

Replacing PGP 2.x with GnuPG This article is based on an earlier PGP 2.x/GnuPG compatability guide (http://www.toehold.com/~kyle/pgp- compat.html) written by Kyle Hasselbacher (<[email protected]>). Mike Ashley (<[email protected]>) edited and expanded it. Michael Fischer v. Mollard (<[email protected]>) transformed the HTML source to Doc- Book SGML and also expanded it further. Some of the details described here came from the gnupg-devel and gnupg-user mailing lists. The workaround for both signing with and encrypting to an RSA key were taken from Gero Treuner’s compatability script (http://muppet.faveve.uni-stuttgart.de/~gero/gpg-2comp/changes.html). Please direct questions, bug reports, or suggesstions to the maintainer, Mike Ashley. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". Introduction This document describes how to communicate with people still using old versions of PGP 2.x GnuPG can be used as a nearly complete replacement for PGP 2.x. You may encrypt and decrypt PGP 2.x messages using imported old keys, but you cannot generate PGP 2.x keys. This document demonstrates how to extend the standard distribution of GnuPG to support PGP 2.x keys as well as what options must be used to ensure inter- operation with PGP 2.x users. -

Lilypond Cheatsheet

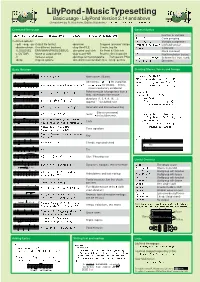

LilyPond-MusicTypesetting Basic usage - LilyPond Version 2.14 and above Cheatsheet by R. Kainhofer, Edition Kainhofer, http://www.edition-kainhofer.com/ Command-line usage General Syntax lilypond [-l LOGLEVEL] [-dSCMOPTIONS] [-o OUTPUT] [-V] FILE.ly [FILE2.ly] \xxxx function or variable { ... } Code grouping Common options: var = {...} Variable assignment --pdf, --png, --ps Output file format -dpreview Cropped “preview” image \version "2.14.0" LilyPond version -dbackend=eps Use different backend -dlog-file=FILE Create .log file % dots Comment -l LOGLEVEL ERR/WARN/PROG/DEBUG -dno-point-and-click No Point & Click info %{ ... %} Block comment -o OUTDIR Name of output dir/file -djob-count=NR Process files in parallel c\... Postfix-notation (notes) -V Verbose output -dpixmap-format=pngalpha Transparent PNG #'(..), ##t, #'sym Scheme list, true, symb. -dhelp Help on options -dno-delete-intermediate-files Keep .ps files x-.., x^.., x_.. Directions Basic Notation Creating Staves, Voices and Groups \version "2.15.0" c d e f g a b Note names (Dutch) SMusic = \relative c'' { c1\p } Alterations: -is/-es for sharp/flat, SLyrics = \lyricmode { Oh! } cis bes as cisis beses b b! b? -isis/-eses for double, ! forces, AMusic = \relative c' { e1 } ? shows cautionary accidental \relative c' {c f d' c,} Relative mode (change less than a \score { fifth), raise/lower one octave \new ChoirStaff << \new Staff { g1 g2 g4 g8 g16 g4. g4.. durations (1, 2, 4, 8, 16, ...); append “.” for dotted note \new Voice = "Sop" { \dynamicUp \SMusic -

Free Software Needs Free Tools

Free Software Needs Free Tools Benjamin Mako Hill [email protected] June 6, 2010 Over the last decade, free software developers have been repeatedly tempted by devel- opment tools that offer the ability to build free software more efficiently or powerfully. The only cost, we are told, is that the tools themselves are nonfree or run as network services with code we cannot see, copy, or run ourselves. In their decisions to use these tools and services – services such as BitKeeper, SourceForge, Google Code and GitHub – free software developers have made “ends-justify-the-means” decisions that trade away the freedom of both their developer communities and their users. These decisions to embrace nonfree and private development tools undermine our credibility in advocating for soft- ware freedom and compromise our freedom, and that of our users, in ways that we should reject. In 2002, Linus Torvalds announced that the kernel Linux would move to the “Bit- Keeper” distributed version control system (DVCS). While the decision generated much alarm and debate, BitKeeper allowed kernel developers to work in a distributed fashion in a way that, at the time, was unsupported by free software tools – some Linux developers decided that benefits were worth the trade-off in developers’ freedom. Three years later the skeptics were vindicated when BitKeeper’s owner, Larry McVoy, revoked several core kernel developers’ gratis licenses to BitKeeper after Andrew Tridgell attempted to write a free replacement for BitKeeper. Kernel developers were forced to write their own free software replacement: the project now known as Git. Of course, free software’s relationships to nonfree development tools is much larger than BitKeeper.