Modernization | Supplement

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Energy Star Qualified Buildings

1 ENERGY STAR® Qualified Buildings As of 1-1-03 Building Address City State Alabama 10044 3535 Colonnade Parkway Birmingham AL Bellsouth City Center 600 N 19th St. Birmingham AL Arkansas 598 John L. McClellan Memorial Veterans Hospital 4300 West 7th Street Little Rock AR Arizona 24th at Camelback 2375 E Camelback Phoenix AZ Phoenix Federal Courthouse -AZ0052ZZ 230 N. First Ave. Phoenix AZ 649 N. Arizona VA Health Care System - Prescott 500 Highway 89 North Prescott AZ America West Airlines Corporate Headquarters 111 W. Rio Salado Pkwy. Tempe AZ Tempe, AZ - Branch 83 2032 West Fourth Street Tempe AZ 678 Southern Arizona VA Health Care System-Tucson 3601 South 6th Avenue Tucson AZ Federal Building 300 West Congress Tucson AZ Holualoa Centre East 7810-7840 East Broadway Tucson AZ Holualoa Corporate Center 7750 East Broadway Tucson AZ Thomas O' Price Service Center Building #1 4004 S. Park Ave. Tucson AZ California Agoura Westlake 31355 31355 Oak Crest Drive Agoura CA Agoura Westlake 31365 31365 Oak Crest Drive Agoura CA Agoura Westlake 4373 4373 Park Terrace Dr Agoura CA Stadium Centre 2099 S. State College Anaheim CA Team Disney Anaheim 700 West Ball Road Anaheim CA Anahiem City Centre 222 S Harbor Blvd. Anahiem CA 91 Freeway Business Center 17100 Poineer Blvd. Artesia CA California Twin Towers 4900 California Ave. Bakersfield CA Parkway Center 4200 Truxton Bakersfield CA Building 69 1 Cyclotron Rd. Berkeley CA 120 Spalding 120 Spalding Dr. Beverly Hills CA 8383 Wilshire 8383 Wilshire Blvd. Beverly Hills CA 9100 9100 Wilshire Blvd. Beverly Hills CA 9665 Wilshire 9665 Wilshire Blvd. -

Amazon's Document

REQUEST FOR INFORMATION Project Clancy TALENT A. Big Questions and Big Ideas 1. Population Changes and Key Drivers. a. Population level - Specify the changes in total population in your community and state over the last five years and the major reasons for these changes. Please also identify the majority source of inbound migration. New York City’s population grew from [...] million to [...] million over the last five years and is projected to surpass 9 million by 2030.1 New York City continues to attract a dynamic and diverse population of professionals, students, and families of all backgrounds, mainly from Latin America (including the Caribbean, Central America, and South America), China, and Eastern Europe.2 Estimate of New York City’s Population Year Population 2011 8,244,910 2012 8,336,697 2013 8,405,837 2014 8,491,079 2015 8,550,405 2016 8,537,673 Source: American Community Survey 1-Year Estimates Cumulative Estimates of the Components of Population Change for New York City and Counties Time period: April 1, 2010 - July 1, 2016 Total Natural Net Net Net Geographic Area Population Increase Migration: Migration: Migration: Change (Births-Deaths) Total Domestic International New York City Total 362,540 401,943 -24,467 -524,013 499,546 Bronx 70,612 75,607 -3,358 -103,923 100,565 Brooklyn 124,450 160,580 -32,277 -169,064 136,787 Manhattan 57,861 54,522 7,189 -91,811 99,000 1 New York City Population Projections by Age/Sex & Borough, 2010-2040 2 Place of Birth for the Foreign-Born Population in 2012-2016, American Community Survey PROJECT CLANCY PROPRIETARY AND CONFIDENTIAL 4840-0257-2381.3 1 Queens 102,332 99,703 7,203 -148,045 155,248 Staten Island 7,285 11,531 -3,224 -11,170 7,946 Source: Population Division, U.S. -
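
The migration columns in the excerpt above appear to be additive: total net migration looks like the sum of the domestic and international figures. The short Python sketch below checks that reading against the six rows quoted in the excerpt. The dictionary and function names are illustrative assumptions, not part of the source document.

```python
# Figures copied from the excerpt above (April 1, 2010 - July 1, 2016):
# (net migration total, net domestic migration, net international migration).
NET_MIGRATION = {
    "New York City Total": (-24_467, -524_013, 499_546),
    "Bronx": (-3_358, -103_923, 100_565),
    "Brooklyn": (-32_277, -169_064, 136_787),
    "Manhattan": (7_189, -91_811, 99_000),
    "Queens": (7_203, -148_045, 155_248),
    "Staten Island": (-3_224, -11_170, 7_946),
}


def inconsistent_rows(table):
    """Return the areas where total != domestic + international."""
    return [
        area
        for area, (total, domestic, international) in table.items()
        if total != domestic + international
    ]


if __name__ == "__main__":
    # An empty list means every quoted row is internally consistent.
    print(inconsistent_rows(NET_MIGRATION))
```

The check covers only the migration columns. The total population change is not the plain sum of natural increase and net migration in the quoted figures, so that column is left out.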

Igæa Corporate Plan

In the beginning … water covered the Earth. Out of the water emerged an island, a single giant mass of land; an ancient supercontinent called Pangaea. Over billions of years, tectonic forces broke Pangaea apart, dividing the supercontinent into ever distant land masses: Africa, Eurasia, Australasia, North and South America. As the land masses drifted farther apart, so too did the people who inhabited them. Huge bodies of water, time and space, separated people from their friends, family, loved ones and partners in trade. Until now… Igæa: (eye-jee-uh) n: “i” mod. (interconnected; internet; idea); “Gaea” Grk. (earth; land). 1) The new supercontinent; a virtual place created through light speed communications; 2) Interconnected Earth; everywhere at once, omnipresent; 3) A global Internet Protocol (IP) communications network carrying streaming digital voice, video and data, transcending physical barriers, to bring all the people of the world together in one virtual place at any time. Important Information Confidential This corporate plan and all of its contents were produced internally by Igæa. For more information contact: Kirk Rittenhouse Manz, CEO Igæa 119 Windsor Drive Nashville, Tennessee 37205 E-mail [email protected] Telephone 615/353-9737 Facsimile 615/353-9521 www.igaea.com 2nd Quarter 2000 This business plan is provided for purposes of information and evaluation only. It does not constitute an offer to sell, or a solicitation of securities, offers to buy or any other interest in the business. Any such offering will be made only by appropriate documents and in accordance with applicable State and Federal laws. The information contained in this document is absolutely confidential and is intended only for persons to whom it is transmitted by the Company and to their immediate business associates with whom they are required to confer in order to properly evaluate this business opportunity. -

THE VERIZON TELEPHONE COMPANIES TARIFF F.C.C. NO. 21 1st Revised Page 4-1 Cancels Original Page 4-1

THE VERIZON TELEPHONE COMPANIES TARIFF F.C.C. NO. 21 1st Revised Page 4-1 Cancels Original Page 4-1 SPECIAL CONSTRUCTION (D)(x) (S)(y) 4. Verizon New York Inc. Special Construction Cases 4.1 Charges for the State of Connecticut (Company Code 5131) 4.1.1 Special Construction Cases Prior to May 25, 1984 The following cases are subject to the regulations specified in 2.1 through 2.8 preceding, with the following exception. When the initial liability period expires, an annual underutilization charge applies to the difference between 70% of the number of original specially constructed facilities and the number of facilities in service at filed tariff rates at that time. For purposes of determining the underutilization charge, any facilities subject to minimum period monthly charges are considered to be in service at filed tariff rates. There are no special construction cases prior to May 24, 1984 for the State of Connecticut. (S)(y) (D)(x) (x) Filed under authority of Special Permission No. 02-053 of the Federal Communications Commission. (y) Reissued material filed under TR 169, to become effective on April 13, 2002. (TR 176) Issued: April 11, 2002 Effective: April 13, 2002 Vice President, Federal Regulatory 1300 I Street, NW, Washington, D.C. 20005 THE VERIZON TELEPHONE COMPANIES TARIFF F.C.C. NO. 21 8th Revised Page 4-2 Cancels 7th Revised Page 4-2 SPECIAL CONSTRUCTION 4. Verizon New York Inc. Special Construction Cases (Cont'd) 4.1 Charges for the State of Connecticut (Company Code 5131) (Cont'd) 4.1.2 Charges to Provide Permanent Facilities Customer: Greenwich Capital Markets ID# 2003-264110 Description: Special Construction of facilities to provide fiber based telecommunications services at 600 Steamboat Road, Greenwich, CT 06830. -
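
The underutilization rule in section 4.1.1 of the excerpt above can be illustrated with a small Python sketch. It computes only the number of facilities the charge would apply to, since the excerpt gives no rate. The function and parameter names are hypothetical, and flooring the result at zero is an assumption; the excerpt does not say what happens when utilization exceeds the 70% threshold.

```python
def underutilized_facilities(original, in_service_at_tariff, under_minimum_period=0):
    """Number of facilities an underutilization charge would apply to.

    original: number of original specially constructed facilities.
    in_service_at_tariff: facilities in service at filed tariff rates
        when the initial liability period expires.
    under_minimum_period: facilities subject to minimum period monthly
        charges, which the excerpt says are treated as in service.
    """
    threshold = 70 * original / 100  # 70% of the original facilities
    counted_in_service = in_service_at_tariff + under_minimum_period
    # Assumption: no charge applies when the threshold is met or exceeded.
    return max(0.0, threshold - counted_in_service)


if __name__ == "__main__":
    # 100 original facilities, 50 in service, 10 under minimum period charges.
    print(underutilized_facilities(100, 50, 10))
```

With 100 original facilities the threshold is 70; counting 60 as in service leaves a shortfall of 10 facilities. Any annual charge would then depend on a rate that the excerpt does not give.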

NL August 1987

VOL. 12, NUMBER 5 The Newsletter of the Park West Village Tenants' Association AUGUST 1987 MORE PWV MANAGEMENT CHANGES There has now been a complete changing of the guard in the senior management of PWV. Starting at the very top, Leona Helmsley's office is taking an increasing operational responsibility reviewing, for example, roof repair bids at 400. Previously reported was the summary dismissal of Myron Marmorstein (October 1986) after more than 20 years at PWV. Michael MacGovern, an 18-year PWV veteran, was similarly dismissed from his post as Operations Manager in June. Richard Weiss and Gerald Harris, formerly directing PWV from Helmsley Spear's downtown headquarters, and Carol Mann, a vice president of the Park West Management Corp., and the signer of the purchase agreements for Helmsley's Supervisory Management Company, are no longer with the Helmsley corporations. MANAGEMENT INVITES PWVTA TO MEET After numerous requests from PWVTA to meet with Park West Management over the years, which were always turned down (indeed, our existence as a tenants' association was never officially recognized in any way), we were invited by the new Park West Manager, Alex Butkevich, to meet with him and his colleagues. We met with them on July 23 and were assured that they were serious in wanting to discuss tenant matters with us. This was subsequently put in writing by Benjamin E. Lipschitz, Vice President of Park West Management Corp. We were told of the changes which will be made in 372/382 (detailed elsewhere in this newsletter), including having TV cameras in -

2007 Labeled Buildings List Final Feb6 Bystate

ENERGY STAR® Qualified Buildings and Manufacturing Plants As of December 31, 2007 Building/Plant Name City State Building/Plant Type Alabama Calhoun County Administration Building Anniston AL Courthouse Calhoun County Court House Anniston AL Courthouse 10044 Birmingham AL Office Alabama Operations Center Birmingham AL Office BellSouth City Center Birmingham AL Office Birmingham Homewood TownePlace Suites by Marriott Birmingham AL Hotel/Motel Roberta Plant Calera AL Cement Plant Honda Manufacturing of Alabama, LLC Lincoln AL Auto Assembly Plant Alaska Elmendorf AFB, 3MDG, DoD/VA Joint Venture Hospital Elmendorf Air Force Base AK Hospital Arizona 311QW - Phoenix Chandler Courtyard Chandler AZ Hotel/Motel Bashas' Chandler AZ Supermarket/Grocery Bashas' Food City Chandler AZ Supermarket/Grocery Phoenix Cement Clarkdale AZ Cement Plant Flagstaff Embassy Suites Flagstaff AZ Hotel/Motel Fort Defiance Indian Hospital Fort Defiance AZ Hospital 311K5 - Phoenix Mesa Courtyard Mesa AZ Hotel/Motel 100 North 15th Avenue Building Phoenix AZ Office 1110 West Washington Building Phoenix AZ Office 24th at Camelback Phoenix AZ Office 311JF - Phoenix Camelback Courtyard Phoenix AZ Hotel/Motel 311K3 - Courtyard Phoenix Airport Phoenix AZ Hotel/Motel 311K4 - Phoenix North Courtyard Phoenix AZ Hotel/Motel 3131 East Camelback Phoenix AZ Office 57442 - Phoenix Airport Residence Inn Phoenix AZ Hotel/Motel Arboleda Phoenix AZ Office Bashas' Food City Phoenix AZ Supermarket/Grocery Biltmore Commerce Center Phoenix AZ Office Biltmore Financial Center I Phoenix AZ -

Skyscrapers and District Heating, an Inter-Related History 1876-1933

Skyscrapers and District Heating, an inter-related History 1876-1933. Introduction: The aim of this article is to examine the relationship between a new urban and architectural form, the skyscraper, and an equally new urban infrastructure, district heating, both of which were born in the north-east United States during the late nineteenth century and then developed in tandem through the 1920s and 1930s. These developments will then be compared with those in Europe, where the context was comparatively conservative as regards such innovations, which virtually never occurred together there. I will argue that the finest example in Europe of skyscrapers and district heating planned together, at Villeurbanne near Lyons, was the direct consequence of American influence. Whilst central heating had appeared in the United Kingdom in the late eighteenth and the early nineteenth centuries, district heating, which developed the same concept at an urban scale, was realized in Lockport (on the Erie Canal, in New York State) in the 1880s. The United States was also the birthplace of two important scientists in the fields of heating and energy, Benjamin Franklin (1706-1790) and Benjamin Thompson Rumford (1753-1814). Standard radiators and boilers - heating surfaces which could be connected to central or district heating - were also first patented in the United States in the late 1850s.1 A district heating system produces energy in a boiler plant - steam or high-pressure hot water - with pumps delivering the heated fluid to distant buildings, sometimes a few kilometers away. Heat is therefore used just as in other urban networks, such as those for gas and electricity. -

Chapter 5. Historic Resources 5.1 Introduction

CHAPTER 5. HISTORIC RESOURCES 5.1 INTRODUCTION 5.1.1 CONTEXT Lower Manhattan is home to many of New York City’s most important historic resources and some of its finest architecture. It is the oldest and one of the most culturally rich sections of the city. Thus numerous buildings, street fixtures and other structures have been identified as historically significant. Officially recognized resources include National Historic Landmarks, other individual properties and historic districts listed on the State and National Registers of Historic Places, properties eligible for such listing, New York City Landmarks and Historic Districts, and properties pending such designation. National Historic Landmarks (NHL) are nationally significant historic places designated by the Secretary of the Interior because they possess exceptional value or quality in illustrating or interpreting the heritage of the United States. All NHLs are included on the National Register, which is the nation’s official list of historic properties worthy of preservation. Historic resources include both standing structures and archaeological resources. Historically, Lower Manhattan’s skyline was developed with the most technologically advanced buildings of the time. As skyscraper technology allowed taller buildings to be built, many pioneering buildings were erected in Lower Manhattan, several of which were intended to be, and were, the tallest building in the world, such as the Woolworth Building. These modern skyscrapers were often constructed alongside older low buildings. By the mid-20th century, the Lower Manhattan skyline was a mix of historic and modern, low and high-rise structures, demonstrating the evolution of building technology, as well as New York City’s changing and growing streetscapes. -

Life After Lockup: Improving Reentry from Jail to the Community Kathy Gnall, Pennsylvania Department of Correction Miriam J

Amy L. Solomon Jenny W.L. Osborne Stefan F. LoBuglio Jeff Mellow Debbie A. Mukamal 2100 M Street NW Washington, DC 20037 www.urban.org © 2008 Urban Institute On the Cover: Center photograph reprinted with permission from the Center for Employment Opportunities. This report was prepared under grant 2005-RE-CX-K148 awarded by the Bureau of Justice Assistance. The Bureau of Justice Assistance is a component of the Office of Justice Programs, which also includes the Bureau of Justice Statistics, the National Institute of Justice, the Office of Juvenile Justice and Delinquency Prevention, and the Office for Victims of Crime. Opinions expressed in this document are those of the authors and do not necessarily represent the official position or policies of the U.S. Department of Justice, the Urban Institute, John Jay College of Criminal Justice, or the Montgomery County, Maryland Department of Correction and Rehabilitation. About the Authors Amy L. Solomon is a senior research associate at the Urban Institute, where she works to link the research activities of the Justice Policy Center to the policy and practice arenas. Amy directs projects relating to reentry from local jails, community supervision, and innovative reentry practices at the neighborhood level. Jenny W.L. Osborne is a research associate in the Urban Institute’s Justice Policy Center, where she is involved in policy and practitioner-oriented prisoner reentry projects and serves as the center’s primary contact for prisoner reentry research. Jenny is the project coordinator for the Jail Reentry Roundtable Initiative and the Transition from Jail to Community project. -

Guide to the Department of Buildings Architectural Drawings and Plans for Lower Manhattan, Circa 1866-1978 Collection No

NEW YORK CITY MUNICIPAL ARCHIVES 31 CHAMBERS ST., NEW YORK, NY 10007 Guide to the Department of Buildings architectural drawings and plans for Lower Manhattan, circa 1866-1978 Collection No. REC 0074 Processing, description, and rehousing by the Rolled Building Plans Project Team (2018-ongoing): Amy Stecher, Porscha Williams Fuller, David Mathurin, Clare Manias, Cynthia Brenwall. Finding aid written by Amy Stecher in May 2020. Summary Record Group: RG 025: Department of Buildings Title of the Collection: Department of Buildings architectural drawings and plans for Lower Manhattan Creator(s): Manhattan (New York, N.Y.). Bureau of Buildings; Manhattan (New York, N.Y.). Department of Buildings; New York (N.Y.). Department of Buildings; New York (N.Y.). Department of Housing and Buildings; New York (N.Y.). Department for the Survey and Inspection of Buildings; New York (N.Y.). Fire Department. Bureau of Inspection of Buildings; New York (N.Y.). Tenement House Department Date: circa 1866-1978 Abstract: The Department of Buildings requires the filing of applications and supporting material for permits to construct or alter buildings in New York City. This collection contains the plans and drawings filed with the Department of Buildings between 1866-1978, for the buildings on all 958 blocks of Lower Manhattan, from the Battery to 34th Street, as well as a small quantity of material for blocks outside that area. -

Annual Report 2012 a MESSAGE from the CHAIR and the PRESIDENT

Annual Report 2012 A MESSAGE FROM THE CHAIR AND THE PRESIDENT In 2009, the Hudson Square Connection was established as the 64th Business Improvement District (BID) in New York City. Since its inception, the organization has worked hard to foster a strong sense of community in Hudson Square. Our objective has always been to create a special place in New York where people want to work, play and live their lives. Fiscal Year 2012 was a breakout year for us as an organization. It was the year we began to see real progress against our ambitious goals. During Fiscal Year 2012, we made great strides in achieving our mission to reclaim our streets and sidewalks for people. Numerous improvements have led to an enhanced environment that balances pedestrians, cyclists and motorists. The Hudson Square Connection’s Pedestrian Traffic Managers are now a part of our community, easing the way for all of us as we make our way to the subways each evening. This Fiscal Year, our Board also approved an ambitious five-year $27 million Streetscape Improvement Plan, which is poised to further the transformation of Hudson Square. Prepared with a team led by Matthews Nielsen Landscape Architects, the Plan provides a blueprint for beautifying and enlivening the streets, and reinforcing a socially, culturally and environmentally connected community. The Plan is constructed as a public-private partnership between the BID and the City of New York. Our thanks to all of you who spent so much time sharing your thoughts and your dreams for this wonderful neighborhood. We hope you’ll agree that the Plan captures the unique and vibrant identity of Hudson Square. Let’s work together to make it happen! During our first years as a BID, we talked a great deal, as a community, about the future of -

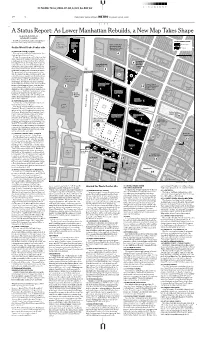

As Lower Manhattan Rebuilds, a New Map Takes Shape

THE NEW YORK TIMES METRO SUNDAY, JULY 4, 2004 CITY A Status Report: As Lower Manhattan Rebuilds, a New Map Takes Shape By DAVID W. DUNLAP and GLENN COLLINS Below are projects in and around ground zero and where they stood as of Friday. [Map: "Development Plan" of the World Trade Center site and surrounding streets, keyed to buildings and parks; labels include the Embassy Suites Hotel/UA Battery Park City theater, the Goldman Sachs site, the Verizon Building (140 West St.), Fiterman Hall (Borough of Manhattan Community College), 7 World Trade Center, 3 and 4 World Financial Center, 100 Church St., and the former site of 6 World Trade Center, the United States Custom House.] BUILDINGS On the World Trade Center site (A) FREEDOM TOWER / TOWER 1 Today, the cornerstone will be laid for this skyscraper, with about 60,000 square feet of retail space at its base, followed by 2.6 million square feet of office space on 70 stories, topped by three stories including an observation deck and restaurants. Above the enclosed portion will be an open-air structure with wind turbines and television antennas. The governor’s office is a prospective tenant. Occupancy is expected in late 2008. The cost of the tower, apart from the infrastructure below, is estimated at $1 billion to $1.3 billion.