Recommendations for Security and Safety Co-Engineering Merge ITEA2 Project # 11011

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

CMMN Modeling of the Risk Management Process Extended Abstract

CMMN modeling of the risk management process. Extended abstract. Tiago Barros Ferreira, Instituto Superior Técnico, [email protected]

ABSTRACT

Risk management is a development activity and increasingly plays a crucial role in organization's management. Being very valuable for organizations, they develop and implement enterprise risk management strategies that improve their business model and bring them better results. Enterprise risk management strategies are based on the implementation of a risk management process and supporting structure. Together, this process and structure form a framework. ISO 31000 standard is currently the main global reference framework for risk management, proposing the general principles and guidelines for risk management, regardless of context. The most recent revision of the standard ISO 31000:2018 [1] depicts the process of risk management as a case rather than a sequential process. To that ...

Enterprise Risk Management (ERM) is thus a very important activity to support the achievement of the organizations' goals. Risk management is defined as “coordinated activities to direct and control an organization with regard to risk” [1]. ERM, as a risk management activity, comprises the identification of the potential events in the whole organization that can affect the objectives, the respective risk appetite, and thus, with reasonable assurance, the fulfillment of the organization's goals [2].

Many organizations nowadays have their risk management framework, including activities to identify their risks, and their prevention measures, documented. To this end, [1] is at this moment the main reference for risk management frameworks, proposing the general principles and guidelines for risk management, regardless of their context.

Office Market Review 2015 Update Final Report

Office Market Review 2015 Update Final Report On Bath City Centre on behalf of Bath and North East Somerset Council Prepared by Lambert Smith Hampton Tower Wharf Cheese Lane Bristol BS2 0JJ Tel: 0117 926 6666 Date: October 2015 CONTENTS INTRODUCTION EXECUTIVE SUMMARY 4 1. SUPPLY OF OFFICE ACCOMMODATION 5 1.1 Location 5 1.2 Supply 5 1.3 Grade of offices 5 1.4 The Office stock 6 1.5 Vacancy rate 1.6 Impact of Permitted development rights 1.7 Take Up 9 1.8 Additional supply 10 2. DEMAND 2.1 Sources of demand 12 2.2 Latent and suppressed demand 12 2.3 Requirement of modern occupiers 13 2.4 Attraction of Bath city centre to office occupiers 14 3. DELIVERY OF OFFICE SPACE 3.1 Why has Bath not delivered private sector investment. 16 3.2 What should Bath be seeking to deliver. 16 3.3 Opportunities. 18 4. CONCLUSIONS & OBSERVATIONS 4.1 General 21 4.2 Bath Occupiers 21 4.3 Potential Occupiers 21 4.4 The Future 22 Appendices Appendix 1 Area Plan Appendix 2 Bath overview and statistics Appendix 3 Bath Office Occupiers Survey Bath & North East Somerset Council 2 October 2015 Office Market Review INTRODUCTION Lambert Smith Hampton has been requested by Bath and North-East Somerset Council to provide an up dated market analysis of the existing supply and demand for offices in Bath City Centre. This analysis will be used to help inform and shape policy. It will also feed into the potential delivery of new office space and direction of the Council’s Place-Making Plan. -

Deliverable D7.5: Standards and Methodologies Big Data Guidance

Project acronym: BYTE
Project title: Big data roadmap and cross-disciplinarY community for addressing socieTal Externalities
Grant number: 619551
Programme: Seventh Framework Programme for ICT
Objective: ICT-2013.4.2 Scalable data analytics
Contract type: Co-ordination and Support Action
Start date of project: 01 March 2014
Duration: 36 months
Website: www.byte-project.eu

Deliverable D7.5: Standards and methodologies big data guidance
Author(s): Jarl Magnusson, DNV GL AS; Erik Stensrud, DNV GL AS; Tore Hartvigsen, DNV GL AS; Lorenzo Bigagli, National Research Council of Italy
Dissemination level: Public
Deliverable type: Final
Version: 1.1
Submission date: 26 July 2017

Table of Contents
Preface
Task 7.5 Description
Executive summary
1 Introduction
2 Big Data Standards Organizations
3 Big Data Standards
4 Big Data Quality Standards

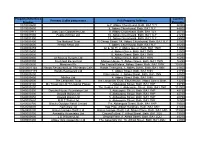

Property Reference Number Primary Liable Party Name Full Property

Property Reference Current Primary Liable party name Full Property Address Number Rateable 0010000600 6-7, Abbey Churchyard, Bath, BA1 1LY 30000 0010000800 8, Abbey Churchyard, Bath, BA1 1LY 44500 0010000911 Jody Cory Goldsmiths Ltd 9, Abbey Churchyard, Bath, BA1 1LY 20750 0010001000 Fudge Kitchen Ltd 10, Abbey Churchyard, Bath, BA1 1LY 21250 0010001300 13, Abbey Churchyard, Bath, BA1 1LY 25000 0010001400 The National Trust 15 Cheap Street, 14, Abbey Churchyard, Bath, BA1 1LY 51000 0010500000 Presto Retail Ltd 7, Abbey Churchyard, Bath, BA1 1LY 38250 0020000255 Gr & 1st Fl, 2, Abbey Green, Bath, BA1 1NW 13000 0020000400 4, Abbey Green, Bath, BA1 1NW 12500 0020000500 5, Abbey Green, Bath, BA1 1NW 28250 0020000800 At Sixes & Sevens Ltd 8, Abbey Green, Bath, BA1 1NW 16250 0020000900 Freshford Bazaar Ltd Mignon House, 9, Abbey Green, Bath, BA1 1NW 16250 0020500000 Marstons Plc The Crystal Palace, Abbey Green, Bath, BA1 1NW 103000 00300001165 Hands Restaurant Ltd T/A Hands Cafe Hands Tearooms, 1, Abbey Street, Bath, BA1 1NN 21500 00300001175 Hands Restaurant Ltd 1, Abbey Street, Bath, BA1 1NN 21750 0030000200 Elton House, 2, Abbey Street, Bath, BA1 1NN 24500 0030000400 Mirifice Ltd 4, Abbey Street, Bath, BA1 1NN 31750 0030000500 The Landmark Trust The Landmark Trust, Elton House, Abbey Street, Bath, 13250 0040000100 My Travel Uk Ltd T/A Going Places 1, Abbeygate Street, Bath, BA1 1NP 39750 0040000200 The Golden Cot, 2, Abbeygate Street, Bath, BA1 1NP 112000 0040000300 Dorothy House Foundation Ltd 3, Abbeygate Street, Bath, BA1 1NP 45750 0040000400 -

A Quick Guide to Responsive Web Development Using Bootstrap 3

STEP BY STEP BOOTSTRAP 3: A QUICK GUIDE TO RESPONSIVE WEB DEVELOPMENT USING BOOTSTRAP 3 Author: Riwanto Megosinarso Number of Pages: 236 pages Published Date: 22 May 2014 Publisher: Createspace Independent Publishing Platform Publication Country: United States Language: English ISBN: 9781499655629 Step by Step Bootstrap 3: A Quick Guide to Responsive Web Development Using Bootstrap 3 PDF Book Written by a team of noted teaching experts led by award-winning Texas-based author Dr. Today we know that many other factors influence the well-functioning of a computer system. Rossi, M. Answers to selected exercises and a list of commonly used words are provided at the back of the book. He demonstrates how the most dynamic and effective people - from CEOs to film-makers to software entrepreneurs - deploy them. The chapters in this volume illustrate how learning scientists, assessment experts, learning technologists, and domain experts can work together in an integrated effort to develop learning environments centered on challenge-based instruction, with major support from technology. This continues to be the only book that brings together all of the steps involved in communicating findings based on multivariate analysis - finding data, creating variables, estimating statistical models, calculating overall effects, organizing ideas, designing tables and charts, and writing prose - in a single volume. Step by Step Bootstrap 3: A Quick Guide to Responsive Web Development Using Bootstrap 3 Writer The Dreams Our Stuff is Made Of: How Science Fiction Conquered the World Advance Praise "What a treasure house is this book.

Copy of NNDR Assessments Exceeding 30000 20 06 11

Company name Full Property Address inc postcode Current Rateable Value Eriks Uk Ltd Ber Ltd, Brassmill Lane, Bath, BA1 3JE 69000 Future Publishing Ltd 1st & 2nd Floors, 5-10, Westgate Buildings, Bath, BA1 1EB 163000 Nationwide Building Society Nationwide Building Society, 3-4, Bath Street, Bath, BA1 1SA 112000 The Eastern Eye Ltd 1st & 2nd Floors, 8a, Quiet Street, Bath, BA1 2JU 32500 Messers A Jones & Sons Ltd 19, Cheap Street, Bath, BA1 1NA 66000 Bath & N E Somerset Council 12, Charlotte Street, Bath, BA1 2NE 41250 Carr & Angier Levels 6 & Part 7, The Old Malthouse, Clarence Street, Bath, BA1 5NS 30500 Butcombe Brewery Ltd Old Crown (The), Kelston, Bath, BA1 9AQ 33000 Shaws (Cardiff) Ltd 19, Westgate Street, Bath, BA1 1EQ 50000 Lifestyle Pharmacy Ltd 14, New Bond Street, Bath, BA1 1BE 84000 Bath Rugby Ltd Club And Prems, Bath Rfc, Pulteney Mews, Bath, BA2 4DS 110000 West Of England Language Services Ltd International House Language S, 5, Trim Street, Bath, BA1 1HB 43250 O'Hara & Wood 29, Gay Street, Bath, BA1 2PD 33250 Wellsway (Bath) Ltd Wellsway B.M.W., Lower Bristol Road, Bath, BA2 3DR 131000 Bath & N E Somerset Council Lewis House, Manvers Street, Bath, BA1 1JH 252500 Abbey National Bldg Society Abbey National Bldg Society, 5a-6, Bath Street, Bath, BA1 1SA 84000 Paradise House Hotel, 86-88, Holloway, Bath, BA2 4PS 42000 12, Upper Borough Walls, Bath, BA1 1RH 35750 Moss Electrical Ltd 45-46 St James Parade, Lower Borough Walls, Bath, BA1 1UQ 49000 Claire Accessories Uk Ltd 23, Stall Street, Bath, BA1 1QF 100000 Thorntons Plc 1st Floor -

EMSCLOUD - an Evaluative Model of Cloud Services Cloud Service Management

See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/277022271 EMSCLOUD - An evaluative model of cloud services cloud service management Conference Paper · May 2015 DOI: 10.1109/INTECH.2015.7173479 CITATION READS 1 146 2 authors, including: Mehran Misaghi Sociedade Educacional de Santa Catarina (… 56 PUBLICATIONS 14 CITATIONS SEE PROFILE All in-text references underlined in blue are linked to publications on ResearchGate, Available from: Mehran Misaghi letting you access and read them immediately. Retrieved on: 05 July 2016 Fifth international conference on Innovative Computing Technology (INTECH 2015) EMSCLOUD – An Evaluative Model of Cloud Services Cloud service management Leila Regina Techio Mehran Misaghi Post-Graduate Program in Production Engineering Post-Graduate Program in Production Engineering UNISOCIESC UNISOCIESC Joinville – SC, Brazil Joinville – SC, Brazil [email protected] [email protected] Abstract— Cloud computing is considered a paradigm both data repository. There are challenges to be overcome in technology and business. Its widespread adoption is an relation to internal and external risks related to information increasingly effective trend. However, the lack of quality metrics security area, such as virtualization, SLA (Service Level and audit of services offered in the cloud slows its use, and it Agreement), reliability, availability, privacy and integrity [2]. stimulates the increase in focused discussions with the adaptation of existing standards in management services for cloud services The benefits presented by cloud computing, such as offered. This article describes the EMSCloud, that is an Evaluative Model of Cloud Services following interoperability increased scalability, high performance, high availability and standards, risk management and audit of cloud IT services. -

International Standard Iso 19600:2014(E)

This preview is downloaded from www.sis.se. Buy the entire standard via https://www.sis.se/std-918326

INTERNATIONAL STANDARD ISO 19600, First edition 2014-12-15

Compliance management systems — Guidelines

Systèmes de management de la conformité — Lignes directrices

Reference number ISO 19600:2014(E) © ISO 2014

COPYRIGHT PROTECTED DOCUMENT © ISO 2014 All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized otherwise in any form or by any means, electronic or mechanical, including photocopying, or posting on the internet or an intranet, without prior written permission. Permission can be requested from either ISO at the address below or ISO’s member body in the country of the requester.

ISO copyright office, Case postale 56 • CH-1211 Geneva 20, Tel. + 41 22 749 01 11, Fax + 41 22 749 09 47, E-mail [email protected], Web www.iso.org

Published in Switzerland

Contents: Foreword; Introduction

Master Glossary

| Term | Definition | Course Appearances |
| --- | --- | --- |
| 12-Factor App Design | A methodology for building modern, scalable, maintainable software-as-a-service applications. | Continuous Delivery Architecture |
| 2-Factor or 2-Step Authentication | Two-Factor Authentication, also known as 2FA or TFA or Two-Step Authentication is when a user provides two authentication factors; usually firstly a password and then a second layer of verification such as a code texted to their device, shared secret, physical token or biometrics. | DevSecOps Engineering |
| A/B Testing | Deploy different versions of an EUT to different customers and let the customer feedback determine which is best. | Continuous Delivery Architecture |
| A3 Problem Solving | A structured problem-solving approach that uses a lean tool called the A3 Problem-Solving Report. The term "A3" represents the paper size historically used for the report (a size roughly equivalent to 11" x 17"). | DevOps Foundation |
| Acceptance of a Solution | The "A" in the Magic Equation that represents acceptance by stakeholders. | DevOps Leader |
| Access Management | Granting an authenticated identity access to an authorized resource (e.g., data, service, environment) based on defined criteria (e.g., a mapped role), while preventing an unauthorized identity access to a resource. | DevSecOps Engineering |
| Access Provisioning | Access provisioning is the process of coordinating the creation of user accounts, e-mail authorizations in the form of rules and roles, and other tasks such as provisioning of physical resources associated with enabling new users to systems or environments. | DevSecOps Engineering |
| Administration Testing | The purpose of the test is to determine if an End User Test (EUT) is able to process administration tasks as expected. | Continuous Delivery Architecture |

05-December-2012 Register of Sponsors Licensed Under the Points-Based System

REGISTER OF SPONSORS (Tiers 2 & 5 and Sub Tiers Only) DATE: 05-December-2012 Register of Sponsors Licensed Under the Points-based System This is a list of organisations licensed to sponsor migrants under Tiers 2 & 5 of the Points-Based System. It shows the organisation's name (in alphabetical order), the tier(s) they are licensed for, and whether they are A-rated or B-rated against each sub-tier. A sponsor may be licensed under more than one tier, and may have different ratings for each tier. No. of Sponsors on Register Licensed under Tiers 2 and 5: 25,750 Organisation Name Town/City County Tier & Rating Sub Tier (aq) Limited Leeds West Yorkshire Tier 2 (A rating) Tier 2 General ?What If! Ltd London Tier 2 (A rating) Tier 2 General Tier 2 (A rating) Intra Company Transfers (ICT) @ Bangkok Cafe Newcastle upon Tyne Tyne and Wear Tier 2 (A rating) Tier 2 General @ Home Accommodation Services Ltd London Tier 2 (A rating) Tier 2 General Tier 5TW (A rating) Creative & Sporting 1 Life London Limited London Tier 2 (A rating) Tier 2 General 1 Tech LTD London Tier 2 (A rating) Tier 2 General 100% HALAL MEAT STORES LTD BIRMINGHAM West Midlands Tier 2 (A rating) Tier 2 General 1000heads Ltd London Tier 2 (A rating) Tier 2 General 1000mercis LTD London Tier 2 (A rating) Tier 2 General Tier 2 (A rating) Intra Company Transfers (ICT) 101 Thai Kitchen London Tier 2 (A rating) Tier 2 General 101010 DIGITAL LTD NEWARK Page 1 of 1632 Organisation Name Town/City County Tier & Rating Sub Tier Tier 2 (A rating) Tier 2 General 108 Medical Ltd London Tier 2 (A rating) Tier 2 General 111PIX.Com Ltd London Tier 2 (A rating) Tier 2 General 119 West st Ltd Glasgow Tier 2 (A rating) Tier 2 General 13 Artists Brighton Tier 5TW (A rating) Creative & Sporting 13 strides Middlesbrough Cleveland Tier 2 (A rating) Tier 2 General 145 Food & Leisure Northampton Northampton Tier 2 (A rating) Tier 2 General 15 Healthcare Ltd London Tier 2 (A rating) Tier 2 General 156 London Road Ltd t/as Cake R us 
Sheffield S. -

OWASP Top 10 - 2017 the Ten Most Critical Web Application Security Risks

OWASP Top 10 - 2017: The Ten Most Critical Web Application Security Risks

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. https://owasp.org

Table of Contents

• TOC - About OWASP
• FW - Foreword
• I - Introduction
• RN - Release Notes
• Risk - Application Security Risks
• T10 - OWASP Top 10 Application Security Risks – 2017
• A1:2017 - Injection
• A2:2017 - Broken Authentication
• A3:2017 - Sensitive Data Exposure
• A4:2017 - XML External Entities (XXE)
• A5:2017 - Broken Access Control
• A6:2017 - Security Misconfiguration
• A7:2017 - Cross-Site Scripting (XSS)
• A8:2017 - Insecure Deserialization
• A9:2017 - Using Components with Known Vulnerabilities
• A10:2017 - Insufficient Logging & Monitoring

About OWASP

The Open Web Application Security Project (OWASP) is an open community dedicated to enabling organizations to develop, purchase, and maintain applications and APIs that can be trusted.

At OWASP, you'll find free and open:

• Application security tools and standards.
• Complete books on application security testing, secure code development, and secure code review.
• Presentations and videos.
• Cheat sheets on many common topics.
• Standard security controls and libraries.
• Local chapters worldwide.
• Cutting edge research.
• Extensive conferences worldwide.
• Mailing lists.

Learn more at: https://www.owasp.org.

All OWASP tools, documents, videos, presentations, and chapters are free and open to anyone interested in improving application security.

We advocate approaching application security as a people, process, and technology problem, because the most effective approaches to application security require improvements in these areas.

OWASP is a new kind of organization.

Recently Completed September October 2015

Current Standards Activities Page 1 of 15 Browse By Group: View All New Publications | Supplements | Amendments | Errata | Endorsements | Reaffirmations | Withdrawals | Formal Interpretations | Informs & Certification Notices | Previous Reports Last updated: November 5, 2015 New Publications This section lists new standards, new editions (including adoptions), and special publications that have been recently published. Clicking on "View Detail" will display the scope of the document and other details available on CSA’s Online store. Program Standard Title Date Posted Scope Quality / Business Z710-15 Activités du Bureau du registre de la nation métisse 10/29/2015 View Detail Management Communications / CAN/CSA-ISO/IEC Identification cards - Test methods - Part 5: Optical memory cards 10/29/2015 View Detail Information 10373-5:15 (Adopted ISO/IEC 10373-5:2014, third edition, 2014-10-01) Communications / CAN/CSA-ISO/IEC Information technology - Programming languages, their 10/29/2015 View Detail Information 1989:15 environments and system software interfaces - Programming language COBOL (Adopted ISO/IEC 1989:2014, second edition, 2014-06-01) Communications / CAN/CSA-ISO/IEC Information technology - Magnetic stripes on savingsbooks 10/29/2015 View Detail Information 8484:15 (Adopted ISO/IEC 8484:2014, second edition, 2014-08-15) http://standardsactivities.csa.ca/standardsactivities/recent_infoupdate.asp 11/5/2015 Current Standards Activities Page 2 of 15 Electrical / Electronics C22.2 NO. 184.1-15 Solid-state dimming controls 10/22/2015 View Detail (Bi-national standard with UL 1472) Electrical / Electronics C22.2 NO. 295-15 Neutral grounding devices 10/22/2015 View Detail Electrical / Electronics C22.2 NO. 94.1-15 Enclosures for electrical equipment, non-environmental 10/22/2015 View Detail considerations (Tri-national standard with NMX-J-235/1-ANCE-2015 and UL 50) Electrical / Electronics C22.2 NO.