An Object-Oriented Approach to the Design of Graphical User Interface Systems


Recommended publications


Interface Types for Haskell

Peter Thiemann and Stefan Wehr, Institut für Informatik, Universität Freiburg, Germany, {thiemann,wehr}@informatik.uni-freiburg.de. Abstract. Interface types are a useful concept in object-oriented programming languages like Java or C#. A clean programming style advocates relying on interfaces without revealing their implementation. Haskell’s type classes provide a closely related facility for stating an interface separately from its implementation. However, there are situations in which no simple mechanism exists to hide the identity of the implementation type of a type class. This work provides such a mechanism through the integration of lightweight interface types into Haskell. The extension is non-intrusive as no additional syntax is needed and no existing programs are affected. The implementation extends the treatment of higher-rank polymorphism in production Haskell compilers. 1 Introduction. Interfaces in object-oriented programming languages and type classes in Haskell are closely related: both define the types of certain operations without revealing their implementations. In Java, the name of an interface also acts as an interface type, whereas the name of a type class can only be used to constrain types. Interface types are a proven tool for ensuring data abstraction and information hiding. In many cases, Haskell type classes can serve the same purpose, but there are situations for which the solutions available in Haskell have severe drawbacks. Interface types provide a simple and elegant solution in these situations. A modest extension to Haskell provides the simplicity and elegance of interface types: simply allow programmers to use the name of a type class as a first-class type.

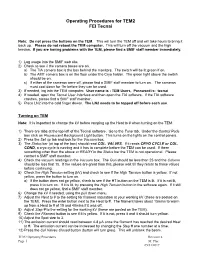

Operating Procedures for TEM2 FEI Tecnai

Note: Do not press the buttons on the TEM. This will turn the TEM off, and it will take hours to bring it back up. Please do not reboot the TEM computer. This will turn off the vacuum and the high tension. If you are having problems with the TEM, please find a SMIF staff member immediately. 1) Log usage into the SMIF web site. 2) Check to see if the camera boxes are on. a) The TIA camera box is the box behind the monitors. The switch will be lit green if on. b) The AMT camera box is on the floor under the Cryo holder. The green light above the switch should be on. c) If either camera is off, please find a SMIF staff member to turn it on. The cameras must cool down for 1 hr before they can be used. 3) If needed, log into the TEM computer. User name: TEM Users; password: tecnai. 4) If needed, open the Tecnai User Interface and then open the TIA software. If the TIA software crashes, please find a SMIF staff member. 5) Place LN2 into the cold finger dewar. The LN2 needs to be topped off before each use. Turning on TEM. Note: It is important to change the kV before ramping up the Heat to # when turning on the TEM. 1) There are tabs at the top left of the Tecnai software. Go to the Tune tab. Under the Control Pads box, click on the Fluorescent Background Light button. This turns on the lights on the control panels.

Chapter 8. Deploying an OSGi Service

The OSGi core framework defines the OSGi Service Layer, which provides a simple mechanism for bundles to interact by registering Java objects as services in the OSGi service registry. One of the strengths of the OSGi service model is that any Java object can be offered as a service: there are no particular constraints, inheritance rules, or annotations that must be applied to the service class. This chapter describes how to deploy an OSGi service using the OSGi blueprint container. Contents: The Blueprint Container (Blueprint Configuration; Defining a Service Bean; Exporting a Service; Importing a Service); Publishing an OSGi Service; Accessing an OSGi Service. FUSE ESB, Deploying into the OSGi Container, Version 4.2.

Mixin-Based Programming in C++

Yannis Smaragdakis (College of Computing, Georgia Institute of Technology, Atlanta, GA 30332, [email protected]) and Don Batory (Department of Computer Sciences, The University of Texas at Austin, Austin, Texas 78712, [email protected]). Abstract. Combinations of C++ features, like inheritance, templates, and class nesting, allow for the expression of powerful component patterns. In particular, research has demonstrated that, using C++ mixin classes, one can express layered component-based designs concisely with efficient implementations. In this paper, we discuss pragmatic issues related to component-based programming using C++ mixins. We explain surprising interactions of C++ features and policies that sometimes complicate mixin implementations, while other times enable additional functionality without extra effort. 1 Introduction. Large software artifacts are arguably among the most complex products of human intellect. The complexity of software has led to implementation methodologies that divide a problem into manageable parts and compose the parts to form the final product. Several research efforts have argued that C++ templates (a powerful parameterization mechanism) can be used to perform this division elegantly. In particular, the work of VanHilst and Notkin [29][30][31] showed how one can implement collaboration-based (or role-based) designs using a certain templatized class pattern, known as a mixin class (or just mixin). Compared to other techniques (e.g., a straightforward use of application frameworks [17]) the VanHilst and Notkin method yields less redundancy and reusable components that reflect the structure of the design. At the same time, unnecessary dynamic binding can be eliminated, resulting into more efficient implementations.

Interface Evolution Via Virtual Extension Methods Brian Goetz Fourth Draft, June 2011

1. Problem statement. Once published, it is impossible to add methods to an interface without breaking existing implementations. (Specifically, adding a method to an interface is not a source-compatible change.) The longer the time since a library has been published, the more likely it is that this restriction will cause grief for its maintainers. The addition of closures to the Java language in JDK 7 places additional stress on the aging Collection interfaces; one of the most significant benefits of closures is that they enable the development of more powerful libraries. It would be disappointing to add a language feature that enables better libraries while at the same time not extending the core libraries to take advantage of that feature. V1 of the Lambda Strawman proposed C#-style static extension methods as a means of creating the illusion of adding methods to existing classes and interfaces, but they have significant limitations: for example, they cannot be overridden by classes that implement the interface being extended, so implementations are stuck with the “one size fits all” implementation provided as an extension, and they are not reflectively discoverable. 2. Virtual extension methods. In this document, we outline a mechanism for adding new methods to existing interfaces, which we call virtual extension methods. Existing interfaces can be augmented without compromising backward compatibility by adding extension methods to the interface, whose declaration would contain instructions for finding the default implementation in the event that implementers do not provide a method body.
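The mechanism outlined in this draft corresponds to what Java 8 later shipped as interface default methods. The sketch below uses that released syntax rather than the draft's own notation, and the names `Shape`, `Square`, `Circle`, and `describe` are hypothetical, chosen only for illustration.

```java
// Hypothetical interface, first published with a single abstract method.
interface Shape {
    double area();

    // Added in a later version. The default body is used whenever an
    // implementer does not supply its own, so older classes keep compiling.
    default String describe() {
        return "shape with area " + area();
    }
}

// Written against the first version: it knows nothing about describe().
class Square implements Shape {
    private final double side;

    Square(double side) {
        this.side = side;
    }

    @Override
    public double area() {
        return side * side;
    }
}

// Unlike a static extension method, the default can be overridden.
class Circle implements Shape {
    private final double radius;

    Circle(double radius) {
        this.radius = radius;
    }

    @Override
    public double area() {
        return Math.PI * radius * radius;
    }

    @Override
    public String describe() {
        return "circle of radius " + radius;
    }
}
```

`Square` inherits the default body while `Circle` replaces it, which is the overriding behaviour the draft says static extension methods cannot offer.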

Dynamic Interfaces

Vasco T. Vasconcelos (Departamento de Informática, Faculdade de Ciências da Universidade de Lisboa, Portugal, [email protected]), Simon J. Gay (Department of Computing Science, University of Glasgow, UK, [email protected]), António Ravara (SQIG at Instituto de Telecomunicações, Department of Mathematics, IST, Technical University of Lisbon, Portugal, [email protected]), Nils Gesbert (Department of Computing Science, University of Glasgow, UK, [email protected]), Alexandre Z. Caldeira (Departamento de Informática, Faculdade de Ciências da Universidade de Lisboa, Portugal, [email protected]). Abstract. We define a small class-based object-oriented language in which the availability of methods depends on an object’s abstract state: objects’ interfaces are dynamic. Each class has a session type which provides a global specification of the availability of methods in each state. A key feature is that the abstract state of an object may depend on the result of a method whose return type is an enumeration. Static typing guarantees that methods are only called when they are available. 1. Introduction. Standard class-based object-oriented languages present the programmer with the following view: an object is declared to belong to a certain type, and the type defines various methods; it is therefore possible at any time to call any of the methods described in the type. However, there are often semantic reasons why it is not appropriate to call a particular method when the object is in a particular internal state. For example: with a stack, one should not attempt to pop

Software User Guide

Cycle Host User's Guide. This guide is an evolving document. If you find sections that are unclear, or missing information, please let us know ([email protected]). Please check our website (www.wetlabs.com) periodically for updates. WET Labs, Inc., PO Box 518, Philomath, OR 97370, 541-929-5650, fax: 541-929-5277, www.wetlabs.com. 28 January 2010. Revision 1.04.

Cycle Host Installation. The following sub-sections detail the steps necessary to install and run the Cycle Host program (also referred to as "the host") for the first time on a new computer. System Requirements. Below are the recommended minimum requirements for a computer to be used to run the host program. Although Windows is currently the only supported operating system, future releases are planned to support Linux and Mac OS as well. Please let us know of your interest in these platforms via [email protected].

| Feature | Requirements |
| --- | --- |
| Operating System | Windows XP/Vista/2000/2003 (32 and 64 bit versions) |
| Memory | 128 Megabytes |
| Disk Space | 125 Megabytes (excludes data file storage) |
| Data Port | Serial port or USB to Serial adapter supporting 19200 baud |
| Input Devices | Keyboard (minimum); Mouse or other pointing device (recommended) |
| Monitor | Color, 1024 x 768 (minimum recommended) |

Table 1: Host Computer System Requirements

The Java Runtime Environment. The Cycle Host program is written in the Java™ language developed by Sun Microsystems Inc. For this reason, execution of the host requires the installation of the Java Runtime Environment, or JRE. At the time of this release the current JRE is Version 6 Update 16.

Create Mixins with Interfaces and Extension Methods by Bill Wagner

Mixins are small utility classes that define some useful functionality that will usually become part of something larger. For example, a class that implements a serial number to uniquely identify an object, or a timestamp that reports when an object was created. In C++, these mixin classes would be created using regular class definitions, and larger classes that wanted to use the mixin behavior would simply inherit from the mixin, in addition to any base classes they needed. Because the .NET Framework does not support multiple inheritance, we’ve typically used interfaces to define mixins. That works, but it’s not as powerful. One of the limitations of using interfaces to define mixin behavior is that every class that implements an interface must reimplement every method defined in that interface. That leads many developers to create minimal interface definitions. (IEqualityComparer<T> has one method, IComparable<T> has only one method.) In those instances, the interface’s full functionality is completely defined in those small methods. But what about cases where your interface can, or should, contain many more methods in order to support the developers who want to use the interface? That’s a perfect use case for extension methods. By creating extension methods using the interface, you can inject many more methods into the public definition of the interface, without forcing every developer to re-create those methods whenever he or she implements that interface. I’ve created a simple example based on strings that represent file paths. I often write classes that contain files or directories, usually for offline caches, or similar needs.
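The article's own example is written in C# with extension methods and is not reproduced in this excerpt. As a rough analogue only, the idea of a small interface that carries extra ready-made behaviour can be sketched in Java by substituting interface default methods for extension methods. The names `HasPath` and `CachedFile` and the helper methods are hypothetical, and the sketch assumes `/` as the path separator.

```java
// Hypothetical mixin-style interface: implementers supply one method...
interface HasPath {
    String path();

    // ...and receive these helpers without re-implementing them.
    default String fileName() {
        String p = path();
        int slash = p.lastIndexOf('/');
        return slash < 0 ? p : p.substring(slash + 1);
    }

    // Returns the text after the last dot, or "" when there is no extension
    // (a leading dot, as in ".bashrc", is not treated as an extension).
    default String extension() {
        String name = fileName();
        int dot = name.lastIndexOf('.');
        return dot <= 0 ? "" : name.substring(dot + 1);
    }
}

// A class that holds a file path only has to implement path().
class CachedFile implements HasPath {
    private final String path;

    CachedFile(String path) {
        this.path = path;
    }

    @Override
    public String path() {
        return path;
    }
}
```

One difference from the C# approach is worth keeping in mind: default methods live inside the interface and can be overridden, whereas C# extension methods are static methods declared outside it.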

User Guide 8.2.4

Copyright. Manual Copyright © 2000-2017 AB Software Consulting Ltd. All rights reserved. AB Software Consulting Ltd. reserves the right to revise this document and to make changes from time to time in the content hereof without obligation to notify any person or persons of such revisions or changes. The software described in this document is supplied under a licence agreement and is protected by UK and international copyright laws. Any implied warranties including any warranties of merchantability or fitness for a particular purpose are limited to the terms of the express warranties set out in the licence agreement. Software Copyright © 2000-2017 AB Software Consulting Ltd. All rights reserved. Trademarks. AB Tutor is the registered trademark of AB Software Consulting Ltd. Windows, Windows 7/8/10/2003/2008/2012 are trademarks of Microsoft Corporation. Other products, trademarks or registered trademarks are the property of their respective owners. Contents: Using AB Tutor Introduction The AB Tutor interface What is AB Tutor? The list view Basic ABT setup The thumbnail view Advanced setup options Commands Introduction to passwords Power commands Startup passwords Connecting to clients Connection password Screen sharing Startup switches Chat (text and audio) Installation Screen Capture Installation on Windows File transfers Installation on Mac OS Key Sequences Installation on iPad Admin commands Activating AB Tutor Launch Push out updates Policies Uninstallation Block printer Remote Deployment Utility Block external drive Site

Angel® Concentrated Platelet Rich Plasma (cPRP) System - Operator’s Manual

Software Version 1.20, DFU-0263-5 Revision 0, 05/2020. Table of Contents. Before You Get Started: Introduction; Indications for Use; Contraindications for Use; Warnings; Precautions; Additional Information; Symbols; Requirements for the disposal of waste electrical and electronic equipment (WEEE); Service Information

Variable-Arity Generic Interfaces

T. Stephen Strickland, Richard Cobbe, and Matthias Felleisen, College of Computer and Information Science, Northeastern University, Boston, MA 02115, [email protected]. Abstract. Many programming languages provide variable-arity functions. Such functions consume a fixed number of required arguments plus an unspecified number of “rest arguments.” The C++ standardization committee has recently lifted this flexibility from terms to types with the adoption of a proposal for variable-arity templates. In this paper we propose an extension of Java with variable-arity interfaces. We present some programming examples that can benefit from variable-arity generic interfaces in Java; a type-safe model of such a language; and a reduction to the core language. 1 Introduction. In April of 2007 the C++ standardization committee adopted Gregor and Järvi's proposal [1] for variable-length type arguments in class templates, which presented the idea and its implementation but did not include a formal model or soundness proof. As a result, the relevant constructs will appear in the upcoming C++09 draft. Demand for this feature is not limited to C++, however. David Hall submitted a request for variable-arity type parameters for classes to Sun in 2005 [2]. For an illustration of the idea, consider the remarks on first-class functions in Scala [3] from the language's homepage. There it says that “every function is a value. Scala provides a lightweight syntax for defining anonymous functions, it supports higher-order functions, it allows functions to be nested, and supports currying.” To achieve this integration of objects and closures, Scala's standard library pre-defines ten interfaces (traits) for function types, which we show here in Java-like syntax: interface Function0<Result> { Result apply(); } interface Function1<Arg1,Result> { Result apply(Arg1 a1); } interface Function2<Arg1,Arg2,Result> { Result apply(Arg1 a1, Arg2 a2); } ..

Using the Bridge Design Pattern for OSGi Service Update

Hans Werner Pohl and Jens Gerlach, {hans,jens}@first.fraunhofer.de, Fraunhofer Institute for Computer Architecture and Software Technology (FIRST), Berlin, Germany. Abstract. In the OSGi framework, components cooperate by sharing service objects. The suggested way to replace a service by a newer version consists of updating its containing components which requires a temporary shutdown of the component. Special care must be taken to avoid dangling references to old service instances. As this appears to be an overly expensive strategy, we describe the use of the well-known Bridge design pattern to decouple service replacement from component updates. Instead of registering services only references to instances of automatically generated bridge classes are registered. This solves not only the problem of dangling references but also avoids stopping and starting dependent bundles. 1 Introduction. The Open Service Gateway Initiative (OSGi)[1] is a consortium that has specified a Java™ component framework[2] for delivering, activating, and replacing services over wide-area networks to local-area networks and devices. Since OSGi server implementations can be quite small, it is a good candidate when looking for supporting runtime evolution in embedded systems. OSGi components interact by sharing so-called service objects. Services are registered by one component and referenced by others. A major problem of OSGi component exchange at runtime is the replacement of service objects. Shutting down all dependent components and restarting the replaced sub-system, as recommended by OSGi, seems overly expensive. In this paper, we describe the use of the well-known Bridge design pattern to decouple service replacement from bundle updates.
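The indirection described in this abstract can be illustrated in plain Java without any OSGi API. The following is a minimal sketch, assuming a hypothetical `Greeter` service interface and a hand-written `GreeterBridge` that stands in for the paper's automatically generated bridge classes. It shows only the reference-swapping idea, not bundle registration or lifecycle handling.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical service interface shared between components.
interface Greeter {
    String greet(String name);
}

// Hand-written stand-in for a generated bridge class. Clients hold the
// bridge, so the implementation behind it can be swapped at runtime.
final class GreeterBridge implements Greeter {
    private final AtomicReference<Greeter> target;

    GreeterBridge(Greeter initial) {
        this.target = new AtomicReference<>(initial);
    }

    // Replace the implementation; references held by clients stay valid.
    void replace(Greeter newer) {
        target.set(newer);
    }

    // Every call is forwarded to whichever implementation is current.
    @Override
    public String greet(String name) {
        return target.get().greet(name);
    }
}
```

A client that obtained the bridge before a call to `replace` sees the new behaviour on its next call, so no dangling reference to the old implementation remains in client code.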