An Introduction to a Class of Matrix Cone Programming

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


On the Cone of a Finite Dimensional Compact Convex Set at a Point

On the Cone of a Finite Dimensional Compact Convex Set at a Point. C. H. Sung, Department of Mathematics, University of California at San Diego, La Jolla, California 92093, and Department of Mathematics, San Diego State University, San Diego, California 92182; and B. S. Tam*, Department of Mathematics, Tamkang University, Taipei, Taiwan 25137, Republic of China. Submitted by Robert C. Thompson. LINEAR ALGEBRA AND ITS APPLICATIONS 90:47-55 (1987). © Elsevier Science Publishing Co., Inc., 1987. *The work of this author was supported in part by the National Science Council of the Republic of China. ABSTRACT Four equivalent conditions for a convex cone in a Euclidean space to be an F_σ-set are given. Our result answers in the negative a recent open problem posed by Tam [5], characterizes the barrier cone of a convex set, and also provides an alternative proof for the known characterizations of the inner aperture of a convex set as given by Brøndsted [2] and Larman [3]. 1. INTRODUCTION Let V be a Euclidean space and C be a nonempty convex set in V. For each y ∈ C, the cone of C at y, denoted by cone(y, C), is the cone { α(x − y) : α > 0 and x ∈ C } (informally called a point's cone). On 23 August 1983, at the International Congress of Mathematicians in Warsaw, in a short communication session, the second author presented the paper [5] and posed the following open question: Is it true that, given any cone K in V, there always exists a compact convex set C whose point's cone at some given point y is equal to K? In view of the relation cone(y, C) = cone(0, C − {y}), the given point y may be taken to be the origin of the space.

New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-Finding

City University of New York (CUNY), CUNY Academic Works, Computer Science Technical Reports, 2014. TR-2014006: New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-Finding. Victor Y. Pan, Ai-Long Zheng. More information about this work at: https://academicworks.cuny.edu/gc_cs_tr/397 This work is made publicly available by the City University of New York (CUNY). Contact: [email protected] New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-finding∗ Victor Y. Pan[1,2],[a] and Ai-Long Zheng[2],[b] ∗Supported by NSF Grant CCF-1116736 and PSC CUNY Award 64512–0042. [1] Department of Mathematics and Computer Science, Lehman College of the City University of New York, Bronx, NY 10468 USA. [2] Ph.D. Programs in Mathematics and Computer Science, The Graduate Center of the City University of New York, New York, NY 10036 USA. [a] [email protected] http://comet.lehman.cuny.edu/vpan/ [b] [email protected] Abstract In 1996 Cardinal applied fast algorithms in Frobenius matrix algebra to complex root-finding for univariate polynomials, but he resorted to some numerically unsafe techniques of symbolic manipulation with polynomials at the final stages of his algorithms. We extend his work to complete the computations by operating with matrices at the final stage as well, and also to adjust them to real polynomial root-finding. Our analysis and experiments show the efficiency of the resulting algorithms. 2000 Math.

Ordered Vector Spaces and Elements of Choquet Theory (A Compendium)

ORDERED VECTOR SPACES AND ELEMENTS OF CHOQUET THEORY (A COMPENDIUM) S. COBZAŞ Contents: 1. Introduction; 2. Cones in vector spaces (2.1. Ordered vector spaces; 2.2. Ordered topological vector spaces (TVS); 2.3. Normal cones in TVS and in LCS; 2.4. Normal cones in normed spaces; 2.5. Dual pairs; 2.6. Bases for cones); 3. Linear operators on ordered vector spaces (3.1. Classes of linear operators; 3.2. Extensions of positive operators; 3.3. The case of linear functionals; 3.4. Order units and the continuity of linear functionals; 3.5. Locally order bounded TVS); 4. Extremal structure of convex sets and elements of Choquet theory (4.1. Faces and extremal vectors; 4.2. Extreme points, extreme rays and Krein-Milman's Theorem; 4.3. Regular Borel measures and Riesz' Representation Theorem; 4.4. Radon measures; 4.5. Elements of Choquet theory; 4.6. Maximal measures; 4.7. Simplexes and uniqueness of representing measures); References. 1. Introduction The aim of these notes is to present a compilation of some basic results on ordered vector spaces and positive operators and functionals acting on them. A short presentation of Choquet theory is also included. They grew out of a talk I delivered at the Seminar on Analysis and Optimization. The presentation follows mainly the books [3], [9], [19], [22], [25], and [11], [23] for the Choquet theory. Note that the first two chapters of [9] contain a thorough introduction (with full proofs) to some basic results on ordered vector spaces.

Subspace-Preserving Sparsification of Matrices with Minimal Perturbation

Subspace-preserving sparsification of matrices with minimal perturbation to the near null-space. Part I: Basics. Chetan Jhurani, Tech-X Corporation, 5621 Arapahoe Ave, Boulder, Colorado 80303, U.S.A. arXiv:1304.7049v1 [math.NA] 26 Apr 2013. Abstract This is the first of two papers to describe a matrix sparsification algorithm that takes a general real or complex matrix as input and produces a sparse output matrix of the same size. The non-zero entries in the output are chosen to minimize changes to the singular values and singular vectors corresponding to the near null-space of the input. The output matrix is constrained to preserve left and right null-spaces exactly. The sparsity pattern of the output matrix is automatically determined or can be given as input. If the input matrix belongs to a common matrix subspace, we prove that the computed sparse matrix belongs to the same subspace. This works without imposing explicit constraints pertaining to the subspace. This property holds for the subspaces of Hermitian, complex-symmetric, Hamiltonian, circulant, centrosymmetric, and persymmetric matrices, and for each of the skew counterparts. Applications of our method include computation of reusable sparse preconditioning matrices for reliable and efficient solution of high-order finite element systems. The second paper in this series [1] describes our open-source implementation, and presents further technical details. Keywords: Sparsification, Spectral equivalence, Matrix structure, Convex optimization, Moore-Penrose pseudoinverse. Email address: [email protected] (Chetan Jhurani). Preprint submitted to Computers and Mathematics with Applications, October 10, 2018. 1. Introduction We present and analyze a matrix-valued optimization problem formulated to sparsify matrices while preserving the matrix null-spaces and certain special structural properties.

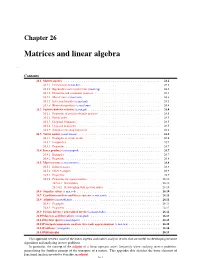

Matrices and Linear Algebra

Chapter 26: Matrices and linear algebra. Contents: 26.1 Matrix algebra (26.1.1 Determinant; 26.1.2 Eigenvalues and eigenvectors; 26.1.3 Hermitian and symmetric matrices; 26.1.4 Matrix trace; 26.1.5 Inversion formulas; 26.1.6 Kronecker products); 26.2 Positive-definite matrices (26.2.1 Properties of positive-definite matrices; 26.2.2 Partial order; 26.2.3 Diagonal dominance; 26.2.4 Diagonal majorizers; 26.2.5 Simultaneous diagonalization); 26.3 Vector norms (26.3.1 Examples of vector norms; 26.3.2 Inequalities; 26.3.3 Properties); 26.4 Inner products (26.4.1 Examples; 26.4.2 Properties); 26.5 Matrix norms (26.5.1

A Logarithmic Minimization Property of the Unitary Polar Factor in The

A logarithmic minimization property of the unitary polar factor in the spectral norm and the Frobenius matrix norm. Patrizio Neff∗, Yuji Nakatsukasa†, Andreas Fischle‡. October 30, 2018. arXiv:1302.3235v4 [math.CA] 2 Jan 2014. Abstract The unitary polar factor Q = U_p in the polar decomposition Z = U_p H is the minimizer over unitary matrices Q for both ‖Log(Q^*Z)‖² and its Hermitian part ‖sym_*(Log(Q^*Z))‖², over both ℝ and ℂ, for any given invertible matrix Z ∈ ℂ^{n×n} and any matrix logarithm Log, not necessarily the principal logarithm log. We prove this for the spectral matrix norm for any n and for the Frobenius matrix norm in two and three dimensions. The result shows that the unitary polar factor is the nearest orthogonal matrix to Z not only in the normwise sense, but also in a geodesic distance. The derivation is based on Bhatia's generalization of Bernstein's trace inequality for the matrix exponential and a new sum of squared logarithms inequality. Our result generalizes the fact for scalars that for any complex logarithm Log_ℂ and for all z ∈ ℂ \ {0}: min over ϑ ∈ (−π, π] of |Log_ℂ(e^{−iϑ} z)|² = |log|z||², and min over ϑ ∈ (−π, π] of |Re Log_ℂ(e^{−iϑ} z)|² = |log|z||². Keywords: unitary polar factor, matrix logarithm, matrix exponential, Hermitian part, minimization, unitarily invariant norm, polar decomposition, sum of squared logarithms inequality, optimality, matrix Lie-group, geodesic distance. AMS 2010 subject classification: 15A16, 15A18, 15A24, 15A44, 15A45, 26Dxx. ∗Corresponding author, Head of Lehrstuhl für Nichtlineare Analysis und Modellierung, Fakultät für Mathematik, Universität Duisburg-Essen, Campus Essen, Thea-Leymann Str.
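The scalar identity quoted in this abstract can be checked numerically. The sketch below is only an illustration under stated assumptions: the sample point `z`, the grid resolution, and the helper name `log_costs` are hypothetical choices, and Python's principal logarithm `cmath.log` stands in for the arbitrary complex logarithm of the abstract.

```python
import cmath
import math


def log_costs(z, theta):
    """Return (|Log(e^{-i*theta} z)|^2, |Re Log(e^{-i*theta} z)|^2),
    using the principal logarithm as one admissible choice of Log."""
    w = cmath.log(cmath.exp(-1j * theta) * z)
    return abs(w) ** 2, w.real ** 2


z = 1.5 - 2.0j  # hypothetical sample point, any nonzero complex number works
steps = 3600
# Uniform grid on the half-open interval (-pi, pi].
thetas = [-math.pi + (k + 1) * 2.0 * math.pi / steps for k in range(steps)]

target = math.log(abs(z)) ** 2  # the claimed minimum, |log|z||^2
best_theta = min(thetas, key=lambda t: log_costs(z, t)[0])
min_full = log_costs(z, best_theta)[0]
# The real part of the logarithm is log|z| for every theta,
# so the second cost is constant along the grid.
real_part_costs = [log_costs(z, t)[1] for t in thetas]
```

On the grid, `min_full` should agree with `target` up to the grid resolution, and `best_theta` should sit close to `cmath.phase(z)`, the angle that rotates `z` onto the positive real axis. This is the scalar analogue of removing the unitary polar factor.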

Some Applications of the Hahn-Banach Separation Theorem 1

Some Applications of the Hahn-Banach Separation Theorem [1]. Frank Deutsch, Hein Hundal, Ludmil Zikatanov. January 1, 2018. arXiv:1712.10250v1 [math.FA] 29 Dec 2017. [1] We dedicate this paper to our friend and colleague Professor Yair Censor on the occasion of his 75th birthday. Abstract We show that a single special separation theorem (namely, a consequence of the geometric form of the Hahn-Banach theorem) can be used to prove Farkas type theorems, existence theorems for numerical quadrature with positive coefficients, and detailed characterizations of best approximations from certain important cones in Hilbert space. 2010 Mathematics Subject Classification: 41A65, 52A27. Key Words and Phrases: Farkas-type theorems, numerical quadrature, characterization of best approximations from convex cones in Hilbert space. 1 Introduction We show that a single separation theorem (the geometric form of the Hahn-Banach theorem) has a variety of different applications. In Section 2 we state this general separation theorem (Theorem 2.1), but note that only a special consequence of it is needed for our applications (Theorem 2.2). The main idea in Section 2 is the notion of a functional being positive relative to a set of functionals (Definition 2.3). Then a useful characterization of this notion is given in Theorem 2.4. Some applications of this idea are given in Section 3. They include a proof of the existence of numerical quadrature with positive coefficients, new proofs of Farkas type theorems, an application to determining best approximations from certain convex cones in Hilbert space, and a specific application of the latter to determine best approximations that are also shape-preserving.

Complemented Brunn–Minkowski Inequalities and Isoperimetry for Homogeneous and Non-Homogeneous Measures

Complemented Brunn–Minkowski Inequalities and Isoperimetry for Homogeneous and Non-Homogeneous Measures. Emanuel Milman [1] and Liran Rotem [2]. Abstract Elementary proofs of sharp isoperimetric inequalities on a normed space (ℝⁿ, ‖·‖) equipped with a measure µ = w(x)dx so that w^p is homogeneous are provided, along with a characterization of the corresponding equality cases. When p ∈ (0, ∞] and in addition w^p is assumed concave, the result is an immediate corollary of the Borell–Brascamp–Lieb extension of the classical Brunn–Minkowski inequality, providing a new elementary proof of a recent result of Cabré–Ros-Oton–Serra. When p ∈ (−1/n, 0), the relevant property turns out to be a novel "q-complemented Brunn–Minkowski" inequality: for all λ ∈ (0, 1) and all Borel sets A, B ⊂ ℝⁿ such that µ(ℝⁿ \ A), µ(ℝⁿ \ B) < ∞, we have µ∗(ℝⁿ \ (λA + (1 − λ)B)) ≤ (λ µ(ℝⁿ \ A)^q + (1 − λ) µ(ℝⁿ \ B)^q)^{1/q}, which we show is always satisfied by µ when w^p is homogeneous with 1/q = 1/p + n; in particular, this is satisfied by the Lebesgue measure with q = 1/n. This gives rise to a new class of measures, which are "complemented" analogues of the class of convex measures introduced by Borell, but which have vastly different properties. The resulting isoperimetric inequality and characterization of isoperimetric minimizers extends beyond the recent results of Cañete–Rosales and Howe. The isoperimetric and Brunn–Minkowski type inequalities also extend to the non-homogeneous setting, under a certain log-convexity assumption on the density.

Perron–Frobenius Theorem for Matrices with Some Negative Entries

Linear Algebra and its Applications 328 (2001) 57–68, www.elsevier.com/locate/laa. Perron–Frobenius theorem for matrices with some negative entries. Pablo Tarazaga (a,∗,1), Marcos Raydan (b,2), Ana Hurman (c). (a) Department of Mathematics, University of Puerto Rico, P.O. Box 5000, Mayaguez Campus, Mayaguez, PR 00681-5000, USA. (b) Dpto. de Computación, Facultad de Ciencias, Universidad Central de Venezuela, Ap. 47002, Caracas 1041-A, Venezuela. (c) Facultad de Ingeniería y Facultad de Ciencias Económicas, Universidad Nacional del Cuyo, Mendoza, Argentina. Received 17 December 1998; accepted 24 August 2000. Submitted by R.A. Brualdi. Abstract We extend the classical Perron–Frobenius theorem to matrices with some negative entries. We study the cone of matrices that has the matrix of 1's (ee^t) as the central ray, and such that any matrix inside the cone possesses a Perron–Frobenius eigenpair. We also find the maximum angle of any matrix with ee^t that guarantees this property. © 2001 Elsevier Science Inc. All rights reserved. Keywords: Perron–Frobenius theorem; Cones of matrices; Optimality conditions. 1. Introduction In 1907, Perron [4] made the fundamental discovery that the dominant eigenvalue of a matrix with all positive entries is positive and also that there exists a corresponding eigenvector with all positive entries. This result was later extended to nonnegative matrices by Frobenius [2] in 1912. In that case, there exists a nonnegative dominant ∗ Corresponding author. E-mail addresses: [email protected] (P. Tarazaga), [email protected] (M.
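To make the idea of a Perron–Frobenius eigenpair for a matrix with some negative entries concrete, here is a minimal sketch using plain power iteration. The matrix `A` is a hypothetical example chosen for illustration (a small symmetric perturbation of the all-ones matrix ee^t); it is not taken from the paper, and power iteration is a generic technique rather than the authors' method.

```python
import math


def power_iteration(a, iterations=200):
    """Approximate the dominant eigenpair of a square matrix (list of rows)
    by repeated multiplication and normalization, starting from the ones vector."""
    n = len(a)
    v = [1.0] * n
    for _ in range(iterations):
        w = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient of the (unit-norm) iterate gives the eigenvalue estimate.
    av = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
    eigenvalue = sum(v[i] * av[i] for i in range(n))
    return eigenvalue, v


# Hypothetical matrix: all ones except two negative off-diagonal entries.
A = [
    [1.0, 1.0, -0.1],
    [1.0, 1.0, 1.0],
    [-0.1, 1.0, 1.0],
]

dominant_value, dominant_vector = power_iteration(A)
```

For this particular `A` the eigenvalues can be worked out by hand as (1.9 ± √8.01)/2 and 1.1, so the dominant eigenvalue is positive and its eigenvector has all positive entries even though `A` itself does not.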

Logarithmic Growth and Frobenius Filtrations for Solutions of P-Adic

Logarithmic growth and Frobenius filtrations for solutions of p-adic differential equations. Bruno Chiarellotto∗, Nobuo Tsuzuki†. Version of October 24, 2006. ∗[email protected] †[email protected] Abstract For a ∇-module M over the ring K[[x]]₀ of bounded functions we define the notion of special and generic log-growth filtrations. Moreover, if M also admits a ϕ-structure, then it is endowed with generic and special Frobenius slope filtrations as well. We will show that in the case of M a ϕ-∇-module of rank 2, the two filtrations coincide. We may then extend such a result also to a module defined on an open set of the projective line. This generalizes previous results of Dwork about the coincidences between the log-growth of the solutions of hypergeometric equations and the Frobenius slopes. Contents: 1 Log-growth of solutions; 2 ϕ-modules over a p-adically complete discrete valuation field; 3 Log-growth filtration over E and E; 4 Generic and special log-growth filtrations; 5 Examples; 6 Generic and special Frobenius slope filtrations; 7 Comparison between log-growth filtration and Frobenius filtration. The rank 2 case. Introduction The meaningful connections in the p-adic setting are those with a good radius of convergence for their solutions or horizontal sections. In general this condition is referred to as the condition of solvability and it is granted, for example, under the hypotheses of having a Frobenius structure. It was noticed a long time ago that the solutions do not have in general the same growth in having their good radius of convergence.


Notes on Cardinal's Matrices (arXiv:1511.08154v1 [math.NT], 25 Nov 2015)

NOTES ON CARDINAL'S MATRICES. JEFFREY C. LAGARIAS AND DAVID MONTAGUE. ABSTRACT. These notes are motivated by the work of Jean-Paul Cardinal on certain symmetric matrices related to the Mertens function. He showed that certain norm bounds on his matrices implied the Riemann hypothesis. Using a different matrix norm we show an equivalence of the Riemann hypothesis to suitable norm bounds on his Mertens matrices in the new norm. We also specify a deformed version of his Mertens matrices that unconditionally satisfy a norm bound of the same strength as this Riemann hypothesis bound. 1. INTRODUCTION In 2010 Jean-Paul Cardinal [3] introduced for each n ≥ 1 two symmetric integer matrices U_n and M_n constructed using a set of "approximate divisors" of n, defined below. Each of these matrices can be obtained from the other. Both matrices are s × s matrices, where s = s(n) is about 2√n. Here s(n) counts the number of distinct values taken by ⌊n/k⌋ when k runs from 1 to n. These values comprise the approximate divisors, and we label them k_1 = 1, k_2, ..., k_s = n in increasing order. We start with defining Cardinal's basic matrix U_n attached to an integer n. The (i, j)-th entry of U_n is ⌊n/(k_i k_j)⌋. One can show that such matrices take value 1 on the antidiagonal, and are 0 at all entries below the antidiagonal; consequently U_n has determinant ±1. (The antidiagonal entries are the (i, j)-entries with i + j = s + 1, numbering rows and columns from 1 to s.) All entries above the antidiagonal are positive.
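The construction described in this excerpt is concrete enough to sketch in a few lines. The following is an illustrative reading of the definitions quoted above, not code from the paper; the function names `approximate_divisors`, `cardinal_basic_matrix`, and `antidiagonal_structure_holds` are hypothetical.

```python
def approximate_divisors(n):
    """Distinct values of floor(n / k) for k = 1..n, in increasing order."""
    return sorted({n // k for k in range(1, n + 1)})


def cardinal_basic_matrix(n):
    """Basic matrix U_n with (i, j) entry floor(n / (k_i * k_j)),
    where k_1 < ... < k_s are the approximate divisors of n."""
    ks = approximate_divisors(n)
    return [[n // (ki * kj) for kj in ks] for ki in ks]


def antidiagonal_structure_holds(n):
    """Check the stated pattern: 1 on the antidiagonal, 0 below it,
    positive above it (indices here are 0-based, so i + j == s - 1)."""
    u = cardinal_basic_matrix(n)
    s = len(u)
    for i in range(s):
        for j in range(s):
            if i + j == s - 1 and u[i][j] != 1:
                return False
            if i + j > s - 1 and u[i][j] != 0:
                return False
            if i + j < s - 1 and u[i][j] <= 0:
                return False
    return True
```

For n = 4 the approximate divisors are 1, 2, 4, and `cardinal_basic_matrix(4)` has rows (4, 2, 1), (2, 1, 0), (1, 0, 0), which shows the antidiagonal of ones and the zeros below it.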

A Absolute Uncertainty Or Error, 414 Absorbing Markov Chains, 700

Index

A: absolute uncertainty or error, 414; absorbing Markov chains, 700; absorbing states, 700; addition, properties of, 82; additive identity, 82; additive inverse, 82; adjacency matrix, 100; adjoint, 84, 479; adjugate, 479; affine functions, 89; affine space, 436; algebraic group, 402; algebraic multiplicity, 496, 510; amplitude, 362; Anderson-Duffin formula, 441; Anderson, Jean, xii; Anderson, W. N., Jr., 441; angle, 295 (canonical, 455; between complementary spaces, 389, 450; maximal, 455; principal, 456; between subspaces, 450); annihilating polynomials, 642; aperiodic Markov chain, 694; Arnoldi's algorithm, 653; Arnoldi, Walter Edwin, 653; associative property (of addition, 82; of matrix multiplication, 105; of scalar multiplication, 83); asymptotic rate of convergence, 621; augmented matrix, 7; Autonne, L., 411

B: back substitution, 6, 9; backward error analysis, 26; backward triangle inequality, 273; band matrix, 157; Bartlett, M.; Bauer–Fike bound, 528; beads on a string, 559; Bellman, Richard, xii; Beltrami, Eugenio, 411; Benzi, Michele, xii; Bernoulli, Daniel, 299; Bessel, Friedrich W., 305; Bessel's inequality, 305; best approximate inverse, 428; biased estimator, 446; binary representation, 372; Birkhoff, Garrett, 625; Birkhoff's theorem, 702; bit reversal, 372; bit reversing permutation matrix, 381; block diagonal, 261–263 (rank of, 137; using real arithmetic, 524); block matrices, 111 (determinant of, 475; linear operators, 392); block matrix multiplication, 111; block triangular, 112, 261–263 (determinants, 467; eigenvalues of, 501); Bolzano–Weierstrass theorem, 670; Boolean matrix, 679; bordered matrix, 485 (eigenvalues of, 552); branch, 73, 204; Brauer, Alfred, 497; Bunyakovskii, Victor, 271

C: cancellation law, 97; canonical angles, 455; canonical form, reducible matrix, 695; Cantor, Georg, 597; Cauchy, Augustin-Louis, 271 (determinant formula, 484; integral formula, 611); Cauchy–Bunyakovskii–Schwarz inequality, 272; Cauchy–Goursat theorem, 615