Solutions of the Inverse Frobenius-Perron Problem

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

Periodic Staircase Matrices and Generalized Cluster Structures

PERIODIC STAIRCASE MATRICES AND GENERALIZED CLUSTER STRUCTURES

MISHA GEKHTMAN, MICHAEL SHAPIRO, AND ALEK VAINSHTEIN

Abstract. As is well-known, cluster transformations in cluster structures of geometric type are often modeled on determinant identities, such as short Plücker relations, Desnanot–Jacobi identities and their generalizations. We present a construction that plays a similar role in a description of generalized cluster transformations and discuss its applications to generalized cluster structures in $GL_n$ compatible with a certain subclass of Belavin–Drinfeld Poisson–Lie brackets, in the Drinfeld double of $GL_n$, and in spaces of periodic difference operators.

1. Introduction

Since the discovery of cluster algebras in [4], many important algebraic varieties were shown to support a cluster structure in the sense that the coordinate ring of such a variety is isomorphic to a cluster algebra or an upper cluster algebra. Lie theory and representation theory turned out to be a particularly rich source of varieties of this sort, including but in no way limited to such examples as Grassmannians [5, 18], double Bruhat cells [1] and strata in flag varieties [15]. In all these examples, cluster transformations that connect distinguished coordinate charts within a ring of regular functions are modeled on three-term relations such as short Plücker relations, Desnanot–Jacobi identities and their Lie-theoretic generalizations of the kind considered in [3]. This remains true even in the case of exotic cluster structures on $GL_n$ considered in [8, 10], where cluster transformations can be obtained by applying Desnanot–Jacobi type identities to certain structured matrices of a size far exceeding $n$. -

New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-Finding

City University of New York (CUNY), CUNY Academic Works, Computer Science Technical Reports, 2014

TR-2014006: New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-Finding

Victor Y. Pan, Ai-Long Zheng

How does access to this work benefit you? Let us know! More information about this work at: https://academicworks.cuny.edu/gc_cs_tr/397 Discover additional works at: https://academicworks.cuny.edu This work is made publicly available by the City University of New York (CUNY). Contact: [email protected]

New Algorithms in the Frobenius Matrix Algebra for Polynomial Root-finding ∗

Victor Y. Pan [1,2],[a] and Ai-Long Zheng [2],[b]

Supported by NSF Grant CCF-1116736 and PSC CUNY Award 64512–0042

[1] Department of Mathematics and Computer Science, Lehman College of the City University of New York, Bronx, NY 10468 USA
[2] Ph.D. Programs in Mathematics and Computer Science, The Graduate Center of the City University of New York, New York, NY 10036 USA
[a] [email protected] http://comet.lehman.cuny.edu/vpan/
[b] [email protected]

Abstract. In 1996 Cardinal applied fast algorithms in Frobenius matrix algebra to complex root-finding for univariate polynomials, but he resorted to some numerically unsafe techniques of symbolic manipulation with polynomials at the final stages of his algorithms. We extend his work to complete the computations by operating with matrices at the final stage as well and also to adjust them to real polynomial root-finding. Our analysis and experiments show efficiency of the resulting algorithms. 2000 Math. -

arXiv:math/0010243v1

FOUR SHORT STORIES ABOUT TOEPLITZ MATRIX CALCULATIONS

THOMAS STROHMER∗

Abstract. The stories told in this paper are dealing with the solution of finite, infinite, and biinfinite Toeplitz-type systems. A crucial role plays the off-diagonal decay behavior of Toeplitz matrices and their inverses. Classical results of Gelfand et al. on commutative Banach algebras yield a general characterization of this decay behavior. We then derive estimates for the approximate solution of (bi)infinite Toeplitz systems by the finite section method, showing that the approximation rate depends only on the decay of the entries of the Toeplitz matrix and its condition number. Furthermore, we give error estimates for the solution of doubly infinite convolution systems by finite circulant systems. Finally, some quantitative results on the construction of preconditioners via circulant embedding are derived, which allow to provide a theoretical explanation for numerical observations made by some researchers in connection with deconvolution problems.

Key words. Toeplitz matrix, Laurent operator, decay of inverse matrix, preconditioner, circulant matrix, finite section method.

AMS subject classifications. 65T10, 42A10, 65D10, 65F10

0. Introduction. Toeplitz-type equations arise in many applications in mathematics, signal processing, communications engineering, and statistics. The excellent surveys [4, 17] describe a number of applications and contain a vast list of references. The stories told in this paper are dealing with the (approximate) solution of biinfinite, infinite, and finite hermitian positive definite Toeplitz-type systems. We pay special attention to Toeplitz-type systems with certain decay properties in the sense that the entries of the matrix enjoy a certain decay rate off the diagonal. -

Three Steps on an Open Road

Inverse Problems and Imaging, doi:10.3934/ipi.2013.7.961, Volume 7, No. 3, 2013, 961–966

THREE STEPS ON AN OPEN ROAD

Gilbert Strang, Massachusetts Institute of Technology, Cambridge, MA 02139, USA

Abstract. This note describes three recent factorizations of banded invertible infinite matrices:

1. If $A$ has a banded inverse: $A = BC$ with block-diagonal factors $B$ and $C$.
2. Permutations factor into a shift times $N < 2w$ tridiagonal permutations.
3. $A = LPU$ with lower triangular $L$, permutation $P$, upper triangular $U$.

We include examples and references and outlines of proofs.

This note describes three small steps in the factorization of banded matrices. It is written to encourage others to go further in this direction (and related directions). At some point the problems will become deeper and more difficult, especially for doubly infinite matrices. Our main point is that matrices need not be Toeplitz or block Toeplitz for progress to be possible. An important theory is already established [2, 9, 10, 13-16] for non-Toeplitz "band-dominated operators". The Fredholm index plays a key role, and the second small step below (taken jointly with Marko Lindner) computes that index in the special case of permutation matrices.

Recall that banded Toeplitz matrices lead to Laurent polynomials. If the scalars or matrices $a_{-w}, \ldots, a_0, \ldots, a_w$ lie along the diagonals, the polynomial is $A(z) = \sum_k a_k z^k$ and the bandwidth is $w$. The required index is in this case a winding number of $\det A(z)$. Factorization into $A_+(z)A_-(z)$ is a classical problem solved by Plemelj [12] and Gohberg [6-7]. -
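The winding number mentioned in the snippet above can be illustrated numerically for the scalar case. The following is a minimal sketch, not taken from the paper: the helper names `laurent_eval` and `winding_number`, the dictionary representation of the coefficients $a_k$, and the sample count are all assumptions made for illustration. It accumulates the phase change of $A(z)$ as $z$ travels once around the unit circle, and it assumes $A(z)$ has no zero on the circle.

```python
import cmath
import math

def laurent_eval(coeffs, z):
    """Evaluate A(z) = sum_k a_k z^k, with coeffs given as {k: a_k} (hypothetical layout)."""
    return sum(a * z ** k for k, a in coeffs.items())

def winding_number(coeffs, samples=4096):
    """Count how often A(z) winds around 0 as z traverses the unit circle once.

    Assumes A(z) is nonzero on the circle; sums small phase increments
    between consecutive sample points and divides by 2*pi.
    """
    total = 0.0
    prev = laurent_eval(coeffs, 1 + 0j)
    for m in range(1, samples + 1):
        z = cmath.exp(2j * math.pi * m / samples)
        cur = laurent_eval(coeffs, z)
        total += cmath.phase(cur / prev)  # phase increment, lies in (-pi, pi]
        prev = cur
    return round(total / (2 * math.pi))

# Illustrative symbols (not from the paper):
w_inside = winding_number({0: -0.5, 1: 1.0})    # A(z) = z - 0.5, zero inside the circle
w_outside = winding_number({0: 2.0, 1: 1.0})    # A(z) = z + 2, zero outside the circle
w_negative = winding_number({-1: 1.0, 0: -0.5}) # A(z) = 1/z - 0.5
print(w_inside, w_outside, w_negative)
```

For matrix-valued coefficients the same loop would be applied to $\det A(z)$; the sign convention relating this winding number to the Fredholm index is not stated in the snippet and is left open here.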


arXiv:2004.05118v1 [math.RT]

GENERALIZED CLUSTER STRUCTURES RELATED TO THE DRINFELD DOUBLE OF $GL_n$

MISHA GEKHTMAN, MICHAEL SHAPIRO, AND ALEK VAINSHTEIN

Abstract. We prove that the regular generalized cluster structure on the Drinfeld double of $GL_n$ constructed in [9] is complete and compatible with the standard Poisson–Lie structure on the double. Moreover, we show that for $n = 4$ this structure is distinct from a previously known regular generalized cluster structure on the Drinfeld double, even though they have the same compatible Poisson structure and the same collection of frozen variables. Further, we prove that the regular generalized cluster structure on band periodic matrices constructed in [9] possesses similar compatibility and completeness properties.

1. Introduction

In [6, 8] we constructed an initial seed $\Sigma_n$ for a complete generalized cluster structure $\mathcal{GC}_n^D$ in the ring of regular functions on the Drinfeld double $D(GL_n)$ and proved that this structure is compatible with the standard Poisson–Lie structure on $D(GL_n)$. In [9, Section 4] we constructed a different seed $\bar{\Sigma}_n$ for a regular generalized cluster structure $\overline{\mathcal{GC}}_n^D$ on $D(GL_n)$. In this note we prove that $\overline{\mathcal{GC}}_n^D$ shares all the properties of $\mathcal{GC}_n^D$: it is complete and compatible with the standard Poisson–Lie structure on $D(GL_n)$. Moreover, we prove that the seeds $\bar{\Sigma}_4(X, Y)$ and $\Sigma_4(Y^T, X^T)$ are not mutationally equivalent. In this way we provide an explicit example of two different regular complete generalized cluster structures on the same variety with the same compatible Poisson structure and the same collection of frozen variables. -

The Spectra of Large Toeplitz Band Matrices with a Randomly Perturbed Entry

MATHEMATICS OF COMPUTATION, Volume 72, Number 243, Pages 1329–1348, S 0025-5718(03)01505-9. Article electronically published on February 3, 2003

THE SPECTRA OF LARGE TOEPLITZ BAND MATRICES WITH A RANDOMLY PERTURBED ENTRY

A. BÖTTCHER, M. EMBREE, AND V. I. SOKOLOV

Abstract. This paper is concerned with the union $\mathrm{sp}_\Omega^{(j,k)} T_n(a)$ of all possible spectra that may emerge when perturbing a large $n \times n$ Toeplitz band matrix $T_n(a)$ in the $(j,k)$ site by a number randomly chosen from some set $\Omega$. The main results give descriptive bounds and, in several interesting situations, even provide complete identifications of the limit of $\mathrm{sp}_\Omega^{(j,k)} T_n(a)$ as $n \to \infty$. Also discussed are the cases of small and large sets $\Omega$ as well as the "discontinuity of the infinite volume case", which means that in general $\mathrm{sp}_\Omega^{(j,k)} T_n(a)$ does not converge to something close to $\mathrm{sp}_\Omega^{(j,k)} T(a)$ as $n \to \infty$, where $T(a)$ is the corresponding infinite Toeplitz matrix. Illustrations are provided for tridiagonal Toeplitz matrices, a notable special case.

1. Introduction and main results

For a complex-valued continuous function $a$ on the complex unit circle $\mathbb{T}$, the infinite Toeplitz matrix $T(a)$ and the finite Toeplitz matrices $T_n(a)$ are defined by

$$T(a) = (a_{j-k})_{j,k=1}^{\infty} \quad\text{and}\quad T_n(a) = (a_{j-k})_{j,k=1}^{n},$$

where $a_\ell$ is the $\ell$th Fourier coefficient of $a$,

$$a_\ell = \frac{1}{2\pi} \int_0^{2\pi} a(e^{i\theta})\, e^{-i\ell\theta}\, d\theta, \qquad \ell \in \mathbb{Z}.$$

Here, we restrict our attention to the case where $a$ is a trigonometric polynomial, $a \in \mathcal{P}$, implying that at most a finite number of the Fourier coefficients are nonzero; equivalently, $T(a)$ is a banded matrix. -
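The definition $T_n(a) = (a_{j-k})_{j,k=1}^{n}$ in the snippet above can be made concrete with a small sketch. The function names `toeplitz_band` and `perturb_entry`, the dictionary layout for the Fourier coefficients, and the sample symbol are assumptions chosen for illustration; they are not taken from the paper.

```python
def toeplitz_band(coeffs, n):
    """Build T_n(a) = (a_{j-k}) for j, k = 1..n as a list of rows.

    coeffs maps an integer l to the Fourier coefficient a_l; indices
    missing from the dict are treated as zero (trigonometric polynomial).
    """
    return [[coeffs.get(j - k, 0) for k in range(n)] for j in range(n)]

def perturb_entry(matrix, j, k, omega):
    """Return a copy of the matrix with omega added at the (j, k) site (1-based)."""
    out = [row[:] for row in matrix]
    out[j - 1][k - 1] += omega
    return out

# Hypothetical tridiagonal symbol: a_{-1} = 2, a_0 = 3, a_1 = 5.
symbol = {-1: 2, 0: 3, 1: 5}
T4 = toeplitz_band(symbol, 4)
T4_perturbed = perturb_entry(T4, 1, 4, 7)  # perturb the upper-right corner
for row in T4:
    print(row)
```

Each diagonal of `T4` is constant, and only the three diagonals named in `symbol` are nonzero, which is the banded structure the snippet describes. The spectra of the perturbed matrices, which are the paper's actual subject, are not computed here.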

Subspace-Preserving Sparsification of Matrices with Minimal Perturbation

Subspace-preserving sparsification of matrices with minimal perturbation to the near null-space. Part I: Basics

Chetan Jhurani, Tech-X Corporation, 5621 Arapahoe Ave, Boulder, Colorado 80303, U.S.A.

arXiv:1304.7049v1 [math.NA] 26 Apr 2013

Abstract. This is the first of two papers to describe a matrix sparsification algorithm that takes a general real or complex matrix as input and produces a sparse output matrix of the same size. The non-zero entries in the output are chosen to minimize changes to the singular values and singular vectors corresponding to the near null-space of the input. The output matrix is constrained to preserve left and right null-spaces exactly. The sparsity pattern of the output matrix is automatically determined or can be given as input. If the input matrix belongs to a common matrix subspace, we prove that the computed sparse matrix belongs to the same subspace. This works without imposing explicit constraints pertaining to the subspace. This property holds for the subspaces of Hermitian, complex-symmetric, Hamiltonian, circulant, centrosymmetric, and persymmetric matrices, and for each of the skew counterparts. Applications of our method include computation of reusable sparse preconditioning matrices for reliable and efficient solution of high-order finite element systems. The second paper in this series [1] describes our open-source implementation, and presents further technical details.

Keywords: Sparsification, Spectral equivalence, Matrix structure, Convex optimization, Moore-Penrose pseudoinverse

Email address: [email protected] (Chetan Jhurani)

Preprint submitted to Computers and Mathematics with Applications, October 10, 2018

1. Introduction

We present and analyze a matrix-valued optimization problem formulated to sparsify matrices while preserving the matrix null-spaces and certain special structural properties. -

Conversion of Sparse Matrix to Band Matrix Using an FPGA for High-Performance Computing

CONVERSION OF SPARSE MATRIX TO BAND MATRIX USING AN FPGA FOR HIGH-PERFORMANCE COMPUTING by Anjani Chaudhary, M.S. A thesis submitted to the Graduate Council of Texas State University in partial fulfillment of the requirements for the degree of Master of Science with a Major in Engineering December 2020 Committee Members: Semih Aslan, Chair Shuying Sun William A Stapleton COPYRIGHT by Anjani Chaudhary 2020 FAIR USE AND AUTHOR’S PERMISSION STATEMENT Fair Use This work is protected by the Copyright Laws of the United States (Public Law 94-553, section 107). Consistent with fair use as defined in the Copyright Laws, brief quotations from this material are allowed with proper acknowledgement. Use of this material for financial gain without the author’s express written permission is not allowed. Duplication Permission As the copyright holder of this work, I, Anjani Chaudhary, authorize duplication of this work, in whole or in part, for educational or scholarly purposes only. ACKNOWLEDGEMENTS Throughout my research and report writing process, I am grateful to receive support and suggestions from the following people. I would like to thank my supervisor and chair, Dr. Semih Aslan, Associate Professor, Ingram School of Engineering, for his time, support, encouragement, and patience, without which I would not have made it. My committee members Dr. Bill Stapleton, Associate Professor, Ingram School of Engineering, and Dr. Shuying Sun, Associate Professor, Department of Mathematics, for their essential guidance throughout the research and my graduate advisor, Dr. Vishu Viswanathan, for providing the opportunity and valuable suggestions which helped me to come one more step closer to my goal. -

Copyrighted Material

INDEX

Linear Algebra: Ideas and Applications, Fourth Edition. Richard C. Penney. © 2016 John Wiley & Sons, Inc. Published 2016 by John Wiley & Sons, Inc. Companion website: www.wiley.com/go/penney/linearalgebra

Additive property of determinants, 244 Cofactor expansion, 240 Argument of a complex number, 297 Column space, 76 Column vector, 2 Band matrix, 407 Complementary vector, 319 Band width, 407 Complex eigenvalue, 302 Basis Complex eigenvector, 302 chain, 438 Complex linear transformation, 304 coordinate matrix for a basis, 217 Complex matrices, 301 coordinate transformation, 223 Complex number definition, 104 argument, 297 normalization, 315 conjugate, 302 ordered, 216 Complex vector space, 303 orthonormal, 315 Conjugate of a complex number, 302 point transformation, 223 Consumption matrix, 198 standard Coordinate matrix, 217 M(m,n), 119 Coordinates n, 119 coordinate vector, 216 Rn, 118 coordinate matrix for a basis, 217 coordinate vector, 216 Cn, 302 point matrix, 217 Chain basis, 438 point transformation, 223 Characteristic polynomial, 275 Cramer’s rule, 265 Coefficient matrix, 75 Current, 41

Data compression, 349 Haar function, 346 Determinant Hermitian additive property, 244 adjoint, 412 cofactor expansion, 240 symmetric, 414 Cramer’s rule, 265 Hermitian, orthogonal, 413 inverse matrix, 267 Homogeneous system, 80 Laplace -

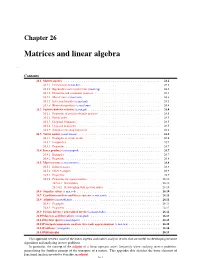

Matrices and Linear Algebra

Chapter 26: Matrices and linear algebra

Contents

26.1 Matrix algebra
  26.1.1 Determinant
  26.1.2 Eigenvalues and eigenvectors
  26.1.3 Hermitian and symmetric matrices
  26.1.4 Matrix trace
  26.1.5 Inversion formulas
  26.1.6 Kronecker products
26.2 Positive-definite matrices
  26.2.1 Properties of positive-definite matrices
  26.2.2 Partial order
  26.2.3 Diagonal dominance
  26.2.4 Diagonal majorizers
  26.2.5 Simultaneous diagonalization
26.3 Vector norms
  26.3.1 Examples of vector norms
  26.3.2 Inequalities
  26.3.3 Properties
26.4 Inner products
  26.4.1 Examples
  26.4.2 Properties
26.5 Matrix norms
  26.5.1 -

A Logarithmic Minimization Property of the Unitary Polar Factor in the Spectral Norm and the Frobenius Matrix Norm

A logarithmic minimization property of the unitary polar factor in the spectral norm and the Frobenius matrix norm

Patrizio Neff∗, Yuji Nakatsukasa†, Andreas Fischle‡

October 30, 2018

arXiv:1302.3235v4 [math.CA] 2 Jan 2014

Abstract. The unitary polar factor $Q = U_p$ in the polar decomposition of $Z = U_p H$ is the minimizer over unitary matrices $Q$ for both $\|\mathrm{Log}(Q^* Z)\|^2$ and its Hermitian part $\|\mathrm{sym}_*(\mathrm{Log}(Q^* Z))\|^2$ over both $\mathbb{R}$ and $\mathbb{C}$ for any given invertible matrix $Z \in \mathbb{C}^{n \times n}$ and any matrix logarithm Log, not necessarily the principal logarithm log. We prove this for the spectral matrix norm for any $n$ and for the Frobenius matrix norm in two and three dimensions. The result shows that the unitary polar factor is the nearest orthogonal matrix to $Z$ not only in the normwise sense, but also in a geodesic distance. The derivation is based on Bhatia’s generalization of Bernstein’s trace inequality for the matrix exponential and a new sum of squared logarithms inequality. Our result generalizes the fact for scalars that for any complex logarithm and for all $z \in \mathbb{C} \setminus \{0\}$

$$\min_{\vartheta \in (-\pi, \pi]} |\mathrm{Log}_{\mathbb{C}}(e^{-i\vartheta} z)|^2 = |\log |z||^2, \qquad \min_{\vartheta \in (-\pi, \pi]} |\mathrm{Re}\, \mathrm{Log}_{\mathbb{C}}(e^{-i\vartheta} z)|^2 = |\log |z||^2.$$

Keywords: unitary polar factor, matrix logarithm, matrix exponential, Hermitian part, minimization, unitarily invariant norm, polar decomposition, sum of squared logarithms inequality, optimality, matrix Lie-group, geodesic distance

AMS 2010 subject classification: 15A16, 15A18, 15A24, 15A44, 15A45, 26Dxx

∗Corresponding author, Head of Lehrstuhl für Nichtlineare Analysis und Modellierung, Fakultät für Mathematik, Universität Duisburg-Essen, Campus Essen, Thea-Leymann Str. -
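The scalar identity quoted at the end of the abstract above can be checked numerically. The sketch below is an illustration only: it uses the principal logarithm from Python's `cmath` (the abstract allows any branch), a plain grid search over $\vartheta$, and a hypothetical sample point `z`; the function name `min_log_sq` is likewise an assumption.

```python
import cmath
import math

def min_log_sq(z, steps=20000):
    """Grid-search the minimum over theta in (-pi, pi] of |log(e^{-i theta} z)|^2.

    Uses the principal logarithm; the grid resolution limits the accuracy.
    """
    best = float("inf")
    for m in range(1, steps + 1):
        theta = -math.pi + 2 * math.pi * m / steps
        w = cmath.exp(-1j * theta) * z
        best = min(best, abs(cmath.log(w)) ** 2)
    return best

z = 2.0 * cmath.exp(0.7j)          # hypothetical sample point, |z| = 2
approx = min_log_sq(z)
exact = math.log(abs(z)) ** 2      # right-hand side |log|z||^2
print(approx, exact)
```

Since $\log(e^{-i\vartheta} z) = \log|z| + i \arg(e^{-i\vartheta} z)$ for the principal branch, the squared modulus is $(\log|z|)^2$ plus a squared angle, so the minimum is reached where the rotation cancels the argument of $z$. This is the scalar analogue of choosing the unitary polar factor.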

NAG Library Chapter Contents F16 – NAG Interface to BLAS F16 Chapter Introduction

NAG Library Chapter Contents

f16 – NAG Interface to BLAS

f16 Chapter Introduction

| Function Name | Mark of Introduction | Purpose |
|---|---|---|
| f16dbc | 7 | nag_iload: Broadcast scalar into integer vector |
| f16dlc | 9 | nag_isum: Sum elements of integer vector |
| f16dnc | 9 | nag_imax_val: Maximum value and location, integer vector |
| f16dpc | 9 | nag_imin_val: Minimum value and location, integer vector |
| f16dqc | 9 | nag_iamax_val: Maximum absolute value and location, integer vector |
| f16drc | 9 | nag_iamin_val: Minimum absolute value and location, integer vector |
| f16eac | 24 | nag_ddot: Dot product of two vectors, allows scaling and accumulation |
| f16ecc | 7 | nag_daxpby: Real weighted vector addition |
| f16ehc | 9 | nag_dwaxpby: Real weighted vector addition preserving input |
| f16elc | 9 | nag_dsum: Sum elements of real vector |
| f16fbc | 7 | nag_dload: Broadcast scalar into real vector |
| f16gcc | 24 | nag_zaxpby: Complex weighted vector addition |
| f16ghc | 9 | nag_zwaxpby: Complex weighted vector addition preserving input |
| f16glc | 9 | nag_zsum: Sum elements of complex vector |
| f16hbc | 7 | nag_zload: Broadcast scalar into complex vector |
| f16jnc | 9 | nag_dmax_val: Maximum value and location, real vector |
| f16jpc | 9 | nag_dmin_val: Minimum value and location, real vector |
| f16jqc | 9 | nag_damax_val: Maximum absolute value and location, real vector |
| f16jrc | 9 | nag_damin_val: Minimum absolute value and location, real vector |
| f16jsc | 9 | nag_zamax_val: Maximum absolute value and location, complex vector |
| f16jtc | 9 | nag_zamin_val: Minimum absolute value and location, complex vector |
| f16pac | 8 | nag_dgemv: Matrix-vector product, real rectangular matrix |