Volume 39, Issue 3

Recommended publications

-

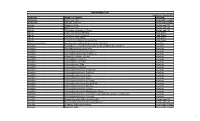

Number of Libraries 1 Akaki Tsereteli State University 2 Batumi

№ Number of libraries 1 Akaki Tsereteli State University 2 Batumi Navigation Teaching University 3 Batumi Shota Rustaveli State University 4 Batumi State Maritime Academy 5 Business and Technology University 6 Caucasus International University 7 Caucasus University 8 College Iberia 9 David Agmashenebeli University of Georgia 10 David Tvildiani Medical University 11 East European University 12 European University 13 Free Academy of Tbilisi 14 Georgian American University (GAU) 15 Georgian Aviation University 16 Georgian Patriarchate Saint Tbel Abuserisdze Teaching University 17 Georgian State Teaching University of Physical Education and Sport 18 Georgian Technical University 19 Gori State Teaching University 20 Guram Tavartkiladze Tbilisi Teaching University 21 Iakob Gogebashvili Telavi State University 22 Ilia State University 23 International Black Sea University 24 Korneli Kekelidze Georgian National Centre of Manuscripts 25 Kutaisi Ilia Tchavtchavadze Public Library 26 LEPL - Vocational College "Black Sea" 27 LEPL Vocational College Lakada 28 LTD East-West Teaching University 29 LTD Kutaisi University 30 LTD School IB Mtiebi 31 LTD Tbilisi Free School 32 National Archives of Georgia 33 National University of Georgia (SEU) 34 New Higher Education Institute 35 New Vision University (NVU) 36 Patriarchate of Georgia Saint King Tamar University 37 Petre Shotadze Tbilisi Medical Academy 38 Public College MERMISI 39 Robert Shuman European School 40 Samtskhe-Javakheti State Teaching University 41 Shota Meskhia Zugdidi State Teaching University 42 Shota Rustaveli theatre and Film Georgia State University 43 St. Andrews Patriarchate Georgian University 44 Sulkhan-Saba Orbeliani University 45 Tbilisi Humanitarian Teaching University 46 Tbilisi Open Teaching University 47 Tbilisi State Academy of Arts 48 Tbilisi State Medical University (TSMU) 49 TSU National Scientific Library. -

Curriculum Vitae Tamta Khalvashi, Phd Address: Vaja-Pshavela Ave

Curriculum Vitae Tamta Khalvashi, PhD Address: Vaja-Pshavela Ave. Bl. 5. H.3, Flat 57 0186 Tbilisi, Georgia Phone: +99599 351618 Email: [email protected] [email protected] Last Update: 30/07/2016 Education • Doctor of Philosophy: Department of Anthropology, University of Copenhagen (2011 – September 2015) • Visiting Research Fellow: Institute of Social and Cultural Anthropology, University of Oxford (2009-2010) • Visiting Study Fellow: International Gender Studies Centre, Queen Elizabeth House, University of Oxford (January-March, 2008) • MA in Conflict Management, Ivane Javakhishvili Tbilisi State University (2004 – 2006) • BA in Social Psychology (With Honors), Ivane Javakhishvili Tbilisi State University (2000 – 2004) Positions • Fulbright Postdoctoral Scholar: New York University, Department of Anthropology (August 2016 – present) • Assistant Professor: School of Governance and Social Sciences, Free University of Tbilisi (September 2015 – present) • Academic Coordinator: School of Governance and Social Sciences, Free University of Tbilisi (October 2010 – March 2011) Duties: Designing the curriculum for the School, interviewing lecturers, organizing public lectures, and coordinating meetings with academic committee members. • Research Assistant: ESRC-funded project on social and human rights impact assessment and the governance of technology, University of Oxford (September 2010 – October 2010) Duties: Carrying out media research in Georgian archives on the politics of the construction of the Baku-Tbilisi-Ceyhan oil pipeline. -

Unai Members List August 2021

UNAI MEMBER LIST Updated 27 August 2021 COUNTRY NAME OF SCHOOL REGION Afghanistan Kateb University Asia and the Pacific Afghanistan Spinghar University Asia and the Pacific Albania Academy of Arts Europe and CIS Albania Epoka University Europe and CIS Albania Polytechnic University of Tirana Europe and CIS Algeria Centre Universitaire d'El Tarf Arab States Algeria Université 8 Mai 1945 Guelma Arab States Algeria Université Ferhat Abbas Arab States Algeria University of Mohamed Boudiaf M’Sila Arab States Antigua and Barbuda American University of Antigua College of Medicine Americas Argentina Facultad de Ciencias Económicas de la Universidad de Buenos Aires Americas Argentina Facultad Regional Buenos Aires Americas Argentina Universidad Abierta Interamericana Americas Argentina Universidad Argentina de la Empresa Americas Argentina Universidad Católica de Salta Americas Argentina Universidad de Congreso Americas Argentina Universidad de La Punta Americas Argentina Universidad del CEMA Americas Argentina Universidad del Salvador Americas Argentina Universidad Nacional de Avellaneda Americas Argentina Universidad Nacional de Cordoba Americas Argentina Universidad Nacional de Cuyo Americas Argentina Universidad Nacional de Jujuy Americas Argentina Universidad Nacional de la Pampa Americas Argentina Universidad Nacional de Mar del Plata Americas Argentina Universidad Nacional de Quilmes Americas Argentina Universidad Nacional de Rosario Americas Argentina Universidad Nacional de Santiago del Estero Americas Argentina Universidad Nacional de -

Jorbenadze Revaz

CURRICULUM VITAE Last name: Jorbenadze First name: Revaz Address: Ingorokva st. # 19 a, room 33, Tbilisi, Georgia Telephone: 2 905620 (home); + 995 599 906154 Email: [email protected]. [email protected] Education 2014 – Training course: Working with adversity survivors (Certificate); 2008 – Seminar in Mediation Techniques; 2003 – 2007 – Seminar in Conflict Management and Techniques for Mediation, Denver University; 2003 – Seminar in Academic Writing; 2002 – Seminar: Protecting of Civil Interests (Certificate); 2002 – Seminar: Fundraising of Non-governmental organizations (Certificate); 2001 – Seminar: Use of Qualitative Methods in Social Sciences; 2000-2001 – Raising the level of skill at Political Science Institute of Poland; 1998 – Seminar: Conflict Management and Mediation (Certificate); 1997 – Seminar: Conflict Management and Negotiation (Certificate); 1995 – Seminar: Experiential Learning Techniques (Certificate); 1995 – Seminar: Alternative Conflict Resolution (Certificate); 1995 – Seminar in Conflict Management and Facilitation (Certificate); 1994 – PhD thesis in Social Psychology (Candidate Dissertation); 1992-1994 – Seminar: Jungian Psychotherapy; 1990 – Seminar: Managing Changes in Changing World; 1990 – Seminar in Psychosynthesis; 1990 – Seminar in Family Psychotherapy (Certificate); 1981 – Tbilisi -

International Students' Scientific Conference

International Students’ Scientific Conference Prospects for European Integration of the Southern Caucasus Tbilisi, October 25-26, 2014 ISSN – 1987 – 5703 UDC 330/34(479) (063) Tbilisi, 2014 ს-279 D-49 The collection contains the best scientific works presented at the sixth International Students’ Scientific Conference, held under the motto “Prospects for European Integration of the Southern Caucasus”. Editing Board: Prof. Shalva Machavariani (head), Prof. Guram Lezhava, Prof. Teimuraz Khutsishvili, Prof. Sergi Kapanadze, Prof. Indrek Jakobson, Prof. Giorgi Gaganidze, Prof. Tanel Kerikmae, Tatia Gherkenashvili (secretary). Published by Caucasus University and Friedrich-Ebert-Stiftung, in collaboration with CSG. The project was implemented with the support of the Friedrich Ebert Foundation and the Children and Youth Development Fund. The publication presents the personal opinions of the authors. Materials published by the Friedrich Ebert Foundation may not be -

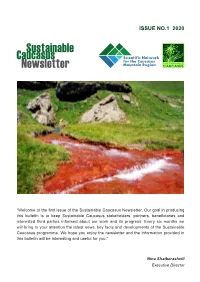

Issue No.1 2020

ISSUE NO.1 2020 “Welcome to the first issue of the Sustainable Caucasus Newsletter. Our goal in producing this bulletin is to keep Sustainable Caucasus stakeholders, partners, beneficiaries and interested third parties informed about our work and its progress. Every six months we will bring to your attention the latest news, key facts and developments of the Sustainable Caucasus programme. We hope you enjoy the newsletter and the information provided in this bulletin will be interesting and useful for you.” Nina Shatberashvili Executive Director BACKGROUND The Caucasus Network for Sustainable Development of Mountain Regions seeks to promote sustainable mountain development by supporting regional cooperation, bringing together key stakeholders and implementing innovative solutions on the ground. To achieve its objectives, Sustainable Caucasus looks to utilise a variety of activities targeted at sustainable development in the Caucasus mountain region including: capacity building, transforming and disseminating knowledge; developing, analysing and monitoring relevant policies; supporting training and education; raising awareness; enhancing stakeholder co-operation and experience sharing; promoting and introducing best practices; and implementing other actions as appropriate. Sustainable Caucasus is the co-ordination unit of the Scientific Network for the Caucasus Mountain Region (SNC-mt). Since its foundation, Sustainable Caucasus has defined the following key priorities in its work to enhance co-operation and partnership for sustainable development of the Caucasus region: ■ Strengthening the science-policy interface ■ Knowledge generation ■ Research for development ■ Best practices and policy advocacy ■ Higher education ■ Networking and membership STRENGTHENING THE SCIENCE-POLICY INTERFACE CAUCASUS MOUNTAIN FORUM The Caucasus Mountain Forum (CMF) is one of SNC-mt’s flagship initiatives. … by Ankara University, was organised within -

Lia Matchavariani

Curriculum Vitae LIA MATCHAVARIANI Date of birth: 6 February, 1960 Mob.: (+995) 599 234494; Office: (+995 32) 2293649 [email protected] WORK EXPERIENCE Dates & Name of employer 2012-present – Iv. Javakhishvili Tbilisi State University (TSU) Occupation, position held Professor – Faculty of Exact & Natural Sciences, Geography Department Dates & Name of employer 2009-2012, 2006-2009 – Iv. Javakhishvili Tbilisi State University (TSU) Occupation, position held Assoc. Professor – Faculty of Exact & Natural Sciences, Geography Department Dates & Name of employer 2005-2011 – Iv. Javakhishvili Tbilisi State University (TSU) Occupation, position held Senior Specialist at the Office of Academic Process Management & Scientific Researches – Faculty of Exact & Natural Sciences Dates & Name of employer 2003-2005 – Iv. Javakhishvili Tbilisi State University (TSU) Occupation, position held Vice-Dean – Faculty of Geography Dates & Name of employer 1998-2006 – Iv. Javakhishvili Tbilisi State University (TSU) Occupation, position held Docent, Lecturer – Chair: Geography of Georgia; Geography-Geology Faculty Dates & Name of employer 1992-1998, 1990-1992, 1982-1990 – Tbilisi State University (TSU) Occupation, position held Docent, Teacher, Laboratorian – Chair: Hydrology, Soil Science; Geography-Geology Faculty EDUCATION Dates & Name of organization 2006, Iv. Javakhishvili Tbilisi State University Title of qualification awarded Scientific Degree: Doctor of Sciences in Geography (Geo-ecology) Dates & Name of organization 1989, GSAU, Tbilisi Title of -

Engaging Students to Be Active Citizens and Involved in The

ERASMUS+ Key Action 2 Capacity Building Program for Higher Institutions of Education 2016 Selected Project: Curriculum Reform for Promoting Civic Education and Democratic Principles in Israel and in Georgia (CURE) Project number: 573322-EPP-1-2016-IL-EPPKA2-CBHE Engaging Students to be active citizens in their community: CURE’s Centers for Social and Civic Involvement by: Rhonda Sofer, Coordinator of CURE; Tomer Ben Hamou, Coordinator of Student Activities in Israel; Tamar Moshiashvili, Coordinator of Student Activities in Georgia Contributors: Alan Bainbridge, Canterbury Christ Church University; Evanne Ratner, Gordon Academic College of Education Disclaimer: "This project has been funded with support from the European Commission. This publication reflects the views only of the authors, and the commission cannot be held responsible for any use which may be made of the information contained therein" Table of contents: Acknowledgements; Introduction, by Rhonda Sofer; Chapter 1: An Overview of the Impact of the Centers of Social and Civic Involvement and its student activities, by Alan Bainbridge; Chapter 2: Community Placemaking, by Tomer Ben Hamou; Chapter 3: World Cafe, by Tomer Ben Hamou; Chapter 4: Dialogue through Discussions, Debates, the Media and Field Trips, by Evanne Ratner; Chapter 5: CURE’s Student Activities in Georgia: School-based Community Action, by Tamar Moshiashvili; Conclusion: Some Reflections on CURE’s Centers of Social and Civic Involvements and Student Activities in Israel and in Georgia, by Dr. Rhonda Sofer, Coordinator of CURE; Recommended Literature; Appendix 1: About the CURE project. Acknowledgements I have been the coordinator of the ERASMUS+ program Curriculum Reform for Promoting Democracy and Civic Education in Israel and Georgia (acronym CURE) officially from October 15, 2016 (website: https://cure.erasmus-plus.org.il/). -

Specific Support to Georgia: Horizon 2020 Policy Support Facility Mission (1) Mission Dates: December 4-7, 2017 Agenda Monday, December 4, 2017

Specific Support to Georgia: Horizon 2020 Policy Support Facility mission (1) Mission dates: December 4-7, 2017 Agenda Monday, December 4, 2017 13:00 – 14:00 Meeting: Dr. Mikheil Chkhenkeli, Minister of Education and Science of Georgia; Dr. Alexander Tevzadze, Deputy Minister of Education and Science of Georgia. Venue: Ministry of Education and Science of Georgia, Address: #52 Dimitri Uznadze Str., Tbilisi, Georgia. 15:00 – 17:00 Meeting with Rectors of Major State Research Universities of Georgia: - Ivane Javakhishvili Tbilisi State University: Dr. George Sharvashidze, Rector; - Ilia State University: Dr. Giga Zedania, Rector; - Georgian Technical University: Dr. Archil Prangishvili; - Tbilisi State Medical University: Dr. Zurab Vadachkoria, Rector; - Sokhumi State University: Dr. Zurab Khonelidze, Rector; - Akaki Tsereteli State University: Dr. George Ghavtadze; - Shota Meskhia State Teaching University of Zugdidi: Dr. Tea Khupenia; - Batumi Shota Rustaveli State University: Dr. Natia Tsiklashvili; - Samtskhe-Javakheti State University: Merab Beridze/Maka Kachkachishvili-Beridze. Venue: Ivane Javakhishvili Tbilisi State University, Address: #1 Chavchavadze ave., Room #107. 17:30 – 18:30 Meeting with representatives of MoES, SRNSFG and the delegation of the European Union to Georgia: Mr. Kakha Khandolishvili, Ms. Natia Gabitashvili, Ms. Manana Mikaberidze, Dr. Nino Gachechiladze, Dr. Ekaterine Kldiashvili, Ms. Mariam Keburia, Ms. Nika Kochishvili. Venue: Shota Rustaveli National Science Foundation of Georgia, Address: #1 Aleksidze Street, III floor, Conference Hall. Wrap-up -

Title of the Program: Economics the Program Is Carried out with the International School of Economics at Tbilisi State University (ISET)

Title of the Program: Economics The program is carried out with the International School of Economics at Tbilisi State University (ISET) Academic Degree Offered: PhD in Economics. Head of the PhD program: Wilfred J. Ethier, Professor of the University of Pennsylvania, ISET Academic Director. Qualification of the program: a) Goals and Aims of the Program: To educate highly qualified economists who are in demand by educational institutions and the public and private sectors; To conduct research and provide education in economics that meet international standards with regard to the interest of the public and private sectors; To establish new methods of economic research and learning in the South Caucasus; To educate those students (from the South Caucasus) who are not able or who do not wish to continue their studies abroad. Students will be able to gain knowledge in accordance with international standards and earn a PhD degree in economics without leaving Georgia. b) Learning outcome: Upon completion of the program students will be able to demonstrate: fundamental knowledge of modern principles and methods of macro and micro economics, transition economics, economic geography, environmental economics, labor economics, monetary and political economics; the ability to independently apply acquired knowledge and solve problems in an original way; skills for making optimal decisions regarding the role of the government and economic development; skills in solving practical problems by using theoretical concepts and approaches; multicultural experience, as the students get the opportunity to study with students from the South Caucasus and also work with professors from Western countries; knowledge of modern methods and approaches in economic science; teaching skills. -

PDF Format: Ranking of Academic Institutions by Sub-area

Ranking of Academic Institutions by OECD Sub-area 2020 5. Social Sciences > 5.06 Political Science COUNTRY INSTITUTION RANK SCORE USA Harvard University 1 5,000 UNITED KINGDOM University of Oxford 2 5,000 UNITED KINGDOM London School Economics & Political Science 3 5,000 USA Stanford University 4 5,000 NETHERLANDS University of Amsterdam 5 5,000 USA Princeton University 6 5,000 USA Columbia University 7 5,000 UNITED KINGDOM University of Manchester 8 5,000 USA New York University 9 5,000 UNITED KINGDOM University of Warwick 10 5,000 USA University of Michigan 11 5,000 USA Yale University 12 5,000 AUSTRALIA Australian National University 13 5,000 UNITED KINGDOM University College London 14 5,000 NORWAY University of Oslo 15 5,000 UNITED KINGDOM Kings College London 16 5,000 USA Georgetown University 17 5,000 UNITED KINGDOM University of Edinburgh 18 5,000 DENMARK Aarhus University 19 5,000 USA University of California Berkeley 20 5,000 ITALY European University Institute 21 5,000 NETHERLANDS Utrecht University 22 5,000 SWITZERLAND University of Zurich 23 5,000 USA University of Pennsylvania 24 5,000 NETHERLANDS Erasmus University Rotterdam 25 5,000 NETHERLANDS Leiden University 26 5,000 USA Duke University 27 5,000 UNITED KINGDOM University of Cambridge 28 5,000 UNITED KINGDOM University of Nottingham 29 5,000 USA George Washington University 30 5,000 SWEDEN University of Gothenburg 31 5,000 UNITED KINGDOM University of Exeter 32 5,000 USA Cornell University 33 5,000 DENMARK University of Copenhagen 34 5,000 CANADA University of -

CURRICULUM VITAE (CV) Name, Surname: Giorgi Khishtovani, Phd

CURRICULUM VITAE (CV) Name, Surname: Giorgi Khishtovani, PhD Country of Citizenship/Residence: Georgia Education: - Doctoral Studies (Dr. rer. pol.), University of Bremen, Faculty of Business Studies and Economics (2011-2014) Doctoral Thesis: “The Transformation of Governance Structures in Georgia, 2003-2012” - Master of Law (LL.M.), Major: Entrepreneurial Law, University of Trier, Faculty of Law (2008-2009) Master’s Thesis: “Mergers and Acquisitions Warranty Rights” - Master of Economic and Social Studies, Major: Finance and Investments, University of Trier, Faculty of Business Administration and Economics (2005-2008) Master’s Thesis: “Critical Analysis of “Discounted Cash-Flow”-Method” - B.A. in Business and Law, Georgian Technical University (2000-2005) Student Exchange Year, Leuphana University, Germany (2003-2004) Bachelor’s Thesis: “Activities of International Financial Institutions in Developing Countries” Employment record on academic positions (columns: Period; Employing organization and your title/position, contact info for references; Country; Summary of activities performed): 12/2016-present; Iliauni Business School, Ilia State University, Associate Professor and Head of “Finance and Investment” Department; Georgia; Teaching classes in Finance and Investment on BA and MA levels: • Firm Valuation, • Fundamentals of Financial Management, • Advanced Finance, • Insurance Business Cases, • Regulatory Impact Assessment (RIA), • Foundations of Business. 09/2015-12/2016; Department of Finance; Georgia; Teaching classes in Finance and Investment: