UC Riverside Electronic Theses and Dissertations

Recommended publications

Osmand - This Article Describes How to Use Key Feature

HowTo Articles (http://code.google.com/p/osmand/wiki/HowToArticles#First_steps). Osmand: navigation and routing based on OpenStreetMap for Android devices. This article describes how to use key features. How-to topics: First steps; Understand vector and raster maps; Download data; Find on map; Filter POI; Customize map view; Arrange layers and overlays; Manage favorite places; Navigate to point; Use routing; Use voice routing; Limit internet usage. Introduction: This article helps you understand how to use the application and gives you ideas about how its functionality could be used. First steps: First, think about which features are most useful and suitable for you. You can use Osmand online and offline to display many online maps as well as pre-downloaded, very compact OpenStreetMap "vector" map files. You can search for and find addresses, places of interest (POI) and favorites; find routes for navigating by car, by bike and on foot; and record, replay and follow self-created or downloaded GPX tracks on foot and by bike. You can find public transport stops, lines and even the shortest public transport routes. You can use extensive filter options to show and find POIs. You can share your position with friends by email or SMS text message.

Comparison of GUI Testing Tools for Android Applications

Comparison of GUI testing tools for Android applications. University of Oulu, Department of Information Processing Science. Master’s Thesis. Tomi Lämsä. Date 22.5.2017. Abstract: Test automation is an intriguing area of software engineering, especially in Android development. This is because Android applications must be able to run in many different permutations of operating system versions and hardware choices. Comparison of different tools for automated UI testing of Android applications is done in this thesis. In a literature review, several available tools and their popularity are researched, and the structure of the most popular tools is examined. The two tools identified as the most popular are Appium and Espresso. In an empirical study, the two tools along with Robotium, UiAutomator and Tau are compared against each other in test execution speed, maintainability of the test code, reliability of the test tools and in general issues. The empirical study was carried out by selecting three Android applications for which an identical suite of tests was developed with each tool. The test suites were then run, and the execution speed and reliability were analysed based on these results. The test code written is also analysed for maintainability by calculating the lines of code and the number of method calls needed to handle asynchrony related to UI updates. The issues faced by the test developer with the different tools are also analysed. This thesis aims to help industry users of these kinds of applications in two ways. First, it could be used as a source on what tools are overall available for UI testing of Android applications.

Pysal Documentation Release 1.14.3

pysal Documentation, Release 1.14.3. PySAL Developers, Oct 04, 2019. Contents: 1 User Guide; 2 Developer Guide; 3 Library Reference; Bibliography. Releases: Stable 1.14.3 (Released 2017-11-2); Development. PySAL is an open source library of spatial analysis functions written in Python intended to support the development of high level applications. PySAL is open source under the BSD License. Chapter 1, User Guide. 1.1 Introduction (History; Scope; Research Papers and Presentations). 1.1.1 History: PySAL grew out of a collaborative effort between Luc Anselin’s group previously located at the University of Illinois, Champaign-Urbana, and Serge Rey who was at San Diego State University. It was born out of a recognition that the respective projects at the two institutions, PySpace (now GeoDaSpace) and STARS - Space Time Analysis of Regional Systems, could benefit from a shared analytical core, since this would limit code duplication and free up additional developer time to focus on enhancements of the respective applications. This recognition also came at a time when Python was starting to make major inroads in geographic information systems as represented by projects such as the Python Cartographic Library, Shapely and ESRI’s adoption of Python as a scripting language, among others. At the same time there was a dearth of Python modules for spatial statistics, spatial econometrics, location modeling and other areas of spatial analysis, and the role for PySAL was then expanded beyond its support of STARS and GeoDaSpace to provide a library of core spatial analytical functions that could support the next generation of spatial analysis applications.

Assessing the Credibility of Volunteered Geographic Information: the Case of Openstreetmap

ASSESSING THE CREDIBILITY OF VOLUNTEERED GEOGRAPHIC INFORMATION: THE CASE OF OPENSTREETMAP. BANI IDHAM MUTTAQIEN. Enschede, The Netherlands, February, 2017. Thesis submitted to the Faculty of Geo-Information Science and Earth Observation of the University of Twente in partial fulfillment of the requirements for the degree of Master of Science in Geo-information Science and Earth Observation. Specialization: Geoinformatics. SUPERVISORS: Dr. F.O. Ostermann, Dr. ir. R.L.G. Lemmens. THESIS ASSESSMENT BOARD: Prof. Dr. M.J. Kraak (Chair), Dr. S. Jirka (External Examiner, 52°North Initiative for Geospatial Open Source Software GmbH). DISCLAIMER: This document describes work undertaken as part of a program of study at the Faculty of Geo-Information Science and Earth Observation of the University of Twente. All views and opinions expressed therein remain the sole responsibility of the author, and do not necessarily represent those of the Faculty. ABSTRACT: The emerging paradigm of Volunteered Geographic Information (VGI) in the geospatial domain is an interesting research topic, since the use of this type of information in a wide range of application domains has grown extensively. It is important to identify the quality and fitness-of-use of VGI because of the non-standardized and crowdsourced data collection process as well as the unknown skill and motivation of the contributors. Assessing VGI quality against an external data source is still debatable because external data may be unavailable or not even comparable. Hence, this study proposes the intrinsic measure of quality through the notion of credibility.

Mobile Developer's Guide to the Galaxy

Don’t Panic. MOBILE DEVELOPER’S GUIDE TO THE GALAXY. UPDATED & EXTENDED 12th EDITION. Published by: Enough Software GmbH + Co. KG (Services and Tools for All Mobile Platforms), Sögestrasse 70, 28195 Bremen, Germany, www.enough.de. Please send your feedback, questions or sponsorship requests to: [email protected]. Follow us on Twitter: @enoughsoftware. 12th Edition, February 2013. This Developer Guide is licensed under the Creative Commons Some Rights Reserved License. Editors: Marco Tabor (Enough Software), Julian Harty, Izabella Balce. Art Direction and Design by Andrej Balaz (Enough Software). Contents: Prologue; The Galaxy of Mobile: An Introduction; Topology: Form Factors and Usage Patterns; Star Formation: Creating a Mobile Service; The Universe of Mobile Operating Systems; About Time and Space; Lost in Space; Conceptional Design For Mobile; Capturing The Idea; Designing User Experience; Android (The Ecosystem; Prerequisites; Implementation; Testing; Building; Signing; Distribution; Monetization); BlackBerry Java Apps (The Ecosystem; Prerequisites; Implementation; Testing; Signing; Distribution; Learn More); BlackBerry 10 (The Ecosystem; Development; Testing; Signing; Distribution); iOS (The Ecosystem; Technology Overview; Testing & Debugging; Learn More); Java ME (J2ME) (The Ecosystem; Prerequisites; Implementation; Testing; Porting; Signing; Distribution; Learn More); Windows Phone (The Ecosystem; Implementation; Testing

There Are Two Dedicated Dspace IRC (Internet Relay Chat) Channels (I.E

IRC Code of Conduct: All DSpace support channels, mailing lists and meetings follow the LYRASIS Code of Conduct. We ask you to remain respectful in all discussions. We also encourage you to report any violations of the code of conduct to LYRASIS (see the Code of Conduct for more details). DSpace chat has moved to Slack: Our old IRC channels are essentially unmonitored these days. Instead, we recommend joining our DSpace.org Slack for any chat-based support. You are also welcome to email your question to the dspace-tech Mailing List as an alternative, or choose one of the other DSpace Support options. There are two dedicated DSpace IRC (internet relay chat) channels (i.e. "rooms") on the irc.freenode.net server: #dspace (irc://irc.freenode.net/dspace), for all DSpace discussions and live Q & A. This channel is unlogged. #duraspace (irc://irc.freenode.net/duraspace), the old duraspace community channel (no longer used). As of July 2018, this channel is unlogged. Prior years' logs are still available/archived. If you do not have access to an IRC client, or are new to IRC, the following web interfaces are a very easy way to get started: Log in to #dspace IRC at: http://webchat.freenode.net?channels=dspace. Enter a nickname of your choice (e.g. firstName_lastName) and click "Connect". Log in to #duraspace IRC at: http://webchat.freenode.net?channels=duraspace. Enter a nickname of your choice (e.g. firstName_lastName) and click "Connect". Or you can log in to both IRC channels at the same time: http://webchat.freenode.net?channels=duraspace,dspace. For a list of IRC client software, see: http://en.wikipedia.org/wiki/Comparison_of_Internet_Relay_Chat_clients. Many current Instant Messaging (IM) clients also support IRC.

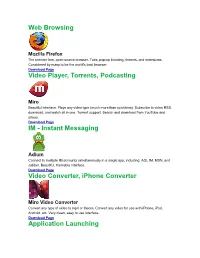

Instant Messaging Video Converter, Iphone Converter Application

Web Browsing: Mozilla Firefox. The premier free, open-source browser. Tabs, pop-up blocking, themes, and extensions. Considered by many to be the world's best browser. Video Player, Torrents, Podcasting: Miro. Beautiful interface. Plays any video type (much more than QuickTime). Subscribe to video RSS, download, and watch all in one. Torrent support. Search and download from YouTube and others. IM - Instant Messaging: Adium. Connect to multiple IM accounts simultaneously in a single app, including: AOL IM, MSN, and Jabber. Beautiful, themable interface. Video Converter, iPhone Converter: Miro Video Converter. Convert any type of video to MP4 or Theora. Convert any video for use with iPhone, iPod, Android, etc. Very clean, easy to use interface. Application Launching: Quicksilver. Quicksilver lets you start applications (and do just about everything) with a few quick taps of your fingers. Warning: start using Quicksilver and you won't be able to imagine using a Mac without it. Email: Mozilla Thunderbird. Powerful spam filtering, solid interface, and all the features you need. Utilities: The Unarchiver. Uncompress RAR, 7zip, tar, and bz2 files on your Mac. Many new Mac users will be puzzled the first time they download a RAR file. Do them a favor and download UnRarX for them! DVD Ripping: Handbrake. DVD ripper and MPEG-4 / H.264 encoding. Very simple to use. RSS: Vienna. Very nice, native RSS client. RSSOwl. Solid cross-platform RSS client. Peer-to-Peer Filesharing: Cabos. A simple, easy to use filesharing program.

Navegação Turn-By-Turn Em Android Relatório De Estágio Para A

INSTITUTO POLITÉCNICO DE COIMBRA, INSTITUTO SUPERIOR DE ENGENHARIA DE COIMBRA. Navegação Turn-by-Turn em Android (Turn-by-Turn Navigation on Android). Internship report submitted for the degree of Master in Computer Science and Systems, Specialization in Software Development. Author: Luís Miguel dos Santos Henriques. Advisor: Professor Doutor João António Pereira Almeida Durães, Professor in the Department of Computer Engineering and Systems, Instituto Superior de Engenharia de Coimbra. Supervisor: Professor Doutor Bruno Miguel Brás Cabral, Sentilant. Coimbra, February 2019. Acknowledgments: To my parents for all the support they gave me, to my brother for the inspiration, to my girlfriend for all her love and patience, to my cousin for making me believe that it is never too late, to my professors for giving me this second chance: to all of you I owe the new direction of my life. Thank you. Abstract: This report describes the work done during the internship of the Master's degree in Computer Science and Systems, Specialization in Software Development, from the Polytechnic of Coimbra - ISEC. This internship, which began on October 17, 2017 and ended on July 18, 2018, took place in the company Sentilant, and had as its main goal the development of a turn-by-turn navigation module for a logistics management application named Drivian Tasks. During the internship activities, a turn-by-turn navigation module was developed from scratch, while matching the specifications indicated by the project managers in the host entity.

Free Your Android! Not Free As in Free Beer About the FSFE This flyer Was Printed by the Free Software You Don't Have to Pay for the Apps from F-Droid

Free as in Freedom. Free Your Android! Get a Free App Store for Your Android. Not Free as in Free Beer: You don't have to pay for the apps from F-Droid. A lot of applications from Google Play or Apple's App Store are also free of charge. However, Free Software is not about price, but liberty. When you don't control a program, the program controls you. Whoever controls the software therefore controls you. For example, nobody is allowed to study how a non-free app works and what it actually does on your phone. Sometimes it just doesn't do exactly what you want, but there are also apps that contain malicious features like leaking your data without your knowledge. Running exclusively Free Software on your device puts you in full control. Even though you may not have the skills to directly exercise all of your freedom, you benefit from a vibrant community that is enabled by freedom and uses it collaboratively. About the FSFE: This flyer was printed by the Free Software Foundation Europe (FSFE), a non-profit organisation dedicated to promoting Free Software and working to build a free digital society. Access to software determines how we can take part in our society. Therefore, FSFE is dedicated to ensure equal access and participation in the information age by fighting for digital freedom. Nobody should ever be forced to use software that does not grant the freedoms to use, study, share and improve the software. You should have the right to shape technology as you see fit.

Behavioral Analysis of Android Applications Using Automated Instrumentation

2013 Seventh International Conference on Software Security and Reliability Companion. Behavioral Analysis of Android Applications Using Automated Instrumentation. Mohammad Karami, Mohamed Elsabagh, Parnian Najafiborazjani, and Angelos Stavrou. Computer Science Department, George Mason University, Fairfax, VA 22030. {mkarami, melsabag, pnajafib, astavrou}@gmu.edu. Abstract: Google’s Android operating system has become one of the most popular operating systems for hand-held devices. Due to its ubiquitous use, open source nature and wide-spread popularity, it has become the target of recent mobile malware. In this paper, we present our efforts on effective security inspection mechanisms for identification of malicious applications for Android mobile applications. To achieve that, we developed a comprehensive software inspection framework. Moreover, to identify potential software reliability flaws and to trigger malware, we develop a transparent instrumentation system for automating user interactions with an Android application that does not require source code. Additionally, for run-time behavior analysis of an application, we monitor the I/O system calls generated by the application under monitoring to the underlying Linux ... Introduction (excerpt): ... application is not a straightforward task due to variety of inputs and heterogeneity of the technologies [12]. Two primary methods are being employed for mobile application analysis: the white-box approach and the black-box approach. In black-box testing only the inputs and outputs of the application are being exercised. On the other hand, for the white-box approach the source code needs to be analyzed. Since the source code of the malicious applications that we get from Google Play is not available, we cannot analyze the internal structure of the malicious applications to figure out what they exactly do, but we can utilize black-box testing to define their functionality.

Unit Testing, Integration Testing and Continuous Builds for Android

Unit Testing, Integration Testing and Continuous Builds. Manfred Moser, simpligility technologies inc., http://www.simpligility.com, @simpligility. Agenda: Get an overview about testing and continuous integration for Android app development. Why testing? What can we test? How can we do it? Apache Maven: See previous presentation. Maven used to control build and more. Good library reuse and dependency use, which makes testing easier out of the box. Strong tool support. But it's all possible without Maven too... Why (automated) testing? Find problem early and you ● Can fix it quickly ● Save money on QA testing ● Do not get negative customer feedback ● Deal with feature requests instead of bugs ● Avoid production problems ● Can refactor (and change) without breaking old stuff. What are we testing? Plain Java code; Android dependent code; Configuration; User interface; Look and feel. JVM vs Dalvik/Android stack. JVM based: ● Faster ● More tools available ● More mature tooling. Dalvik based: ● Necessary for integration tests ● Reproduce actual behaviour ● Full stack testing (beyond VM, to native..). JVM testing tools: ● JUnit ● TestNG ● EasyMock ● Unitils ● Cobertura ● Emma ● and many more. Android SDK Test Tools: ● Integrated JUnit (use on emulator/device though) ● Instrumentation Test Tools (rich set of classes for testing; now well documented) ● MonkeyRunner (control device/emulator running tests; take screenshots; jython). Dalvik/Android

Implementazione Dell'accessibilità in Opentripplanner

ALMA MATER STUDIORUM – UNIVERSITÀ DI BOLOGNA, Cesena Campus, School of Science, Degree Programme in Computer Science and Technologies. Implementazione dell’accessibilità in OpenTripPlanner (Implementation of Accessibility in OpenTripPlanner). Final report in Web Technologies. Supervisor: Dott.ssa Paola Salomoni. Presented by: Stefano Nicoletti. Co-supervisor: Dott.ssa Catia Prandi. Session III, Academic Year 2014/2015. Contents: Introduction; 1 Accessible Web and Accessible Map; 1.1 Accessible Web; 1.1.1 Definition; 1.1.2 ISO Standards; 1.1.3 Guidelines and Laws; 1.2 Accessible Map; 1.2.1 Background; 1.2.2 Themes and Targets; 1.2.3 Techniques for Making Maps Accessible