Defining and Detecting Environment Discrimination in Android Apps

Recommended publications

-

The Technology That Brings Together All Things Mobile

NFC – The Technology That Brings Together All Things Mobile Philippe Benitez Wednesday, June 4th, 2014 NFC enables fast, secure, mobile contactless services… Card Emulation Mode Reader Mode P2P Mode … for both payment and non-payment services Hospitality – Hotel room keys Mass Transit – passes and limited use tickets Education – Student badge Airlines – Frequent flyer card and boarding passes Enterprise & Government– Employee badge Automotive – car sharing / car rental / fleet management Residential - Access Payment – secure mobile payments Events – Access to stadiums and large venues Loyalty and rewards – enhanced consumer experience 3 h h 1996 2001 2003 2005 2007 2014 2014 2007 2005 2003 2001 1996 previous experiences experiences previous We are benefiting from from benefiting are We Barriers to adoption are disappearing ! NFC Handsets have become mainstream ! Terminalization is being driven by ecosystem upgrades ! TSM Provisioning infrastructure has been deployed Barriers to adoption are disappearing ! NFC Handsets have become mainstream ! Terminalization is being driven by ecosystem upgrades ! TSM Provisioning infrastructure has been deployed 256 handset models now in market worldwide Gionee Elife E7 LG G Pro 2 Nokia Lumia 1020 Samsung Galaxy Note Sony Xperia P Acer E320 Liquid Express Google Nexus 10 LG G2 Nokia Lumia 1520 Samsung Galaxy Note 3 Sony Xperia S Acer Liquid Glow Google Nexus 5 LG Mach Nokia Lumia 2520 Samsung Galaxy Note II Sony Xperia Sola Adlink IMX-2000 Google Nexus 7 (2013) LG Optimus 3D Max Nokia Lumia 610 NFC Samsung -

USER MANUAL Welcome!

M351 USER MANUAL Welcome! An Internet phone of a brand new generation, Meizu M351 is designed to bring users countless surprise and joy. users are welcome to visit the official MEIZU website at: http://www.meizu.com On our website, users can browse for software, download firmware upgrades, participate in online discussions, learn more using tips, and much more! Because the constant improvements we made for our products, the features found in the user manual users are currently reading may differ from the actual product. Make sure to always download the latest manual from our official website. This manual was last updated January 18, 2011. This manual was last updated July 22, 2013. Meizu M351 Legal information © 2003-2012 Meizu Inc. All rights reserved. Meizu and the Meizu logo are trademarks belonging to Meizu both in the PRC and overseas. Google, Google logo, Android, Google search, Gmail, Google Mail and Android Market are trademarks of Google, Inc. Street View Images © 2010 Google. Bluetooth and the Bluetooth logo are trademarks of Bluetooth SIG, Inc. Java, J2ME and all other Java-based trademarks are registered trademarks belonging to Sun Microsystems, Inc. in the United States and other countries. Meizu (or Meizu's licensors) own all legal rights to the product, trademarks and interests, including but not limited to any intellectual property rights found in services (whether those rights have been registered, and regardless of where in the world those rights may exist). Meizu company services may include information designated as confidential. Without the prior written consent of Meizu; transcription, replication, reproduction or translation of some or all of said contents are prohibited. -

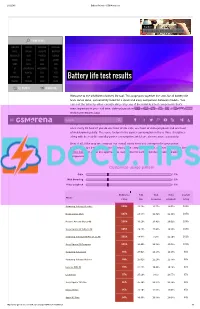

Battery Life Test Results HUAWEI TOSHIBA INTEX PLUM

2/12/2015 Battery life tests GSMArena.com Starborn SAMSUNG GALAXY S6 EDGE+ REVIEW PHONE FINDER SAMSUNG LENOVO VODAFONE VERYKOOL APPLE XIAOMI GIGABYTE MAXWEST MICROSOFT ACER PANTECH CELKON NOKIA ASUS XOLO GIONEE SONY OPPO LAVA VIVO LG BLACKBERRY MICROMAX NIU HTC ALCATEL BLU YEZZ MOTOROLA ZTE SPICE PARLA Battery life test results HUAWEI TOSHIBA INTEX PLUM ALL BRANDS RUMOR MILL Welcome to the GSMArena battery life tool. This page puts together the stats for all battery life tests we've done, conveniently listed for a quick and easy comparison between models. You can sort the table by either overall rating or by any of the individual test components that's most important to you call time, video playback or web browsing.TIP US 828K 100K You can find all about our84K 137K RSS LOG IN SIGN UP testing procedures here. SearchOur overall rating gives you an idea of how much battery backup you can get on a single charge. An overall rating of 40h means that you'll need to fully charge the device in question once every 40 hours if you do one hour of 3G calls, one hour of video playback and one hour of web browsing daily. The score factors in the power consumption in these three disciplines along with the reallife standby power consumption, which we also measure separately. Best of all, if the way we compute our overall rating does not correspond to your usage pattern, you are free to adjust the different usage components to get a closer match. Use the sliders below to adjust the approximate usage time for each of the three battery draining components. -

Test Report on Terminal Compatibility of Huawei's WLAN Products

Huawei WLAN ● Wi-Fi Experience Interoperability Test Reports Test Report on Terminal Compatibility of Huawei's WLAN Products Huawei Technologies Co., Ltd. Test Report on Terminal Compatibility of Huawei's WLAN Products 1 Overview WLAN technology defined in IEEE 802.11 is gaining wide popularity today. WLAN access can replace wired access as the last-mile access solution in scenarios such as public hotspot, home broadband access, and enterprise wireless offices. Compared with other wireless technologies, WLAN is easier to operate and provides higher bandwidth with lower costs, fully meeting user requirements for high-speed wireless broadband services. Wi-Fi terminals are major carriers of WLAN technology and play an essential part in WLAN technology promotion and application. Mature terminal products available on the market cover finance, healthcare, education, transportation, energy, and retail industries. On the basis of WLAN technology, the terminals derive their unique authentication behaviors and implementation methods, for example, using different operating systems. Difference in Wi-Fi chips used by the terminals presents a big challenge to terminal compatibility of Huawei's WLAN products. Figure 1-1 Various WLAN terminals To identify access behaviors and implementation methods of various WLAN terminals and validate Huawei WLAN products' compatibility with the latest mainstream terminals used in various industries, Huawei WLAN product test team carried out a survey on mainstream terminals available on market. Based on the survey result, the team used technologies and methods specific to the WLAN field to test performance indicators of Huawei's WLAN products, including the access capability, authentication and encryption, roaming, protocol, and terminal identification. -

Compatibility Sheet

COMPATIBILITY SHEET SanDisk Ultra Dual USB Drive Transfer Files Easily from Your Smartphone or Tablet Using the SanDisk Ultra Dual USB Drive, you can easily move files from your Android™ smartphone or tablet1 to your computer, freeing up space for music, photos, or HD videos2 Please check for your phone/tablet or mobile device compatiblity below. If your device is not listed, please check with your device manufacturer for OTG compatibility. Acer Acer A3-A10 Acer EE6 Acer W510 tab Alcatel Alcatel_7049D Flash 2 Pop4S(5095K) Archos Diamond S ASUS ASUS FonePad Note 6 ASUS FonePad 7 LTE ASUS Infinity 2 ASUS MeMo Pad (ME172V) * ASUS MeMo Pad 8 ASUS MeMo Pad 10 ASUS ZenFone 2 ASUS ZenFone 3 Laser ASUS ZenFone 5 (LTE/A500KL) ASUS ZenFone 6 BlackBerry Passport Prevro Z30 Blu Vivo 5R Celkon Celkon Q455 Celkon Q500 Celkon Millenia Epic Q550 CoolPad (酷派) CoolPad 8730 * CoolPad 9190L * CoolPad Note 5 CoolPad X7 大神 * Datawind Ubislate 7Ci Dell Venue 8 Venue 10 Pro Gionee (金立) Gionee E7 * Gionee Elife S5.5 Gionee Elife S7 Gionee Elife E8 Gionee Marathon M3 Gionee S5.5 * Gionee P7 Max HTC HTC Butterfly HTC Butterfly 3 HTC Butterfly S HTC Droid DNA (6435LVW) HTC Droid (htc 6435luw) HTC Desire 10 Pro HTC Desire 500 Dual HTC Desire 601 HTC Desire 620h HTC Desire 700 Dual HTC Desire 816 HTC Desire 816W HTC Desire 828 Dual HTC Desire X * HTC J Butterfly (HTL23) HTC J Butterfly (HTV31) HTC Nexus 9 Tab HTC One (6500LVW) HTC One A9 HTC One E8 HTC One M8 HTC One M9 HTC One M9 Plus HTC One M9 (0PJA1) -

Electronic 3D Models Catalogue (On July 26, 2019)

Electronic 3D models Catalogue (on July 26, 2019) Acer 001 Acer Iconia Tab A510 002 Acer Liquid Z5 003 Acer Liquid S2 Red 004 Acer Liquid S2 Black 005 Acer Iconia Tab A3 White 006 Acer Iconia Tab A1-810 White 007 Acer Iconia W4 008 Acer Liquid E3 Black 009 Acer Liquid E3 Silver 010 Acer Iconia B1-720 Iron Gray 011 Acer Iconia B1-720 Red 012 Acer Iconia B1-720 White 013 Acer Liquid Z3 Rock Black 014 Acer Liquid Z3 Classic White 015 Acer Iconia One 7 B1-730 Black 016 Acer Iconia One 7 B1-730 Red 017 Acer Iconia One 7 B1-730 Yellow 018 Acer Iconia One 7 B1-730 Green 019 Acer Iconia One 7 B1-730 Pink 020 Acer Iconia One 7 B1-730 Orange 021 Acer Iconia One 7 B1-730 Purple 022 Acer Iconia One 7 B1-730 White 023 Acer Iconia One 7 B1-730 Blue 024 Acer Iconia One 7 B1-730 Cyan 025 Acer Aspire Switch 10 026 Acer Iconia Tab A1-810 Red 027 Acer Iconia Tab A1-810 Black 028 Acer Iconia A1-830 White 029 Acer Liquid Z4 White 030 Acer Liquid Z4 Black 031 Acer Liquid Z200 Essential White 032 Acer Liquid Z200 Titanium Black 033 Acer Liquid Z200 Fragrant Pink 034 Acer Liquid Z200 Sky Blue 035 Acer Liquid Z200 Sunshine Yellow 036 Acer Liquid Jade Black 037 Acer Liquid Jade Green 038 Acer Liquid Jade White 039 Acer Liquid Z500 Sandy Silver 040 Acer Liquid Z500 Aquamarine Green 041 Acer Liquid Z500 Titanium Black 042 Acer Iconia Tab 7 (A1-713) 043 Acer Iconia Tab 7 (A1-713HD) 044 Acer Liquid E700 Burgundy Red 045 Acer Liquid E700 Titan Black 046 Acer Iconia Tab 8 047 Acer Liquid X1 Graphite Black 048 Acer Liquid X1 Wine Red 049 Acer Iconia Tab 8 W 050 Acer -

Presentación De Powerpoint

Modelos compatibles de Imóvil No. Marca Modelo Teléfono Versión del sistema operativo 1 360 1501_M02 1501_M02 Android 5.1 2 100+ 100B 100B Android 4.1.2 3 Acer Iconia Tab A500 Android 4.0.3 4 ALPS (Golden Master) MR6012H1C2W1 MR6012H1C2W1 Android 4.2.2 5 ALPS (Golden Master) PMID705GTV PMID705GTV Android 4.2.2 6 Amazon Fire HD 6 Fire HD 6 Fire OS 4.5.2 / Android 4.4.3 7 Amazon Fire Phone 32GB Fire Phone 32GB Fire OS 3.6.8 / Android 4.2.2 8 Amoi A862W A862W Android 4.1.2 9 amzn KFFOWI KFFOWI Android 5.1.1 10 Apple iPad 2 (2nd generation) MC979ZP iOS 7.1 11 Apple iPad 4 MD513ZP/A iOS 7.1 12 Apple iPad 4 MD513ZP/A iOS 8.0 13 Apple iPad Air MD785ZP/A iOS 7.1 14 Apple iPad Air 2 MGLW2J/A iOS 8.1 15 Apple iPad Mini MD531ZP iOS 7.1 16 Apple iPad Mini 2 FE276ZP/A iOS 8.1 17 Apple iPad Mini 3 MGNV2J/A iOS 8.1 18 Apple iPhone 3Gs MC132ZP iOS 6.1.3 19 Apple iPhone 4 MC676LL iOS 7.1.2 20 Apple iPhone 4 MC603ZP iOS 7.1.2 21 Apple iPhone 4 MC604ZP iOS 5.1.1 22 Apple iPhone 4s MD245ZP iOS 8.1 23 Apple iPhone 4s MD245ZP iOS 8.4.1 24 Apple iPhone 4s MD245ZP iOS 6.1.2 25 Apple iPhone 5 MD297ZP iOS 6.0 26 Apple iPhone 5 MD298ZP/A iOS 8.1 27 Apple iPhone 5 MD298ZP/A iOS 7.1.1 28 Apple iPhone 5c MF321ZP/A iOS 7.1.2 29 Apple iPhone 5c MF321ZP/A iOS 8.1 30 Apple iPhone 5s MF353ZP/A iOS 8.0 31 Apple iPhone 5s MF353ZP/A iOS 8.4.1 32 Apple iPhone 5s MF352ZP/A iOS 7.1.1 33 Apple iPhone 6 MG492ZP/A iOS 8.1 34 Apple iPhone 6 MG492ZP/A iOS 9.1 35 Apple iPhone 6 Plus MGA92ZP/A iOS 9.0 36 Apple iPhone 6 Plus MGAK2ZP/A iOS 8.0.2 37 Apple iPhone 6 Plus MGAK2ZP/A iOS 8.1 -

Lista De Compatibilidad Dispositivo Mitpv

Lista de Compatibilidad Dispositivo miTPV MARCA MODELO SISTEMA OPERATIVO 100+ 100B Android 4.1.2 360 1501_M02 Android 5.1 Acer Iconia Tab Android 4.0.3 ALPS (Golden Master) MR6012H1C2W1 Android 4.2.2 ALPS (Golden Master) PMID705GTV Android 4.2.2 Amazon Fire HD 6 Fire OS 4.5.2 / Android 4.4.3 Amazon Fire Phone 32GB Fire OS 3.6.8 / Android 4.2.2 Amoi A862W Android 4.1.2 amzn KFFOWI Android 5.1.1 Apple iPad 2 (2nd generation) iOS 7.1 Apple iPad 4 iOS 7.1 Apple iPad 4 iOS 8.0 Apple iPad Air iOS 7.1 Apple iPad Air 2 iOS 8.1 Apple iPad Mini iOS 7.1 Apple iPad Mini 2 iOS 8.1 Apple iPad Mini 3 iOS 8.1 Apple iPhone 3Gs iOS 6.1.3 Apple iPhone 4 iOS 7.1.2 Apple iPhone 4 iOS 7.1.2 Apple iPhone 4 iOS 5.1.1 Apple iPhone 4s iOS 8.1 Apple iPhone 4s iOS 8.4.1 Apple iPhone 4s iOS 6.1.2 Apple iPhone 5 iOS 6.0 Apple iPhone 5 iOS 8.1 Apple iPhone 5 iOS 7.1.1 Apple iPhone 5c iOS 7.1.2 Apple iPhone 5c iOS 8.1 Apple iPhone 5s iOS 8.0 Apple iPhone 5s iOS 8.4.1 Apple iPhone 5s iOS 7.1.1 Apple iPhone 6 iOS 9.1 Apple iPhone 6 iOS 8.1 Apple iPhone 6 Plus iOS 9.0 Apple iPhone 6 Plus iOS 8.0.2 Apple iPhone 6 Plus iOS 8.1 Apple iPhone 6s iOS 9.1 Apple iPhone 6s Plus iOS 9.1 Apple iPod touch 4th Generation iOS 5.1.1 Apple iPod touch 4th Generation iOS 5.0.1 Apple iPod touch 5th Generation 16GB iOS 8.1 Apple iPod touch 5th Generation 32GB iOS 6.1.3 Aquos IS11SH Android 2.3.3 Aquos IS12SH Android 2.3.3 Lista de Compatibilidad Dispositivo miTPV MARCA MODELO SISTEMA OPERATIVO Aquos IS13SH Android 2.3.5 Aquos SH-12C Android 2.3.3 Aquos SH-13C Android 2.3.4 Arrow Girls' Popteen -

Mobile Mcode 3D Cover Mold Apple Iphone 4-EM02 EM01 Yes 0 Apple Iphone 4S-EM01 EM02 Yes 0 Apple Iphone 5/5S/SE-EM04 EM03 Yes

ExclusiveBay Solutions More than 500 models of 3D Sublimation Mobile Covers, Molds, Silicon Whatsapp or Call +91-8199993691, +91-8587095427 Mobile Mcode 3D Cover Mold Apple Iphone 4-EM02 EM01 Yes 0 Apple Iphone 4S-EM01 EM02 Yes 0 Apple Iphone 5/5s/SE-EM04 EM03 Yes 0 Apple Iphone 5S/5/SE-EM03 EM04 Yes 0 Apple Iphone 6-EM06 EM05 Yes 0 Apple Iphone 6S-EM05 EM06 Yes 0 Samsung Galaxy J7 (2015) EM08 Yes 0 Samsung Galaxy J1 (2015) EM09 Yes Yes Samsung Galaxy J5 (2016) EM10 Yes Yes Samsung Galaxy J5 (2015) EM11 Yes 0 Samsung Galaxy J2 (2016)-EM96 EM12 Yes 0 Samsung Galaxy J2 (2015) EM13 Yes 0 Xiaomi Redmi Note 3 EM14 Yes 0 Samsung Galaxy J7 (2016) EM15 Yes 0 Xiaomi Mi 5 EM16 Yes 0 Xiaomi Mi 4 EM17 Yes Yes Xiaomi Mi 4i EM18 Yes 0 Samsung Galaxy E5(E500) (2016) EM19 Yes 0 Samsung Galaxy E7(E700) (2016) EM20 Yes 0 Motorola Moto X Play EM21 Yes 0 Motorola Moto G Turbo-EM24-EM29 EM22 Yes 0 Motorola Moto X Style EM23 Yes 0 Motorola Moto Turbo-EM22-EM29 EM24 Yes 0 Motorola Moto X Force-EM563 EM25 Yes 0 Motorola Moto X 2nd Gen EM26 Yes 0 Motorola Moto G 1st Gen EM27 Yes 0 Motorola Moto G 2nd Gen EM28 Yes Yes Motorola Moto G 3rd Gen-EM22 EM29 Yes 0 Motorola Moto E 1st Gen EM30 Yes Yes Lenovo Vibe K5-EM45 EM31 Yes Yes Lenovo Vibe K4 Note/A7010-EM272 EM32 Yes 0 Lenovo A2010 4G-EM606/441 EM34 Yes 0 Lenovo A7000/K3 Note-EM342 EM35 Yes 0 Huawei P9 EM36 Yes Yes LeEco (LeTV) Le 2 Pro-EM600 EM41 Yes Yes Lenovo K5 Note EM42 Yes 0 Lenovo Vibe K5 Plus-EM31 EM45 Yes 0 Lenovo Vibe P1 Turbo-EM343 EM46 Yes 0 LG G5 EM47 Yes 0 LG Stylus 2 Plus-EM577 EM48 Yes 0 Micromax -

Acer Alcatel Archos ASUS Blackberry Blu Celkon Coolpad (酷派)

Acer Acer A3-A10 Acer EE6 Acer W510 tab Alcatel Alcatel_7049D Flash 2 Pop4S(5095K) Archos Diamond S ASUS ASUS FonePad Note 6 ASUS FonePad 7 LTE ASUS Infinity 2 ASUS MeMo Pad (ME172V) * ASUS MeMo Pad 8 ASUS MeMo Pad 10 ASUS ZenFone 2 ASUS ZenFone 3 Laser ASUS ZenFone 5 (LTE/A500KL) ASUS ZenFone 6 BlackBerry Passport Prevro Z30 Blu Vivo 5R Celkon Celkon Q455 Celkon Q500 Celkon Millenia Epic Q550 CoolPad (酷派) CoolPad 8730 * CoolPad 9190L * CoolPad Note 5 CoolPad X7 大神 * Datawind Ubislate 7Ci Dell Venue 8 Venue 10 Pro Gionee (金立) Gionee E7 * Gionee Elife S5.5 Gionee Elife S7 Gionee Elife E8 Gionee Marathon M3 Gionee S5.5 * Gionee P7 Max HTC HTC Butterfly HTC Butterfly 3 HTC Butterfly S HTC Droid DNA (6435LVW) HTC Droid (htc 6435luw) HTC Desire 10 Pro HTC Desire 500 Dual HTC Desire 601 HTC Desire 620h HTC Desire 700 Dual HTC Desire 816 HTC Desire 816W HTC Desire 828 Dual HTC Desire X * HTC J Butterfly (HTL23) HTC J Butterfly (HTV31) HTC Nexus 9 Tab HTC One (6500LVW) HTC One A9 HTC One E8 HTC One M8 HTC One M9 HTC One M9 Plus HTC One M9 (0PJA1) HTC One (PN07120) HTC One Dual HTC One Max HTC One Mini (PO58220) * HTC One Mini 2 HTC One Remix HTC One X9 HTC One X plus (PM63100) * Huawei (华为) Huawei 荣耀 6 * Huawei 荣耀 6 Plus* Huawei Ascend D2 * Huawei Ascend G535 Huawei Ascend P6 Huawei Ascend Mate * Huawei Ascend Mate2 Huawei B199 * Huawei Enjoy 6 Huawei Honor 6X Huawei Honor 6 Plus Huawei Mate Huawei Mate 2 * Huawei Mate 7 * Huawei Mate 8 Huawei Mediapad 2 Huawei -

Phone Model Phone Model Number OS Version 100B 100B Android 4.1

OS version Phone Model Phone Model Number 100B 100B Android 4.1.2 Iconia Tab A500 Android 4.0.3 MR6012H1C2W1 MR6012H1C2W1 Android 4.2.2 Fire HD 6 Fire HD 6 Fire OS 4.5.2 / Android 4.4.3 Fire Phone 32GB Fire Phone 32GB Fire OS 3.6.8 / Android 4.2.2 A862W A862W Android 4.1.2 iPad 2 (2nd generation) MC979ZP iOS 7.1 iPad 4 MD513ZP/A iOS 7.1 iPad 4 MD513ZP/A iOS 8.0 iPad Air MD785ZP/A iOS 7.1 iPad Air 2 MGLW2J/A iOS 8.1 iPad Mini MD531ZP iOS 7.1 iPad Mini 2 FE276ZP/A iOS 8.1 iPad Mini 3 MGNV2J/A iOS 8.1 iPhone 3Gs MC132ZP iOS 6.1.3 iPhone 4 MC676LL iOS 7.1.2 iPhone 4 MC603ZP iOS 7.1.2 iPhone 4 MC604ZP iOS 5.1.1 iPhone 4s MD245ZP iOS 8.1 iPhone 4s MD245ZP iOS 8.4.1 iPhone 4s MD245ZP iOS 6.1.2 iPhone 5 MD297ZP iOS 6.0 iPhone 5 MD298ZP/A iOS 8.1 iPhone 5 MD298ZP/A iOS 7.1.1 iPhone 5c MF321ZP/A iOS 7.1.2 iPhone 5c MF321ZP/A iOS 8.1 iPhone 5s MF353ZP/A iOS 8.0 iPhone 5s MF353ZP/A iOS 8.4.1 iPhone 5s MF352ZP/A iOS 7.1.1 iPhone 6 MG492ZP/A iOS 8.1 iPhone 6 MG492ZP/A iOS 9.1 iPhone 6 Plus MGA92ZP/A iOS 9.0 iPhone 6 Plus MGAK2ZP/A iOS 8.0.2 iPhone 6 Plus MGAK2ZP/A iOS 8.1 iPhone 6s MKQK2ZP/A iOS 9.1 iPhone 6s Plus MKU22ZP/A iOS 9.1 iPod touch 4th Generation MD057ZP iOS 5.1.1 iPod touch 4th Generation MC540ZP iOS 5.0.1 iPod touch 5th Generation 16GB MGG72ZP/A iOS 8.1 iPod touch 5th Generation 32GB MD714ZP/A iOS 6.1.3 IS11SH IS11SH Android 2.3.3 IS12SH IS12SH Android 2.3.3 IS13SH IS13SH Android 2.3.5 SH-12C SH-12C Android 2.3.3 SH-13C SH-13C Android 2.3.4 Girls' Popteen F-03D Android 2.3.5 Kiss F-03D Android 2.3.5 X LTE F-05D Android 2.3.5 ME302C ME302C -

Presentación De Powerpoint

Modelos compatibles de Imóvil Versión del sistema operativo o No. Marca Modelo Teléfono superior 1 360 1501_M02 1501_M02 Android 5.1 2 360 N4 1503-M02 Android 6.0 3 100+ 100B 100B Android 4.1.2 4 Acer Iconia Tab A500 Android 4.0.3 5 ALPS (Golden Master) MR6012H1C2W1 MR6012H1C2W1 Android 4.2.2 6 ALPS (Golden Master) PMID705GTV PMID705GTV Android 4.2.2 7 Amazon Fire HD 6 Fire HD 6 Android 4.4.3 8 Amazon Fire Phone 32GB Fire Phone 32GB Android 4.2.2 9 Amoi A862W A862W Android 4.1.2 10 amzn KFFOWI KFFOWI Android 5.1.1 11 Apple iPad 2 (2nd generation) MC979ZP iOS 7.1 12 Apple iPad 4 MD513ZP/A iOS 7.1 13 Apple iPad 4 MD513ZP/A iOS 8.0 14 Apple iPad Air MD785ZP/A iOS 7.1 15 Apple iPad Air 2 MGLW2J/A iOS 8.1 16 Apple iPad Mini MD531ZP iOS 7.1 17 Apple iPad Mini 2 FE276ZP/A iOS 8.1 18 Apple iPad Mini 3 MGNV2J/A iOS 8.1 19 Apple iPad Pro MM172ZP/A iOS 10.3.2 20 Apple iPad Pro 10.5-inch Wi-Fi MQDW2ZP/A iOS 10.3.2 21 Apple iPad Pro 12.9-inch Wi-Fi MQDC2ZP/A iOS 10.3.2 22 Apple iPad Wi-Fi MP2G2ZP/A iOS 10.3.1 23 Apple iPhone 3Gs MC132ZP iOS 6.1.3 24 Apple iPhone 4 MC603ZP iOS 7.1.2 25 Apple iPhone 4 MC604ZP iOS 5.1.1 26 Apple iPhone 4 MC676LL iOS 7.1.2 27 Apple iPhone 4s MD245ZP iOS 6.1.2 28 Apple iPhone 4s MD245ZP iOS 8.1 29 Apple iPhone 4s MD245ZP iOS 8.4.1 30 Apple iPhone 5 MD297ZP iOS 6.0 31 Apple iPhone 5 MD298ZP/A iOS 7.1.1 32 Apple iPhone 5 MD298ZP/A iOS 8.1 33 Apple iPhone 5c MF321ZP/A iOS 7.1.2 34 Apple iPhone 5c MF321ZP/A iOS 8.1 35 Apple iPhone 5s MF352ZP/A iOS 7.1.1 36 Apple iPhone 5s MF353ZP/A iOS 8.0 37 Apple iPhone 5s MF353ZP/A iOS