A Class of Multivariate Skew Distributions: Properties and Inferential Issues

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Supplementary Materials

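Int. J. Environ. Res. Public Health 2018, 15, 2042. Supplementary Materials.

S1) Re-parametrization of log-Laplace distribution. The three-parameter log-Laplace distribution, $LL(\delta, a, b)$, of relative risk, $\mu$, is given by the probability density function

$$
f(\mu) = \frac{1}{\delta}\,\frac{ab}{a+b}
\begin{cases}
\left(\dfrac{\mu}{\delta}\right)^{b-1}, & 0 < \mu < \delta,\\[1ex]
\left(\dfrac{\delta}{\mu}\right)^{a+1}, & \mu \ge \delta.
\end{cases}
$$

Let $b = (1-\tau)/\sigma$ and $a = \tau/\sigma$. Hence, with $\delta = \exp(\mu_\tau)$, the probability density function of $LL(\mu_\tau, \tau, \sigma)$ can be rewritten as

$$
\begin{aligned}
f(\mu) &= \frac{1}{\mu}\,\frac{ab}{a+b}
\begin{cases}
\left(\dfrac{\mu}{\delta}\right)^{b}, & 0 < \mu < \delta = \exp(\mu_\tau),\\[1ex]
\left(\dfrac{\delta}{\mu}\right)^{a}, & \mu \ge \delta = \exp(\mu_\tau)
\end{cases}\\
&= \frac{1}{\mu}\,\frac{ab}{a+b}
\begin{cases}
\exp\bigl(b(\log\mu - \mu_\tau)\bigr), & \log\mu < \mu_\tau,\\
\exp\bigl(a(\mu_\tau - \log\mu)\bigr), & \log\mu \ge \mu_\tau
\end{cases}\\
&= \frac{1}{\mu}\,\frac{ab}{a+b}
\begin{cases}
\exp\bigl(-b\,|\log\mu - \mu_\tau|\bigr), & \log\mu < \mu_\tau,\\
\exp\bigl(-a\,|\mu_\tau - \log\mu|\bigr), & \log\mu \ge \mu_\tau
\end{cases}\\
&= \frac{1}{\mu}\,\frac{\tau(1-\tau)}{\sigma}
\begin{cases}
\exp\!\left(-(1-\tau)\,\dfrac{|\log\mu - \mu_\tau|}{\sigma}\right), & \log\mu < \mu_\tau,\\[1ex]
\exp\!\left(-\tau\,\dfrac{|\log\mu - \mu_\tau|}{\sigma}\right), & \log\mu \ge \mu_\tau.
\end{cases}
\end{aligned}
$$

S2) Bayesian quantile estimation. Let $Y_i$ be the continuous response variable for observation $i$ and $X_i$ be the corresponding vector of covariates, with the first element equal to one. At a given quantile level $\tau \in (0,1)$, the $\tau$th conditional quantile of $Y_i$ given $X_i$ is then $Q_\tau(Y_i \mid X_i) = X_i^T \beta(\tau)$, where $Q_\tau(Y_i \mid X_i)$ is the $\tau$th conditional quantile and $\beta(\tau)$ is a vector of quantile coefficients. In contrast to mean regression, which generally aims to minimize the squared loss function, quantile regression links to a special class of check or loss function. The $\tau$th conditional quantile can be estimated by any solution, $\hat\beta(\tau)$, such that

$$
\hat\beta(\tau) = \arg\min_{\beta} \sum_i \rho_\tau\bigl(Y_i - X_i^T \beta(\tau)\bigr),
$$

where $\rho_\tau(z) = z\,(\tau - I(z < 0))$ is the quantile loss function given in (1) and $I(\cdot)$ is the indicator function.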
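The check loss in S2 can be illustrated in the simplest setting, an intercept-only model with no covariates, where minimizing the summed loss returns a sample quantile. The following is a minimal sketch under that assumption; the function names and the toy data are hypothetical and are not taken from the supplementary materials.

```python
def check_loss(z, tau):
    """Quantile (check) loss: rho_tau(z) = z * (tau - I(z < 0))."""
    return z * (tau - (1.0 if z < 0 else 0.0))


def sample_quantile_by_check_loss(ys, tau):
    """Return an observation that minimizes the summed check loss.

    The summed loss is piecewise linear and convex in q, so a minimizer
    can always be found among the observations; scanning the sample is
    enough for a small illustration.
    """
    return min(ys, key=lambda q: sum(check_loss(y - q, tau) for y in ys))


# Toy data, chosen only for illustration.
ys = [2.0, 7.0, 1.0, 9.0, 4.0]
median = sample_quantile_by_check_loss(ys, 0.5)   # tau = 0.5 targets the median
upper = sample_quantile_by_check_loss(ys, 0.9)    # tau = 0.9 targets the upper tail
```

With covariates, the same loss is minimized over the coefficient vector rather than over a single number, which typically requires a linear-programming or MCMC routine rather than a scan.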

On the Scale Parameter of Exponential Distribution

Review of the Air Force Academy No.2 (34)/2017. ON THE SCALE PARAMETER OF EXPONENTIAL DISTRIBUTION. Anca Ileana LUPAŞ, Military Technical Academy, Bucharest, Romania ([email protected]). DOI: 10.19062/1842-9238.2017.15.2.16

Abstract: Exponential distribution is one of the widely used continuous distributions in various fields for statistical applications. In this paper we study the exact and asymptotic distribution of the scale parameter for this distribution. We will also define the confidence intervals for the studied parameter as well as the fixed length confidence intervals.

1. INTRODUCTION. Exponential distribution is used in various statistical applications. Therefore, we often encounter exponential distribution in applications such as life tables, reliability studies, extreme value analysis and others. In the following paper, we focus our attention on the exact and asymptotic distribution of the exponential distribution scale parameter estimator.

2. SCALE PARAMETER ESTIMATOR OF THE EXPONENTIAL DISTRIBUTION. We will consider the random variable $X$ with the following cumulative distribution function:

$$F(x;\theta) = 1 - e^{-x/\theta} \quad (x \ge 0,\ \theta > 0) \tag{1}$$

where $\theta$ is an unknown scale parameter. Using the relationships $M(X) = \theta$, $\sigma^2(X) = \theta^2$, $\sigma(X) = \theta$, we obtain a theoretical coefficient of variation $\sigma(X)/M(X) = 1$. This is a useful indicator, especially if you have observational data which seem to be exponential and have a sample coefficient of variation close to 1. If we consider $x_1, x_2, \ldots, x_n$ as a sample from a population that follows an exponential distribution, then by using the maximum likelihood estimation method we obtain the following estimate:

$$\hat\theta = \frac{1}{n}\sum_{i=1}^{n} x_i \tag{2}$$

Since $M(\hat\theta) = \theta$, it follows that $\hat\theta$ is an unbiased estimator for $\theta$.
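The estimate in (2) and the coefficient-of-variation check can be tried on simulated data. This is a small sketch, not the paper's code: the symbol `theta` for the scale parameter, the seed, the sample size and the function names are all assumptions made for illustration.

```python
import random
import statistics


def scale_mle(xs):
    """Maximum likelihood estimate of the exponential scale: the sample mean."""
    return sum(xs) / len(xs)


def coefficient_of_variation(xs):
    """Sample standard deviation divided by the sample mean."""
    return statistics.stdev(xs) / statistics.mean(xs)


rng = random.Random(0)   # fixed seed, chosen arbitrarily for reproducibility
theta = 2.0              # hypothetical true scale parameter

# random.expovariate takes the rate, i.e. 1 / scale.
sample = [rng.expovariate(1.0 / theta) for _ in range(20000)]

theta_hat = scale_mle(sample)            # should land near theta
cv = coefficient_of_variation(sample)    # should land near 1 for exponential data
```

A coefficient of variation far from 1 on real data would be a quick hint that the exponential model is a poor fit.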

Estimation of Common Location and Scale Parameters in Nonregular Cases Ahmad Razmpour Iowa State University

Iowa State University, Retrospective Theses and Dissertations, 1982. Estimation of common location and scale parameters in nonregular cases. Ahmad Razmpour, Iowa State University. Recommended citation: Razmpour, Ahmad, "Estimation of common location and scale parameters in nonregular cases" (1982). Retrospective Theses and Dissertations. 7528. https://lib.dr.iastate.edu/rtd/7528

A Comparison of Unbiased and Plotting-Position Estimators of L Moments

WATER RESOURCES RESEARCH, VOL. 31, NO. 8, PAGES 2019-2025, AUGUST 1995. A comparison of unbiased and plotting-position estimators of L moments. J. R. M. Hosking and J. R. Wallis, IBM Research Division, T. J. Watson Research Center, Yorktown Heights, New York.

Abstract. Plotting-position estimators of L moments and L moment ratios have several disadvantages compared with the "unbiased" estimators. For general use, the "unbiased" estimators should be preferred. Plotting-position estimators may still be useful for estimating extreme upper tail quantiles in regional frequency analysis.

Probability-Weighted Moments and L Moments. Probability-weighted moments of a random variable $X$ with cumulative distribution function $F(\cdot)$ and quantile function $x(\cdot)$ were defined by Greenwood et al. [1979] to be the quantities

$$M_{p,r,s} = E\bigl[X^p \{F(X)\}^r \{1 - F(X)\}^s\bigr] = \int_0^1 \{x(u)\}^p\, u^r (1-u)^s \, du.$$

Particularly useful special cases are the probability-weighted moments

$$\alpha_r = M_{1,0,r} = \int_0^1 (1-u)^r x(u)\, du, \qquad \beta_r = M_{1,r,0} = \int_0^1 u^r x(u)\, du.$$

L moments are linear combinations of these quantities:

$$\lambda_{r+1} = (-1)^r \sum_{k=0}^{r} p^*_{r,k}\,\alpha_k = \sum_{k=0}^{r} p^*_{r,k}\,\beta_k.$$

It is convenient to define dimensionless versions of L moments; this is achieved by dividing the higher-order L moments by the scale measure $\lambda_2$. The L moment ratios $\tau_r$, $r = 3, 4, \ldots$, are defined by

$$\tau_r = \lambda_r / \lambda_2.$$

L moment ratios measure the shape of a distribution independently of its scale of measurement. The ratios $\tau_3$ ("L skewness") and $\tau_4$ ("L kurtosis") are now widely used as measures of skewness and kurtosis, respectively [e.g., Schaefer, 1990; Pilon and Adamowski, 1992; Royston, 1992; Stedinger et al., 1992; Vogel and Fennessey, 1993].

Estimators. Given an ordered sample of size $n$, $X_{1:n} \le X_{2:n} \le \cdots \le X_{n:n}$, there are two established ways of estimating the probability-weighted moments and L moments of the distribution from which the sample was drawn.
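The excerpt stops before the estimators themselves are written out. As an illustration only, the sketch below uses the standard unbiased sample probability-weighted moments, $b_r = n^{-1}\sum_{j}\frac{(j-1)\cdots(j-r)}{(n-1)\cdots(n-r)}\,x_{j:n}$, and the usual combinations giving the first four sample L moments. These textbook formulas are assumed here rather than quoted from the excerpt, and the function names are hypothetical.

```python
def unbiased_pwm(xs, r):
    """Unbiased estimator b_r of beta_r = E[X {F(X)}^r]; requires len(xs) > r."""
    x = sorted(xs)
    n = len(x)
    total = 0.0
    for j, xj in enumerate(x, start=1):
        weight = 1.0
        for k in range(1, r + 1):
            weight *= (j - k) / (n - k)   # zero whenever j <= r
        total += weight * xj
    return total / n


def sample_l_moments(xs):
    """First four sample L moments built from b_0, ..., b_3."""
    b0, b1, b2, b3 = (unbiased_pwm(xs, r) for r in range(4))
    l1 = b0
    l2 = 2 * b1 - b0
    l3 = 6 * b2 - 6 * b1 + b0
    l4 = 20 * b3 - 30 * b2 + 12 * b1 - b0
    return l1, l2, l3, l4


# Evenly spaced toy sample: symmetric, so the third L moment should vanish.
l1, l2, l3, l4 = sample_l_moments([1.0, 2.0, 3.0, 4.0, 5.0])
l_skewness = l3 / l2
l_kurtosis = l4 / l2
```

Dividing by `l2` gives the sample analogues of the ratios defined in the text, so the shape summaries do not depend on the measurement scale.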

A Study of Non-Central Skew T Distributions and Their Applications in Data Analysis and Change Point Detection

A STUDY OF NON-CENTRAL SKEW T DISTRIBUTIONS AND THEIR APPLICATIONS IN DATA ANALYSIS AND CHANGE POINT DETECTION. Abeer M. Hasan. A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY, August 2013. Committee: Arjun K. Gupta, Co-advisor; Wei Ning, Advisor; Mark Earley, Graduate Faculty Representative; Junfeng Shang. Copyright © August 2013 Abeer M. Hasan. All rights reserved.

ABSTRACT. Over the past three decades there has been a growing interest in searching for distribution families that are suitable to analyze skewed data with excess kurtosis. The search started with numerous papers on the skew normal distribution. Multivariate t distributions started to catch attention shortly after the development of the multivariate skew normal distribution. Many researchers proposed alternative methods to generalize the univariate t distribution to the multivariate case. Recently, skew t distributions have started to become popular in research. Skew t distributions provide more flexibility and better ability to accommodate long-tailed data than skew normal distributions. In this dissertation, a new non-central skew t distribution is studied and its theoretical properties are explored. Applications of the proposed non-central skew t distribution in data analysis and model comparisons are studied. An extension of our distribution to the multivariate case is presented and properties of the multivariate non-central skew t distribution are discussed. We also discuss the distribution of quadratic forms of the non-central skew t distribution. In the last chapter, the change point problem of the non-central skew t distribution is discussed under different settings.

The Asymmetric T-Copula with Individual Degrees of Freedom

The Asymmetric t-Copula with Individual Degrees of Freedom. d-fine GmbH, Christ Church, University of Oxford. A thesis submitted for the degree of MSc in Mathematical Finance, Michaelmas 2012.

Abstract. This thesis investigates asymmetric dependence structures of multivariate asset returns. Evidence of such asymmetry for equity returns has been reported in the literature. In order to model the dependence structure, a new t-copula approach is proposed called the skewed t-copula with individual degrees of freedom (SID t-copula). This copula provides the flexibility to assign an individual degree-of-freedom parameter and an individual skewness parameter to each asset in a multivariate setting. Applying this approach to GARCH residuals of bivariate equity index return data and using maximum likelihood estimation, we find significant asymmetry. By means of the Akaike information criterion, it is demonstrated that the SID t-copula provides the best model for the market data compared to other copula approaches without explicit asymmetry parameters. In addition, it yields a better fit than the conventional skewed t-copula with a single degree-of-freedom parameter. In a model impact study, we analyse the errors which can occur when modelling asymmetric multivariate SID-t returns with the symmetric multivariate Gauss or standard t-distribution. The comparison is done in terms of the risk measures value-at-risk and expected shortfall. We find large deviations between the modelled and the true VaR/ES of a spread position composed of asymmetrically distributed risk factors. Going from the bivariate case to a larger number of risk factors, the model errors increase.

On the Meaning and Use of Kurtosis

Psychological Methods, 1997, Vol. 2, No. 3, 292-307. Copyright 1997 by the American Psychological Association, Inc. 1082-989X/97/\$3.00. On the Meaning and Use of Kurtosis. Lawrence T. DeCarlo, Fordham University.

For symmetric unimodal distributions, positive kurtosis indicates heavy tails and peakedness relative to the normal distribution, whereas negative kurtosis indicates light tails and flatness. Many textbooks, however, describe or illustrate kurtosis incompletely or incorrectly. In this article, kurtosis is illustrated with well-known distributions, and aspects of its interpretation and misinterpretation are discussed. The role of kurtosis in testing univariate and multivariate normality; as a measure of departures from normality; in issues of robustness, outliers, and bimodality; in generalized tests and estimators, as well as limitations of and alternatives to the kurtosis measure β₂, are discussed.

It is typically noted in introductory statistics courses that distributions can be characterized in terms of central tendency, variability, and shape. With respect to shape, virtually every textbook defines and illustrates skewness. On the other hand, another aspect of shape, which is kurtosis, is either not discussed or, worse yet, is often described or illustrated incorrectly. Kurtosis is also frequently not reported in research articles, in spite of the fact that virtually every statistical package provides a measure of kurtosis.

... standard deviation. The normal distribution has a kurtosis of 3, and β₂ − 3 is often used so that the reference normal distribution has a kurtosis of zero (β₂ − 3 is sometimes denoted as γ₂). A sample counterpart to β₂ can be obtained by replacing the population moments with the sample moments, which gives

$$b_2 = \frac{\sum (X_i - \bar X)^4 / n}{\bigl(\sum (X_i - \bar X)^2 / n\bigr)^2}.$$
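The sample counterpart b₂ described above is short enough to compute directly. The sketch below follows the moment formula in the excerpt (dividing by n, not n − 1); the function names and the toy data are hypothetical.

```python
def sample_kurtosis(xs):
    """b2: fourth sample central moment over the squared second one (divisor n)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m4 / m2 ** 2


def excess_kurtosis(xs):
    """b2 - 3, so that the normal reference value is zero."""
    return sample_kurtosis(xs) - 3.0


# Evenly spaced toy data: flatter than a normal sample, so b2 falls below 3.
b2 = sample_kurtosis([1.0, 2.0, 3.0, 4.0, 5.0])
g2 = excess_kurtosis([1.0, 2.0, 3.0, 4.0, 5.0])
```

Statistical packages often apply small-sample corrections to this quantity, so their reported kurtosis may differ slightly from the plain moment ratio used here.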

A Tail Quantile Approximation Formula for the Student T and the Symmetric Generalized Hyperbolic Distribution

Schlüter, Stephan; Fischer, Matthias J. (2009): A tail quantile approximation formula for the student t and the symmetric generalized hyperbolic distribution, IWQW Discussion Papers, No. 05/2009, Friedrich-Alexander-Universität Erlangen-Nürnberg, Institut für Wirtschaftspolitik und Quantitative Wirtschaftsforschung (IWQW), Nürnberg. Working paper, provided in cooperation with Friedrich-Alexander University Erlangen-Nuremberg, Institute for Economics. This version is available at: http://hdl.handle.net/10419/29554

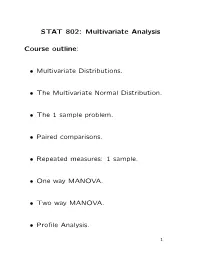

STAT 802: Multivariate Analysis Course Outline

Course outline:

- Multivariate Distributions.
- The Multivariate Normal Distribution.
- The 1 sample problem.
- Paired comparisons.
- Repeated measures: 1 sample.
- One way MANOVA.
- Two way MANOVA.
- Profile Analysis.
- Multivariate Multiple Regression.
- Discriminant Analysis.
- Clustering.
- Principal Components.
- Factor analysis.
- Canonical Correlations.

Basic structure of typical multivariate data set: case by variables: data in matrix. Each row is a case, each column is a variable.

Example: Fisher's iris data: 5 rows of 150 by 5 matrix:

| Case # | Variety | Sepal Length | Sepal Width | Petal Length | Petal Width |
|---|---|---|---|---|---|
| 1 | Setosa | 5.1 | 3.5 | 1.4 | 0.2 |
| 2 | Setosa | 4.9 | 3.0 | 1.4 | 0.2 |
| ⋮ | | | | | |
| 51 | Versicolor | 7.0 | 3.2 | 4.7 | 1.4 |
| ⋮ | | | | | |

Usual model: rows of data matrix are independent random variables.

Vector valued random variable: function $X : \Omega \mapsto \mathbb{R}^p$ such that, writing $X = (X_1, \ldots, X_p)^T$,

$$P(X_1 \le x_1, \ldots, X_p \le x_p)$$

is defined for any constants $(x_1, \ldots, x_p)$.

Cumulative Distribution Function (CDF) of $X$: function $F_X$ on $\mathbb{R}^p$ defined by

$$F_X(x_1, \ldots, x_p) = P(X_1 \le x_1, \ldots, X_p \le x_p).$$

Defn: Distribution of rv $X$ is absolutely continuous if there is a function $f$ such that

$$P(X \in A) = \int_A f(x)\,dx \tag{1}$$

for any (Borel) set $A$. This is a $p$ dimensional integral in general. Equivalently

$$F(x_1, \ldots, x_p) = \int_{-\infty}^{x_1} \cdots \int_{-\infty}^{x_p} f(y_1, \ldots, y_p)\, dy_p \cdots dy_1.$$

Defn: Any $f$ satisfying (1) is a density of $X$. For most $x$, $F$ is differentiable at $x$ and

$$\frac{\partial^p F(x)}{\partial x_1 \cdots \partial x_p} = f(x).$$

Building Multivariate Models. Basic tactic: specify density of $X = (X_1, \ldots, X_p)^T$. Tools: marginal densities, conditional densities, independence, transformation.
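The relation between the CDF and the density (the mixed partial derivative of F recovers f) can be checked numerically in a simple case. The sketch below assumes p = 2 with independent standard normal components, which is an example chosen here and not one from the course notes; the function names, the evaluation point and the step size are hypothetical.

```python
import math


def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def Phi(x):
    """Standard normal CDF, written with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def cdf(x1, x2):
    """Joint CDF of two independent standard normals."""
    return Phi(x1) * Phi(x2)


def density(x1, x2):
    """Joint density of two independent standard normals."""
    return phi(x1) * phi(x2)


def mixed_partial(F, x1, x2, h=1e-3):
    """Central finite-difference estimate of d^2 F / (dx1 dx2)."""
    return (F(x1 + h, x2 + h) - F(x1 + h, x2 - h)
            - F(x1 - h, x2 + h) + F(x1 - h, x2 - h)) / (4.0 * h * h)


approx = mixed_partial(cdf, 0.3, -0.5)   # derivative of the CDF
exact = density(0.3, -0.5)               # the density it should match
```

The two values agree up to the finite-difference error, which shrinks with the square of the step size until rounding error takes over.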

Asymmetric Generalizations of Symmetric Univariate Probability Distributions Obtained Through Quantile Splicing

Asymmetric generalizations of symmetric univariate probability distributions obtained through quantile splicing. Brenda V. Mac’Oduol (a, *), Paul J. van Staden (a) and Robert A. R. King (b). (a) Department of Statistics, University of Pretoria, Pretoria, South Africa; (b) School of Mathematical and Physical Sciences, University of Newcastle, Callaghan, Australia. (*) Correspondence to: Brenda V. Mac’Oduol; Department of Statistics, University of Pretoria, Pretoria, South Africa. email: [email protected]

Abstract. Balakrishnan et al. proposed a two-piece skew logistic distribution by making use of the cumulative distribution function (CDF) of half distributions as the building block, to give rise to an asymmetric family of two-piece distributions, through the inclusion of a single shape parameter. This paper proposes the construction of asymmetric families of two-piece distributions by making use of quantile functions of symmetric distributions as building blocks. This proposition will enable the derivation of a general formula for the L-moments of two-piece distributions. Examples will be presented, where the logistic, normal, Student’s t(2) and hyperbolic secant distributions are considered.

Keywords: Two-piece; Half distributions; Quantile functions; L-moments

1. Introduction. Balakrishnan, Dai, and Liu (2017) introduced a skew logistic distribution as an alternative to the model proposed by van Staden and King (2015). It proposed taking the half distribution to the right of the location parameter and joining it to the half distribution to the left of the location parameter which has an inclusion of a single shape parameter, a > 0. The methodology made use of the cumulative distribution functions (CDFs) of the parent distributions to obtain the two-piece distribution.

A Multivariate Student's T-Distribution

Open Journal of Statistics, 2016, 6, 443-450. Published Online June 2016 in SciRes. http://www.scirp.org/journal/ojs http://dx.doi.org/10.4236/ojs.2016.63040. A Multivariate Student’s t-Distribution. Daniel T. Cassidy, Department of Engineering Physics, McMaster University, Hamilton, ON, Canada. Received 29 March 2016; accepted 14 June 2016; published 17 June 2016. Copyright © 2016 by author and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY). http://creativecommons.org/licenses/by/4.0/

Abstract. A multivariate Student’s t-distribution is derived by analogy to the derivation of a multivariate normal (Gaussian) probability density function. This multivariate Student’s t-distribution can have different shape parameters $\nu_i$ for the marginal probability density functions of the multivariate distribution. Expressions for the probability density function, for the variances, and for the covariances of the multivariate t-distribution with arbitrary shape parameters for the marginals are given.

Keywords: Multivariate Student’s t, Variance, Covariance, Arbitrary Shape Parameters

1. Introduction. An expression for a multivariate Student’s t-distribution is presented. This expression, which is different in form than the form that is commonly used, allows the shape parameter $\nu$ for each marginal probability density function (pdf) of the multivariate pdf to be different. The form that is typically used is [1]

$$\frac{\Gamma\bigl((\nu+n)/2\bigr)}{\Gamma(\nu/2)\,(\nu\pi)^{n/2}\,|\Sigma|^{1/2}} \left(1 + \frac{1}{\nu}[x]^T \Sigma^{-1} [x]\right)^{-(\nu+n)/2} \tag{1}$$

This “typical” form attempts to generalize the univariate Student’s t-distribution and is valid when the $n$ marginal distributions have the same shape parameter $\nu$.
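The "typical" equal-shape-parameter form in (1) can be evaluated directly. The sketch below assumes the standard multivariate t density with a zero location vector and restricts to n = 2 so that the matrix inverse can be written by hand; it does not implement the paper's generalization with different $\nu_i$. The function name and arguments are hypothetical.

```python
import math


def mvt_pdf_2d(x, sigma, nu):
    """Bivariate Student t density with scale matrix sigma and shape nu.

    Standard equal-nu form, zero location; sigma is a 2x2 nested sequence
    assumed symmetric and positive definite.
    """
    n = 2
    (a, b), (c, d) = sigma
    det = a * d - b * c
    # Quadratic form x^T sigma^{-1} x using the closed-form 2x2 inverse.
    q = (d * x[0] * x[0] - (b + c) * x[0] * x[1] + a * x[1] * x[1]) / det
    log_norm = (math.lgamma((nu + n) / 2.0) - math.lgamma(nu / 2.0)
                - (n / 2.0) * math.log(nu * math.pi) - 0.5 * math.log(det))
    return math.exp(log_norm - (nu + n) / 2.0 * math.log1p(q / nu))


identity = ((1.0, 0.0), (0.0, 1.0))
at_origin = mvt_pdf_2d((0.0, 0.0), identity, nu=3.0)
near_gaussian = mvt_pdf_2d((1.0, 0.0), identity, nu=1e6)
```

With an identity scale matrix the value at the origin is 1/(2π) for every ν, and for very large ν the density approaches the bivariate normal, which gives two quick sanity checks.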

A Comparison Between Skew-Logistic and Skew-Normal Distributions

MATEMATIKA, 2015, Volume 31, Number 1, 15–24. © UTM Centre for Industrial and Applied Mathematics. A Comparison Between Skew-logistic and Skew-normal Distributions. Ramin Kazemi and Monireh Noorizadeh, Department of Statistics, Imam Khomeini International University, Qazvin, Iran. e-mail: [email protected]

Abstract. Skew distributions are reasonable models for describing claims in property-liability insurance. We consider two well-known datasets from actuarial science and fit skew-normal and skew-logistic distributions to these datasets. We find that the skew-logistic distribution is reasonably competitive compared to skew-normal in the literature when describing insurance data. The value at risk and tail value at risk are estimated for the dataset under consideration. Also, we compare the skew distributions via Kolmogorov-Smirnov goodness-of-fit test, log-likelihood criteria and AIC.

Keywords: Skew-logistic distribution; skew-normal distribution; value at risk; tail value at risk; log-likelihood criteria; AIC; Kolmogorov-Smirnov goodness-of-fit test.

2010 Mathematics Subject Classification: 62P05, 91B30

1 Introduction. Fitting an adequate distribution to real data sets is a relevant problem and not an easy task in actuarial literature, mainly due to the nature of the data, which shows several features to be accounted for. Eling [1] showed that the skew-normal and the skew-Student t distributions are reasonably competitive compared to some models when describing insurance data. Bolance et al. [2] provided strong empirical evidence in favor of the use of the skew-normal, and log-skew-normal distributions to model bivariate claims data from the Spanish motor insurance industry.