Constructing Automated Test Oracle for Low Observable Software

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Investigation of Dispersion Influence on the Chirp Microwave Generation Using Microwave Photonic Link Without Optical Filter

Scientia Iranica D (2018) 25(6), 3584-3590. Sharif University of Technology, Scientia Iranica, Transactions D: Computer Science & Engineering and Electrical Engineering. http://scientiairanica.sharif.edu

Investigation of dispersion influence on the chirp microwave generation using microwave photonic link without optical filter. M. Singh (a) and S. Kumar Raghuwanshi (b). a. Department of Electronics & Communication Engineering, Photonic Research Laboratory (PRL), Indian Institute of Technology, Roorkee 247667, Uttarakhand, India. b. Department of Electronics Engineering, Indian Institute of Technology (Indian School of Mines), Dhanbad 826004, Jharkhand, India. Received 16 February 2016; received in revised form 1 October 2016; accepted 17 December 2016.

Keywords: RF photonics; Triple Parallel-Intensity Modulators (TP-IM); Fibre dispersion; RoF; Optical sideband suppression ratio (OPSSR).

Abstract. In this work, we investigate the influence of the second-order (2OD) and third-order (3OD) dispersion terms on chirp signal generation and transmission through RF photonic link without any optical filter. Dispersion equations are formalised using Taylor series and Bessel function to study the link performance. Our result (eye diagrams) shows that 2OD+3OD has a significant impact on chirp mm-wave propagation through fibres of different lengths. In this paper, chirp mm signal is controlled at photo detector by individual phase term of external modulators. Moreover, we experimentally demonstrate that the chirp rate can be significantly controlled by properly choosing the type of fibre in the experiments. We discuss the RF photonic link performance in terms of optical sideband suppression ratio (OPSSR), Radio Frequency Spurious Suppression Ratio (RFSSR), and Bit Error Rate (BER). Theoretical results are verified using MATLAB Software.

Nanoporous Metal-Organic Framework Cu2(BDC)2(DABCO) As an Efficient Heterogeneous Catalyst for One-Pot Facile Synthesis of 1,2,3-T

Scientia Iranica C (2019) 26(3), 1485-1496. Sharif University of Technology, Scientia Iranica, Transactions C: Chemistry and Chemical Engineering. http://scientiairanica.sharif.edu

Nanoporous metal-organic framework Cu2(BDC)2(DABCO) as an efficient heterogeneous catalyst for one-pot facile synthesis of 1,2,3-triazole derivatives in ethanol: Evaluating antimicrobial activity of the novel derivatives. H. Tourani, M.R. Naimi-Jamal, L. Panahi, and M.G. Dekamin. Research Laboratory of Green Organic Synthesis & Polymers, Department of Chemistry, Iran University of Science and Technology, Tehran, P.O. Box 16846-13114, I.R. Iran. Received 6 April 2018; received in revised form 11 August 2018; accepted 31 December 2018.

Keywords: Triazoles; Heterogeneous catalysis; Cu-MOF; Click chemistry; Antimicrobial.

Abstract. Solvent-free ball-milling synthesized porous metal-organic framework Cu2(BDC)2(DABCO) (BDC: benzene-1,4-dicarboxylic acid, DABCO: 1,4-diazabicyclo[2.2.2]octane) has been proved to be a practical catalyst for facile and convenient synthesis of 1,2,3-triazole derivatives via multicomponent reaction of terminal alkynes, benzyl or alkyl halides, and sodium azide in ethanol. Avoidance of usage and handling of hazardous organic azides, using ethanol as an easily available solvent, and simple preparation and recycling of the catalyst make this procedure a truly scale-up-able one. The high loading of copper ions in the catalyst leads to efficient catalytic activity and hence, its low-weight usage in reaction. The catalyst was recycled and reused several times without significant loss of its activity. Furthermore, novel derivatives were examined to investigate their potential antimicrobial activity via microdilution method.

Tb/S All-Optical Nonlinear Switching Using SOA Based Mach-Zehnder Interferometer

Scientia Iranica D (2014) 21(3), 843-852. Sharif University of Technology, Scientia Iranica, Transactions D: Computer Science & Engineering and Electrical Engineering. www.scientiairanica.com

Tb/s all-optical nonlinear switching using SOA based Mach-Zehnder interferometer. Y. Khorrami (a), V. Ahmadi (a) and M. Razaghi (b). a. Department of Electrical and Computer Engineering, Tarbiat Modares University, Tehran, P.O. Box 14115-143, Iran. b. Department of Electrical and Computer Engineering, University of Kurdistan, Sanandaj, P.O. Box 416, Iran. Received 23 June 2012; received in revised form 29 October 2012; accepted 6 May 2013.

Keywords: Ultrafast nonlinear optics; All-optical devices; Switching; Chirping; Mach-Zehnder interferometer; Semiconductor optical amplifiers.

Abstract. A new method for increasing the speed of all-optical Mach-Zehnder Interferometric (MZI) switching with a bulk Semiconductor Optical Amplifier (SOA), using chirped control signals, is suggested and theoretically analyzed. For 125 fs input and chirped control pulses, we show acceleration of the gain recovery process using the cross phase modulation (XPM) effect. Our method depicts that Tb/s switching speeds, using bulk SOA-MZI with a proper Q-factor, is feasible. For the first time, we reach operation capability at 2 Tb/s with a Q-factor of more than 10. The new scheme also improves the extinction and amplitude ratio of the output power, as well as increasing the contrast ratio of the switched signal. We use a finite difference beam propagation method for MZI analysis, taking into account all nonlinear effects of SOA, such as Group Velocity Dispersion (GVD), Kerr effect, Two Photon Absorption (TPA), Carrier Heating (CH) and Spectral Hole Burning (SHB).

An Effective Approach to Structural Damage Localization in Flexural Members Based on Generalized S-Transform

Scientia Iranica A (2019) 26(6), 3125-3139. Sharif University of Technology, Scientia Iranica, Transactions A: Civil Engineering. http://scientiairanica.sharif.edu

An effective approach to structural damage localization in flexural members based on generalized S-transform. H. Amini Tehrani (a), A. Bakhshi (a), and M. Akhavat (b). a. Department of Civil Engineering, Sharif University of Technology, Tehran, P.O. Box 11155-9313 Tehran, Iran. b. School of Civil Engineering, Iran University of Science & Technology, Tehran, Iran. Received 7 February 2017; received in revised form 22 October 2017; accepted 23 December 2017.

Keywords: Damage localization; Signal processing; Generalized S-transform; Time-frequency representation; Non-stationary signals.

Abstract. This paper presents a method for structural damage localization based on signal processing using generalized S-transform (S_GS). The S-transform is a combination of the properties of the Short-Time Fourier Transform (STFT) and Wavelet Transform (WT) that has been developed over the last few years in an attempt to overcome inherent limitations of the wavelet and short-time Fourier transform in time-frequency representation of non-stationary signals. The generalized type of this transform is the S_GS-transform that is characterized by an adjustable Gaussian window width in the time-frequency representation of signals. In this research, the S_GS-transform was employed due to its favorable performance in the detection of the structural damages. The performance of the proposed method was verified by means of three numerical examples and, also, the experimental data obtained from the vibration test of 8-DOFs mass-stiffness system. By means of a comparison between damage location obtained by the proposed method and the simulation model, it was concluded that the method was sensitive to the damage existence and clearly demonstrated the damage location.

A New Algorithm for the Computation of the Decimals of the Inverse

Scientia Iranica D (2017) 24(3), 1363-1372. Sharif University of Technology, Scientia Iranica, Transactions D: Computer Science & Engineering and Electrical Engineering. www.scientiairanica.com

A new algorithm for the computation of the decimals of the inverse. P. Saha (a) and D. Kumar (b). a. Department of Electronics and Communication Engineering, National Institute of Technology Meghalaya, Meghalaya-793003, Shillong, India. b. Department of Computer Science and Engineering, National Institute of Technology Meghalaya, Meghalaya-793003, Shillong, India. Received 3 November 2014; received in revised form 4 December 2015; accepted 27 February 2016.

Keywords: Algorithm; Arithmetic; Decimal inverse; T-Spice; Propagation delay; Ancient mathematics.

Abstract. Ancient mathematical formulae can be directly applied to the optimization of the algebraic computation. A new algorithm used to compute decimals of the inverse based on such ancient mathematics is reported in this paper. Sahayaks (auxiliary fraction) sutra has been used for the hardware implementation of the decimals of the inverse. On account of the ancient formulae, reciprocal approximation of numbers can generate "on the fly" either the first exact n decimal of inverse, n being either arbitrary large or at least 6 in almost all cases. The reported algorithm has been implemented, and functionality has been checked in T-Spice. Performance parameters, like propagation delay and dynamic switching power consumptions, are calculated through spice-spectre of 90 nm CMOS technology. The propagation delay of the resulting 4-digit reciprocal approximation algorithm was only 1.8 µs and consumed 24.7 mW power. The implementation methodology offered substantial reduction of propagation delay and dynamic switching power consumption from its counterpart (NR) based implementation.

International Journal of Engineering and Advanced Technology (IJEAT)

International Journal of Engineering and Advanced Technology. ISSN: 2249-8958. Website: www.ijeat.org. Volume-2 Issue-5, June 2013. Published by: Blue Eyes Intelligence Engineering and Sciences Publication Pvt. Ltd. Exploring Innovation.

Editor In Chief
Dr. Shiv K Sahu, Ph.D. (CSE), M.Tech. (IT, Honors), B.Tech. (IT), Director, Blue Eyes Intelligence Engineering & Sciences Publication Pvt. Ltd., Bhopal (M.P.), India
Dr. Shachi Sahu, Ph.D. (Chemistry), M.Sc. (Organic Chemistry), Additional Director, Blue Eyes Intelligence Engineering & Sciences Publication Pvt. Ltd., Bhopal (M.P.), India

Vice Editor In Chief
Dr. Vahid Nourani, Professor, Faculty of Civil Engineering, University of Tabriz, Iran
Prof. (Dr.) Anuranjan Misra, Professor & Head, Computer Science & Engineering and Information Technology & Engineering, Noida International University, Noida (U.P.), India

Chief Advisory Board
Prof. (Dr.) Hamid Saremi, Vice Chancellor of Islamic Azad University of Iran, Quchan Branch, Quchan-Iran
Dr. Uma Shanker, Professor & Head, Department of Mathematics, CEC, Bilaspur (C.G.), India
Dr. Rama Shanker, Professor & Head, Department of Statistics, Eritrea Institute of Technology, Asmara, Eritrea
Dr. Vinita Kumari, Blue Eyes Intelligence Engineering & Sciences Publication Pvt. Ltd., India
Dr. Kapil Kumar Bansal, Head (Research and Publication), SRM University, Gaziabad (U.P.), India
Dr.

Anomaly Detection and Analysis in Big Data Computer Science And

Anomaly Detection and Analysis in Big Data. A Thesis submitted in partial fulfillment of the requirements for the award of degree of Doctor of Philosophy. Submitted by Sahil Garg (901403026) under the guidance of Dr. Shalini Batra, Associate Professor, Computer Science and Engineering Department, Thapar Institute of Engineering and Technology, Patiala, India. Computer Science and Engineering Department, Thapar Institute of Engineering and Technology, Patiala-147004, India. September 2018.

Contents

List of Figures
List of Tables
List of Algorithms
Certificate
Acknowledgements
Abstract

1 Introduction
1.1 Background
1.2 Classification of Anomalies
1.2.1 Point Anomalies
1.2.2 Contextual Anomalies
1.2.3 Collective Anomalies
1.3 Modes of Machine Learning Algorithms
1.3.1 Supervised Anomaly Detection
1.3.2 Unsupervised Anomaly Detection
1.3.3 Semi-Supervised Anomaly Detection
1.4 Types of Anomaly Detection Techniques
1.4.1 Classification Techniques
  One-class classification
  Multi-class classification
1.4.2 Clustering Techniques
  Partitioning based techniques
  Hierarchy based techniques
  Density based techniques
  Grid based techniques
  Graph based techniques
1.4.3 Statistical Techniques
  Parametric Techniques
  Non-Parametric Techniques
1.4.4 Rule-based Techniques
1.4.5 Information Theory based Techniques
1.5 Applications of Anomaly Detection
1.6 Thesis Organization

2 Literature Review
2.1 Dimensionality Reduction
2.2 Optimization Schemes
2.3 Machine Learning Approaches
2.4 Deep Learning Approaches

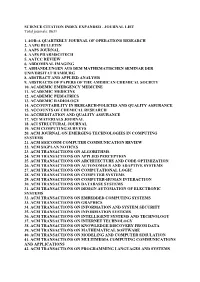

SCIENCE CITATION INDEX EXPANDED - JOURNAL LIST Total Journals: 8631

1. 4OR-A QUARTERLY JOURNAL OF OPERATIONS RESEARCH
2. AAPG BULLETIN
3. AAPS JOURNAL
4. AAPS PHARMSCITECH
5. AATCC REVIEW
6. ABDOMINAL IMAGING
7. ABHANDLUNGEN AUS DEM MATHEMATISCHEN SEMINAR DER UNIVERSITAT HAMBURG
8. ABSTRACT AND APPLIED ANALYSIS
9. ABSTRACTS OF PAPERS OF THE AMERICAN CHEMICAL SOCIETY
10. ACADEMIC EMERGENCY MEDICINE
11. ACADEMIC MEDICINE
12. ACADEMIC PEDIATRICS
13. ACADEMIC RADIOLOGY
14. ACCOUNTABILITY IN RESEARCH-POLICIES AND QUALITY ASSURANCE
15. ACCOUNTS OF CHEMICAL RESEARCH
16. ACCREDITATION AND QUALITY ASSURANCE
17. ACI MATERIALS JOURNAL
18. ACI STRUCTURAL JOURNAL
19. ACM COMPUTING SURVEYS
20. ACM JOURNAL ON EMERGING TECHNOLOGIES IN COMPUTING SYSTEMS
21. ACM SIGCOMM COMPUTER COMMUNICATION REVIEW
22. ACM SIGPLAN NOTICES
23. ACM TRANSACTIONS ON ALGORITHMS
24. ACM TRANSACTIONS ON APPLIED PERCEPTION
25. ACM TRANSACTIONS ON ARCHITECTURE AND CODE OPTIMIZATION
26. ACM TRANSACTIONS ON AUTONOMOUS AND ADAPTIVE SYSTEMS
27. ACM TRANSACTIONS ON COMPUTATIONAL LOGIC
28. ACM TRANSACTIONS ON COMPUTER SYSTEMS
29. ACM TRANSACTIONS ON COMPUTER-HUMAN INTERACTION
30. ACM TRANSACTIONS ON DATABASE SYSTEMS
31. ACM TRANSACTIONS ON DESIGN AUTOMATION OF ELECTRONIC SYSTEMS
32. ACM TRANSACTIONS ON EMBEDDED COMPUTING SYSTEMS
33. ACM TRANSACTIONS ON GRAPHICS
34. ACM TRANSACTIONS ON INFORMATION AND SYSTEM SECURITY
35. ACM TRANSACTIONS ON INFORMATION SYSTEMS
36. ACM TRANSACTIONS ON INTELLIGENT SYSTEMS AND TECHNOLOGY
37. ACM TRANSACTIONS ON INTERNET TECHNOLOGY
38. ACM TRANSACTIONS ON KNOWLEDGE DISCOVERY FROM DATA
39. ACM TRANSACTIONS ON MATHEMATICAL SOFTWARE
40. ACM TRANSACTIONS ON MODELING AND COMPUTER SIMULATION
41. ACM TRANSACTIONS ON MULTIMEDIA COMPUTING COMMUNICATIONS AND APPLICATIONS
42. ACM TRANSACTIONS ON PROGRAMMING LANGUAGES AND SYSTEMS
43. ACM TRANSACTIONS ON RECONFIGURABLE TECHNOLOGY AND SYSTEMS
44.

Machine Learning in Structural Engineering

Scientia Iranica A (2020) 27(6), 2645-2656. Sharif University of Technology, Scientia Iranica, Transactions A: Civil Engineering. http://scientiairanica.sharif.edu

Invited/Review Article. Machine learning in structural engineering. J.P. Amezquita-Sanchez (a), M. Valtierra-Rodriguez (a), and H. Adeli (b). a. ENAP-RG, CA Sistemas Dinamicos, Faculty of Engineering, Departments of Electromechanical, and Biomedical Engineering, Autonomous University of Queretaro, Campus San Juan del Rio, Moctezuma 249, Col. San Cayetano, 76807, San Juan del Rio, Queretaro, Mexico. b. Department of Civil, Environmental, and Geodetic Engineering, The Ohio State University, 470 Hitchcock Hall, 2070 Neil Avenue, Columbus, OH 43220, USA. Received 14 November 2020; accepted 18 November 2020.

Keywords: Civil structures; Machine learning; Deep learning; Structural engineering; System identification; Structural health monitoring; Vibration control; Structural design; Prediction.

Abstract. This article presents a review of selected articles about structural engineering applications of Machine Learning (ML) in the past few years. It is divided into the following areas: structural system identification, structural health monitoring, structural vibration control, structural design, and prediction applications. Deep neural network algorithms have been the subject of a large number of articles in civil and structural engineering. There are, however, other ML algorithms with great potential in civil and structural engineering that are worth exploring. Four novel supervised ML algorithms developed recently by the senior author and his associates with potential applications in civil/structural engineering are reviewed in this paper. They are the Enhanced Probabilistic Neural Network (EPNN), the Neural Dynamic Classification (NDC) algorithm, the Finite Element Machine (FEMa), and the Dynamic Ensemble Learning (DEL) algorithm.

ISI:Web of Science

ISI: Web of Science

1. APPLIED ENTOMOLOGY AND PHYTOPATHOLOGY - ISSN: 1026-5007
2. ARCHIVES OF IRANIAN MEDICINE - ISSN: 1029-2977
3. BANACH JOURNAL OF MATHEMATICAL ANALYSIS - ISSN: 1735-8787
4. BULLETIN OF THE IRANIAN MATHEMATICAL SOCIETY - ISSN: 1735-8515
5. CASPIAN JOURNAL OF ENVIRONMENTAL SCIENCES - ISSN: 1735-3033
6. DARU-JOURNAL OF FACULTY OF PHARMACY - ISSN: 1560-8115
7. HEPATITIS MONTHLY - ISSN: 1735-143X
8. IFRO NEWSLETTER - ISSN: ****-****
9. INTERNATIONAL JOURNAL OF CIVIL ENGINEERING - ISSN: 1735-0522
10. INTERNATIONAL JOURNAL OF ENVIRONMENTAL RESEARCH - ISSN: 1735-6865
11. INTERNATIONAL JOURNAL OF ENVIRONMENTAL SCIENCE AND TECHNOLOGY - ISSN: 1735-1472
12. INTERNATIONAL JOURNAL OF FERTILITY & STERILITY - ISSN: 2008-076X
13. INTERNATIONAL JOURNAL OF PLANT PRODUCTION - ISSN: 1735-6814
14. IRANIAN BIOMEDICAL JOURNAL - ISSN: 1028-852X
15. IRANIAN JOURNAL OF ALLERGY ASTHMA AND IMMUNOLOGY - ISSN: 1735-1502
16. IRANIAN JOURNAL OF ANIMAL BIOSYSTEMATICS - ISSN: 1735-434X
17. IRANIAN JOURNAL OF ARTHROPOD-BORNE DISEASES - ISSN: 1735-7179
18. IRANIAN JOURNAL OF BASIC MEDICAL SCIENCES - ISSN: 2008-3866
19. IRANIAN JOURNAL OF BIOTECHNOLOGY - ISSN: 1728-3043
20. IRANIAN JOURNAL OF BOTANY - ISSN: 1029-788X
21. IRANIAN JOURNAL OF CHEMISTRY & CHEMICAL ENGINEERING-INTERNATIONAL ENGLISH EDITION - ISSN: 1021-9986
22. IRANIAN JOURNAL OF ENVIRONMENTAL HEALTH SCIENCE & ENGINEERING - ISSN: 1735-1979
23. IRANIAN JOURNAL OF FISHERIES SCIENCES - ISSN: 1562-2916
24. IRANIAN JOURNAL OF FUZZY SYSTEMS - ISSN: 1735-0654
25. IRANIAN JOURNAL OF IMMUNOLOGY - ISSN: 1735-1383
26. IRANIAN JOURNAL OF OPHTHALMOLOGY - ISSN: 1735-4153
27. IRANIAN JOURNAL OF PARASITOLOGY - ISSN: 1735-7020
28. IRANIAN JOURNAL OF PEDIATRICS - ISSN: 2008-2142
29.

Employing a Novel Gait Pattern Generator on a Social Humanoid Robot

Scientia Iranica A (2019) 26(4), 2154-2166. Sharif University of Technology, Scientia Iranica, Transactions A: Civil Engineering. http://scientiairanica.sharif.edu

Invited Paper. Employing a novel gait pattern generator on a social humanoid robot. A. Meghdari (a), S. Behzadipour (b), and M. Abedi (b). a. Social & Cognitive Robotics Laboratory, Center of Excellence in Design, Robotics, and Automation (CEDRA), School of Mechanical Engineering, Sharif University of Technology, Tehran, Iran. b. School of Mechanical Engineering, Sharif University of Technology, Tehran, Iran. Received 11 February 2019; accepted 11 March 2019.

Keywords: Social robots; Bipedal robots; Gait pattern generating; Inverse kinematics; Static stability condition.

Abstract. This paper presents a novel Gait Pattern Generator (GPG) developed for the "Alice" social humanoid robot, which up to now lacked an appropriate walking pattern. Due to the limitations of this robot, the proposed gait pattern generator was formulated based on a nine-mass model to decrease the modeling errors, and the inverse kinematics of the whole lower-body was solved in such a way that the robot remained statically stable during the movements. The main challenge of this work was to solve the inverse kinematics of a 7-link chain with 12 degrees of freedom. For this purpose, a new graphical-numerical technique has been provided using the definition of the kinematic equations of the robot joints' Cartesian coordinates. This method resulted in a significant increase in the solution rate of calculations. Finally, a novel algorithm was developed for step-by-step displacement of the robot towards a desired destination in a two-dimensional space. Performance of the proposed gait pattern generator was evaluated both with a model of the robot in a MATLAB Simulink environment and in real experiments with the Alice humanoid robot.

Higher Flexibility of Reconfigurable Digital Micro/Nanofluidic Biochips Using an FPGA-Inspired Architecture

Scientia Iranica F (2016) 23(3), 1554-1562. Sharif University of Technology, Scientia Iranica, Transactions F: Nanotechnology. www.scientiairanica.com

Research Note. Higher flexibility of reconfigurable digital micro/nanofluidic biochips using an FPGA-inspired architecture. M. Taajobian (a) and A. Jahanian (b). a. Department of Computer Engineering, Science and Research Branch, Islamic Azad University, Tehran, Iran. b. Department of Computer Science and Engineering, Shahid Beheshti University, G.C., Tehran, P.O. Box 1982963113, Iran. Received 4 May 2015; received in revised form 15 March 2016; accepted 10 May 2016.

Keywords: Digital micro-fluidic biochip; Pin-constrained; Reconfigurable.

Abstract. Recent improvements in the bio-engineering area have composed wonderful opportunities to manipulate the bio-structures. Biochips are known as electronic platforms to decrease the experiment time and cost and improve the flexibility and automatability of operations. Digital micro/nanofluidic biochips can carry discrete droplets of experiment materials on the chip to perform the projected assays. In this paper, a new FPGA-inspired architecture is proposed for micro-fluidic biochips in order to improve the configurability and flexibility of the biochip and also reduce the number of controlling pins. The simulation results show that in the proposed architecture, number of controlling pins is improved by 6.35x compared with the direct addressing method and the total experiment time is reduced by 34% compared with the field-programmable pin-constrained method, improving configurability and flexibility. © 2016 Sharif University of Technology. All rights reserved.

1. Introduction. Biochip is an integrated fluidic and electronic device ... The history of biochips started with genomic operations. These chips had the possibility of analyzing DNA fragments and genomic tests [2].