Sequence Compression Benchmark (SCB) Database — a Comprehensive Evaluation of Reference-Free Compressors for FASTA-Formatted Sequences

Recommended publications

Schematic Entry

Schematic Entry. Copyrights. Software, documentation and related materials: Copyright © 2002 Altium Limited. This software product is copyrighted and all rights are reserved. The distribution and sale of this product are intended for the use of the original purchaser only per the terms of the License Agreement. This document may not, in whole or part, be copied, photocopied, reproduced, translated, reduced or transferred to any electronic medium or machine-readable form without prior consent in writing from Altium Limited. U.S. Government use, duplication or disclosure is subject to RESTRICTED RIGHTS under applicable government regulations pertaining to trade secret, commercial computer software developed at private expense, including FAR 227-14 subparagraph (g)(3)(i), Alternative III and DFAR 252.227-7013 subparagraph (c)(1)(ii). P-CAD is a registered trademark and P-CAD Schematic, P-CAD Relay, P-CAD PCB, P-CAD ProRoute, P-CAD QuickRoute, P-CAD InterRoute, P-CAD InterRoute Gold, P-CAD Library Manager, P-CAD Library Executive, P-CAD Document Toolbox, P-CAD InterPlace, P-CAD Parametric Constraint Solver, P-CAD Signal Integrity, P-CAD Shape-Based Autorouter, P-CAD DesignFlow, P-CAD ViewCenter, Master Designer and Associate Designer are trademarks of Altium Limited. Other brand names are trademarks of their respective companies. Altium Limited, www.altium.com. Table of Contents: Chapter 1, Introducing P-CAD Schematic: P-CAD Schematic Features; About -

ROOT I/O Compression Improvements for HEP Analysis

EPJ Web of Conferences 245, 02017 (2020), https://doi.org/10.1051/epjconf/202024502017, CHEP 2019. ROOT I/O compression improvements for HEP analysis. Oksana Shadura (1), Brian Paul Bockelman (2), Philippe Canal (3), Danilo Piparo (4), and Zhe Zhang (1). (1) University of Nebraska-Lincoln, 1400 R St, Lincoln, NE 68588, United States; (2) Morgridge Institute for Research, 330 N Orchard St, Madison, WI 53715, United States; (3) Fermilab, Kirk Road and Pine St, Batavia, IL 60510, United States; (4) CERN, Meyrin 1211, Geneve, Switzerland.

Abstract. We overview recent changes in the ROOT I/O system, which improve its performance and its interaction with other data analysis ecosystems. The newly introduced compression algorithms, the much faster bulk I/O data path, and a few additional techniques all have the potential to significantly improve experiments' software performance. The need for efficient lossless data compression has grown significantly as the amount of HEP data collected, transmitted, and stored has dramatically increased over the last couple of years. While compression reduces storage space and, potentially, I/O bandwidth usage, it should not be applied blindly, because there are significant trade-offs between the increased CPU cost for reading and writing files and the reduced storage space.

1 Introduction. In recent years, Large Hadron Collider (LHC) experiments have been managing about an exabyte of storage for analysis purposes, approximately half of which is stored on tape for archival purposes and half on traditional disk storage. Meanwhile, for the High Luminosity Large Hadron Collider (HL-LHC), storage requirements per year are expected to increase by a factor of 10 [1]. -

arXiv:2004.10531v1 [cs.OH] 8 Apr 2020

ROOT I/O compression improvements for HEP analysis. Oksana Shadura (1), Brian Paul Bockelman (2), Philippe Canal (3), Danilo Piparo (4), and Zhe Zhang (1). (1) University of Nebraska-Lincoln, 1400 R St, Lincoln, NE 68588, United States; (2) Morgridge Institute for Research, 330 N Orchard St, Madison, WI 53715, United States; (3) Fermilab, Kirk Road and Pine St, Batavia, IL 60510, United States; (4) CERN, Meyrin 1211, Geneve, Switzerland.

Abstract. We overview recent changes in the ROOT I/O system, which increase its performance and improve its interaction with other data analysis ecosystems. The newly introduced compression algorithms, the much faster bulk I/O data path, and a few additional techniques all have the potential to significantly improve experiments' software performance. The need for efficient lossless data compression has grown significantly as the amount of HEP data collected, transmitted, and stored has dramatically increased during the LHC era. While compression reduces storage space and, potentially, I/O bandwidth usage, it should not be applied blindly: there are significant trade-offs between the increased CPU cost for reading and writing files and the reduced storage space.

1 Introduction. In the past years, the LHC experiments were commissioned, and they now manage about an exabyte of storage for analysis purposes, approximately half of which is used for archival purposes and half for traditional disk storage. Meanwhile, for HL-LHC, storage requirements per year are expected to increase by a factor of 10 [1]. Looking at these predictions, we would like to state that storage will remain one of the major cost drivers and, at the same time, one of the bottlenecks for HEP computing. -

Building and Installing Xen 4.X and Linux Kernel 3.X on Ubuntu and Debian Linux

Building and Installing Xen 4.x and Linux Kernel 3.x on Ubuntu and Debian Linux, Version 2.3. Author: Teo En Ming (Zhang Enming). Website #1: http://www.teo-en-ming.com Website #2: http://www.zhang-enming.com Email #1: [email protected] Email #2: [email protected] Email #3: [email protected] Mobile Phone(s): +65-8369-2618 / +65-9117-5902 / +65-9465-2119. Country: Singapore. Date: 10 August 2013 Saturday 4:56 A.M. Singapore Time.

1 Installing Prerequisite Software

    sudo apt-get install ocaml-findlib
    sudo apt-get install bcc bin86 gawk bridge-utils iproute libcurl3 libcurl4-openssl-dev bzip2 module-init-tools transfig tgif texinfo texlive-latex-base texlive-latex-recommended texlive-fonts-extra texlive-fonts-recommended pciutils-dev mercurial build-essential make gcc libc6-dev zlib1g-dev python python-dev python-twisted libncurses5-dev patch libvncserver-dev libsdl-dev libjpeg62-dev iasl libbz2-dev e2fslibs-dev git-core uuid-dev ocaml libx11-dev bison flex
    sudo apt-get install gcc-multilib
    sudo apt-get install xz-utils libyajl-dev gettext
    sudo apt-get install git-core kernel-package fakeroot build-essential libncurses5-dev

2 Linux Kernel 3.x with Xen Virtualization Support (Dom0 and DomU)

In this installation document, we will build/compile Xen 4.1.3-rc1-pre and Linux kernel 3.3.0-rc7 from sources.

    sudo apt-get install aria2
    aria2c -x 5 http://www.kernel.org/pub/linux/kernel/v3.0/testing/linux-3.3-rc7.tar.bz2
    tar xfvj linux-3.3-rc7.tar.bz2
    cd linux-3.3-rc7

3 Configuring the Linux kernel

    cp /boot/config-3.0.0-12-generic .config
    make oldconfig

Accept the defaults for new kernel configuration options by pressing enter. -

Pack, Encrypt, Authenticate Document Revision: 2021 05 02

PEA: Pack, Encrypt, Authenticate. Document revision: 2021 05 02. Author: Giorgio Tani. Translation: Giorgio Tani. This document refers to: PEA file format specification version 1 revision 3 (1.3); PEA file format specification version 2.0; PEA 1.01 executable implementation. Present documentation is released under GNU GFDL License. PEA executable implementation is released under GNU LGPL License; please note that all units provided by the Author are released under LGPL, while Wolfgang Ehrhardt’s crypto library units used in PEA are released under zlib/libpng License. PEA file format and PCOMPRESS specifications are hereby released under PUBLIC DOMAIN: the Author neither has, nor is aware of, any patents or pending patents relevant to this technology and does not intend to apply for any patents covering it. As far as the Author knows, PEA file format in all of its parts is free and unencumbered for all uses. PEA is available on the PeaZip project official sites: https://peazip.github.io , https://peazip.org , and https://peazip.sourceforge.io For more information about the licenses: GNU GFDL License, see http://www.gnu.org/licenses/fdl.txt ; GNU LGPL License, see http://www.gnu.org/licenses/lgpl.txt

Content: Section 1: PEA file format; Description; PEA 1.3 file format details; Differences between 1.3 and older revisions; PEA 2.0 file format details; PEA file format’s and implementation’s limitations; PCOMPRESS compression scheme; Algorithms used in PEA format; PEA security model; Cryptanalysis of PEA format; Data recovery from -

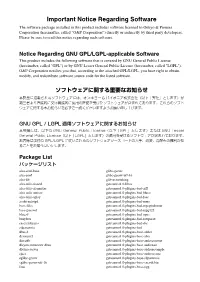

Important Notice Regarding Software

Important Notice Regarding Software. The software package installed in this product includes software licensed to Onkyo & Pioneer Corporation (hereinafter called “O&P Corporation”) directly or indirectly by third party developers. Please be sure to read this notice regarding such software.

Notice Regarding GNU GPL/LGPL-applicable Software. This product includes the following software that is covered by the GNU General Public License (hereinafter called "GPL") or by the GNU Lesser General Public License (hereinafter called "LGPL"). O&P Corporation notifies you that, according to the attached GPL/LGPL, you have the right to obtain, modify, and redistribute software source code for the listed software.

Package List: alsa-conf-base, glibc-gconv, alsa-conf, glibc-gconv-utf-16, alsa-lib, glib-networking, alsa-utils-alsactl, gstreamer1.0-libav, alsa-utils-alsamixer, gstreamer1.0-plugins-bad-aiff, alsa-utils-amixer, gstreamer1.0-plugins-bad-bluez, alsa-utils-aplay, gstreamer1.0-plugins-bad-faac, avahi-autoipd, gstreamer1.0-plugins-bad-mms, base-files, gstreamer1.0-plugins-bad-mpegtsdemux, base-passwd, gstreamer1.0-plugins-bad-mpg123, bluez5, gstreamer1.0-plugins-bad-opus, busybox, gstreamer1.0-plugins-bad-rawparse -

MetaDefender Core v4.12.2

MetaDefender Core v4.12.2. © 2018 OPSWAT, Inc. All rights reserved. OPSWAT®, Metadefender™ and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners.

Table of Contents: About This Guide; Key Features of Metadefender Core; 1. Quick Start with Metadefender Core; 1.1. Installation (Operating system invariant initial steps; Basic setup); 1.1.1. Configuration wizard; 1.2. License Activation; 1.3. Scan Files with Metadefender Core; 2. Installing or Upgrading Metadefender Core; 2.1. Recommended System Requirements (System Requirements For Server; Browser Requirements for the Metadefender Core Management Console); 2.2. Installing Metadefender (Installation; Installation notes); 2.2.1. Installing Metadefender Core using command line; 2.2.2. Installing Metadefender Core using the Install Wizard; 2.3. Upgrading MetaDefender Core (Upgrading from MetaDefender Core 3.x; Upgrading from MetaDefender Core 4.x); 2.4. Metadefender Core Licensing; 2.4.1. Activating Metadefender Licenses; 2.4.2. Checking Your Metadefender Core License; 2.5. Performance and Load Estimation (What to know before reading the results: Some factors that affect performance; How test results are calculated; Test Reports; Performance Report - Multi-Scanning On Linux; Performance Report - Multi-Scanning On Windows); 2.6. Special installation options (Use RAMDISK for the temp directory); 3. Configuring Metadefender Core; 3.1. Management Console; 3.2. -

Improved Neural Network Based General-Purpose Lossless Compression Mohit Goyal, Kedar Tatwawadi, Shubham Chandak, Idoia Ochoa

DZip: improved neural network based general-purpose lossless compression. Mohit Goyal, Kedar Tatwawadi, Shubham Chandak, Idoia Ochoa.

Abstract—We consider lossless compression based on statistical data modeling followed by prediction-based encoding, where an accurate statistical model for the input data leads to substantial improvements in compression. We propose DZip, a general-purpose compressor for sequential data that exploits the well-known modeling capabilities of neural networks (NNs) for prediction, followed by arithmetic coding. DZip uses a novel hybrid architecture based on adaptive and semi-adaptive training. Unlike most NN based compressors, DZip does not require additional training data and is not restricted to specific data types. The proposed compressor outperforms general-purpose compressors such as Gzip (29% size reduction on average) and 7zip (12% size reduction on average) on a variety of real datasets, achieves near-optimal compression on synthetic datasets, and performs close to specialized compressors for large sequence lengths, without any

Introduction (fragment): … [4], [5] and generative modeling [6]). Neural network based models can typically learn highly complex patterns in the data much better than traditional finite context and Markov models, leading to significantly lower prediction error (measured as log-loss or perplexity [4]). This has led to the development of several compressors using neural networks as predictors [7]–[9], including the recently proposed LSTM-Compress [10], NNCP [11] and DecMac [12]. Most of the previous works, however, have been tailored for compression of certain data types (e.g., text [12] [13] or images [14], [15]), where the prediction model is trained in a supervised framework on separate training data or the model architecture is tuned for the specific data type. -

Lossless Compression of Internal Files in Parallel Reservoir Simulation

Lossless Compression of Internal Files in Parallel Reservoir Simulation. Suha Kayum, Marcin Rogowski, Florian Mannuss. 9/26/2019

Outline:

- I/O Challenges in Reservoir Simulation
- Evaluation of Compression Algorithms on Reservoir Simulation Data
- Real-world application: Constraints, Algorithm, Results
- Conclusions

Challenge: Reservoir simulation

Reservoir Simulation:

- The largest fields in the world are represented as 50 million – 1 billion grid block models
- Each run takes hours on 500-5000 cores
- Calibrating the model requires 100s of runs and sophisticated methods
- “History matched” model is only a beginning

Files in Reservoir Simulation:

- Internal Files
- Input / Output Files: interact with pre- & post-processing tools
- Restart/Checkpoint Files

Reservoir Simulation in Saudi Aramco:

- 100’000+ simulations annually
- The largest simulation of 10 billion cells
- Currently multiple machines in TOP500
- Petabytes of storage required
- Resources are finite
- File compression is one solution

Compression algorithm evaluation

Compression ratio: Tested a number of algorithms on a GRID restart file for two models:

- Model A: 77.3 million active grid blocks
- Model K: 8.7 million active grid blocks
- 15.6 GB and 7.2 GB respectively

The compression ratio ranges from 2.27 for snappy (Model A) up to 3.5 for bzip2 -9 (Model K).

[Bar chart: compression ratio for Model A and Model K with lz4, snappy, gzip -1, gzip -9, bzip2 -1, bzip2 -9]

Compression speed:

- LZ4 and Snappy significantly outperformed other algorithms -

Genomic Data Compression

Genomic data compression. S. Cenk Sahinalp. Bogaz’da Yaz Okulu 2018

Genome storage and communication: the need

- Research: massive genome projects (e.g. PCAWG) require to exchange 10000s of genomes.
- Need to cover >200 cancer types, many subtypes.
- Within PCAWG, TCGA to sequence 11K patients covering 33 cancer types. ICGC to cover 50 cancer types; >15PB data at The Cancer Genome Collaboratory
- Clinic: $100s/genome (e.g. NovaSeq) enable sequencing to be a standard tool for pathology
- PCAWG estimates that 250M+ individuals will be sequenced by 2030

Current needs

- Typical technology: Illumina NovaSeq, 100-400 bp reads, 250-500GB uncompressed data for a high coverage human genome, high redundancy
- 40% of the human genome is repetitive (mobile genetic elements, centromeric DNA, segmental duplications, etc.)
- Upload/download: 55 hrs on a 10Mbit consumer line; 5.5 hrs on a 100Mbit high speed connection
- Technologies under development: expensive, longer reads, higher error (PacBio, Nanopore) – lower redundancy and higher error rates limit compression

File formats

- Raw read data: FASTQ/FASTA – dominant fields: (read name, sequence, quality score)
- Mapped read data: SAM/BAM – reads reordered based on mapping locus on a reference genome (not suitable for metagenomics, organisms with no reference)
- Key decisions to be made:
  - Each format can be compressed through specialized methods – should there be a standardized format for compressed genomes?
  - Better file formats based on mapping loci on sequence graphs representing common variants in a pan-genomic reference? -
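As a rough check on the upload/download times quoted in the slides above, the arithmetic can be sketched in Python. This is only a minimal sketch under stated assumptions: the lower bound of 250 GB of uncompressed data, decimal units (1 GB = 10^9 bytes, 1 Mbit = 10^6 bits), and a fully saturated link with no protocol overhead. The function name `transfer_hours` is hypothetical and does not come from the slides.

```python
def transfer_hours(size_gb: float, link_mbit_per_s: float) -> float:
    """Hours needed to move size_gb gigabytes over a link of link_mbit_per_s.

    Assumes decimal units and an ideal link (no overhead, no retransmissions).
    """
    bits = size_gb * 1e9 * 8                   # gigabytes -> bits
    seconds = bits / (link_mbit_per_s * 1e6)   # bits / (bits per second)
    return seconds / 3600                      # seconds -> hours


if __name__ == "__main__":
    # The two link speeds mentioned in the slides: 10 Mbit/s and 100 Mbit/s.
    for mbit in (10, 100):
        print(f"250 GB over {mbit} Mbit/s: {transfer_hours(250, mbit):.1f} h")
```

Under these assumptions the sketch gives about 55.6 hours at 10 Mbit/s and about 5.6 hours at 100 Mbit/s, in line with the roughly 55 and 5.5 hours quoted; the upper bound of 500 GB would double both figures.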

UG1144 (v2020.1) July 24, 2020 Revision History

PetaLinux Tools Documentation Reference Guide, UG1144 (v2020.1), July 24, 2020

Revision History

The following table shows the revision history for this document.

| Section | Revision Summary |
| --- | --- |
| 07/24/2020 Version 2020.1 | |
| Appendix H: Partitioning and Formatting an SD Card | Added a new appendix. |
| 06/03/2020 Version 2020.1 | |
| Chapter 2: Setting Up Your Environment | Added the Installing a Preferred eSDK as part of the PetaLinux Tool section. |
| Chapter 4: Configuring and Building | Added the PetaLinux Commands with Equivalent devtool Commands section. |
| Chapter 6: Upgrading the Workspace | Added new sections: petalinux-upgrade Options, Upgrading Between Minor Releases (2020.1 Tool with 2020.2 Tool), Upgrading the Installed Tool with More Platforms, and Upgrading the Installed Tool with your Customized Platform. |
| Chapter 7: Customizing the Project | Added new sections: Creating Partitioned Images Using Wic and Configuring SD Card ext File System Boot. |
| Chapter 8: Customizing the Root File System | Added the Appending Root File System Packages section. |
| Chapter 10: Advanced Configurations | Updated PetaLinux Menuconfig System. |
| Chapter 11: Yocto Features | Added the Adding Extra Users to the PetaLinux System section. |
| Appendix A: Migration | Added Tool/Project Directory Structure. |

Table of Contents: Revision History -

Arrow: Integration to 'Apache' 'Arrow'

Package ‘arrow’, September 5, 2021

Title: Integration to 'Apache' 'Arrow'

Version: 5.0.0.2

Description: 'Apache' 'Arrow' <https://arrow.apache.org/> is a cross-language development platform for in-memory data. It specifies a standardized language-independent columnar memory format for flat and hierarchical data, organized for efficient analytic operations on modern hardware. This package provides an interface to the 'Arrow C++' library.

Depends: R (>= 3.3)

License: Apache License (>= 2.0)

URL: https://github.com/apache/arrow/, https://arrow.apache.org/docs/r/

BugReports: https://issues.apache.org/jira/projects/ARROW/issues

Encoding: UTF-8

Language: en-US

SystemRequirements: C++11; for AWS S3 support on Linux, libcurl and openssl (optional)

Biarch: true

Imports: assertthat, bit64 (>= 0.9-7), methods, purrr, R6, rlang, stats, tidyselect, utils, vctrs

RoxygenNote: 7.1.1.9001

VignetteBuilder: knitr

Suggests: decor, distro, dplyr, hms, knitr, lubridate, pkgload, reticulate, rmarkdown, stringi, stringr, testthat, tibble, withr

Collate: 'arrowExports.R' 'enums.R' 'arrow-package.R' 'type.R' 'array-data.R' 'arrow-datum.R' 'array.R' 'arrow-tabular.R' 'buffer.R' 'chunked-array.R' 'io.R' 'compression.R' 'scalar.R' 'compute.R' 'config.R' 'csv.R' 'dataset.R' 'dataset-factory.R' 'dataset-format.R' 'dataset-partition.R' 'dataset-scan.R' 'dataset-write.R' 'deprecated.R' 'dictionary.R' 'dplyr-arrange.R' 'dplyr-collect.R' 'dplyr-eval.R' 'dplyr-filter.R' 'expression.R' 'dplyr-functions.R' 'dplyr-group-by.R' 'dplyr-mutate.R' 'dplyr-select.R' 'dplyr-summarize.R'