Matrix Notation and Operations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

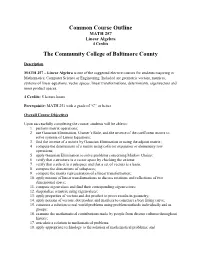

Common Course Outline MATH 257 Linear Algebra 4 Credits

The Community College of Baltimore County

Description

MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces.

4 Credits: 5 lecture hours

Prerequisite: MATH 251 with a grade of “C” or better

Overall Course Objectives

Upon successfully completing the course, students will be able to:

1. perform matrix operations;
2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations;
3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix;
4. compute the determinant of a matrix using cofactor expansion or elementary row operations;
5. apply Gaussian Elimination to solve problems concerning Markov Chains;
6. verify that a structure is a vector space by checking the axioms;
7. verify that a subset is a subspace and that a set of vectors is a basis;
8. compute the dimensions of subspaces;
9. compute the matrix representation of a linear transformation;
10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space;
11. compute eigenvalues and find their corresponding eigenvectors;
12. diagonalize a matrix using eigenvalues;
13. apply properties of vectors and dot product to prove results in geometry;
14. apply notions of vectors, dot product and matrices to construct a best fitting curve;
15. construct a solution to real world problems using problem methods individually and in groups;
16. -

Parametrizations of k-Nonnegative Matrices

Anna Brosowsky, Neeraja Kulkarni, Alex Mason, Joe Suk, Ewin Tang∗

October 2, 2017

Abstract

Totally nonnegative (positive) matrices are matrices whose minors are all nonnegative (positive). We generalize the notion of total nonnegativity, as follows. A k-nonnegative (resp. k-positive) matrix has all minors of size k or less nonnegative (resp. positive). We give a generating set for the semigroup of k-nonnegative matrices, as well as relations for certain special cases, i.e. the k = n − 1 and k = n − 2 unitriangular cases. In the above two cases, we find that the set of k-nonnegative matrices can be partitioned into cells, analogous to the Bruhat cells of totally nonnegative matrices, based on their factorizations into generators. We will show that these cells, like the Bruhat cells, are homeomorphic to open balls, and we prove some results about the topological structure of the closure of these cells, and in fact, in the latter case, the cells form a Bruhat-like CW complex. We also give a family of minimal k-positivity tests which form sub-cluster algebras of the total positivity test cluster algebra. We describe ways to jump between these tests, and give an alternate description of some tests as double wiring diagrams.

1 Introduction

A totally nonnegative (respectively totally positive) matrix is a matrix whose minors are all nonnegative (respectively positive). Total positivity and nonnegativity are well-studied phenomena and arise in areas such as planar networks, combinatorics, dynamics, statistics and probability. The study of total positivity and total nonnegativity admits many varied applications, some of which are explored in “Totally Nonnegative Matrices” by Fallat and Johnson [5]. -
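The definition in the abstract (all minors of size k or less are nonnegative) can be checked by brute force on small matrices. The following is a minimal sketch, not the authors' method: the function names `det` and `is_k_nonnegative` and the example matrix are hypothetical, and the enumeration of all minors is only practical for small sizes.

```python
from itertools import combinations

def det(m):
    """Determinant by Laplace expansion along the first row (small matrices only)."""
    n = len(m)
    if n == 1:
        return m[0][0]
    total = 0
    for j in range(n):
        # Delete row 0 and column j to get the (n-1) x (n-1) submatrix.
        sub = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * m[0][j] * det(sub)
    return total

def is_k_nonnegative(m, k):
    """Return True if every minor of size 1, ..., k of m is >= 0."""
    n_rows, n_cols = len(m), len(m[0])
    for size in range(1, min(k, n_rows, n_cols) + 1):
        for rows in combinations(range(n_rows), size):
            for cols in combinations(range(n_cols), size):
                sub = [[m[r][c] for c in cols] for r in rows]
                if det(sub) < 0:
                    return False
    return True

# Hypothetical example: all entries are nonnegative, but the 2x2 minor is 1*4 - 2*3 = -2.
example = [[1, 2], [3, 4]]
print(is_k_nonnegative(example, 1))  # True
print(is_k_nonnegative(example, 2))  # False
```

The example shows why the size bound matters: the same matrix passes the test for k = 1 and fails it for k = 2.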

Derivations and Projections on Jordan Triples: An Introduction to Nonassociative Algebra, Continuous Cohomology, and Quantum Functional Analysis

Bernard Russo

July 29, 2014

This paper is an elaborated version of the material presented by the author in a three hour minicourse at V International Course of Mathematical Analysis in Andalusia, at Almeria, Spain September 12-16, 2011. The author wishes to thank the scientific committee for the opportunity to present the course and to the organizing committee for their hospitality. The author also personally thanks Antonio Peralta for his collegiality and encouragement.

The minicourse on which this paper is based had its genesis in a series of talks the author had given to undergraduates at Fullerton College in California. I thank my former student Dana Clahane for his initiative in running the remarkable undergraduate research program at Fullerton College of which the seminar series is a part. With their knowledge only of the product rule for differentiation as a starting point, these enthusiastic students were introduced to some aspects of the esoteric subject of non associative algebra, including triple systems as well as algebras. Slides of these talks and of the minicourse lectures, as well as other related material, can be found at the author's website (www.math.uci.edu/~brusso). Conversely, these undergraduate talks were motivated by the author's past and recent joint works on derivations of Jordan triples ([116],[117],[200]), which are among the many results discussed here.

Part I (Derivations) is devoted to an exposition of the properties of derivations on various algebras and triple systems in finite and infinite dimensions, the primary questions addressed being whether the derivation is automatically continuous and to what extent it is an inner derivation. -

Diagonalizing a Matrix

Diagonalizing a Matrix Definition 1. We say that two square matrices A and B are similar provided there exists an invertible matrix P so that . 2. We say a matrix A is diagonalizable if it is similar to a diagonal matrix. Example 1. The matrices and are similar matrices since . We conclude that is diagonalizable. 2. The matrices and are similar matrices since . After we have developed some additional theory, we will be able to conclude that the matrices and are not diagonalizable. Theorem Suppose A, B and C are square matrices. (1) A is similar to A. (2) If A is similar to B, then B is similar to A. (3) If A is similar to B and if B is similar to C, then A is similar to C. Proof of (3) Since A is similar to B, there exists an invertible matrix P so that . Also, since B is similar to C, there exists an invertible matrix R so that . Now, and so A is similar to C. Thus, “A is similar to B” is an equivalence relation. Theorem If A is similar to B, then A and B have the same eigenvalues. Proof Since A is similar to B, there exists an invertible matrix P so that . Now, Since A and B have the same characteristic equation, they have the same eigenvalues. > Example Find the eigenvalues for . Solution Since is similar to the diagonal matrix , they have the same eigenvalues. Because the eigenvalues of an upper (or lower) triangular matrix are the entries on the main diagonal, we see that the eigenvalues for , and, hence, are . -
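The characteristic-equation step in the last proof can be written out as a short derivation. This is a sketch under an assumed convention, because the defining equation did not survive in the text above: similarity is taken here to mean $B = P^{-1}AP$ for an invertible $P$ (the opposite convention $A = P^{-1}BP$ gives the same conclusion with the roles of $A$ and $B$ exchanged).

```latex
\begin{aligned}
\det(B - \lambda I)
  &= \det\bigl(P^{-1} A P - \lambda P^{-1} P\bigr) \\
  &= \det\bigl(P^{-1} (A - \lambda I) P\bigr) \\
  &= \det(P^{-1}) \, \det(A - \lambda I) \, \det(P) \\
  &= \det(A - \lambda I).
\end{aligned}
```

The last step uses $\det(P^{-1})\det(P) = \det(I) = 1$. Equal characteristic polynomials have equal roots, so the two matrices share their eigenvalues.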

21. Orthonormal Bases

The canonical/standard basis

\[
e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad
e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad
e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}
\]

has many useful properties.

• Each of the standard basis vectors has unit length:

\[ \|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1. \]

• The standard basis vectors are orthogonal (in other words, at right angles or perpendicular):

\[ e_i \cdot e_j = e_i^T e_j = 0 \quad \text{when } i \neq j. \]

This is summarized by

\[ e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j, \end{cases} \]

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

\[
\Pi_1 = e_1 e_1^T
= \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}
  \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix}
= \begin{pmatrix}
  1 & 0 & \cdots & 0 \\
  0 & 0 & \cdots & 0 \\
  \vdots & & & \vdots \\
  0 & 0 & \cdots & 0
  \end{pmatrix}
\]
\[ \vdots \]
\[
\Pi_n = e_n e_n^T
= \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}
  \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix}
= \begin{pmatrix}
  0 & 0 & \cdots & 0 \\
  0 & 0 & \cdots & 0 \\
  \vdots & & & \vdots \\
  0 & 0 & \cdots & 1
  \end{pmatrix}
\]

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else. -
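The inner and outer products of the standard basis vectors are easy to tabulate for a small n. The sketch below uses plain Python lists; the helper names `standard_basis`, `inner` and `outer` are hypothetical, and n = 3 is an arbitrary choice. It also checks one fact not stated in the excerpt, namely that the matrices e_i e_i^T sum to the identity.

```python
def standard_basis(n):
    """Return e_1, ..., e_n as lists of length n."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def inner(v, w):
    """Inner product v^T w, a scalar."""
    return sum(a * b for a, b in zip(v, w))

def outer(v, w):
    """Outer product v w^T, a len(v) x len(w) matrix."""
    return [[a * b for b in w] for a in v]

n = 3
basis = standard_basis(n)

# Entry (i, j) is e_i^T e_j, i.e. the Kronecker delta.
gram = [[inner(ei, ej) for ej in basis] for ei in basis]

# One matrix e_i e_i^T per basis vector.
projections = [outer(e, e) for e in basis]

# Entrywise sum of all the outer products.
total = [[sum(p[r][c] for p in projections) for c in range(n)] for r in range(n)]

print(gram)            # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(projections[0])  # [[1, 0, 0], [0, 0, 0], [0, 0, 0]]
print(total)           # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```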

Partitioned (Or Block) Matrices This Version: 29 Nov 2018

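This version: 29 Nov 2018

Intermediate Econometrics / Forecasting Class Notes

Instructor: Anthony Tay

It is frequently convenient to partition matrices into smaller sub-matrices, e.g.

\[
\begin{pmatrix}
2 & 3 & 2 & 1 & 3 \\
4 & 1 & 1 & 0 & 7 \\
3 & 1 & 1 & 0 & 0 \\
1 & 3 & 0 & 1 & 0 \\
2 & 0 & 0 & 0 & 1
\end{pmatrix}
=
\left(\begin{array}{cc|ccc}
2 & 3 & 2 & 1 & 3 \\
4 & 1 & 1 & 0 & 7 \\ \hline
3 & 1 & 1 & 0 & 0 \\
1 & 3 & 0 & 1 & 0 \\
2 & 0 & 0 & 0 & 1
\end{array}\right)
=
\begin{pmatrix}
\underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\
\underset{(3\times 2)}{C} & \underset{(3\times 3)}{I}
\end{pmatrix}
\]

The same matrix can be partitioned in several different ways. For instance, we can write the previous matrix as

\[
\begin{pmatrix}
2 & 3 & 2 & 1 & 3 \\
4 & 1 & 1 & 0 & 7 \\
3 & 1 & 1 & 0 & 0 \\
1 & 3 & 0 & 1 & 0 \\
2 & 0 & 0 & 0 & 1
\end{pmatrix}
=
\left(\begin{array}{c|cccc}
2 & 3 & 2 & 1 & 3 \\ \hline
4 & 1 & 1 & 0 & 7 \\
3 & 1 & 1 & 0 & 0 \\
1 & 3 & 0 & 1 & 0 \\
2 & 0 & 0 & 0 & 1
\end{array}\right)
=
\begin{pmatrix}
\underset{(1\times 1)}{a} & \underset{(1\times 4)}{b'} \\
\underset{(4\times 1)}{c} & \underset{(4\times 4)}{D}
\end{pmatrix}
\]

One reason partitioning is useful is that we can do matrix addition and multiplication with blocks, as though the blocks are elements, as long as the blocks are conformable for the operations. For instance:

\[
\begin{pmatrix}
\underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\
\underset{(3\times 2)}{C} & \underset{(3\times 3)}{I}
\end{pmatrix}
+
\begin{pmatrix}
\underset{(2\times 2)}{D} & \underset{(2\times 3)}{E} \\
\underset{(3\times 2)}{C} & \underset{(3\times 3)}{F}
\end{pmatrix}
=
\begin{pmatrix}
\underset{(2\times 2)}{A + D} & \underset{(2\times 3)}{B + E} \\
\underset{(3\times 2)}{2C} & \underset{(3\times 3)}{I + F}
\end{pmatrix}
\]

\[
\underbrace{\begin{pmatrix}
\underset{(2\times 2)}{A} & \underset{(2\times 3)}{B} \\
\underset{(3\times 2)}{C} & \underset{(3\times 3)}{I}
\end{pmatrix}}_{(5\times 5)}
\underbrace{\begin{pmatrix}
\underset{(2\times 1)}{d} & \underset{(2\times 3)}{E} \\
\underset{(3\times 1)}{F} & \underset{(3\times 3)}{G}
\end{pmatrix}}_{(5\times 4)}
=
\underbrace{\begin{pmatrix}
\underset{(2\times 1)}{Ad + BF} & \underset{(2\times 3)}{AE + BG} \\
\underset{(3\times 1)}{Cd + F} & \underset{(3\times 3)}{CE + G}
\end{pmatrix}}_{(5\times 4)}
\]

Examples

(1) Let

\[
A = \begin{pmatrix}
1 & 2 & 1 \\
4 & 2 & 3 \\
3 & 0 & 1 \\
0 & 1 & 3
\end{pmatrix}
= \begin{pmatrix} a_1 & a_2 & a_3 \end{pmatrix}
\quad \text{and} \quad
c = \begin{pmatrix} c_1 \\ c_2 \\ c_3 \end{pmatrix},
\]

then

\[
Ac = \begin{pmatrix} a_1 & a_2 & a_3 \end{pmatrix}
\begin{pmatrix} c_1 \\ c_2 \\ c_3 \end{pmatrix}
= c_1 a_1 + c_2 a_2 + c_3 a_3.
\]

The product $Ac$ produces a linear combination of the columns of $A$. -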
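The claim that blocks multiply "as though the blocks are elements" can be checked numerically. The sketch below is illustrative only: the block names A, B, C, D (left factor) and P, Q, R, S (right factor) and all their entries are hypothetical and smaller than the blocks in the notes. It multiplies two partitioned 3×3 matrices blockwise and compares the result with the ordinary product.

```python
def matmul(x, y):
    """Ordinary matrix product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]

def matadd(x, y):
    """Entrywise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(r, s)] for r, s in zip(x, y)]

def assemble(top_left, top_right, bottom_left, bottom_right):
    """Glue four conformable blocks into one matrix."""
    top = [l + r for l, r in zip(top_left, top_right)]
    bottom = [l + r for l, r in zip(bottom_left, bottom_right)]
    return top + bottom

# Left factor, partitioned as (2x2, 2x1; 1x2, 1x1). Hypothetical values.
A, B = [[1, 2], [3, 4]], [[5], [6]]
C, D = [[7, 8]], [[9]]

# Right factor, partitioned the same way so the block products are conformable.
P, Q = [[1, 0], [2, 1]], [[3], [1]]
R, S = [[0, 2]], [[4]]

left = assemble(A, B, C, D)
right = assemble(P, Q, R, S)

# Multiply block by block, treating each block like a scalar entry.
blockwise = assemble(
    matadd(matmul(A, P), matmul(B, R)),
    matadd(matmul(A, Q), matmul(B, S)),
    matadd(matmul(C, P), matmul(D, R)),
    matadd(matmul(C, Q), matmul(D, S)),
)

print(blockwise == matmul(left, right))  # True
```

The column split of the left factor (2 + 1) matches the row split of the right factor (2 + 1); that is the conformability condition the notes refer to.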

Analysis of Functions of a Single Variable a Detailed Development

LAWRENCE W. BAGGETT

University of Colorado

OCTOBER 29, 2006

For Christy

My Light

PREFACE

I have written this book primarily for serious and talented mathematics scholars, seniors or first-year graduate students, who by the time they finish their schooling should have had the opportunity to study in some detail the great discoveries of our subject. What did we know and how and when did we know it? I hope this book is useful toward that goal, especially when it comes to the great achievements of that part of mathematics known as analysis. I have tried to write a complete and thorough account of the elementary theories of functions of a single real variable and functions of a single complex variable. Separating these two subjects does not at all jive with their development historically, and to me it seems unnecessary and potentially confusing to do so. On the other hand, functions of several variables seems to me to be a very different kettle of fish, so I have decided to limit this book by concentrating on one variable at a time.

Everyone is taught (told) in school that the area of a circle is given by the formula $A = \pi r^2$. We are also told that the product of two negatives is a positive, that you can't trisect an angle, and that the square root of 2 is irrational. Students of natural sciences learn that $e^{i\pi} = -1$ and that $\sin^2 + \cos^2 = 1$. More sophisticated students are taught the Fundamental Theorem of Calculus and the Fundamental Theorem of Algebra. -

Triple Product Formula and the Subconvexity Bound of Triple Product L-Function in Level Aspect

YUEKE HU

Abstract. In this paper we derived a nice general formula for the local integrals of triple product formula whenever one of the representations has sufficiently higher level than the other two. As an application we generalized Venkatesh and Woodbury’s work on the subconvexity bound of triple product L-function in level aspect, allowing joint ramifications, higher ramifications, general unitary central characters and general special values of local epsilon factors.

1. Introduction

1.1. Triple product formula. Let $F$ be a number field. Let $\pi_i$, $i = 1, 2, 3$, be three irreducible unitary cuspidal automorphic representations, such that the product of their central characters is trivial:

\[ \prod_i w_{\pi_i} = 1. \tag{1.1} \]

Let $\Pi = \pi_1 \otimes \pi_2 \otimes \pi_3$. Then one can define the triple product L-function $L(\Pi, s)$ associated to them. It was first studied in [6] by Garrett in classical languages, where explicit integral representation was given. In particular the triple product L-function has analytic continuation and functional equation. Later on Shapiro and Rallis in [19] reformulated his work in adelic languages. In this paper we shall study the following integral representing the special value of triple product L-function (see Section 2.2 for more details):

\[
\left| \int_{Z_{\mathbb{A}} D^{*}(F) \backslash D^{*}(\mathbb{A})} f_1(g) f_2(g) f_3(g) \, dg \right|^2
= \frac{\zeta_F^2(2) \, L(\Pi, 1/2)}{8 \, L(\Pi, \mathrm{Ad}, 1)} \prod_v I_v^0(f_{1,v}, f_{2,v}, f_{3,v}).
\tag{1.2}
\]

Here $f_i \in \pi_i^D$ for a specific quaternion algebra $D$, and $\pi_i^D$ is the image of $\pi_i$ under Jacquet-Langlands correspondence. -

Variables in Mathematics Education

Susanna S. Epp

DePaul University, Department of Mathematical Sciences, Chicago, IL 60614, USA

http://www.springer.com/lncs

Abstract. This paper suggests that consistently referring to variables as placeholders is an effective countermeasure for addressing a number of the difficulties students encounter in learning mathematics. The suggestion is supported by examples discussing ways in which variables are used to express unknown quantities, define functions and express other universal statements, and serve as generic elements in mathematical discourse. In addition, making greater use of the term “dummy variable” and phrasing statements both with and without variables may help students avoid mistakes that result from misinterpreting the scope of a bound variable.

Keywords: variable, bound variable, mathematics education, placeholder.

1 Introduction

Variables are of critical importance in mathematics. For instance, Felix Klein wrote in 1908 that “one may well declare that real mathematics begins with operations with letters,”[3] and Alfred Tarski wrote in 1941 that “the invention of variables constitutes a turning point in the history of mathematics.”[5] In 1911, A. N. Whitehead expressly linked the concepts of variables and quantification to their expressions in informal English when he wrote: “The ideas of ‘any’ and ‘some’ are introduced to algebra by the use of letters. it was not till within the last few years that it has been realized how fundamental any and some are to the very nature of mathematics.”[6] There is a question, however, about how to describe the use of variables in mathematics instruction and even what word to use for them. -

Eigenvalues and Eigenvectors

Jim Lambers

MAT 605, Fall Semester 2015-16

Lecture 14 and 15 Notes

These notes correspond to Sections 4.4 and 4.5 in the text.

In order to compute the matrix exponential $e^{At}$ for a given matrix $A$, it is helpful to know the eigenvalues and eigenvectors of $A$.

Definitions and Properties

Let $A$ be an $n \times n$ matrix. A nonzero vector $x$ is called an eigenvector of $A$ if there exists a scalar $\lambda$ such that

\[ Ax = \lambda x. \]

The scalar $\lambda$ is called an eigenvalue of $A$, and we say that $x$ is an eigenvector of $A$ corresponding to $\lambda$. We see that an eigenvector of $A$ is a vector for which matrix-vector multiplication with $A$ is equivalent to scalar multiplication by $\lambda$.

Because $x$ is nonzero, it follows that if $x$ is an eigenvector of $A$, then the matrix $A - \lambda I$ is singular, where $\lambda$ is the corresponding eigenvalue. Therefore, $\lambda$ satisfies the equation

\[ \det(A - \lambda I) = 0. \]

The expression $\det(A - \lambda I)$ is a polynomial of degree $n$ in $\lambda$, and therefore is called the characteristic polynomial of $A$ (eigenvalues are sometimes called characteristic values). It follows from the fact that the eigenvalues of $A$ are the roots of the characteristic polynomial that $A$ has $n$ eigenvalues, which can repeat, and can also be complex, even if $A$ is real. However, if $A$ is real, any complex eigenvalues must occur in complex-conjugate pairs.

The set of eigenvalues of $A$ is called the spectrum of $A$, and denoted by $\lambda(A)$. This terminology explains why the largest magnitude among the eigenvalues is called the spectral radius of $A$. -
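For a 2×2 matrix the characteristic polynomial is a quadratic, so the eigenvalues can be found with the quadratic formula. The sketch below is an illustration under that restriction, not a general eigenvalue algorithm; the function name `eigenvalues_2x2` and the example matrices are hypothetical. It uses a real rotation matrix to show complex eigenvalues arriving as a conjugate pair.

```python
import cmath

def eigenvalues_2x2(a):
    """Roots of det(A - lambda*I) = lambda^2 - trace(A)*lambda + det(A)."""
    (p, q), (r, s) = a
    trace = p + s
    det = p * s - q * r
    # cmath.sqrt handles a negative discriminant, giving complex roots.
    disc = cmath.sqrt(trace * trace - 4 * det)
    return (trace + disc) / 2, (trace - disc) / 2

# Hypothetical example: rotation by 90 degrees, a real matrix with no real eigenvalues.
rotation = [[0, -1], [1, 0]]
lam1, lam2 = eigenvalues_2x2(rotation)
print(lam1, lam2)  # the conjugate pair i and -i

# Spectral radius: the largest magnitude among the eigenvalues.
spectral_radius = max(abs(lam1), abs(lam2))
print(spectral_radius)  # 1.0
```

For larger matrices the roots of the characteristic polynomial are rarely computed this way in practice; the quadratic case is used here only because it makes the definitions concrete.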

Lecture 13: Simple Linear Regression in Matrix Format

11:55 Wednesday 14th October, 2015

See updates and corrections at http://www.stat.cmu.edu/~cshalizi/mreg/

36-401, Section B, Fall 2015

13 October 2015

Contents

1 Least Squares in Matrix Form
1.1 The Basic Matrices
1.2 Mean Squared Error
1.3 Minimizing the MSE
2 Fitted Values and Residuals
2.1 Residuals
2.2 Expectations and Covariances
3 Sampling Distribution of Estimators
4 Derivatives with Respect to Vectors
4.1 Second Derivatives
4.2 Maxima and Minima
5 Expectations and Variances with Vectors and Matrices
6 Further Reading

So far, we have not used any notions, or notation, that goes beyond basic algebra and calculus (and probability). This has forced us to do a fair amount of book-keeping, as it were by hand. This is just about tolerable for the simple linear model, with one predictor variable. It will get intolerable if we have multiple predictor variables. Fortunately, a little application of linear algebra will let us abstract away from a lot of the book-keeping details, and make multiple linear regression hardly more complicated than the simple version.

These notes will not remind you of how matrix algebra works. However, they will review some results about calculus with matrices, and about expectations and variances with vectors and matrices.

Throughout, bold-faced letters will denote matrices, as $\mathbf{a}$ as opposed to a scalar $a$.

1 Least Squares in Matrix Form

Our data consists of $n$ paired observations of the predictor variable $X$ and the response variable $Y$, i.e., $(x_1, y_1), \ldots, (x_n, y_n)$. -

Algebra of Linear Transformations and Matrices Math 130 Linear Algebra

Math 130 Linear Algebra

D Joyce, Fall 2013

We've looked at the operations of addition and scalar multiplication on linear transformations and used them to define addition and scalar multiplication on matrices. For a given basis $\beta$ on $V$ and another basis $\gamma$ on $W$, we have an isomorphism $\phi_\beta^\gamma : \mathrm{Hom}(V, W) \xrightarrow{\ \simeq\ } M_{m \times n}$ of vector spaces which assigns to a linear transformation $T : V \to W$ its standard matrix $[T]_\beta^\gamma$.

We also have matrix multiplication which corresponds to composition of linear transformations. If $A$ is the standard matrix for a transformation $S$, and $B$ is the standard matrix for a transformation $T$, then we defined multiplication of matrices so that the product $AB$ is the standard matrix for $S \circ T$.

Then the two compositions are

\[
BA = \begin{pmatrix} 0 & -1 \\ 1 & 0 \end{pmatrix}
     \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}
   = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}
\]
\[
AB = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}
     \begin{pmatrix} 0 & -1 \\ 1 & 0 \end{pmatrix}
   = \begin{pmatrix} 0 & -1 \\ -1 & 0 \end{pmatrix}
\]

The products aren't the same. You can perform these on physical objects. Take a book. First rotate it 90° then flip it over. Start again but flip first then rotate 90°. The book ends up in different orientations.

Matrix multiplication is associative. Although it's not commutative, it is associative. That's because it corresponds to composition of functions, and that's associative. Given any three functions $f$, $g$, and $h$, we'll show $(f \circ g) \circ h = f \circ (g \circ h)$ by showing the two sides have the same values for all $x$.

\[ ((f \circ g) \circ h)(x) = (f \circ g)(h(x)) = f(g(h(x))) \]
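Both claims, that the two products differ and that multiplication is nevertheless associative, can be checked directly with the two matrices from the text. In this sketch the helper `matmul` and the third matrix `C` are hypothetical additions, and reading `A` as a flip and `B` as a 90° rotation is an interpretation of the book example rather than something the excerpt states.

```python
def matmul(x, y):
    """Ordinary matrix product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]

A = [[1, 0], [0, -1]]  # assumed reading: reflection across the horizontal axis
B = [[0, -1], [1, 0]]  # assumed reading: rotation by 90 degrees

print(matmul(B, A))  # [[0, 1], [1, 0]]
print(matmul(A, B))  # [[0, -1], [-1, 0]]
print(matmul(A, B) == matmul(B, A))  # False: not commutative

# Associativity, checked on one hypothetical third matrix.
C = [[2, 1], [1, 3]]
print(matmul(matmul(A, B), C) == matmul(A, matmul(B, C)))  # True
```

A single numerical check does not prove associativity; the argument through composition of functions in the text is what covers every case.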