Reduced Dilation-Erosion Perceptron for Binary Classification

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Morphological Image Processing Introduction

Morphological Image Processing: Introduction
• In many areas of knowledge, morphology deals with form and structure (biology, linguistics, social studies, etc.).
• Mathematical morphology is formulated in terms of set theory.
• Sets in mathematical morphology represent objects in an image.

Mathematical morphology is used to extract image components that are useful in the representation and description of region shape, such as:
– boundary extraction
– skeletons
– convex hull
– morphological filtering
– thinning
– pruning

It is a mathematical framework used for:
• pre-processing: noise filtering, shape simplification, ...
• enhancing object structure: skeletonization, convex hull, ...
• segmentation: watershed, ...
• quantitative description: area, perimeter, ...

Z² and Z³
Sets in mathematical morphology represent objects in an image:
– binary image (0 = white, 1 = black): each element of the set is the coordinate pair (x, y) of a pixel belonging to the object, an element of Z²
– gray-scale image: each element of the set is the coordinate pair (x, y) of a pixel belonging to the object together with its gray level, an element of Z³

Basic Set Operators
• A is a subset of B: A ⊆ B
• Union of A and B: C = A ∪ B
• Intersection of A and B: C = A ∩ B
• A and B disjoint: A ∩ B = ∅
• Complement of A: Aᶜ = {w | w ∉ A}
• Difference of A and B: A − B = {w | w ∈ A, w ∉ B}
• Reflection of A: Â = {w | w = −a for a ∈ A}
• Translation of A by the point z = (z1, z2): (A)z = {c | c = a + z, for a ∈ A}

Reflection and Translation: B̂ = {w ∈ E² : w -
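The set operators listed in this excerpt map directly onto operations on sets of pixel coordinates. The following is a minimal sketch, assuming pixels are stored as `(x, y)` tuples in Python sets; the helper names `reflect`, `translate` and `complement`, and the small sample sets, are illustrative and not taken from the slides.

```python
def reflect(A):
    """Reflection of A about the origin: {-a for a in A}."""
    return {(-x, -y) for (x, y) in A}


def translate(A, z):
    """Translation of A by the point z = (z1, z2): {a + z for a in A}."""
    z1, z2 = z
    return {(x + z1, y + z2) for (x, y) in A}


def complement(A, universe):
    """Complement of A relative to a finite universe of pixels (an assumption:
    the slides take the complement in all of Z^2, which is infinite)."""
    return universe - A


# Hypothetical sample data: a 3x3 image grid and two small pixel sets.
universe = {(x, y) for x in range(3) for y in range(3)}
A = {(0, 0), (1, 0), (1, 2)}
B = {(1, 0), (5, 5)}

union = A | B            # A ∪ B
intersection = A & B     # A ∩ B
difference = A - B       # {w | w in A, w not in B}
disjoint = A.isdisjoint(B)
```

Representing an image as a set of coordinates keeps the code close to the set-theoretic notation; array-based libraries are the usual choice for real images.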

Lecture 5: Binary Morphology

Lecture 5: Binary Morphology
© Bryan S. Morse, Brigham Young University, 1998–2000. Last modified on January 15, 2000 at 3:00 PM.

Contents
5.1 What is Mathematical Morphology?
5.2 Image Regions as Sets
5.3 Basic Binary Operations
5.3.1 Dilation
5.3.2 Erosion
5.3.3 Duality of Dilation and Erosion
5.4 Some Examples of Using Dilation and Erosion
5.5 Proving Properties of Mathematical Morphology
5.6 Hit-and-Miss
5.7 Opening and Closing
5.7.1 Opening
5.7.2 Closing
5.7.3 Properties of Opening and Closing
5.7.4 Applications of Opening and Closing

Reading: SH&B, 11.1–11.3; Castleman, 18.7.1–18.7.4

5.1 What is Mathematical Morphology?
“Morphology” can literally be taken to mean “doing things to shapes”. “Mathematical morphology” then, by extension, means using mathematical principles to do things to shapes.

5.2 Image Regions as Sets
The basis of mathematical morphology is the description of image regions as sets. For a binary image, we can consider the “on” (1) pixels to all comprise a set of values from the “universe” of pixels in the image. Throughout our discussion of mathematical morphology (or just “morphology”), when we refer to an image A, we mean the set of “on” (1) pixels in that image. The “off” (0) pixels are thus the set complement of the set of on pixels. By Aᶜ, we mean the complement of A, or the off (0) pixels.

Hit-Or-Miss Transform

Hit-or-miss transform
• Used to extract pixels with specific neighbourhood configurations from an image
• Grey-scale extensions exist
• Uses two structuring elements $B_1$ and $B_2$ to find a given foreground and background configuration, respectively:
$$\mathrm{HMT}_B(X) = \{x \mid (B_1)_x \subseteq X,\ (B_2)_x \subseteq X^c\}$$
• Can be written in terms of an intersection of two erosions:
$$\mathrm{HMT}_B(X) = \varepsilon_{B_1}(X) \cap \varepsilon_{B_2}(X^c)$$

Morphological Image Processing, Lecture 22
9.4 The hit-or-miss transformation
• Objective is to find a disjoint region (set) in an image.
• If $B$ denotes the set composed of $X$ and its background, the match/hit (or set of matches/hits) of $B$ in $A$ is
$$A \circledast B = (A \ominus X) \cap [A^c \ominus (W - X)]$$
• Generalized notation: $B = (B_1, B_2)$
• $B_1$: set formed from elements of $B$ associated with an object
• $B_2$: set formed from elements of $B$ associated with the corresponding background
[Preceding discussion: $B_1 = X$ and $B_2 = (W - X)$]
• More general definition:
$$A \circledast B = (A \ominus B_1) \cap (A^c \ominus B_2)$$
• $A \circledast B$ contains all the origin points at which, simultaneously, $B_1$ found a hit in $A$ and $B_2$ found a hit in $A^c$.

Hit-or-miss transform: simple example usages
Locate:
– Isolated foreground pixels: no neighbouring foreground pixels
– Foreground endpoints: one or zero neighbouring foreground pixels
– Multiple foreground points: pixels having more than two neighbouring foreground pixels
– Foreground contour points: pixels having at least one neighbouring background pixel

Hit-or-miss transform example
• Locating 4-connected endpoints (figure: SEs for 4-connected endpoints and the resulting hit-or-miss transform)

Hit-or-miss opening
• Objective: keep all points that fit the SE.
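The hit-or-miss transform described in this excerpt, an intersection of an erosion of the foreground by B1 and an erosion of the background by B2, can be sketched on coordinate sets as follows. This is an illustrative sketch under stated assumptions, not code from either slide deck: the function names, the finite `domain`, and the rule that pixels outside `domain` belong to neither foreground nor background are all assumptions made here.

```python
def erode(X, B, domain):
    """Erosion of X by B: points p of the domain whose translate B_p lies in X."""
    return {
        p for p in domain
        if all((p[0] + bx, p[1] + by) in X for (bx, by) in B)
    }


def hit_or_miss(X, B1, B2, domain):
    """Points where B1 fits the foreground X and B2 fits the background.

    Assumption: the background is domain - X, so configurations that reach
    outside the domain never match.
    """
    background = domain - X
    return erode(X, B1, domain) & erode(background, B2, domain)


# Hypothetical 5x5 image with one isolated pixel and one two-pixel segment.
domain = {(x, y) for x in range(5) for y in range(5)}
X = {(1, 1), (3, 3), (3, 2)}

# Structuring elements for "isolated foreground pixel":
# B1 is the origin, B2 is its 8-neighbourhood.
B1 = {(0, 0)}
B2 = {(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1)} - {(0, 0)}

isolated = hit_or_miss(X, B1, B2, domain)
print(isolated)  # {(1, 1)}
```

Only `(1, 1)` is reported: the pixels `(3, 2)` and `(3, 3)` are neighbours of each other, so B2 does not fit the background around either of them.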

Digital Image Processing

Digital Image Processing, Lecture # 11: Morphological Operations

Image Morphology: Introduction
• Morphology: a branch of biology which deals with the form and structure of animals and plants.
• Mathematical morphology: a tool for extracting image components that are useful in the representation and description of region shapes.
• The language of mathematical morphology is set theory.

Morphology: Quick Example (figure: image after segmentation; image after segmentation and morphological processing)

Introduction
Morphological image processing describes a range of image processing techniques that deal with the shape (or morphology) of objects in an image. Sets in mathematical morphology represent objects in an image, e.g. the set of all white pixels in a binary image.

Foreground: $I(p) = c$; background: $I(p) = 0$. This represents a digital image. Each square is one pixel.

Set Theory
• The set space of a binary image is $Z^2$. Each element of the set is a 2D vector whose coordinates are the (x, y) of a black (or white, depending on the convention) pixel in the image.
• The set space of a gray-level image is $Z^3$. Each element of the set is a 3D vector: (x, y) and intensity level.
NOTE: Set theory and logical operations are covered in Section 9.1, Chapter 9, 2nd Edition, DIP by Gonzalez, and Section 2.6.4, Chapter 2, 3rd Edition, DIP by Gonzalez.

Let $A$ be a set in $Z^2$.
• If $a = (a_1, a_2)$ is an element of $A$, then we write $a \in A$.
• If $a$ is not an element of $A$, we write $a \notin A$.
• Set representation: $A = \{(a_1, a_2), (a_3, a_4)\}$
• Empty or null set: $A = \emptyset$
• Subset: if every element of $A$ is also an element of another set $B$, then $A$ is said to be a subset of $B$: $A \subseteq B$
• Union: the set of all elements belonging to either $A$, $B$ or both: $C = A \cup B$
• Intersection: the set of all elements belonging to both $A$ and $B$: $D = A \cap B$
• Two sets $A$ and $B$ are said to be disjoint or mutually exclusive if they have no common element: $A \cap B = \emptyset$
• Complement: the set of elements not contained in $A$: $A^c = \{w \mid w \notin A\}$
• Difference of two sets $A$ and $B$, denoted by $A - B$, is defined as $A - B = \{w \mid w \in A,\ w \notin B\} = A \cap B^c$, i.e. -

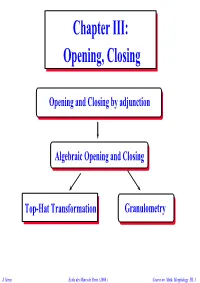

Chapter III: Opening, Closing

Chapter III: Opening, Closing
• Opening and closing by adjunction
• Algebraic opening and closing
• Top-hat transformation
• Granulometry
J. Serra, Ecole des Mines de Paris (2000), Course on Math. Morphology III.

Adjunction Opening and Closing
The problem of an inverse operator: several different sets may admit the same erosion, or the same dilate. But among all possible inverses, there always exists a smallest one (a largest one). It is obtained by composing the erosion with the adjoint dilation (or vice versa).

The mapping is called adjunction opening, and is denoted by
$$\gamma_B = \delta_B \varepsilon_B \ \text{(general case)}, \qquad X \circ B = (X \ominus B) \oplus B \ \text{($\tau$-operators)}.$$
By commuting the factors $\delta_B$ and $\varepsilon_B$ we obtain the adjunction closing
$$\varphi_B = \varepsilon_B \delta_B \ \text{(general case)}, \qquad X \bullet B = (X \oplus B) \ominus B \ \text{($\tau$-operators)}.$$
(These operators, due to G. Matheron, are sometimes called morphological.)

Properties of Adjunction Opening and Closing
Increasingness: adjunction opening and closing are increasing as products of increasing operations.

(Anti-)extensivity: by doing $Y = \delta_B(X)$, and then $X = \varepsilon_B(Y)$, in the adjunction $\delta_B(X) \subseteq Y \Leftrightarrow X \subseteq \varepsilon_B(Y)$, we see that
$$\delta_B \varepsilon_B(X) \subseteq X \subseteq \varepsilon_B \delta_B(X),$$
hence
$$\varepsilon_B(\delta_B \varepsilon_B) \subseteq \varepsilon_B \subseteq (\varepsilon_B \delta_B)\varepsilon_B \ \Rightarrow\ \varepsilon_B \delta_B \varepsilon_B = \varepsilon_B.$$

Idempotence: the erosion of the opening equals the erosion of the set itself. This results in the idempotence of $\gamma_B$ and of $\varphi_B$: $\gamma_B \gamma_B = \gamma_B$ and, $\varepsilon_B(\delta_B \varepsilon_B) = \varepsilon_B \Rightarrow \delta_B \varepsilon_B(\delta_B \varepsilon_B) = \delta_B \varepsilon_B$, i.e. -
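The properties stated in this excerpt (anti-extensivity of the opening, extensivity of the closing, idempotence of both) can be checked numerically on a small example. The sketch below assumes the translation-invariant case on finite subsets of Z², with dilation taken as Minkowski addition and erosion as its adjoint; the function names and the sample set are illustrative, not taken from the course.

```python
def dilate(X, B):
    """Dilation delta_B(X): Minkowski sum {x + b}."""
    return {(x + bx, y + by) for (x, y) in X for (bx, by) in B}


def erode(X, B):
    """Adjoint erosion eps_B(X): points p whose translate B_p lies inside X.

    Assumes B is non-empty. Any such p equals x - b0 for some x in X,
    so only those candidates are tested.
    """
    b0x, b0y = next(iter(B))
    candidates = {(x - b0x, y - b0y) for (x, y) in X}
    return {
        p for p in candidates
        if all((p[0] + bx, p[1] + by) in X for (bx, by) in B)
    }


def opening(X, B):
    """gamma_B = delta_B eps_B."""
    return dilate(erode(X, B), B)


def closing(X, B):
    """phi_B = eps_B delta_B."""
    return erode(dilate(X, B), B)


# Hypothetical data: a 3x3 block plus one isolated pixel, 2x2 structuring element.
block = {(x, y) for x in range(3) for y in range(3)}
X = block | {(10, 10)}
B = {(0, 0), (1, 0), (0, 1), (1, 1)}

opened = opening(X, B)
closed = closing(X, B)

print(opened == block)                 # True: the isolated pixel is removed
print(opened <= X <= closed)           # True: anti-extensive / extensive
print(opening(opened, B) == opened)    # True: idempotence of the opening
print(closing(closed, B) == closed)    # True: idempotence of the closing
print(erode(opened, B) == erode(X, B)) # True: eps delta eps = eps
```

The isolated pixel disappears under the opening because the 2x2 structuring element does not fit inside it, which is the usual intuition behind opening as a size filter.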

Grayscale Mathematical Morphology Václav Hlaváč

Grayscale mathematical morphology
Václav Hlaváč, Czech Technical University in Prague, Czech Institute of Informatics, Robotics and Cybernetics, 160 00 Prague 6, Jugoslávských partyzánů 1580/3, Czech Republic. http://people.ciirc.cvut.cz/hlavac, [email protected]; also Center for Machine Perception, http://cmp.felk.cvut.cz
Courtesy: Petr Matula, Petr Kodl, Jean Serra, Miroslav Svoboda

Outline of the talk: Set-function equivalence. Top-hat transform. Umbra and top of a set. Geodesic method. Ultimate erosion. Gray scale dilation, erosion. Morphological reconstruction.

A quick informal explanation
Grayscale mathematical morphology is the generalization of binary morphology for images with more gray levels than two or with voxels. The point set A ∈ E³. The first two coordinates span the function (point set) domain and the third coordinate corresponds to the function value. The concepts supremum ∨ (also the least upper bound), resp. infimum ∧ (also the greatest lower bound), play a key role here. Actually, the related operators max, resp. min, are used in computations with finite sets. Erosion (resp. dilation) of the image (with the flat structuring element) assigns to each pixel the minimal (resp. maximal) value in the chosen neighborhood of the current pixel of the input image. The structuring element (function) is a function of two variables. It influences how pixels in the neighborhood of the current pixel are taken into account. The value of the (non-flat) structuring element is added (while dilating), resp. subtracted (while eroding), when the maximum, resp. minimum, is calculated in the neighborhood.

Grayscale mathematical morphology explained via binary morphology
It is possible to introduce grayscale mathematical morphology using the already explained binary (black and white only) mathematical morphology.
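The informal description in this excerpt (minimum or maximum over a neighborhood, with the structuring-element value subtracted or added in the non-flat case) can be sketched in a few lines. This is an illustrative sketch, not code from the talk: images are nested lists, the structuring element is a dictionary from offsets to weights, and neighbors falling outside the image are simply ignored, which is an assumption about border handling.

```python
def dilate_gray(img, se):
    """Grayscale dilation: max over offsets s of img(p - s) + se(s).

    `se` maps (dr, dc) offsets to weights; all-zero weights give a flat
    structuring element. Assumes `se` contains the origin (0, 0), so at
    least one neighbor is always inside the image.
    """
    rows, cols = len(img), len(img[0])
    return [
        [
            max(
                img[r - dr][c - dc] + w
                for (dr, dc), w in se.items()
                if 0 <= r - dr < rows and 0 <= c - dc < cols
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def erode_gray(img, se):
    """Grayscale erosion: min over offsets s of img(p + s) - se(s)."""
    rows, cols = len(img), len(img[0])
    return [
        [
            min(
                img[r + dr][c + dc] - w
                for (dr, dc), w in se.items()
                if 0 <= r + dr < rows and 0 <= c + dc < cols
            )
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Hypothetical 3x3 image with one bright pixel, and a flat 3x3 structuring element.
img = [[1, 1, 1],
       [1, 9, 1],
       [1, 1, 1]]
flat = {(dr, dc): 0 for dr in (-1, 0, 1) for dc in (-1, 0, 1)}

print(dilate_gray(img, flat))  # [[9, 9, 9], [9, 9, 9], [9, 9, 9]]
print(erode_gray(img, flat))   # [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
```

With a non-flat element such as `{(0, 0): 2}`, the same functions add 2 to every pixel when dilating and subtract 2 when eroding, matching the description of the non-flat case.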

Abstract Mathematical Morphology Based on Structuring Element

Abstract Mathematical morphology based on structuring element: Application to morpho-logic
Marc Aiguier (1), Isabelle Bloch (2) and Ramón Pino-Pérez (3)
1. MICS, CentraleSupelec, Université Paris Saclay, France [email protected]
2. LTCI, Télécom Paris, Institut Polytechnique de Paris, France [email protected]
3. Departemento de Matematicas, Facultad de Ciencias, Universidad de Los Andes, Mérida, Venezuela [email protected]
arXiv:2005.01715v1 [math.CT] 4 May 2020

Abstract. A general definition of mathematical morphology has been defined within the algebraic framework of complete lattice theory. In this framework, dealing with deterministic and increasing operators, a dilation (respectively an erosion) is an operation which is distributive over supremum (respectively infimum). From this simple definition of dilation and erosion, we cannot say much about the properties of them. However, when they form an adjunction, many important properties can be derived such as monotonicity, idempotence, and extensivity or anti-extensivity of their composition, preservation of infimum and supremum, etc. Mathematical morphology has been first developed in the setting of sets, and then extended to other algebraic structures such as graphs, hypergraphs or simplicial complexes. For all these algebraic structures, erosion and dilation are usually based on structuring elements. The goal is then to match these structuring elements on given objects either to dilate or erode them. One of the advantages of defining erosion and dilation based on structuring elements is that these operations are adjoint. Based on this observation, this paper proposes to define, at the abstract level of category theory, erosion and dilation based on structuring elements.

Computer Vision – A

CS4495 Computer Vision – A. Bobick: Morphology
CS 4495 Computer Vision: Binary images and Morphology. Aaron Bobick, School of Interactive Computing.

Administrivia
• PS7 – read about it on Piazza
• PS5 – grades are out.
• Final – Dec 9
• Study guide will be out by Thursday, hopefully sooner.

Binary Image Analysis (slides: Linda Shapiro)
Operations that produce or process binary images, typically 0’s and 1’s:
• 0 represents background
• 1 represents foreground
00010010001000
00011110001000
00010010001000

Binary image analysis is used in a number of practical applications:
• Part inspection
• Manufacturing
• Document processing

What kinds of operations?
• Separate objects from background and from one another
• Aggregate pixels for each object
• Compute features for each object

Example: Red blood cell image
• Many blood cells are separate objects
• Many touch – bad!
• Salt and pepper noise from thresholding
• How usable is this data?

Results of analysis
• 63 separate objects detected
• Single cells have area about 50
• Noise spots
• Gobs of cells

Useful Operations
• Thresholding a gray-scale image
• Determining good thresholds
• Connected components analysis
• Binary mathematical morphology
• All sorts of feature extractors, statistics (area, centroid, circularity, …)

Thresholding
• Background is black
• Healthy cherry is bright
• Bruise is medium dark
• Histogram shows two cherry regions (black background has been removed)
(Figure: histogram of pixel counts against grayscale values from 0 to 255.)

Histogram-Directed Thresholding
• How can we use a histogram to separate an image into 2 (or several) different regions? Is there a single clear threshold? 2? 3?
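Two of the operations named in these slides, thresholding a gray-scale image and connected components analysis with a per-object area, can be sketched as follows. This is a minimal illustration, not the course's code: the fixed threshold, the choice of 4-connectivity, the function names and the tiny sample image are all assumptions.

```python
from collections import deque


def threshold(gray, t):
    """Binary image: 1 (foreground) where the gray value exceeds t, else 0."""
    return [[1 if v > t else 0 for v in row] for row in gray]


def connected_components(binary):
    """Label 4-connected foreground regions with a breadth-first search.

    Returns the label image (0 = background) and a dict label -> area.
    """
    rows, cols = len(binary), len(binary[0])
    labels = [[0] * cols for _ in range(rows)]
    areas = {}
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if binary[r][c] != 1 or labels[r][c] != 0:
                continue
            next_label += 1
            labels[r][c] = next_label
            queue = deque([(r, c)])
            area = 0
            while queue:
                y, x = queue.popleft()
                area += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and binary[ny][nx] == 1 and labels[ny][nx] == 0:
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            areas[next_label] = area
    return labels, areas


# Hypothetical gray-scale image with one bright blob and one bright noise pixel.
gray = [
    [10, 200, 210, 12],
    [11, 205, 13, 14],
    [12, 10, 11, 220],
]
binary = threshold(gray, 128)
labels, areas = connected_components(binary)
print(areas)  # {1: 3, 2: 1}
```

An area filter on `areas` is one simple way to separate noise spots from real objects, in the spirit of the blood-cell example where single cells have a typical area.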

Morphological Operations on Matrix-Valued Images

Morphological Operations on Matrix-Valued Images
Bernhard Burgeth, Martin Welk, Christian Feddern, and Joachim Weickert
Mathematical Image Analysis Group, Faculty of Mathematics and Computer Science, Bldg. 27, Saarland University, 66041 Saarbrücken, Germany
{burgeth,welk,feddern,weickert}@mia.uni-saarland.de
http://www.mia.uni-saarland.de

Abstract. The output of modern imaging techniques such as diffusion tensor MRI or the physical measurement of anisotropic behaviour in materials such as the stress-tensor consists of tensor-valued data. Hence adequate image processing methods for shape analysis, skeletonisation, denoising and segmentation are in demand. The goal of this paper is to extend the morphological operations of dilation, erosion, opening and closing to the matrix-valued setting. We show that naive approaches such as componentwise application of scalar morphological operations are unsatisfactory, since they violate elementary requirements such as invariance under rotation. This leads us to study an analytic and a geometric alternative which are rotation invariant. Both methods introduce novel non-component-wise definitions of a supremum and an infimum of a finite set of matrices. The resulting morphological operations incorporate information from all matrix channels simultaneously and preserve positive definiteness of the matrix field. Their properties and their performance are illustrated by experiments on diffusion tensor MRI data.

Keywords: mathematical morphology, dilation, erosion, matrix-valued imaging, DT-MRI

1 Introduction
Modern data and image processing encompasses more and more the analysis and processing of matrix-valued data. For instance, diffusion tensor magnetic resonance imaging (DT-MRI), a novel medical image acquisition technique, measures the diffusion properties of water molecules in tissue.

Minimal Representations for Translation-Invariant Set

Minimal Representations for Translation-Invariant Set Mappings by Mathematical Morphology
Author(s): Gerald Jean Francis Banon and Junior Barrera
Source: SIAM Journal on Applied Mathematics, Vol. 51, No. 6 (Dec., 1991), pp. 1782-1798
Published by: Society for Industrial and Applied Mathematics
Stable URL: http://www.jstor.org/stable/2102371
© 1991 Society for Industrial and Applied Mathematics

Abstract. In his 1975 book, Matheron introduced a pair of dual representations, written in terms of erosions and dilations, for increasing translation invariant set mappings, using the concept of a kernel. Based on hit-miss topology, Maragos, in his 1985 Ph.D. -

Applications of Set Theory in Digital Image Processing-IJAERD

International Journal of Advance Engineering and Research Development, Volume 4, Issue 11, November 2017. e-ISSN (O): 2348-4470, p-ISSN (P): 2348-6406, Scientific Journal of Impact Factor (SJIF): 4.72

Applications of Set Theory in Digital Image Processing
Suresha M, Department of Computer Science, Kuvempu University, Karnataka, India.

Abstract: Set theory is an important language and tool in digital image processing. Its fundamental ideas run through computer science from theory to practice, and many ideas and methodologies in computer science are inspired by set theory. One of those ideas, morphological image processing, is an application of set theory. Basic morphological algorithms and their application in fingerprint identification are discussed in this chapter.

Keywords: Set Theory, Morphological Operations, Finger Print, Recognition.

1. Morphological Image Processing
The word morphology commonly denotes a branch of biology that deals with the form and structure of animals and plants. The same word is used here in the context of mathematical morphology as a tool for extracting image components. Several important concepts in mathematical morphology are explained and illustrated here. Many of the ideas introduced here can be formulated in terms of n-dimensional Euclidean space, Eⁿ. However, the discussion here is restricted to binary images (refer to Appendix I for image types) whose elements are elements of Z². The language of mathematical morphology is set theory. As such, morphology offers a unified and powerful approach to numerous image processing problems. Sets in mathematical morphology represent objects in an image. For example, the set of all white pixels in a binary image is a complete morphological description of the image.

Chapter 7 Mathematical Morphology with Noncommutative Symmetry Groups Jos B.T.M

Chapter 7: Mathematical Morphology with Noncommutative Symmetry Groups
Jos B.T.M. Roerdink, Centre for Mathematics and Computer Science, Amsterdam, The Netherlands

Mathematical morphology as originally developed by Matheron and Serra is a theory of set mappings, modeling binary image transformations, that are invariant under the group of Euclidean translations. Because this framework turns out to be too restricted for many practical applications, various generalizations have been proposed. First, the translation group may be replaced by an arbitrary commutative group. Second, one may consider more general object spaces, such as the set of all convex subsets of the plane or the set of gray-level functions on the plane, requiring a formulation in terms of complete lattices. So far, symmetry properties have been incorporated by assuming that the allowed image transformations are invariant under a certain commutative group of automorphisms on the lattice. In this chapter we embark on another generalization of mathematical morphology by dropping the assumption that the invariance group is commutative. To this end we consider an arbitrary homogeneous space (the plane with the Euclidean translation group is one example, the sphere with the rotation group another), that is, a set 𝒳 on which a transitive but not necessarily commutative transformation group Γ is defined. As our object space we then take the Boolean algebra 𝒫(𝒳) of all subsets of this homogeneous space. First we consider the case in which the transformation group is simply transitive or, equivalently, the basic set 𝒳 is itself a group, so that we may study the Boolean algebra 𝒫(Γ).