Estimation of Small Failure Probabilities in High Dimensions by Subset Simulation

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Verifiable Semantic Difference Languages

Verifiable Semantic Difference Languages Thibaut Girka David Mentré Yann Régis-Gianas Mitsubishi Electric R&D Centre Mitsubishi Electric R&D Centre Univ Paris Diderot, Sorbonne Paris Europe Europe Cité, IRIF/PPS, UMR 8243 CNRS, PiR2, Rennes, France Rennes, France INRIA Paris-Rocquencourt Paris, France ABSTRACT 1 INTRODUCTION Program differences are usually represented as textual differences Let x be a strictly positive natural number. Consider the following on source code with no regard to its syntax or its semantics. In two (almost identical) imperative programs: this paper, we introduce semantic-aware difference languages. A difference denotes a relation between program reduction traces. A s = 0 ; 1 s = 0 ; 1 difference language for the toy imperative programming language d = x ; 2 d = x − 1 ; 2 while (d>0) { 3 while (d>0) { 3 Imp is given as an illustration. i f (x%d== 0) 4 i f (x%d== 0) 4 To certify software evolutions, we want to mechanically verify s = s + d ; 5 s = s + d ; 5 − − that a difference correctly relates two given programs. Product pro- d = d 1 ; 6 d = d 1 ; 6 } 7 } 7 grams and correlating programs are effective proof techniques for s = s − x 8 8 relational reasoning. A product program simulates, in the same programming language as the compared programs, a well-chosen The program P1 (on the left) stores in s the sum of the proper interleaving of their executions to highlight a specific relation be- divisors of the integer x. To that end, it iterates over the integers tween their reduction traces. While this approach enables the use from x to 1 using the variable d and accumulates in the variable s of readily-available static analysis tools on the product program, it the values of d that divide x. -

An Introduction to Programming in Simula

An Introduction to Programming in Simula Rob Pooley This document, including all parts below hyperlinked directly to it, is copyright Rob Pooley ([email protected]). You are free to use it for your own non-commercial purposes, but may not copy it or reproduce all or part of it without including this paragraph. If you wish to use it for gain in any manner, you should contact Rob Pooley for terms appropriate to that use. Teachers in publicly funded schools, universities and colleges are free to use it in their normal teaching. Anyone, including vendors of commercial products, may include links to it in any documentation they distribute, so long as the link is to this page, not any sub-part. This is an .pdf version of the book originally published by Blackwell Scientific Publications. The copyright of that book also belongs to Rob Pooley. REMARK: This document is reassembled from the HTML version found on the web: https://web.archive.org/web/20040919031218/http://www.macs.hw.ac.uk/~rjp/bookhtml/ Oslo 20. March 2018 Øystein Myhre Andersen Table of Contents Chapter 1 - Begin at the beginning Basics Chapter 2 - And end at the end Syntax and semantics of basic elements Chapter 3 - Type cast actors Basic arithmetic and other simple types Chapter 4 - If only Conditional statements Chapter 5 - Would you mind repeating that? Texts and while loops Chapter 6 - Correct Procedures Building blocks Chapter 7 - File FOR future reference Simple input and output using InFile, OutFile and PrintFile Chapter 8 - Item by Item Item oriented reading and writing and for loops Chapter 9 - Classes as Records Chapter 10 - Make me a list Lists 1 - Arrays and simple linked lists Reference comparison Chapter 11 - Like parent like child Sub-classes and complex Boolean expressions Chapter 12 - A Language with Character Character handling, switches and jumps Chapter 13 - Let Us See what We Can See Inspection and Remote Accessing Chapter 14 - Side by Side Coroutines Chapter 15 - File For Immediate Use Direct and Byte Files Chapter 16 - With All My Worldly Goods.. -

Numerical Models Show Coral Reefs Can Be Self -Seeding

MARINE ECOLOGY PROGRESS SERIES Vol. 74: 1-11, 1991 Published July 18 Mar. Ecol. Prog. Ser. Numerical models show coral reefs can be self -seeding ' Victorian Institute of Marine Sciences, 14 Parliament Place, Melbourne, Victoria 3002, Australia Australian Institute of Marine Science, PMB No. 3, Townsville MC, Queensland 4810, Australia ABSTRACT: Numerical models are used to simulate 3-dimensional circulation and dispersal of material, such as larvae of marine organisms, on Davies Reef in the central section of Australia's Great Barner Reef. Residence times on and around this reef are determined for well-mixed material and for material which resides at the surface, sea bed and at mid-depth. Results indcate order-of-magnitude differences in the residence times of material at different levels in the water column. They confirm prevlous 2- dimensional modelling which indicated that residence times are often comparable to the duration of the planktonic larval life of many coral reef species. Results reveal a potential for the ma~ntenanceof local populations of vaiious coral reef organisms by self-seeding, and allow reinterpretation of the connected- ness of the coral reef ecosystem. INTRODUCTION persal of particles on reefs. They can be summarised as follows. Hydrodynamic experiments of limited duration Circulation around the reefs is typified by a complex (Ludington 1981, Wolanski & Pickard 1983, Andrews et pattern of phase eddies (Black & Gay 1987a, 1990a). al. 1984) have suggested that flushing times on indi- These eddies and other components of the currents vidual reefs of Australia's Great Barrier Reef (GBR) are interact with the reef bathymetry to create a high level relatively short in comparison with the larval life of of horizontal mixing on and around the reefs (Black many different coral reef species (Yamaguchi 1973, 1988). -

Object-Oriented Programming in the Beta Programming Language

OBJECT-ORIENTED PROGRAMMING IN THE BETA PROGRAMMING LANGUAGE OLE LEHRMANN MADSEN Aarhus University BIRGER MØLLER-PEDERSEN Ericsson Research, Applied Research Center, Oslo KRISTEN NYGAARD University of Oslo Copyright c 1993 by Ole Lehrmann Madsen, Birger Møller-Pedersen, and Kristen Nygaard. All rights reserved. No part of this book may be copied or distributed without the prior written permission of the authors Preface This is a book on object-oriented programming and the BETA programming language. Object-oriented programming originated with the Simula languages developed at the Norwegian Computing Center, Oslo, in the 1960s. The first Simula language, Simula I, was intended for writing simulation programs. Si- mula I was later used as a basis for defining a general purpose programming language, Simula 67. In addition to being a programming language, Simula1 was also designed as a language for describing and communicating about sys- tems in general. Simula has been used by a relatively small community for many years, although it has had a major impact on research in computer sci- ence. The real breakthrough for object-oriented programming came with the development of Smalltalk. Since then, a large number of programming lan- guages based on Simula concepts have appeared. C++ is the language that has had the greatest influence on the use of object-oriented programming in industry. Object-oriented programming has also been the subject of intensive research, resulting in a large number of important contributions. The authors of this book, together with Bent Bruun Kristensen, have been involved in the BETA project since 1975, the aim of which is to develop con- cepts, constructs and tools for programming. -

The Birth of Simula

THE BIRTH OF SIMULA Stein Krogdahl Department of Informatics, University of Oslo, Norway; [email protected] Abstract: When designing Simula, Ole-Johan Dahl and Kristen Nygaard introduced the basic concepts of what later became object-orientation, which still, 35 years later, has a profound impact on computing. This paper looks at the background for the Simula project, the way it developed over time, and the reason it could became so successful. Key words: Computing history, programming languages, Simula 1. INTRODUCTION On many occasions, people have told the history of how the programming language Simula came into being. Perhaps the foremost of these was the paper on Simula at the "History of Programming Languages" conference in 1978 [5] by Ole-Johan Dahl (OJD) and Kristen Nygaard (KN) themselves. However, people have given many other accounts, e.g. one by J.R. Holmevik [9] in 1994 and one by OJD [2] in 2001. Most of these papers provided chronological accounts of what happened during the years when OJD and KN developed the two Simula languages, first the special purpose simulation language Simula (now usually referred to as Simula 1) and then the general-purpose language Simula 67. The latter is now officially renamed "Simula", but to make a clear distinction, we shall refer to it here as Simula 67. This paper will take a slightly different view in that it will first give a rather short chronological overview of what happened when they developed the Simula languages during the years 1961 to 1967. After that, we will look at different aspects of Simula 67, and try to find where they originated, when 262 Stein Krogdahl they came into the development of the Simula languages, and how and why they got their final form in the language. -

Combined Clean Questions/Answers for Chapter 9

Review questions for Chapter 9 Answer first, then check at the end. True/False 1. A compiler translates a high-level language program into the corresponding program in machine code. 2. An interpreter is a simulator that executes high-level language code directly. 3. A compiler and an interpreter both accept a program in a high-level language as input. 4. A compiler and an interpreter produce the same output. 5. Bytecode is a standard machine language into which Java source code is compiled. 6. The Java Virtual Machine is a hypothetical computer that executes Bytecode. 7. Boolean expressions are used to make decisions in a high-level language. 8. Strong typing is a mechanism by which a high-level program is entered into a computer. 9. A variable declaration associates the variable name with a data. 10. A declaration is an example of a control structure. 11. An IF statement is an example of a control structure 12. A parameter is a mechanism that allows data to be passed into a subprogram. 13. Clicking a mouse is an example of asynchronous processing. 14. A loop can be nested within a selection statement, but a selection statement cannot be nested within a loop. 15. Encapsulation is a language feature that enforces information hiding. 16. A class as a language construct is a pattern for an object. 17. Instantiation is the process of creating an object from a class. 18. Polymorphism is the ability of a language to have duplicate method names in an inheritance hierarchy and to apply the method that is appropriate for the object to which the method is applied. -

Organization of Programming Languages

CMSC 330: Organization of Programming Languages A Brief History of Programming Languages CMSC330 Fall 2020 Babylon • Founded roughly 4000 years ago – Located near the Euphrates River, 56 miles south of Baghdad, Iraq • Historically influential in ancient western world • Cuneiform writing system, written on clay tablets – Some of those tablets survive to this day – Those from Hammurabi dynasty (1800-1600 BC) include mathematical calculations • (Also known for Code of Hammurabi, an early legal code) A Babylonian Algorithm A [rectangular] cistern. The height is 3, 20, and a volume of 27, 46, 40 has been excavated. The length exceeds the width by 50. You should take the reciprocal of the height, 3, 20, obtaining 18. Multiply this by the volume, 27, 46, 40, obtaining 8, 20. Take half of 50 and square it, obtaining 10, 25. Add 8, 20, and you get 8, 30, 25 The square root is 2, 55. Make two copies of this, adding [25] to the one and subtracting from the other. You find that 3, 20 [i.e., 3 1/3] is the length and 2, 30 [i.e., 2 1/2] is the width. This is the procedure. – Donald E. Knuth, Ancient Babylonian Algorithms, CACM July 1972 The number n, m represents n*(60k) + m*(60k-1) for some k More About Algorithms • Euclid’s Algorithm (Alexandria, Egypt, 300 BC) – Appeared in Elements • See http://aleph0.clarku.edu/~djoyce/elements/bookVII/propVII2.html – Computes gcd of two integers let rec gcd a b = if b = 0 then a else gcd b (a mod b) • Al-Khwarizmi (Baghdad, Iraq, 780-850 AD) – Al-Khwarizmi Concerning the Hindu Art of Reckoning – Translated into -

Partial History of Programming Languages � Based on Slides Originally Published by Thomas J

15-292 History of Computing Partial History of Programming Languages ! Based on slides originally published by Thomas J. Cortina in 2004 for a course at Stony Brook University. Revised in 2013 by Thomas J. Cortina for a computing history course at Carnegie Mellon University. The first assembler l Assembler - a computer program for translating assembly language into executable machine code l Example: ADD R1, R2, R3 0110000100100011 l The EDSAC programming system was based on a subroutine library l commonly used functions that could be used to build all sorts of more complex programs l the first version, Initial Orders 1, was devised by David Wheeler, then a research student, in 1949 l Team published “The Preparation of Programs for an Electronic Digital Computer” l the only programming textbook then available l computers today still use Cambridge model for subroutines library The first compiler l A compiler is a computer program that translates a computer program written in one computer language (the source language) into a program written in another computer language (the target language). l Typically, the target language is assembly language l Assembler may then translate assembly language into machine code l Machine code are directions a computer can understand on the lowest hardware level (1s & 0s) l A-0 is a programming language for the UNIVAC I or II, using three- address code instructions for solving mathematical problems. l A-0 was the first language for which a compiler was developed. l It was produced by Grace Hopper's team at -

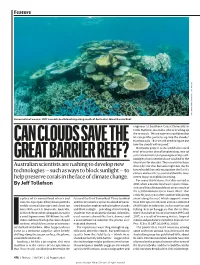

Can Clouds Save the Great Barrier Reef?

Feature JUERGEN FREUND/NATURE PICTURE LIBRARY FREUND/NATURE JUERGEN A marine heat wave in 2017 caused coral bleaching along much of Australia’s Great Barrier Reef. engineer at Southern Cross University in Coffs Harbour, Australia, who is heading up the research. “We are now very confident that we can get the particles up into the clouds,” CAN CLOUDS SAVE THE Harrison says. “But we still need to figure out how the clouds will respond.” Harrison’s project is the world’s first field trial of marine cloud brightening, one of several controversial geoengineering tech- GREAT BARRIER REEF? nologies that scientists have studied in the laboratory for decades. The research has been Australian scientists are rushing to develop new driven by fear that humans might one day be forced to deliberately manipulate the Earth’s technologies — such as ways to block sunlight — to climate and weather systems to blunt the most help preserve corals in the face of climate change. severe impacts of global warming. For many Australians, that day arrived in By Jeff Tollefson 2017, when a marine heat wave spurred mas- sive coral bleaching and death across much of the 2,300-kilometre Great Barrier Reef. That crisis hit just a year after another bleaching n place of its normal load of cars and future of the Great Barrier Reef. Three-hundred event along the reef, which supports more vans, the repurposed ferry boat sported a and twenty nozzles spewed a cloud of nano- than 600 species of coral and an estimated mobile science laboratory and a large fan sized droplets engineered to brighten clouds 64,000 jobs in industries such as tourism and on its deck as it left Townsville, Australia, and block sunlight — providing a bit of cooling fishing. -

Advanced Programming Language Design (Raphael A. Finkel)

See discussions, stats, and author profiles for this publication at: https://www.researchgate.net/publication/220692467 Advanced programming language design. Book · January 1996 Source: DBLP CITATIONS READS 41 3,040 1 author: Raphael Finkel University of Kentucky 122 PUBLICATIONS 6,757 CITATIONS SEE PROFILE All content following this page was uploaded by Raphael Finkel on 16 December 2013. The user has requested enhancement of the downloaded file. ADVANCED PROGRAMMING LANGUAGE DESIGN Raphael A. Finkel ❖ UNIVERSITY OF KENTUCKY ▲ ▼▼ Addison-Wesley Publishing Company Menlo Park, California · Reading, Massachusetts New York · Don Mills, Ontario · Harlow, U.K. · Amsterdam Bonn · Paris · Milan · Madrid · Sydney · Singapore · Tokyo Seoul · Taipei · Mexico City · San Juan, Puerto Rico hhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhh On-line edition copyright 1996 by Addison-Wesley Publishing Company. Permission is granted to print or photo- copy this document for a fee of $0.02 per page, per copy, payable to Addison-Wesley Publishing Company. All other rights reserved. Acquisitions Editor: J. Carter Shanklin Proofreader: Holly McLean-Aldis Editorial Assistant: Christine Kulke Text Designer: Peter Vacek, Eigentype Senior Production Editor: Teri Holden Film Preparation: Lazer Touch, Inc. Copy Editor: Nick Murray Cover Designer: Yvo Riezebos Manufacturing Coordinator: Janet Weaver Printer: The Maple-Vail Book Manufacturing Group Composition and Film Coordinator: Vivian McDougal Copyright 1996 by Addison-Wesley Publishing Company All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or any other media embodiments now known, or hereafter to become known, without the prior written permission of the publisher. -

Objectives 30

Lehigh Preserve Institutional Repository A study of the simula 67 language. Tanhauser, Andrew Joseph 1984 Find more at https://preserve.lib.lehigh.edu/ This document is brought to you for free and open access by Lehigh Preserve. It has been accepted for inclusion by an authorized administrator of Lehigh Preserve. For more information, please contact [email protected]. A STUDY OF THE SIMULA 67 LANGUAGE by Andrew Joseph Tanhauser A Thesis Presented to the Graduate Committee of Lehigh University in Candidacy for the Degree of Master of Science in Computing'N1 Science Lehigh University 1984 ProQuest Number: EP76467 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. uest ProQuest EP76467 Published by ProQuest LLC (2015). Copyright of the Dissertation is held by the Author. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code Microform Edition © ProQuest LLC. ProQuest LLC. 789 East Eisenhower Parkway P.O. Box 1346 Ann Arbor, Ml 48106-1346 This thesis is accepted and approved in partia.1 fulfillment of the requirements for the degree of Master of Science. Date Professor in Charge Head of Division il TABLE OF CONTENTS Abstract 1 I. SIMULA I A. History/Background 2 B. Development Stages 3 C. Development Process 6 D. A Detailed SIMULA Example 16 II. -

Evolution of the Major Programming Languages Genealogy of Common Languages Zuse’S Plankalkül

CHAPTER 2 Evolution of the Major Programming Languages Genealogy of Common Languages Zuse’s Plankalkül • Designed in 1945, but not published until 1972 • Never implemented • Advanced data structures • floating point, arrays, records • Invariants Plankalkül Syntax • An assignment statement to assign the expression A[4] + 1 to A[5] | A + 1 => A V | 4 5 (subscripts) S | 1.n 1.n (data types) Minimal Hardware Programming: Pseudocodes • What was wrong with using machine code? • Poor readability • Poor modifiability • Expression coding was tedious • Machine deficiencies--no indexing or floating point Pseudocodes: Short Code • Short Code developed by Mauchly in 1949 for BINAC computers • Expressions were coded, left to right • Example of operations: 01 – 06 abs value 1n (n+2)nd power 02 ) 07 + 2n (n+2)nd root 03 = 08 pause 4n if <= n 04 / 09 ( 58 print and tab Pseudocodes: Speedcoding • Speedcoding developed by Backus in 1954 for IBM 701 • Pseudo ops for arithmetic and math functions • Conditional and unconditional branching • Auto-increment registers for array access • Slow! • Only 700 words left for user program Pseudocodes: Related Systems • The UNIVAC Compiling System • Developed by a team led by Grace Hopper • Pseudocode expanded into machine code • David J. Wheeler (Cambridge University) • developed a method of using blocks of re-locatable addresses to solve the problem of absolute addressing IBM 704 and Fortran • Fortran 0: 1954 - not implemented • Fortran I:1957 • Designed for the new IBM 704, which had index registers and floating point