A Foundation for Inductive Reasoning in Harnessing the Potential of Big Data

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


1.2. INDUCTIVE REASONING Much Mathematical Discovery Starts with Inductive Reasoning – the Process of Reaching General Conclus

1.2. INDUCTIVE REASONING. Much mathematical discovery starts with inductive reasoning – the process of reaching general conclusions, called conjectures, through the examination of particular cases and the recognition of patterns. These conjectures are then more formally proved using deductive methods, which will be discussed in the next section. Below we look at three examples that use inductive reasoning. Number Patterns: Suppose you had to predict the sixth number in the following sequence: 1, -3, 6, -10, 15, ? How would you proceed with such a question? The trick, it seems, is to discern a specific pattern in the given sequence of numbers. This kind of approach is a classic example of inductive reasoning. By identifying a rule that generates all five numbers in this sequence, your hope is to establish a pattern and be in a position to predict the sixth number with confidence. So can you do it? Try to work out this number before continuing. One fact is immediately clear: the numbers alternate sign from positive to negative. We thus expect the answer to be a negative number since it follows 15, a positive number. On closer inspection, we also realize that the difference between the magnitude (or absolute value) of successive numbers increases by 1 each time: 3 – 1 = 2, 6 – 3 = 3, 10 – 6 = 4, 15 – 10 = 5, … We have then found the rule we sought for generating the next number in this sequence. The sixth number should be -21 since the difference between 15 and 21 is 6 and we had already determined that the answer should be negative. -
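The rule conjectured in this excerpt (signs alternate, and the gap between successive magnitudes grows by 1) can be checked mechanically. The following is a minimal sketch, not part of the excerpted text; the function name `next_term` is a hypothetical choice, and the code only extrapolates when the given terms actually fit the conjectured pattern.

```python
def next_term(seq):
    """Extrapolate one term, assuming alternating signs and
    magnitude gaps that grow by exactly 1 (the conjectured rule)."""
    mags = [abs(x) for x in seq]
    gaps = [b - a for a, b in zip(mags, mags[1:])]

    # Verify the conjecture on the observed terms before extrapolating.
    if any(a * b >= 0 for a, b in zip(seq, seq[1:])):
        raise ValueError("signs do not alternate")
    if any(g2 - g1 != 1 for g1, g2 in zip(gaps, gaps[1:])):
        raise ValueError("magnitude gaps do not grow by 1")

    next_mag = mags[-1] + gaps[-1] + 1   # next gap is one larger
    sign = -1 if seq[-1] > 0 else 1      # flip the sign of the last term
    return sign * next_mag


print(next_term([1, -3, 6, -10, 15]))  # -21
```

As with any inductive conjecture, agreement with the first five terms does not prove that the rule holds for the whole sequence; it only makes -21 the prediction consistent with the observed pattern.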

There Is No Pure Empirical Reasoning

There Is No Pure Empirical Reasoning 1. Empiricism and the Question of Empirical Reasons Empiricism may be defined as the view that there is no a priori justification for any synthetic claim. Critics object that empiricism cannot account for all the kinds of knowledge we seem to possess, such as moral knowledge, metaphysical knowledge, mathematical knowledge, and modal knowledge. In some cases, empiricists try to account for these types of knowledge; in other cases, they shrug off the objections, happily concluding, for example, that there is no moral knowledge, or that there is no metaphysical knowledge. But empiricism cannot shrug off just any type of knowledge; to be minimally plausible, empiricism must, for example, at least be able to account for paradigm instances of empirical knowledge, including especially scientific knowledge. Empirical knowledge can be divided into three categories: (a) knowledge by direct observation; (b) knowledge that is deductively inferred from observations; and (c) knowledge that is non-deductively inferred from observations, including knowledge arrived at by induction and inference to the best explanation. Category (c) includes all scientific knowledge. This category is of particular import to empiricists, many of whom take scientific knowledge as a sort of paradigm for knowledge in general; indeed, this forms a central source of motivation for empiricism. Thus, if there is any kind of knowledge that empiricists need to be able to account for, it is knowledge of type (c). I use the term “empirical reasoning” to refer to the reasoning involved in acquiring this type of knowledge – that is, to any instance of reasoning in which (i) the premises are justified directly by observation, (ii) the reasoning is non-deductive, and (iii) the reasoning provides adequate justification for the conclusion. -

A Philosophical Treatise on the Connection of Scientific Reasoning

mathematics Review A Philosophical Treatise on the Connection of Scientific Reasoning with Fuzzy Logic Evangelos Athanassopoulos 1 and Michael Gr. Voskoglou 2,* 1 Independent Researcher, Giannakopoulou 39, 27300 Gastouni, Greece; [email protected] 2 Department of Applied Mathematics, Graduate Technological Educational Institute of Western Greece, 22334 Patras, Greece * Correspondence: [email protected] Received: 4 May 2020; Accepted: 19 May 2020; Published:1 June 2020 Abstract: The present article studies the connection of scientific reasoning with fuzzy logic. Induction and deduction are the two main types of human reasoning. Although deduction is the basis of the scientific method, almost all the scientific progress (with pure mathematics being probably the unique exception) has its roots to inductive reasoning. Fuzzy logic gives to the disdainful by the classical/bivalent logic induction its proper place and importance as a fundamental component of the scientific reasoning. The error of induction is transferred to deductive reasoning through its premises. Consequently, although deduction is always a valid process, it is not an infallible method. Thus, there is a need of quantifying the degree of truth not only of the inductive, but also of the deductive arguments. In the former case, probability and statistics and of course fuzzy logic in cases of imprecision are the tools available for this purpose. In the latter case, the Bayesian probabilities play a dominant role. As many specialists argue nowadays, the whole science could be viewed as a Bayesian process. A timely example, concerning the validity of the viruses’ tests, is presented, illustrating the importance of the Bayesian processes for scientific reasoning. -
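The abstract's point about test validity rests on Bayes' theorem: the probability of infection given a positive result depends on the prevalence as well as on the test's accuracy. The sketch below illustrates this with hypothetical numbers (1% prevalence, 95% sensitivity, 95% specificity); these values and the function name `posterior_positive` are assumptions for illustration and are not taken from the article.

```python
def posterior_positive(prevalence, sensitivity, specificity):
    """P(infected | positive test) by Bayes' theorem."""
    true_pos = sensitivity * prevalence                # P(+ and infected)
    false_pos = (1 - specificity) * (1 - prevalence)   # P(+ and healthy)
    return true_pos / (true_pos + false_pos)


# Hypothetical inputs, chosen only to show the effect of a low prior.
p = posterior_positive(prevalence=0.01, sensitivity=0.95, specificity=0.95)
print(f"{p:.3f}")  # 0.161
```

Under these assumed figures, a positive result from a seemingly accurate test still leaves only about a 16% probability of infection, because true positives are outnumbered by false positives from the much larger healthy group.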

Evaluating Empirical Research

EVALUATING EMPIRICAL RESEARCH (Antioch University Virtual Writing Center) It is important to maintain an objective and respectful tone as you evaluate others’ empirical studies. Keep in mind that study limitations are often a result of the epistemological limitations of real life research situations rather than the laziness or ignorance of the researchers. It’s normal and expected for empirical studies to have limitations. Having said that, it’s part of your job as a critical reader and a smart researcher to provide a fair assessment of research done on your topic. B.A. Maher’s guidelines (as cited in Cone and Foster, 2008, pp. 104-106) are useful in thinking of others’ studies, as well as for writing up your own study. Here are the recommended questions to consider as you read each section of an article. As you think of other questions that are particularly important to your topic, add them to the list. Introduction: Does the introduction provide a strong rationale for why the study is needed? Are research questions and hypotheses clearly articulated? (Note that research questions are often presented implicitly within a description of the purpose of the study.) Method: Is the method described so that replication is possible without further information? Participants: Are subject recruitment and selection methods described? Were participants randomly selected? Are there any probable biases in sampling? Is the sample appropriate in terms of the population to which the researchers wished to generalize? Are characteristics of the sample described adequately? If two or more groups are being compared, are they shown to be comparable on potentially confounding variables (e.g. -

Argument, Structure, and Credibility in Public Health Writing Donald Halstead Instructor and Director of Writing Programs Harvard TH Chan School of Public Health

Argument, Structure, and Credibility in Public Health Writing Donald Halstead Instructor and Director of Writing Programs Harvard TH Chan School of Public Health Some of the most important questions we face in public health include what policies we should follow, which programs and research we should fund, how and where we should intervene, and what our priorities should be in the face of overwhelming needs and scarce resources. These questions, like many others, are best decided on the basis of arguments, a word that has its roots in the Latin arguere, to make clear. Yet arguments themselves vary greatly in terms of their strength, accuracy, and validity. Furthermore, public health experts often disagree on matters of research, policy and practice, citing conflicting evidence and arriving at conflicting conclusions. As a result, critical readers, such as researchers, policymakers, journal editors and reviewers, approach arguments with considerable skepticism. After all, they are not going to change their programs, priorities, practices, research agendas or budgets without very solid evidence that it is necessary, feasible, and beneficial. This raises an important challenge for public health writers: How can you best make your case, in the face of so much conflicting evidence? To illustrate, let’s assume that you’ve been researching mother-to-child transmission (MTCT) of HIV in a sub-Saharan African country and have concluded that (claim) the country’s maternal programs for HIV counseling and infant nutrition should be integrated because (reasons) this would be more efficient in decreasing MTCT, improving child nutrition, and using scant resources efficiently. The evidence to back up your claim might consist of original research you have conducted that included program assessments and interviews with health workers in the field, your assessment of the other relevant research, the experiences of programs in other countries, and new WHO guidelines. -

Internationalizing the University Curricula Through Communication

DOCUMENT RESUME ED 428 409 CS 510 027. AUTHOR: Oseguera, A. Anthony Lopez. TITLE: Internationalizing the University Curricula through Communication: A Comparative Analysis among Nation States as Matrix for the Promulgation of Internationalism, through the Theoretical Influence of Communication Rhetors and International Educators, Viewed within the Arena of Political-Economy. PUB DATE: 1998-12-00. NOTE: 55p.; Paper presented at the Annual Meeting of the Speech Communication Association of Puerto Rico (18th, San Juan, Puerto Rico, December 4-5, 1998). PUB TYPE: Reports Research (143); Speeches/Meeting Papers (150). EDRS PRICE: MF01/PC03 Plus Postage. DESCRIPTORS: *College Curriculum; *Communication (Thought Transfer); Comparative Analysis; *Educational Change; Educational Research; Foreign Countries; Global Approach; Higher Education; *International Education. IDENTIFIERS: *Internationalism. ABSTRACT: This paper surveys the current situation of internationalism among the various nation states by a comparative analysis, as matrix, to promulgate the internationalizing process, as a worthwhile goal, within and without the college and university curricula; the theoretical influence and contributions of scholars in communication, international education, and political-economy, moreover, become allies toward this endeavor. The paper calls for the promulgation of a new and more effective educational paradigm; in this respect, helping the movement toward the creation of new and better schools for the next millennium. The paper profiles "poorer nations" and "richer nations" and then views the United States, with its enormous wealth, leading technology, vast educational infrastructure, and its respect for democratic principles, as an agent with agencies that can effect positive consequences to ameliorating the status quo. The paper presents two hypotheses: the malaise of the current educational paradigm is real, and the "abertura" (opening) toward a better paradigmatic, educational pathway is advisable and feasible. -

Psychology 205, Revelle, Fall 2014 Research Methods in Psychology Mid-Term

Psychology 205, Revelle, Fall 2014 Research Methods in Psychology Mid-Term Name: ________________________________ 1. (2 points) What is the primary advantage of using the median instead of the mean as a measure of central tendency? It is less affected by outliers. 2. (2 points) Why is counterbalancing important in a within-subjects experiment? Ensuring that conditions are independent of order and of each other. This allows us to determine effect of each variable independently of the other variables. If conditions are related to order or to each other, we are unable to determine which variable is having an effect. Short answer: order effects. 3. (6 points) Define reliability and compare it to validity. Give an example of when a measure could be valid but not reliable. 2 points: Reliability is the consistency or dependability of a measurement technique. [“Getting the same result” was not accepted; it was too vague in that it did not specify the conditions (e.g., the same phenomenon) in which the same result was achieved.] 2 points: Validity is the extent to which a measurement procedure actually measures what it is intended to measure. 2 points: Example (from class) is a weight scale that gives a different result every time the same person stands on it repeatedly. Another example: a scale that actually measures hunger but has poor test-retest reliability. [Other examples were accepted.] 4. (4 points) A consumer research company wants to compare the “coverage” of two competing cell phone networks throughout Illinois. To do so fairly, they have decided that they will only compare survey data taken from customers who are all using the same cell phone model - one that is functional on both networks and has been newly released in the last 3 months. -
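The answer to question 1 (the median is less affected by outliers than the mean) is easy to see numerically. The sketch below uses Python's standard `statistics` module; the scores are made-up values chosen for illustration and do not come from the exam.

```python
from statistics import mean, median

# Hypothetical scores, then the same scores with one extreme value added.
scores = [4, 5, 5, 6, 7]
with_outlier = scores + [95]

print(mean(scores), median(scores))              # mean 5.4, median 5
print(mean(with_outlier), median(with_outlier))  # mean jumps above 20, median 5.5
```

A single extreme observation roughly quadruples the mean here, while the median moves only from 5 to 5.5.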

Research Methods: Empirical Research

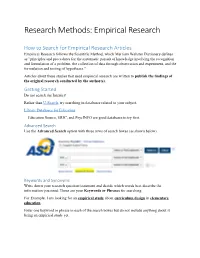

Research Methods: Empirical Research. How to Search for Empirical Research Articles. Empirical Research follows the Scientific Method, which Merriam-Webster Dictionary defines as "principles and procedures for the systematic pursuit of knowledge involving the recognition and formulation of a problem, the collection of data through observation and experiment, and the formulation and testing of hypotheses." Articles about studies that used empirical research are written to publish the findings of the original research conducted by the author(s). Getting Started: Do not search the Internet! Rather than U-Search, try searching in databases related to your subject. Library Databases for Education: Education Source, ERIC, and PsycINFO are good databases to try first. Advanced Search: Use the Advanced Search option with three rows of search boxes (as shown below). Keywords and Synonyms: Write down your research question/statement and decide which words best describe the information you need. These are your Keywords or Phrases for searching. For Example: I am looking for an empirical study about curriculum design in elementary education. Enter one keyword or phrase in each of the search boxes but do not include anything about it being an empirical study yet. To retrieve a more complete list of results on your subject, include synonyms and place the word "or" between each keyword/phrase and its synonyms. For Example: elementary education or primary school or elementary school or third grade. In the last search box, you may need to include words that focus the search towards empirical research: study or studies, empirical research, qualitative, quantitative, methodology, Nominal (Ordinal, Interval, Ratio) or other terms relevant to empirical research. -

The Problem of Induction

The Problem of Induction Gilbert Harman Department of Philosophy, Princeton University Sanjeev R. Kulkarni Department of Electrical Engineering, Princeton University July 19, 2005 The Problem The problem of induction is sometimes motivated via a comparison between rules of induction and rules of deduction. Valid deductive rules are necessarily truth preserving, while inductive rules are not. So, for example, one valid deductive rule might be this: (D) From premises of the form “All F are G” and “a is F,” the corresponding conclusion of the form “a is G” follows. The rule (D) is illustrated in the following depressing argument: (DA) All people are mortal. I am a person. So, I am mortal. The rule here is “valid” in the sense that there is no possible way in which premises satisfying the rule can be true without the corresponding conclusion also being true. A possible inductive rule might be this: (I) From premises of the form “Many many Fs are known to be G,” “There are no known cases of Fs that are not G,” and “a is F,” the corresponding conclusion can be inferred of the form “a is G.” The rule (I) might be illustrated in the following “inductive argument.” (IA) Many many people are known to have been mortal. There are no known cases of people who are not mortal. I am a person. So, I am mortal. The rule (I) is not valid in the way that the deductive rule (D) is valid. The “premises” of the inductive inference (IA) could be true even though its “conclusion” is not true. -

A Bayesian Analysis of Some Forms of Inductive Reasoning Evan Heit

A Bayesian Analysis of Some Forms of Inductive Reasoning. Evan Heit, University of Warwick. In Rational Models of Cognition, M. Oaksford & N. Chater (Eds.), Oxford University Press, 248-274, 1998. Please address correspondence to: Evan Heit, Department of Psychology, University of Warwick, Coventry CV4 7AL, United Kingdom. Phone: (024) 7652 3183. Email: [email protected] One of our most important cognitive goals is prediction (Anderson, 1990, 1991; Billman & Heit, 1988; Heit, 1992; Ross & Murphy, 1996), and category-level information enables a rich set of predictions. For example, you might not be able to predict much about Peter until you are told that Peter is a goldfish, in which case you could predict that he will swim and he will eat fish food. Prediction is a basic element of a wide range of everyday tasks from problem solving to social interaction to motor control. This chapter, however, will focus on a narrower range of prediction phenomena, concerning how people evaluate inductive “syllogisms” or arguments such as the following example:

    Goldfish thrive in sunlight
    ---------------------------
    Tunas thrive in sunlight.

(The information above the line is taken as a premise which is assumed to be true, then the task is to evaluate the likelihood of the conclusion, below the line.) Despite the apparent simplicity of this task, there are a variety of interesting phenomena that are associated with inductive arguments. Taken together, these phenomena reveal a great deal about what people know about categories and their properties, and about how people use their general knowledge of the world for reasoning. -

Structured Statistical Models of Inductive Reasoning

Structured Statistical Models of Inductive Reasoning. Charles Kemp (Carnegie Mellon University) and Joshua B. Tenenbaum (Massachusetts Institute of Technology). Psychological Review, 2009, Vol. 116, No. 1, 20–58. © 2009 American Psychological Association. 0033-295X/09/$12.00 DOI: 10.1037/a0014282. CORRECTED FEBRUARY 25, 2009; SEE LAST PAGE. Everyday inductive inferences are often guided by rich background knowledge. Formal models of induction should aim to incorporate this knowledge and should explain how different kinds of knowledge lead to the distinctive patterns of reasoning found in different inductive contexts. This article presents a Bayesian framework that attempts to meet both goals and describes 4 applications of the framework: a taxonomic model, a spatial model, a threshold model, and a causal model. Each model makes probabilistic inferences about the extensions of novel properties, but the priors for the 4 models are defined over different kinds of structures that capture different relationships between the categories in a domain. The framework therefore shows how statistical inference can operate over structured background knowledge, and the authors argue that this interaction between structure and statistics is critical for explaining the power and flexibility of human reasoning. Keywords: inductive reasoning, property induction, knowledge representation, Bayesian inference. Humans are adept at making inferences that take them beyond the limits of their direct experience. This article describes a formal approach to inductive inference -

A Philosophical Treatise of Universal Induction

Entropy 2011, 13, 1076-1136; doi:10.3390/e13061076 OPEN ACCESS entropy ISSN 1099-4300 www.mdpi.com/journal/entropy Article A Philosophical Treatise of Universal Induction Samuel Rathmanner and Marcus Hutter * Research School of Computer Science, Australian National University, Corner of North and Daley Road, Canberra ACT 0200, Australia * Author to whom correspondence should be addressed; E-Mail: [email protected]. Received: 20 April 2011; in revised form: 24 May 2011 / Accepted: 27 May 2011 / Published: 3 June 2011 Abstract: Understanding inductive reasoning is a problem that has engaged mankind for thousands of years. This problem is relevant to a wide range of fields and is integral to the philosophy of science. It has been tackled by many great minds ranging from philosophers to scientists to mathematicians, and more recently computer scientists. In this article we argue the case for Solomonoff Induction, a formal inductive framework which combines algorithmic information theory with the Bayesian framework. Although it achieves excellent theoretical results and is based on solid philosophical foundations, the requisite technical knowledge necessary for understanding this framework has caused it to remain largely unknown and unappreciated in the wider scientific community. The main contribution of this article is to convey Solomonoff induction and its related concepts in a generally accessible form with the aim of bridging this current technical gap. In the process we examine the major historical contributions that have led to the formulation of Solomonoff Induction as well as criticisms of Solomonoff and induction in general. In particular we examine how Solomonoff induction addresses many issues that have plagued other inductive systems, such as the black ravens paradox and the confirmation problem, and compare this approach with other recent approaches.